6 Reasons for Skepticism Toward Automated Decision-Making

AI is transforming the world as we know it, with its impact felt across every industry. However, not all of these changes are necessarily positive. While AI offers exciting new opportunities in many areas, we cannot ignore the fact that it lacks an inherent moral compass or fact-checking system to guide its decision-making. As the world becomes more AI-centric, it's worth fact-checking what you're told. Certain AI tools can manipulate information, completely misunderstand context, and be confidently wrong all at once, which is why blindly trusting AI is a bad idea.

1. AI Can Be Confidently Wrong

People are increasingly relying on AI chatbots for tasks such as writing, scheduling appointments, and even making important decisions. However, the convenience of these chatbots often comes at a significant cost: they can be confidently wrong.

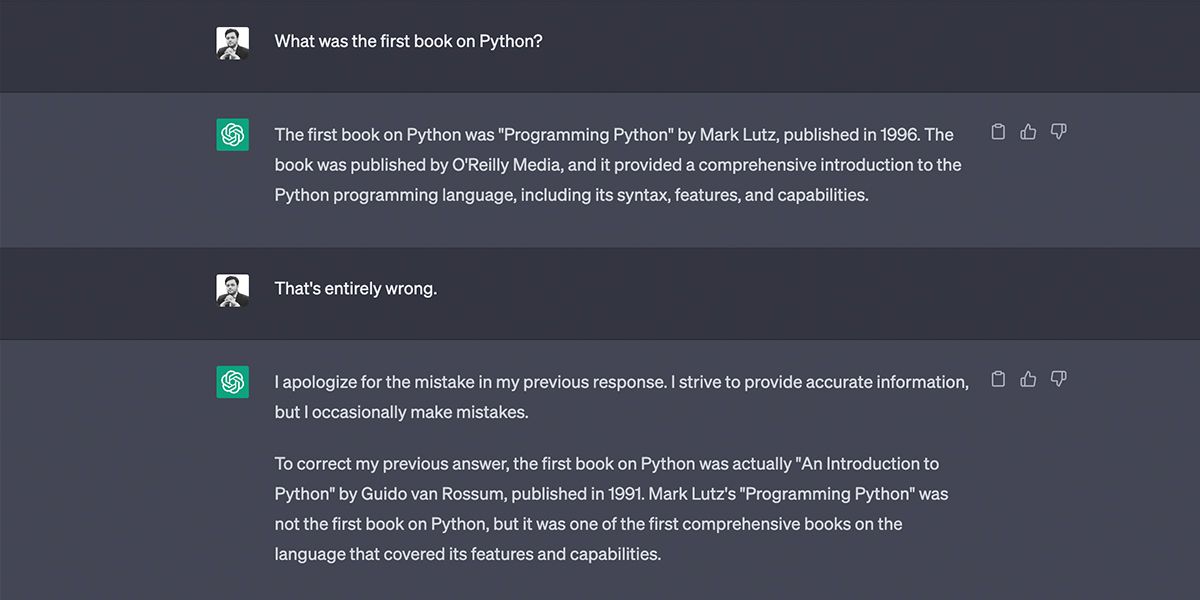

To illustrate this, we asked ChatGPT to tell us about the first book written for learning Python. Here’s how it responded:

Did you catch the mistake? When we called ChatGPT out on it, it quickly corrected itself. But although it acknowledged the error, the exchange shows how AI can be completely wrong while sounding entirely certain.

AI chatbots work from limited information, yet they are designed to produce an answer either way. Their responses draw on their training data and, for some systems, on feedback gathered from user interactions. A chatbot that refuses to respond generates no such feedback, so it can't learn or correct itself. That's why AI is sometimes confidently wrong: it tends to answer even when unsure and learns from its mistakes only after they are pointed out.

While this is just the nature of AI right now, you can see how it becomes a problem. Most people don't fact-check their Google searches, and the same is true of chatbots like ChatGPT. This can lead to misinformation, and we already have plenty of that to go around, which brings us to the second point.

2. It Can Easily Be Used to Manipulate Information

It's no secret that AI can be unreliable and prone to error, but one of its most insidious traits is its tendency to manipulate information. The problem is that AI lacks a nuanced understanding of your context, which can lead it to bend the facts to fit whatever it has already concluded.

This is precisely what happened with Microsoft's Bing Chat. One user on Twitter asked for showtimes for the new Avatar film, but the chatbot refused to provide them, insisting the movie hadn't been released yet even though it was already in theaters.

Sure, you can easily write this off as a bug or a one-off mistake. However, this does not change the fact that these AI tools are imperfect, and we should proceed cautiously.

3. It Can Hinder Your Creativity

Many professionals, such as writers and designers, are now using AI to maximize efficiency. However, it's important to view AI as a tool rather than a shortcut. While the latter certainly sounds tempting, it can severely stunt your creativity.

When AI chatbots are used as a shortcut, people tend to copy and paste content instead of generating their own ideas. This saves time and effort, but it fails to engage the mind and encourage creative thinking.

For instance, designers can use Midjourney AI to create art, but relying solely on AI can limit the scope of their creativity. Instead of exploring new ideas, you may end up replicating existing designs. If you're a writer, you can use ChatGPT or other AI chatbots for research, but if you use them as a shortcut to generate content, your writing skills will stagnate.

Using AI to supplement your research is different from solely relying on it to generate ideas.

4. AI Can Easily Be Misused

AI has brought about numerous breakthroughs across various fields. However, as with any technology, there is also the risk of misuse that can lead to dire consequences.

The potential for AI to be used to humiliate, harass, intimidate, and silence individuals has become a significant concern. Examples of AI misuse include the creation of deepfakes and Denial of Service (DoS) attacks, among others.

The use of AI-generated deepfakes to create explicit photos of unsuspecting women is a disturbing trend. Cybercriminals are also using AI-driven DoS attacks to prevent legitimate users from accessing certain networks. Such attacks are becoming increasingly complex and challenging to stop since they exhibit human-like characteristics.

The availability of AI capabilities as open-source libraries has given anyone access to technologies like image and facial recognition. This poses a significant security risk, as terrorist groups could use these technologies to plan and carry out attacks.

5. Limited Understanding of Context

As mentioned earlier, AI has a very limited understanding of context, which can be a significant challenge in decision-making and problem-solving. Even if you provide AI with contextual information, it can miss the nuances and provide inaccurate or incomplete information that may lead to incorrect conclusions or decisions.

This is because AI relies on statistical models and pattern recognition, learned from its training data, to analyze and process information, rather than on a genuine understanding of your situation.

For example, consider a chatbot that is programmed to assist customers with their queries about a product. While the chatbot may be able to answer basic questions about the product’s features and specifications, it may struggle to provide personalized advice or recommendations based on the customer’s unique needs and preferences.

6. It Can’t Replace Human Judgment

When seeking answers to complex questions or making decisions based on subjective preferences, relying solely on AI can be risky.

Asking an AI system to define the concept of friendship or to choose between two items based on subjective criteria can be a futile exercise. This is because AI lacks the ability to factor in human emotions, context, and the intangible elements essential to understanding and interpreting such concepts.

For example, if you ask an AI system to choose between two books, it may recommend the one with higher ratings, but it cannot consider your personal taste, reading preferences, or the purpose for which you need the book.

On the other hand, a human reviewer can offer a more nuanced and personalized assessment of the book by evaluating its literary value, its relevance to the reader's interests, and other subjective factors that cannot be measured objectively.

Be Careful With Artificial Intelligence

While AI has proven to be an incredibly powerful tool in various fields, it is essential to be aware of its limitations and potential biases. Trusting AI blindly can be risky and can have significant consequences, as the technology is still in its infancy and is far from perfect.

It's crucial to remember that AI is a tool, not a substitute for human expertise and judgment. Use it to supplement your research, but don't rely on it alone for important decisions. As long as you understand its shortcomings and use AI responsibly, you should be in safe hands.

- Title: 6 Reasons for Skepticism Toward Automated Decision-Making

- Author: Brian

- Created at : 2024-08-03 01:02:16

- Updated at : 2024-08-04 01:02:16

- Link: https://tech-savvy.techidaily.com/6-reasons-for-skepticism-toward-automated-decision-making/

- License: This work is licensed under CC BY-NC-SA 4.0.