AI Jargon Uncovered: Essential Terms for Tech Professionals

Exploring artificial intelligence (AI) can feel like entering a maze of confusing technical terms and nonsensical jargon. It’s no wonder that even those familiar with AI can find themselves scratching their heads in confusion.

With that in mind, we’ve created a comprehensive AI glossary to equip you with the necessary knowledge. From artificial intelligence itself to machine learning and data mining, we’ll decode all the essential AI terms in plain and simple language.

Whether you’re a curious beginner or an AI enthusiast, understanding the following AI concepts will bring you closer to unlocking the power of AI.

1. Algorithm

An algorithm is a set of instructions or rules machines follow to solve a problem or accomplish a task.

2. Artificial Intelligence

AI is the ability of machines to mimic human intelligence and perform tasks commonly associated with intelligent beings.

3. Artificial General Intelligence (AGI)

AGI, also called strong AI, is a type of AI that possesses advanced intelligence capabilities similar to those of human beings. While artificial general intelligence was once primarily a theoretical concept and a rich playground for research, many AI developers now believe humanity will reach AGI sometime in the next decade.

4. Backpropagation

Backpropagation is an algorithm used to train neural networks and improve their accuracy. It works by calculating the error in the output, propagating it backward through the network layer by layer, and using the resulting gradients to adjust the weights and biases of the connections so that the error shrinks.
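
To make the idea concrete, here is a minimal sketch of backpropagation on a toy network with one hidden unit and one output. The network shape, the starting weights, the learning rate, and the name train_step are all assumptions chosen for illustration; real frameworks compute these gradients automatically.

```python
import math

def train_step(w1, w2, x, target, lr=0.1):
    # Forward pass: input -> hidden unit (tanh) -> linear output
    h = math.tanh(w1 * x)
    y = w2 * h
    loss = 0.5 * (y - target) ** 2

    # Backward pass: chain rule, starting at the output and moving back
    dloss_dy = y - target
    dloss_dw2 = dloss_dy * h
    dloss_dh = dloss_dy * w2
    dloss_dw1 = dloss_dh * (1 - h ** 2) * x  # derivative of tanh is 1 - tanh^2

    # Gradient descent: nudge each weight against its gradient
    return w1 - lr * dloss_dw1, w2 - lr * dloss_dw2, loss

w1, w2 = 0.5, 0.5  # hypothetical starting weights
losses = []
for _ in range(200):
    w1, w2, loss = train_step(w1, w2, x=1.0, target=0.8)
    losses.append(loss)

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
```

Each pass computes the error, sends it back through both weights, and leaves the network slightly closer to the target.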

5. Bias

AI bias refers to the tendency of a model to make certain predictions more often than others. Bias can stem from a model’s training data or from its inherent assumptions.

6. Big Data

Big data is a term that describes datasets that are too large or too complex to process using traditional methods. It involves analyzing vast sets of information to extract valuable insights and patterns to improve decision-making.

7. Chatbot

A chatbot is a program that can simulate conversations with human users through text or voice commands. Chatbots can understand and generate human-like responses, making them a powerful tool for customer service applications.

8. Cognitive Computing

Cognitive computing is an AI field focusing on developing systems that imitate human cognitive abilities, such as perception, learning, reasoning, and problem-solving.

9. Computational Learning Theory

Computational learning theory is a branch of artificial intelligence that studies algorithms and mathematical models of machine learning. It focuses on the theoretical foundations of learning to understand how machines can acquire knowledge, make predictions, and improve their performance.

10. Computer Vision

Computer vision refers to the ability of machines to extract visual information from digital images and videos. Computer vision algorithms are widely used in applications like object detection, face recognition, medical imaging, and autonomous vehicles.

11. Data Mining

Data mining is the process of acquiring valuable knowledge from large datasets. It uses statistical analysis and machine learning techniques to identify patterns, relationships, and trends in data to improve decision-making.

12. Data Science

Data science involves extracting insights from data using scientific methods, algorithms, and systems. It’s more comprehensive than data mining and encompasses a wide range of activities, including data collection, data visualization, and predictive modeling to solve complex problems.

13. Deep Learning

Deep learning is a branch of machine learning that uses artificial neural networks with multiple layers of interconnected nodes to learn from vast amounts of data. It enables machines to perform complex tasks, such as natural language processing, image recognition, and speech recognition.

14. Generative AI

Generative AI describes artificial intelligence systems and algorithms that can create text, audio, video, and simulations. These AI systems learn patterns and examples from existing data and use that knowledge to create new and original outputs.

15. Hallucination

AI hallucination refers to instances where a model produces factually incorrect, irrelevant, or nonsensical results. This can happen for several reasons, including a lack of context, limitations in the training data, or the model’s architecture.

16. Hyperparameters

Hyperparameters are settings that define how an algorithm or a machine learning model learns and behaves. Examples include the learning rate, regularization strength, and the number of hidden layers in the network. Unlike a model’s weights, hyperparameters are set before training rather than learned from the data. You can tinker with them to fine-tune the model’s performance according to your needs.

17. Large Language Model (LLM)

An LLM is a machine learning model trained on vast amounts of text to predict the next token in a given context, typically through self-supervised learning, which lets it produce meaningful, contextual responses to user inputs. The word “large” refers to the number of parameters in the model. For example, GPT-3 has 175 billion parameters, which it uses to carry out a wide range of NLP tasks.
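
Next-token prediction can be illustrated with a toy that is far simpler than any LLM: a bigram counter that picks the word most often seen after the current one. The tiny corpus and the name predict_next are assumptions made up for this sketch; an actual LLM replaces the counting with a neural network holding billions of parameters.

```python
from collections import Counter, defaultdict

# Hypothetical miniature training text
corpus = "the cat sat on the mat the cat ran".split()

# Count which token follows each token in the training text
counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    counts[prev][nxt] += 1

def predict_next(token):
    # Return the most frequent follower, or None for an unseen token
    followers = counts.get(token)
    return followers.most_common(1)[0][0] if followers else None

print(predict_next("the"))  # "cat" follows "the" twice, "mat" once
```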

18. Machine Learning

Machine learning is a way for machines to learn and make predictions without being explicitly programmed. It’s like feeding a computer with data and empowering it to make decisions or predictions by identifying patterns within the data.

19. Neural Network

A neural network is a computational model inspired by the human brain. It consists of interconnected nodes, or neurons, organized in layers. Each neuron receives input from other neurons in the network, allowing it to learn patterns and make decisions. Neural networks are a key component in machine learning models that enable them to excel in a wide array of tasks.
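
As a rough sketch of how layers of neurons pass information forward, the snippet below runs two inputs through a hidden layer of two neurons and a single output neuron. The weights, biases, and the helper name layer are arbitrary values picked for illustration, not a trained model.

```python
import math

def sigmoid(z):
    # Squashes any number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    # Each neuron takes a weighted sum of its inputs, adds a bias,
    # and applies the activation function
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Two inputs -> two hidden neurons -> one output neuron
hidden = layer([1.0, 0.0], weights=[[0.5, -0.5], [0.3, 0.8]], biases=[0.0, 0.1])
output = layer(hidden, weights=[[1.0, -1.0]], biases=[0.0])

print(hidden, output)
```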

20. Natural Language Generation (NLG)

Natural language generation deals with the creation of human-readable text from structured data. NLG finds applications in content creation, chatbots, and voice assistants.

21. Natural Language Processing (NLP)

Natural language processing is the ability of machines to interpret, understand, and respond to human-readable text or speech. It’s used in various applications, including sentiment analysis, text classification, and question answering.

22. OpenAI

OpenAI is an artificial intelligence research laboratory, founded in 2015 and based in San Francisco, USA. The company develops and deploys AI tools that can appear to be as smart as humans. OpenAI’s best-known product, ChatGPT, was released in November 2022 and was widely heralded at launch as one of the most advanced chatbots for its ability to provide answers on a wide range of topics.

23. Pattern Recognition

Pattern recognition is the ability of an AI system to identify and interpret patterns in data. Pattern recognition algorithms find applications in facial recognition, fraud detection, and speech recognition.

24. Recurrent Neural Network (RNN)

A recurrent neural network is a type of neural network that processes sequential data using feedback connections. RNNs can retain a memory of previous inputs and are suitable for tasks like language modeling and machine translation.
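
The feedback connection can be sketched with a single recurrent unit whose hidden state is fed back in at every step. The weights w_x and w_h and the name run_rnn are assumptions for illustration only; practical RNNs use weight matrices learned from data.

```python
import math

def run_rnn(sequence, w_x=0.5, w_h=0.9):
    h = 0.0  # hidden state, the network's "memory"
    states = []
    for x in sequence:
        # The new state mixes the current input with the previous state
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

with_signal = run_rnn([1.0, 0.0, 0.0])
without_signal = run_rnn([0.0, 0.0, 0.0])

# The final states differ even though the last two inputs are identical,
# because the first input is still carried in the hidden state
print(with_signal[-1], without_signal[-1])
```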

25. Reinforcement Learning

Reinforcement learning is a machine learning technique where an AI agent learns to make decisions through trial-and-error interactions with an environment. The agent receives rewards or penalties based on its actions, guiding it to enhance its performance over time.
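
One of the simplest ways to see trial-and-error learning is a two-action bandit with an epsilon-greedy agent, sketched below. The reward probabilities, the exploration rate, the seed, and the name run_bandit are all hypothetical choices for this example; full reinforcement learning problems also involve states and delayed rewards.

```python
import random

def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)  # estimated reward for each action
    pulls = [0] * len(reward_probs)     # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action
            action = rng.randrange(len(reward_probs))
        else:
            # Exploit: pick the action with the best estimate so far
            action = max(range(len(values)), key=values.__getitem__)
        # The environment pays a reward of 1 with the action's probability
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        pulls[action] += 1
        # Update the running average of rewards for this action
        values[action] += (reward - values[action]) / pulls[action]
    return values, pulls

values, pulls = run_bandit([0.2, 0.8])
print(values, pulls)
```

Over time the agent's estimates favor the action that pays off more often, so it chooses that action more and more.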

26. Supervised Learning

Supervised learning is a machine learning method where the model is trained on labeled data, in which each example is paired with the desired output. The model generalizes from the labeled examples to make predictions on new data.
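
A nearest-neighbor classifier is one small example of learning from labeled data: it labels a new point with the label of the closest training example. The data points, the labels, and the name predict below are invented for illustration.

```python
def predict(train, point):
    # train is a list of ((x, y), label) pairs; pick the closest example
    nearest = min(
        train,
        key=lambda ex: (ex[0][0] - point[0]) ** 2 + (ex[0][1] - point[1]) ** 2,
    )
    return nearest[1]

# Hypothetical labeled training data: coordinates paired with the desired output
labeled = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((8.0, 9.0), "large"),
    ((9.0, 8.5), "large"),
]

print(predict(labeled, (2.0, 1.5)))  # closest example is labeled "small"
print(predict(labeled, (8.5, 8.0)))  # closest example is labeled "large"
```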

27. Tokenization

Tokenization is the process of splitting a text document into smaller units called tokens. These tokens can represent words, numbers, phrases, symbols, or any elements in text that a program can work with. The purpose of tokenization is to turn unstructured text into units a model can process, rather than handling the entire text as a single string, which is computationally inefficient and difficult to model.
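
As a minimal sketch, the snippet below splits a sentence into word, number, and punctuation tokens with a regular expression. The pattern and the name tokenize are one simple choice among many; production tokenizers for language models usually split text into subword units instead.

```python
import re

def tokenize(text):
    # Runs of letters/digits become one token; each punctuation mark is its own token
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("AI models read text, not 42 strings!")
print(tokens)
# ['AI', 'models', 'read', 'text', ',', 'not', '42', 'strings', '!']
```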

28. Turing Test

Introduced by Alan Turing in 1950, this test evaluates a machine’s ability to exhibit intelligence indistinguishable from that of a human. The Turing test involves a human judge interacting with a human and a machine without knowing which is which. If the judge fails to distinguish the machine from the human, the machine is considered to have passed the test.

29. Unsupervised Learning

Unsupervised learning is a machine learning method where the model makes inferences from unlabeled datasets. It discovers patterns in the data, such as clusters of similar items, without being told the correct answers.
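
Clustering is a common example of finding structure without labels. The sketch below is a simplified one-dimensional k-means with two clusters; the data, the min/max starting centroids, and the name kmeans_1d are assumptions chosen to keep the example short.

```python
def kmeans_1d(points, iterations=10):
    # Start with one centroid at each extreme of the data
    centroids = [min(points), max(points)]
    clusters = [[], []]
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid
        clusters = [[], []]
        for p in points:
            idx = 0 if abs(p - centroids[0]) <= abs(p - centroids[1]) else 1
            clusters[idx].append(p)
        # Update step: move each centroid to the mean of its cluster
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

centroids, clusters = kmeans_1d([1.0, 1.2, 0.8, 9.0, 9.5, 10.0])
print(centroids, clusters)
```

No labels are supplied, yet the algorithm separates the low values from the high ones on its own.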

Embracing the Language of Artificial Intelligence

AI is a rapidly evolving field changing how we interact with technology. However, with so many new buzzwords constantly emerging, it can be hard to keep up with the latest developments in the field.

While some terms may seem abstract without context, their significance becomes clear when combined with a basic understanding of machine learning. Understanding these terms and concepts can lay a powerful foundation that will empower you to make informed decisions within the realm of artificial intelligence.

SCROLL TO CONTINUE WITH CONTENT

With that in mind, we’ve created a comprehensive AI glossary to equip you with the necessary knowledge. From artificial intelligence itself to machine learning and data mining, we’ll decode all the essential AI terms in plain and simple language.

Whether you’re a curious beginner or an AI enthusiast, understanding the following AI concepts will bring you closer to unlocking the power of AI.

FREE DOWNLOAD: This cheat sheet is available as a downloadable PDF from our distribution partner, TradePub. You will have to complete a short form to access it for the first time. Download the AI Glossary Cheat Sheet .

1. Algorithm

An algorithm is a set of instructions or rules machines follow to solve a problem or accomplish a task.

2. Artificial Intelligence

AI is the ability of machines to mimic human intelligence and perform tasks commonly associated with intelligent beings.

3. Artificial General Intelligence (AGI)

AGI, also called strong AI, is a type of AI that possesses advanced intelligence capabilities similar to human beings. While artificial general intelligence was once primarily a theoretical concept and a rich playground for research, many AI developers now believe humanity will reach AGI sometime in the next decade.,

4. Backpropagation

Backpropagation is an algorithm neural networks use to improve their accuracy and performance. It works by calculating error in the output, propagating it back through the network, and adjusting the weights and biases of connections to get better results.

5. Bias

AI bias refers to the tendency of a model to make certain predictions more often than others. Bias can be caused due to the training data of a model or its inherent assumptions.

6. Big Data

Big data is a term that describes datasets that are too large or too complex to process using traditional methods. It involves analyzing vast sets of information to extract valuable insights and patterns to improve decision-making.

7. Chatbot

A chatbot is a program that can simulate conversations with human users through text or voice commands. Chatbots can understand and generate human-like responses, making them a powerful tool for customer service applications.

8. Cognitive Computing

Cognitive computing is an AI field focusing on developing systems that imitate human cognitive abilities, such as perception, learning, reasoning, and problem-solving.

9. Computational Learning Theory

A branch of artificial intelligence that studies algorithms and mathematical models of machine learning. It focuses on the theoretical foundations of learning to understand how machines can acquire knowledge, make predictions, and improve their performance.

10. Computer Vision

Computer vision refers to the ability of machines to extract visual information from digital images and videos. Computer vision algorithms are widely used in applications like object detection, face recognition, medical imaging, and autonomous vehicles.

11. Data Mining

Data mining is the process of acquiring valuable knowledge from large datasets. It uses statistical analysis and machine learning techniques to identify patterns, relationships, and trends in data to improve decision-making.

12. Data Science

Data science involves extracting insights from data using scientific methods, algorithms, and systems. It’s more comprehensive than data mining and encompasses a wide range of activities, including data collection, data visualization, and predictive modeling to solve complex problems.

13. Deep Learning

Deep learning is a branch of AI that uses artificial neural networks with multiple layers (interconnected nodes within the neural network) to learn from vast amounts of data. It enables machines to perform complex tasks, such as natural language processing , image, and speech recognition.

14. Generative AI

Generative AI describes artificial intelligence systems and algorithms that can create text, audio, video, and simulations. These AI systems learn patterns and examples from existing data and use that knowledge to create new and original outputs.

15. Hallucination

AI hallucination refers to the instances where a model produces factually incorrect, irrelevant, or nonsensical results. This can happen for several reasons, including lack of context, limitations in training data, or architecture.

16. Hyperparameters

Hyperparameters are settings that define how an algorithm or a machine learning model learns and behaves. Hyperparameters include learning rate, regularization strength, and the number of hidden layers in the network. You can tinker with these parameters to fine-tune the model’s performance according to your needs.

17. Large Language Model (LLM)

An LLM is a machine learning model trained on vast amounts of data and uses supervised learning to produce the next token in a given context to produce meaningful, contextual responses to user inputs. The word “large” indicates the use of extensive parameters by the language model. For example, GPT models use hundreds of billions of parameters to carry out a wide range of NLP tasks.

18. Machine Learning

Machine learning is a way for machines to learn and make predictions without being explicitly programmed. It’s like feeding a computer with data and empowering it to make decisions or predictions by identifying patterns within the data.

19. Neural Network

A neural network is a computational model inspired by the human brain. It consists of interconnected nodes, or neurons, organized in layers. Each neuron receives input from other neurons in the network, allowing it to learn patterns and make decisions. Neural networks are a key component in machine learning models that enable them to excel in a wide array of tasks.

20. Natural Language Generation (NLG)

Natural language generation deals with the creation of human-readable text from structured data. NLG finds applications in content creation, chatbots, and voice assistants.

21. Natural Language Processing (NLP)

Natural language processing is the ability of machines to interpret, understand, and respond to human-readable text or speech. It’s used in various applications, including sentiment analysis, text classification, and question answering.

22. OpenAI

OpenAI is an artificial intelligence research laboratory, founded in 2015 and based in San Francisco, USA. The company develops and deploys AI tools that can appear to be as smart as humans. OpenAI’s best-known product, ChatGPT, was released in November 2022 and is heralded as the most advanced chatbot for its ability to provide answers on a wide range of topics.

SwifDoo PDF Perpetual (1 PC) Free upgrade. No monthly fees ever.

SwifDoo PDF Perpetual (1 PC) Free upgrade. No monthly fees ever.

23. Pattern Recognition

Pattern recognition is the ability of an AI system to identify and interpret patterns in data. Pattern recognition algorithms find applications in facial recognition, fraud detection, and speech recognition.

24. Recurrent Neural Network (RNN)

A type of neural network that can process sequential data using feedback connections. RNNs can retain the memory of previous inputs and are suitable for tasks like NLP and machine translation.

25. Reinforcement Learning

Reinforcement learning is a machine learning technique where an AI agent learns to make decisions through interactions by trial and error. The agent receives rewards or punishments from an algorithm based on its actions, guiding it to enhance its performance over time.

26. Supervised Learning

A machine learning method where the model is trained using labeled data with the desired output. The model generalizes from the labeled data and makes accurate predictions on new data.

27. Tokenization

Tokenization is the process of splitting a text document into smaller units called tokens. These tokens can represent words, numbers, phrases, symbols, or any elements in text that a program can work with. The purpose of tokenization is to make the most sense out of unstructured data without processing the entire text as a single string, which is computationally inefficient and difficult to model.

28. Turing Test

Introduced by Alan Turing in 1950, this test evaluates a machine’s ability to exhibit intelligence indistinguishable from that of a human. The Turing test involves a human judge interacting with a human and a machine without knowing which is which. If the judge fails to distinguish the machine from the human, the machine is considered to have passed the test.

29. Unsupervised Learning

A machine learning method where the model makes inferences from unlabeled datasets. It discovers patterns in the data to make predictions on unseen data.

Embracing the Language of Artificial Intelligence

AI is a rapidly evolving field changing how we interact with technology. However, with so many new buzzwords constantly emerging, it can be hard to keep up with the latest developments in the field.

While some terms may seem abstract without context, their significance becomes clear when combined with a basic understanding of machine learning. Understanding these terms and concepts can lay a powerful foundation that will empower you to make informed decisions within the realm of artificial intelligence.

SCROLL TO CONTINUE WITH CONTENT

With that in mind, we’ve created a comprehensive AI glossary to equip you with the necessary knowledge. From artificial intelligence itself to machine learning and data mining, we’ll decode all the essential AI terms in plain and simple language.

Whether you’re a curious beginner or an AI enthusiast, understanding the following AI concepts will bring you closer to unlocking the power of AI.

FREE DOWNLOAD: This cheat sheet is available as a downloadable PDF from our distribution partner, TradePub. You will have to complete a short form to access it for the first time. Download the AI Glossary Cheat Sheet .

1. Algorithm

An algorithm is a set of instructions or rules machines follow to solve a problem or accomplish a task.

2. Artificial Intelligence

AI is the ability of machines to mimic human intelligence and perform tasks commonly associated with intelligent beings.

3. Artificial General Intelligence (AGI)

AGI, also called strong AI, is a type of AI that possesses advanced intelligence capabilities similar to human beings. While artificial general intelligence was once primarily a theoretical concept and a rich playground for research, many AI developers now believe humanity will reach AGI sometime in the next decade.,

4. Backpropagation

Backpropagation is an algorithm neural networks use to improve their accuracy and performance. It works by calculating error in the output, propagating it back through the network, and adjusting the weights and biases of connections to get better results.

5. Bias

AI bias refers to the tendency of a model to make certain predictions more often than others. Bias can be caused due to the training data of a model or its inherent assumptions.

6. Big Data

Big data is a term that describes datasets that are too large or too complex to process using traditional methods. It involves analyzing vast sets of information to extract valuable insights and patterns to improve decision-making.

7. Chatbot

A chatbot is a program that can simulate conversations with human users through text or voice commands. Chatbots can understand and generate human-like responses, making them a powerful tool for customer service applications.

8. Cognitive Computing

Cognitive computing is an AI field focusing on developing systems that imitate human cognitive abilities, such as perception, learning, reasoning, and problem-solving.

9. Computational Learning Theory

A branch of artificial intelligence that studies algorithms and mathematical models of machine learning. It focuses on the theoretical foundations of learning to understand how machines can acquire knowledge, make predictions, and improve their performance.

10. Computer Vision

Computer vision refers to the ability of machines to extract visual information from digital images and videos. Computer vision algorithms are widely used in applications like object detection, face recognition, medical imaging, and autonomous vehicles.

11. Data Mining

Data mining is the process of acquiring valuable knowledge from large datasets. It uses statistical analysis and machine learning techniques to identify patterns, relationships, and trends in data to improve decision-making.

12. Data Science

Data science involves extracting insights from data using scientific methods, algorithms, and systems. It’s more comprehensive than data mining and encompasses a wide range of activities, including data collection, data visualization, and predictive modeling to solve complex problems.

13. Deep Learning

Deep learning is a branch of AI that uses artificial neural networks with multiple layers (interconnected nodes within the neural network) to learn from vast amounts of data. It enables machines to perform complex tasks, such as natural language processing , image, and speech recognition.

14. Generative AI

Generative AI describes artificial intelligence systems and algorithms that can create text, audio, video, and simulations. These AI systems learn patterns and examples from existing data and use that knowledge to create new and original outputs.

15. Hallucination

AI hallucination refers to the instances where a model produces factually incorrect, irrelevant, or nonsensical results. This can happen for several reasons, including lack of context, limitations in training data, or architecture.

16. Hyperparameters

Hyperparameters are settings that define how an algorithm or a machine learning model learns and behaves. Hyperparameters include learning rate, regularization strength, and the number of hidden layers in the network. You can tinker with these parameters to fine-tune the model’s performance according to your needs.

17. Large Language Model (LLM)

An LLM is a machine learning model trained on vast amounts of data and uses supervised learning to produce the next token in a given context to produce meaningful, contextual responses to user inputs. The word “large” indicates the use of extensive parameters by the language model. For example, GPT models use hundreds of billions of parameters to carry out a wide range of NLP tasks.

18. Machine Learning

Machine learning is a way for machines to learn and make predictions without being explicitly programmed. It’s like feeding a computer with data and empowering it to make decisions or predictions by identifying patterns within the data.

19. Neural Network

A neural network is a computational model inspired by the human brain. It consists of interconnected nodes, or neurons, organized in layers. Each neuron receives input from other neurons in the network, allowing it to learn patterns and make decisions. Neural networks are a key component in machine learning models that enable them to excel in a wide array of tasks.

20. Natural Language Generation (NLG)

Natural language generation deals with the creation of human-readable text from structured data. NLG finds applications in content creation, chatbots, and voice assistants.

21. Natural Language Processing (NLP)

Natural language processing is the ability of machines to interpret, understand, and respond to human-readable text or speech. It’s used in various applications, including sentiment analysis, text classification, and question answering.

22. OpenAI

OpenAI is an artificial intelligence research laboratory, founded in 2015 and based in San Francisco, USA. The company develops and deploys AI tools that can appear to be as smart as humans. OpenAI’s best-known product, ChatGPT, was released in November 2022 and is heralded as the most advanced chatbot for its ability to provide answers on a wide range of topics.

23. Pattern Recognition

Pattern recognition is the ability of an AI system to identify and interpret patterns in data. Pattern recognition algorithms find applications in facial recognition, fraud detection, and speech recognition.

24. Recurrent Neural Network (RNN)

A type of neural network that can process sequential data using feedback connections. RNNs can retain the memory of previous inputs and are suitable for tasks like NLP and machine translation.

25. Reinforcement Learning

Reinforcement learning is a machine learning technique where an AI agent learns to make decisions through interactions by trial and error. The agent receives rewards or punishments from an algorithm based on its actions, guiding it to enhance its performance over time.

26. Supervised Learning

A machine learning method where the model is trained using labeled data with the desired output. The model generalizes from the labeled data and makes accurate predictions on new data.

27. Tokenization

Tokenization is the process of splitting a text document into smaller units called tokens. These tokens can represent words, numbers, phrases, symbols, or any elements in text that a program can work with. The purpose of tokenization is to make the most sense out of unstructured data without processing the entire text as a single string, which is computationally inefficient and difficult to model.

28. Turing Test

Introduced by Alan Turing in 1950, this test evaluates a machine’s ability to exhibit intelligence indistinguishable from that of a human. The Turing test involves a human judge interacting with a human and a machine without knowing which is which. If the judge fails to distinguish the machine from the human, the machine is considered to have passed the test.

29. Unsupervised Learning

A machine learning method where the model makes inferences from unlabeled datasets. It discovers patterns in the data to make predictions on unseen data.

Embracing the Language of Artificial Intelligence

AI is a rapidly evolving field changing how we interact with technology. However, with so many new buzzwords constantly emerging, it can be hard to keep up with the latest developments in the field.

While some terms may seem abstract without context, their significance becomes clear when combined with a basic understanding of machine learning. Understanding these terms and concepts can lay a powerful foundation that will empower you to make informed decisions within the realm of artificial intelligence.

SCROLL TO CONTINUE WITH CONTENT

With that in mind, we’ve created a comprehensive AI glossary to equip you with the necessary knowledge. From artificial intelligence itself to machine learning and data mining, we’ll decode all the essential AI terms in plain and simple language.

Whether you’re a curious beginner or an AI enthusiast, understanding the following AI concepts will bring you closer to unlocking the power of AI.

FREE DOWNLOAD: This cheat sheet is available as a downloadable PDF from our distribution partner, TradePub. You will have to complete a short form to access it for the first time. Download the AI Glossary Cheat Sheet .

1. Algorithm

An algorithm is a set of instructions or rules machines follow to solve a problem or accomplish a task.

2. Artificial Intelligence

AI is the ability of machines to mimic human intelligence and perform tasks commonly associated with intelligent beings.

3. Artificial General Intelligence (AGI)

AGI, also called strong AI, is a type of AI that possesses advanced intelligence capabilities similar to human beings. While artificial general intelligence was once primarily a theoretical concept and a rich playground for research, many AI developers now believe humanity will reach AGI sometime in the next decade.,

4. Backpropagation

Backpropagation is an algorithm neural networks use to improve their accuracy and performance. It works by calculating error in the output, propagating it back through the network, and adjusting the weights and biases of connections to get better results.

5. Bias

AI bias refers to the tendency of a model to make certain predictions more often than others. Bias can be caused due to the training data of a model or its inherent assumptions.

6. Big Data

Big data is a term that describes datasets that are too large or too complex to process using traditional methods. It involves analyzing vast sets of information to extract valuable insights and patterns to improve decision-making.

7. Chatbot

A chatbot is a program that can simulate conversations with human users through text or voice commands. Chatbots can understand and generate human-like responses, making them a powerful tool for customer service applications.

8. Cognitive Computing

Cognitive computing is an AI field focusing on developing systems that imitate human cognitive abilities, such as perception, learning, reasoning, and problem-solving.

9. Computational Learning Theory

A branch of artificial intelligence that studies algorithms and mathematical models of machine learning. It focuses on the theoretical foundations of learning to understand how machines can acquire knowledge, make predictions, and improve their performance.

10. Computer Vision

Computer vision refers to the ability of machines to extract visual information from digital images and videos. Computer vision algorithms are widely used in applications like object detection, face recognition, medical imaging, and autonomous vehicles.

11. Data Mining

Data mining is the process of acquiring valuable knowledge from large datasets. It uses statistical analysis and machine learning techniques to identify patterns, relationships, and trends in data to improve decision-making.

12. Data Science

Data science involves extracting insights from data using scientific methods, algorithms, and systems. It’s more comprehensive than data mining and encompasses a wide range of activities, including data collection, data visualization, and predictive modeling to solve complex problems.

13. Deep Learning

Deep learning is a branch of AI that uses artificial neural networks with multiple layers (interconnected nodes within the neural network) to learn from vast amounts of data. It enables machines to perform complex tasks, such as natural language processing , image, and speech recognition.

14. Generative AI

Generative AI describes artificial intelligence systems and algorithms that can create text, audio, video, and simulations. These AI systems learn patterns and examples from existing data and use that knowledge to create new and original outputs.


15. Hallucination

AI hallucination refers to instances where a model produces factually incorrect, irrelevant, or nonsensical results. This can happen for several reasons, including a lack of context, limitations in the training data, or the model's architecture.

16. Hyperparameters

Hyperparameters are settings that define how an algorithm or a machine learning model learns and behaves. Unlike a model's parameters, which are learned during training, hyperparameters are chosen beforehand. Examples include the learning rate, regularization strength, and the number of hidden layers in the network. You can tinker with these hyperparameters to fine-tune the model’s performance according to your needs.
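
To make the effect of one hyperparameter concrete, here is a minimal sketch of gradient descent on the toy function f(x) = x². The function `minimize_square` and its default values are hypothetical, chosen only for illustration; the point is that the same algorithm converges or diverges depending on the learning rate.

```python
def minimize_square(learning_rate: float, steps: int = 20, start: float = 5.0) -> float:
    """Run gradient descent on f(x) = x**2 and return the final x."""
    x = start
    for _ in range(steps):
        gradient = 2 * x               # derivative of x**2
        x -= learning_rate * gradient  # step size is set by the hyperparameter
    return x


# A small learning rate moves x steadily toward the minimum at 0.
print(minimize_square(0.1))
# A learning rate that is too large overshoots and grows without bound.
print(minimize_square(1.1))
```

In practice, values like these are usually found by trying several candidates and comparing results on held-out data.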

17. Large Language Model (LLM)

An LLM is a machine learning model trained on vast amounts of text, typically with self-supervised learning, to predict the next token in a given context. Repeating that prediction lets it produce meaningful, contextual responses to user inputs. The word “large” refers to the number of parameters in the language model. For example, GPT-3 has 175 billion parameters and can carry out a wide range of NLP tasks.

18. Machine Learning

Machine learning is a way for machines to learn and make predictions without being explicitly programmed. It’s like feeding a computer with data and empowering it to make decisions or predictions by identifying patterns within the data.

19. Neural Network

A neural network is a computational model inspired by the human brain. It consists of interconnected nodes, or neurons, organized in layers. Each neuron receives input from other neurons in the network, allowing it to learn patterns and make decisions. Neural networks are a key component in machine learning models that enable them to excel in a wide array of tasks.
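
As a rough sketch of how layers of neurons pass values forward, the snippet below computes the output of a tiny network with two inputs, two hidden neurons, and one output neuron. The weights and biases are arbitrary illustrative numbers, not learned values, and `layer` is a hypothetical helper.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """Each neuron takes a weighted sum of all inputs, adds a bias, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hidden layer: two neurons, each connected to both inputs.
hidden = layer([0.5, -1.0], [[0.8, -0.2], [0.4, 0.9]], [0.1, -0.3])
# Output layer: one neuron connected to both hidden neurons.
output = layer(hidden, [[1.2, -0.7]], [0.05])
print(hidden, output)
```

Training a network means adjusting those weights and biases so the output moves closer to the desired result.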

20. Natural Language Generation (NLG)

Natural language generation deals with the creation of human-readable text from structured data. NLG finds applications in content creation, chatbots, and voice assistants.

21. Natural Language Processing (NLP)

Natural language processing is the ability of machines to interpret, understand, and respond to human-readable text or speech. It’s used in various applications, including sentiment analysis, text classification, and question answering.

22. OpenAI

OpenAI is an artificial intelligence research laboratory, founded in 2015 and based in San Francisco, USA. The company develops and deploys AI tools that can appear to be as smart as humans. OpenAI’s best-known product, ChatGPT, was released in November 2022 and was widely regarded at launch as one of the most advanced chatbots for its ability to provide answers on a wide range of topics.

23. Pattern Recognition

Pattern recognition is the ability of an AI system to identify and interpret patterns in data. Pattern recognition algorithms find applications in facial recognition, fraud detection, and speech recognition.

24. Recurrent Neural Network (RNN)

An RNN is a type of neural network that processes sequential data using feedback connections. RNNs can retain a memory of previous inputs, which makes them suitable for tasks like NLP and machine translation.
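
The feedback connection can be sketched with a single recurrent unit whose hidden state is fed back in at every step. The weights `w_in` and `w_rec` are made-up values for illustration, and a real RNN would use vectors and learned weight matrices rather than single numbers.

```python
import math


def rnn_forward(inputs: list[float], w_in: float = 0.5, w_rec: float = 0.9, bias: float = 0.0) -> list[float]:
    """Run a one-unit recurrent network over a sequence and return every hidden state."""
    hidden = 0.0
    states = []
    for x in inputs:
        # The new state depends on the current input AND the previous state.
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states


# The first sequence starts with a signal; the second never has one.
print(rnn_forward([1.0, 0.0, 0.0]))
print(rnn_forward([0.0, 0.0, 0.0]))
```

Although both sequences end with the same inputs, the first keeps a nonzero final state because the network still "remembers" the earlier signal.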

25. Reinforcement Learning

Reinforcement learning is a machine learning technique where an AI agent learns to make decisions by trial and error while interacting with an environment. The agent receives rewards or penalties based on its actions, guiding it to improve its performance over time.
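
A minimal sketch of this trial-and-error loop is the epsilon-greedy strategy on a two-option "bandit" problem. Everything here is an assumption made for illustration: the function `run_bandit`, the reward probabilities, and the exploration rate `epsilon`.

```python
import random


def run_bandit(reward_probs: list[float], steps: int = 2000, epsilon: float = 0.1, seed: int = 0):
    """Learn by trial and error which action pays off most often."""
    rng = random.Random(seed)
    estimates = [0.0] * len(reward_probs)  # running average reward per action
    counts = [0] * len(reward_probs)       # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))  # explore: random action
        else:
            action = max(range(len(reward_probs)), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental update of the average reward for the chosen action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


estimates, counts = run_bandit([0.2, 0.8])
print(estimates, counts)
```

Over many steps the agent's estimates should favor the second action, which pays out more often, so it ends up choosing that action most of the time.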

26. Supervised Learning

Supervised learning is a machine learning method where the model is trained on labeled data, meaning each example is paired with the desired output. The model generalizes from the labeled examples so that it can make predictions on new, unseen data.
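
One of the simplest ways to see learning from labeled data is a nearest-neighbor classifier, sketched below. The training points, the labels, and the helper `predict_1nn` are all hypothetical.

```python
def predict_1nn(train: list[tuple[tuple[float, ...], str]], query: tuple[float, ...]) -> str:
    """Return the label of the labeled example closest to `query`."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, label = min(train, key=lambda example: squared_distance(example[0], query))
    return label


# Labeled data: (features, desired output)
train = [
    ((1.0, 1.0), "small"),
    ((1.5, 2.0), "small"),
    ((8.0, 9.0), "large"),
    ((9.0, 8.5), "large"),
]

print(predict_1nn(train, (2.0, 1.5)))  # closest to a "small" example
print(predict_1nn(train, (7.5, 8.0)))  # closest to a "large" example
```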

27. Tokenization

Tokenization is the process of splitting a text document into smaller units called tokens. These tokens can represent words, numbers, phrases, symbols, or any elements in text that a program can work with. The purpose of tokenization is to make the most sense out of unstructured data without processing the entire text as a single string, which is computationally inefficient and difficult to model.
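
As a simple illustration, the sketch below splits text into word and punctuation tokens with a regular expression. The `tokenize` function is a hypothetical helper; production language models typically use subword tokenizers instead, which this example does not attempt to reproduce.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split text into runs of word characters and single punctuation marks."""
    # \w+ matches letters, digits, and underscores; [^\w\s] matches one symbol.
    return re.findall(r"\w+|[^\w\s]", text)


print(tokenize("Hello, world!"))  # ['Hello', ',', 'world', '!']
```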

28. Turing Test

Introduced by Alan Turing in 1950, this test evaluates a machine’s ability to exhibit intelligence indistinguishable from that of a human. The Turing test involves a human judge interacting with a human and a machine without knowing which is which. If the judge fails to distinguish the machine from the human, the machine is considered to have passed the test.

29. Unsupervised Learning

Unsupervised learning is a machine learning method where the model makes inferences from unlabeled datasets. It discovers patterns in the data, such as natural groupings, without being told the desired output.
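
Clustering is a common example of finding structure without labels. The sketch below runs a basic k-means loop on one-dimensional numbers; the data, the starting centers, and the function `kmeans_1d` are assumptions chosen for illustration.

```python
def kmeans_1d(values: list[float], centers: list[float], iterations: int = 10):
    """Group numbers around the nearest center, then move each center to its group's mean."""
    clusters: list[list[float]] = []
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Keep the old center if a cluster ends up empty.
        centers = [
            sum(group) / len(group) if group else centers[i]
            for i, group in enumerate(clusters)
        ]
    return centers, clusters


values = [1.0, 1.2, 0.8, 9.0, 9.5, 10.1]
centers, clusters = kmeans_1d(values, centers=[0.0, 5.0])
print(centers)
print(clusters)
```

No labels are supplied, yet the loop separates the low values from the high ones on its own.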

Embracing the Language of Artificial Intelligence

AI is a rapidly evolving field changing how we interact with technology. However, with so many new buzzwords constantly emerging, it can be hard to keep up with the latest developments in the field.

While some terms may seem abstract without context, their significance becomes clear when combined with a basic understanding of machine learning. Understanding these terms and concepts can lay a powerful foundation that will empower you to make informed decisions within the realm of artificial intelligence.

- Title: AI Jargon Uncovered: Essential Terms for Tech Professionals

- Author: Brian

- Created at: 2024-08-10 02:10:37

- Updated at: 2024-08-11 02:10:37

- Link: https://tech-savvy.techidaily.com/ai-jargon-uncovered-essential-terms-for-tech-professionals/

- License: This work is licensed under CC BY-NC-SA 4.0.

Any DRM Removal for Win:Remove DRM from Adobe, Kindle, Sony eReader, Kobo, etc, read your ebooks anywhere.

Any DRM Removal for Win:Remove DRM from Adobe, Kindle, Sony eReader, Kobo, etc, read your ebooks anywhere.

Screensaver Factory, Create stunning professional screensavers within minutes. Create screensavers for yourself, for marketing or unlimited royalty-free commercial distribution. Make screensavers from images, video and swf flash, add background music and smooth sprite and transition effects. Screensaver Factory is very easy to use, and it enables you to make self-installing screensaver files and CDs for easy setup and distribution. Screensaver Factory is the most advanced software of its kind.

Screensaver Factory, Create stunning professional screensavers within minutes. Create screensavers for yourself, for marketing or unlimited royalty-free commercial distribution. Make screensavers from images, video and swf flash, add background music and smooth sprite and transition effects. Screensaver Factory is very easy to use, and it enables you to make self-installing screensaver files and CDs for easy setup and distribution. Screensaver Factory is the most advanced software of its kind.

OtsAV Radio Webcaster

OtsAV Radio Webcaster

WonderFox DVD Ripper Pro

WonderFox DVD Ripper Pro /a>

/a>

Greeting Card Builder

Greeting Card Builder

EaseText Audio to Text Converter for Windows (Personal Edition) - An intelligent tool to transcribe & convert audio to text freely

EaseText Audio to Text Converter for Windows (Personal Edition) - An intelligent tool to transcribe & convert audio to text freely

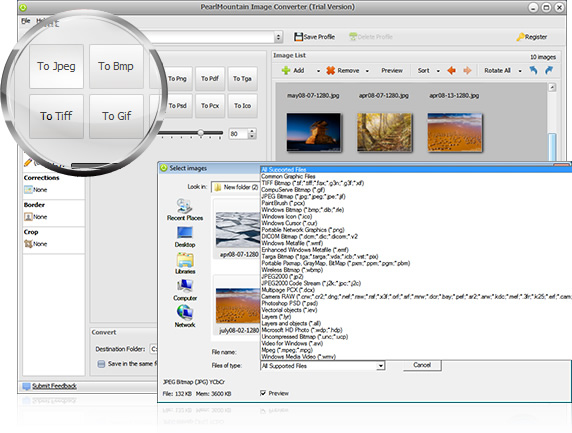

PearlMountain Image Converter

PearlMountain Image Converter

LYRX is an easy-to-use karaoke software with the professional features karaoke hosts need to perform with precision. LYRX is karaoke show hosting software that supports all standard karaoke file types as well as HD video formats, and it’s truly fun to use.

LYRX is an easy-to-use karaoke software with the professional features karaoke hosts need to perform with precision. LYRX is karaoke show hosting software that supports all standard karaoke file types as well as HD video formats, and it’s truly fun to use.

vMix HD - Software based live production. vMix HD includes everything in vMix Basic HD plus 1000 inputs, Video List, 4 Overlay Channels, and 1 vMix Call

vMix HD - Software based live production. vMix HD includes everything in vMix Basic HD plus 1000 inputs, Video List, 4 Overlay Channels, and 1 vMix Call