Apple Vision Pro Overview: In-Depth Analysis of Costs, Capabilities & Hands-On Reviews for Tech Enthusiasts

Why You Won’t Find New Apple AI Capabilities on Previous iPhones - Insights for Tech Enthusiasts

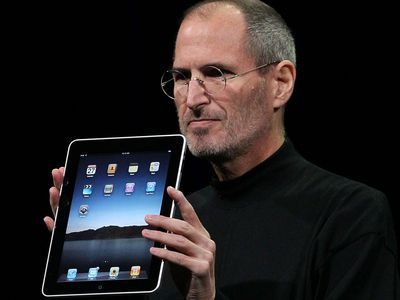

Jason Hiner/ZDNET

During WWDC 2024, Apple unveiled “Apple Intelligence,” which brings advanced AI capabilities throughout its ecosystem. However, these features are only available on high-end devices such as the iPhone 15 Pro, iPad Pro models with M-series chips, and Macs running on Apple silicon.

Why didn’t Apple roll these features out to the entry-level iPhone 15 and earlier models? Although there may be other reasons why the company chose not to do so, the decision is almost certainly influenced by the substantial costs and infrastructure challenges involved in large-scale AI implementation.

The cost of GPU processing

Advanced AI features require substantial computational power, typically provided by high-performance GPUs. For instance, NVIDIA’s MGX servers built with the GH200 Grace Hopper Superchip, designed for AI training, inference, 5G, and HPC, cost around $65,000 each. Deploying these servers regionally to support lower-end devices would be prohibitively expensive. Apple would easily need thousands of these units to support its entire user base, and the resulting costs would likely be passed on to consumers through service fees.
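
To put “thousands of these units” in perspective, the short sketch below multiplies the roughly $65,000 per-server price cited above by a few fleet sizes. The fleet sizes are hypothetical values chosen only to illustrate scale, not Apple figures, and the estimate covers hardware purchase only (no power, cooling, networking, staffing, or data-center space).

```python
# Rough, hardware-only cost estimate for a GPU server fleet.
# Assumption: ~$65,000 per NVIDIA MGX/GH200 server, as cited in the article.
# The fleet sizes below are hypothetical and only illustrate scale.

UNIT_PRICE_USD = 65_000


def fleet_cost(units: int, unit_price: int = UNIT_PRICE_USD) -> int:
    """Return the purchase cost in USD of `units` servers (hardware only)."""
    if units < 0:
        raise ValueError("units must be non-negative")
    return units * unit_price


if __name__ == "__main__":
    for units in (1_000, 5_000, 10_000):
        print(f"{units:>6,} servers -> ${fleet_cost(units):,}")
```

Even at the low end of “thousands,” 1,000 servers come to $65 million, and 10,000 servers reach $650 million before any operating costs are counted.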

Even major AI service providers such as OpenAI, Microsoft, and Google struggle to offer the general public dependable, fast access to large language models (LLMs) and other generative AI models without downtime or overcommitting resources. The shortage and high cost of GPU-enabled servers make these problems worse. To maintain the rapid response times its customers expect, Apple will need to invest substantially in servers, data centers, and edge infrastructure, at a scale it likely does not yet possess.

Apple’s approach to Private Cloud Compute (PCC)

For the initial rollout of Apple Intelligence, the company has chosen a hybrid approach that balances cost and performance by combining on-device processing with Private Cloud Compute (PCC). On-device processing uses the A17 Pro chip in the iPhone 15 Pro line and the M-series chips in iPads and Macs, which keeps data on the device and strengthens security and privacy. For more demanding tasks, PCC offloads the work to Apple’s cloud servers while still protecting user privacy. PCC servers are built on custom Apple silicon and run a hardened operating system designed to keep personal data secure and prevent unauthorized access.

Apple is currently focused on rolling out its generative AI services to high-end devices as the initial phase of the Apple Intelligence deployment. This gives Apple time to strengthen its AI capabilities and infrastructure before expanding to a wider range of devices. To bring Apple Intelligence to the rest of its ecosystem, the company will likely deploy AI-accelerated server appliances at the edge so that less capable devices can benefit from advanced AI features. However, that infrastructure is not yet ready for large-scale deployment, because Apple’s shift toward AI development is still recent.

The challenges of edge computing

Edge computing, which processes data closer to where it is generated instead of relying solely on centralized data centers, could significantly improve performance and reduce latency. However, deploying edge computing infrastructure is complex and costly, and it requires robust hardware and software to ensure seamless integration and security. Apple is known for its meticulous approach to hardware and software development, so the company is likely still testing and refining its edge computing solutions before rolling them out at scale.

NVIDIA is a major player in the GPU server space, but other options include traditional x86 servers based on Intel processors and Arm-based servers from providers such as Qualcomm and Ampere. These servers can also use NVIDIA GPUs, but Apple likely wants to control how AI computing integrates with its own operating system and silicon. In addition, NVIDIA and other HPC server vendors likely cannot supply hardware in the volumes that Apple’s large-scale deployment would require.

As reported by The Register, Apple is developing its own AI servers, which are expected to be more cost-effective and better integrated with its ecosystem. These servers are currently being tested in data centers for running foundation models, and a broader rollout is anticipated in 2025.

Broader implications for IoT and other devices

Apple’s decision to limit Apple Intelligence to high-end models is driven largely by the cost and infrastructure challenges of deploying AI at scale. Starting with these devices lets the company ensure a smooth and secure user experience while laying the groundwork for future expansion.

The need for AI-accelerated servers isn’t just about older phones and lower-end devices. Apple’s IoT products, such as the Apple Watch, Apple TV, and HomePod, lack the computational power for on-device AI and would also benefit from such infrastructure. Because these devices are unlikely to handle on-device AI computation anytime soon, cloud and edge solutions become even more critical.

As Apple introduces Apple Intelligence, users with older or non-Pro models may feel left out. Clear communication from Apple regarding the phased rollout strategy and plans for broader deployment will be important in managing user expectations.

As Apple continues developing its AI infrastructure, including potential edge computing solutions, we expect Apple Intelligence to reach a broader range of devices in the coming years. This phased approach lets Apple maintain its high standards for privacy, security, and user experience while gradually expanding its AI capabilities across its device lineup.

- Author: Brian

- Created at : 2024-10-08 08:54:03

- Updated at : 2024-10-14 22:59:40

- Link: https://tech-savvy.techidaily.com/apple-vision-pro-overview-in-depth-analysis-of-costs-capabilities-and-hands-on-reviews-for-tech-enthusiasts/

- License: This work is licensed under CC BY-NC-SA 4.0.