Artificial Sentiments for Financial Frauds

Online dating apps have always been a hotbed for romance scams. Cybercriminals go above and beyond to steal money, personal information, and explicit photos. You’ll find their fake profiles everywhere.

And with the proliferation of generative AI tools, romance scams are becoming even easier to execute. They lower the barriers to entry. Here are seven common ways romance scammers exploit AI—plus how you can protect yourself.

1. Sending AI-Generated Emails en Masse

Spam emails are getting harder to filter. Romance scammers abuse generative AI tools to write misleading, convincing messages and create multiple accounts within hours. They can approach hundreds of targets almost instantly.

You’ll see AI-generated spam messages across various platforms, not just your email inbox. Take the wrong number scam as an example. Crooks send cute selfies or suggestive photos en masse. And if anyone responds, they’ll play it off as an innocent mistake.

Once someone is on the line, the scammer moves the conversation to another messaging platform (e.g., WhatsApp or Telegram). Most schemes run for weeks. Scammers gradually build trust before asking targets to join investment schemes, shoulder their bills, or pay for trips.

Stay safe by avoiding spam messages entirely. Limit your engagement with strangers, regardless of how they look or what they offer.

2. Responding to More Conversations Quickly

Bots are spreading like wildfire online. Imperva reports that bad bots made up roughly 30 percent of all internet traffic in 2022. You’ll likely run into one within seconds of swiping through Tinder matches.

One of the reasons for this sudden spike in bots is the proliferation of generative AI tools, which let scammers churn out bots in bulk. Just input the right prompt, and the tool will present a complete, working code snippet for bot generation.

Know when you’re talking to a bot. Although AI uses a natural, conversational tone, its dialogue still sounds monotonous and awkward. After all, chatbots merely follow patterns. They might produce similar responses to different questions, statements, and requests.
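The "similar responses to different questions" signal can be roughly quantified. The sketch below is only an illustration, not a real bot detector: it uses Python's standard `difflib.SequenceMatcher` to measure how many pairs of replies are near-duplicates. The function name `repetition_score` and the 0.8 threshold are assumptions made for this example.

```python
from difflib import SequenceMatcher
from itertools import combinations


def repetition_score(replies, threshold=0.8):
    """Return the fraction of reply pairs that are near-duplicates.

    `threshold` is an arbitrary, hypothetical cutoff on the
    SequenceMatcher similarity ratio (0.0 = nothing in common, 1.0 = identical).
    """
    pairs = list(combinations(replies, 2))
    if not pairs:
        # Fewer than two replies: nothing to compare.
        return 0.0
    similar = sum(
        1
        for a, b in pairs
        if SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold
    )
    return similar / len(pairs)


# Replies received after asking three unrelated questions.
sample_replies = [
    "That sounds great! Tell me more about yourself.",
    "That sounds great! Tell me more about yourself!",
    "That sounds great, tell me more about yourself.",
]
print(repetition_score(sample_replies))
```

A high score after several unrelated questions suggests scripted or templated replies. A low score proves nothing, since modern chatbots can vary their wording, so treat this as one warning sign among many.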

3. Creating Multiple Identities From Stolen Images

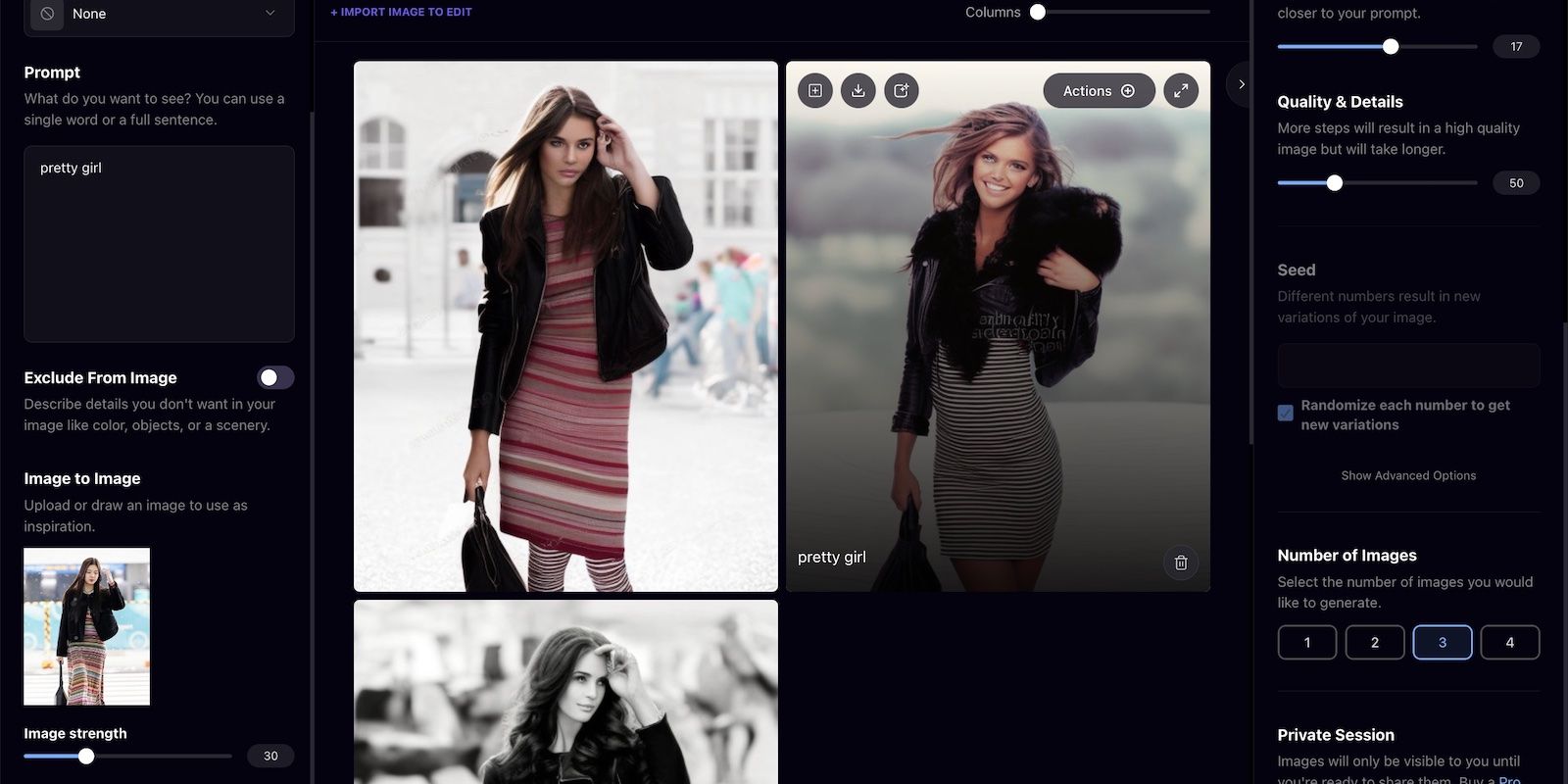

AI art generators manipulate images. Take this demonstration as an example. We fed Playground AI a candid photograph of a famous singer, and the platform produced three variations within seconds.

Yes, they have flaws. But note that we used a free tool running an outdated text-to-image model. Scammers produce more realistic output with sophisticated iterations. They can quickly render hundreds of customized, manipulated photos from just a few samples.

Unfortunately, AI images are hard to detect. Your best bet would be to do a reverse image search and sift through relevant results.

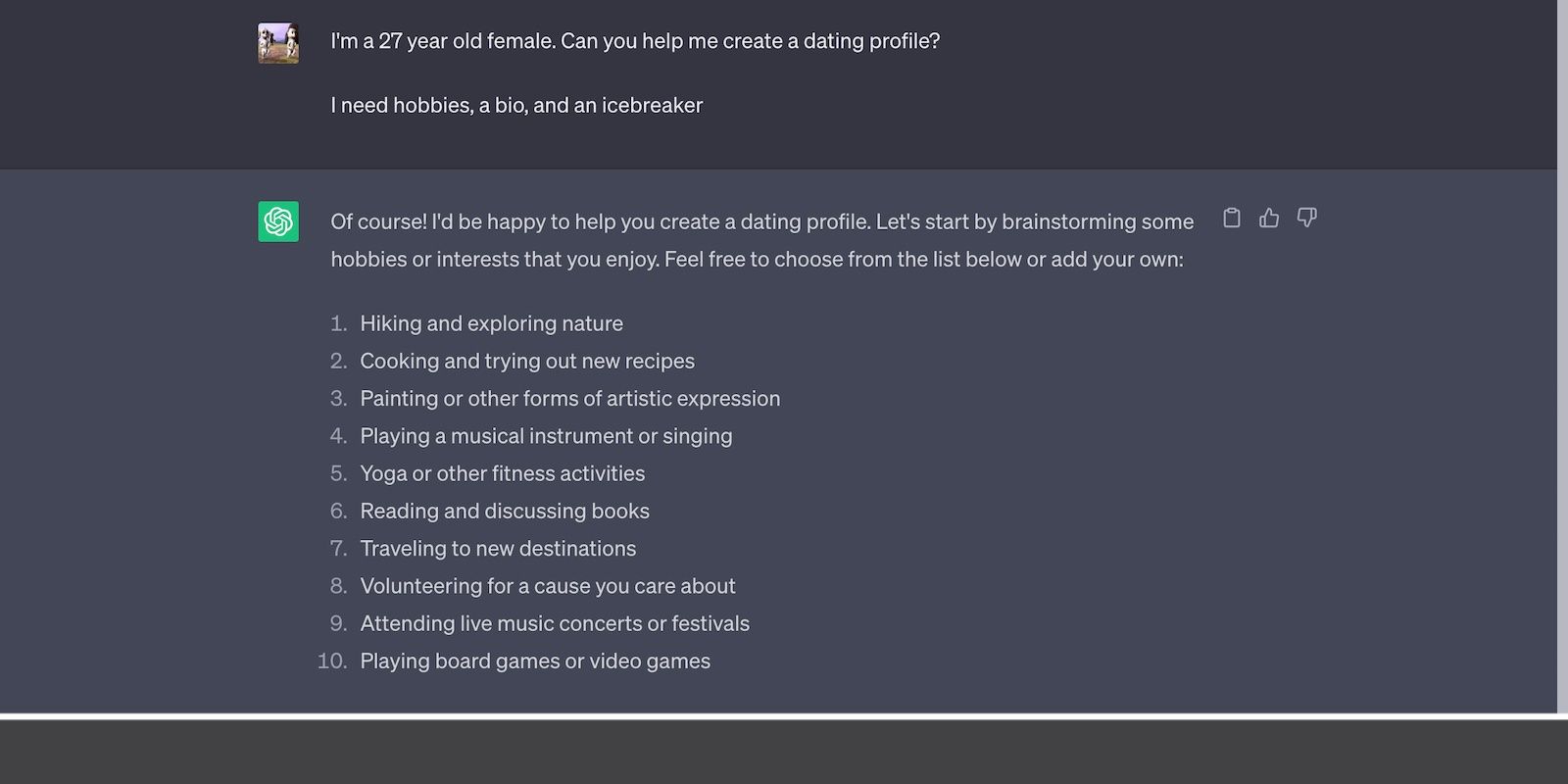

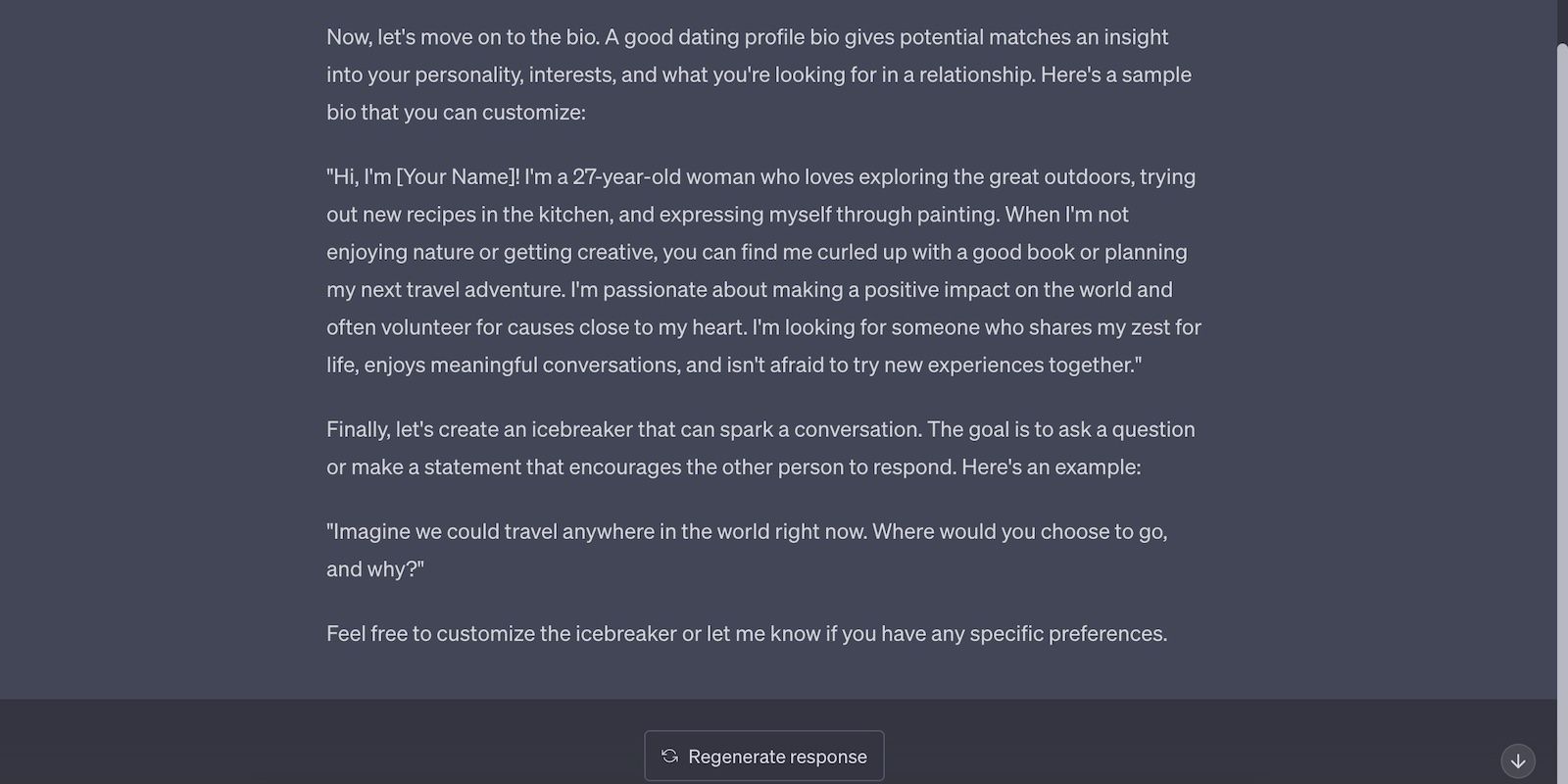

4. Building Deceptively Authentic-Looking Profiles

Bots approach victims en masse. So romance scammers who prefer a targeted scheme create just one or two authentic-looking profiles. They’ll use AI to seem convincing. Generative AI tools can compose authentic-looking descriptions that sound natural and genuine; bad grammar will no longer be an issue.

Here’s ChatGPT suggesting some hobbies to list on a dating profile.

And here’s ChatGPT writing an entire biography for your dating profile.

Since this process consumes so much time, it also requires a bigger payoff. So, scammers tend to ask for more. Once they earn your trust, they’ll ask for help with various “problems,” like hospital bills, loan payments, or tuition fees. Some will even promise to visit you if you pay for their ticket.

These cybercriminals are skilled at manipulating victims. The best tactic is to avoid engaging with them right from the get-go. Don’t let them say anything. Otherwise, you might gradually fall for their deception and gaslighting methods.

5. Exploiting Deepfake Technology for Sexual Extortion

AI has advanced deepfake tools at an alarmingly fast rate. New technologies reduce minor imperfections in deepfake videos, like unnatural blinking, uneven skin tones, distorted audio, and inconsistent elements.

Unfortunately, these errors used to serve as red flags. Enabling users to remove them makes it harder to differentiate between legitimate and deepfake videos.

Bloomberg shows how anyone with basic tech knowledge can manipulate their voice and visuals to replicate others.

Apart from creating realistic dating profiles, scammers exploit deepfake tools for sexual extortion. They blend public photos and videos with pornography. After manipulating illicit content, they’ll blackmail victims and demand money, personal data, or sexual favors.

Don’t cave in if you get targeted. Call 1-800-CALL-FBI, send an FBI tip, or visit your local FBI field office should you find yourself in this situation.

6. Integrating AI Models With Brute-Force Hacking Systems

While open-source language models support some AI advancements, they’re also prone to exploitation. Criminals will take advantage of anything. You can’t expect them to ignore the algorithms behind highly sophisticated language models like LLaMA and OpenAssistant.

In romance scams, hackers often integrate language models with password cracking. AI’s NLP and machine learning capabilities enable brute-force hacking systems to produce password combinations quickly and efficiently. They could even make informed predictions if provided with enough context.

You have no control over what scammers do. To protect your accounts, make sure you create a truly secure password that is at least 14 characters long and combines letters, numbers, and special characters.
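As a rough illustration of that advice, here is a minimal Python sketch that generates a random password with the standard `secrets` module and checks it against the rule of thumb above (14+ characters, with letters, digits, and special characters). The helper names `meets_policy`, `generate_password`, and `search_space_bits` are made up for this example, and the 94-character alphabet is an assumption; individual sites may allow a different character set.

```python
import math
import secrets
import string

# Assumed alphabet: ASCII letters, digits, and punctuation (94 characters).
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def meets_policy(password, min_length=14):
    """Check the rule of thumb: 14+ characters with letters, digits, and specials."""
    return (
        len(password) >= min_length
        and any(c.isalpha() for c in password)
        and any(c.isdigit() for c in password)
        and any(c in string.punctuation for c in password)
    )


def generate_password(length=16):
    """Draw random passwords with `secrets` until one satisfies the policy."""
    if length < 14:
        raise ValueError("length must be at least 14")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if meets_policy(candidate):
            return candidate


def search_space_bits(length, alphabet_size=len(ALPHABET)):
    """Size of the brute-force search space, in bits, for a uniformly random password."""
    return length * math.log2(alphabet_size)


print(generate_password())
print(round(search_space_bits(14), 1), "bits for 14 random characters")
```

The bit estimate only holds for passwords chosen at random. A password built from names, dates, or other personal details is far easier to guess, especially for a tool that can make informed predictions from context, so a password manager's generator is usually the safer route.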

7. Imitating Real People With Voice Cloning

AI voice generators started as a cool toy. Users would turn sample tracks of their favorite artists into covers or even new songs. Take Heart on My Sleeve as an example. TikTok user Ghostwriter977 made a super-realistic song imitating Drake and The Weeknd, although neither artist sang it.

Despite the jokes and memes around it, speech synthesis is very dangerous. It enables criminals to execute sophisticated attacks. For instance, romance scammers exploit voice cloning tools to call targets and leave deceptive recordings. Victims who are unfamiliar with speech synthesis won’t notice anything unusual.

Protect yourself from AI voice clone scams by studying how synthesized outputs sound. Explore these generators. They merely create near-identical clones—you’ll still spot some imperfections and inconsistencies.

Protect Yourself Against AI Dating Scammers

As generative AI tools advance, romance scammers will develop new ways to exploit them. Developers can’t stop these criminals. Take a proactive role in fighting cybercrimes instead of just trusting that security restrictions work. You can still use dating apps. But make sure you know the person on the other side of the screen before engaging with them.

And watch out for other AI-assisted schemes. Besides romance scams, criminals use AI for identity theft, cyber extortion, blackmail, ransomware attacks, and brute-force hacking. Learn to combat these threats as well.

- Title: Artificial Sentiments for Financial Frauds

- Author: Brian

- Created at : 2024-08-10 02:10:12

- Updated at : 2024-08-11 02:10:12

- Link: https://tech-savvy.techidaily.com/artificial-sentiments-for-financial-frauds/

- License: This work is licensed under CC BY-NC-SA 4.0.
