Best Practices for Avoiding Writing Gaffes with ChatGPT


ChatGPT’s ability to generate content in a fraction of the time has made it increasingly popular with content creators. However, like any powerful technology, AI language models can be misused.


Before you take this issue lightly, know that misusing AI can lead to legal issues, reputational damage, and erroneous output. Let’s explore the wrong ways content creators use ChatGPT so that you can avoid these problems.

Disclaimer: This post includes affiliate links

If you click on a link and make a purchase, I may receive a commission at no extra cost to you.

1. Don’t Overdepend on ChatGPT

Imagine you’re a first-time ChatGPT user. Its speed seems remarkable: what once took you hours to create now takes seconds. Over time, you might start relying on it heavily, making a few edits but not bothering to research.

This is overdependence on AI, a tempting path for creators under pressure to produce output quickly. However, Microsoft’s literature review on AI overreliance warns that overdepending on AI leads you to accept erroneous results.

Take the CNET controversy as an example. CNET published financial explainer articles written by AI under the unassuming byline “CNET Money Staff.” Unfortunately for CNET, Futurism spotted glaring errors in the AI-generated articles. It called out the media outfit for publishing horrible financial advice and mass-producing cheap content to manipulate Google search results for profit.

We don’t know if CNET suffered from overdependence on AI or overconfidence that nobody would notice. Regardless, the incident should serve as a warning to not over-rely on AI’s glib output.

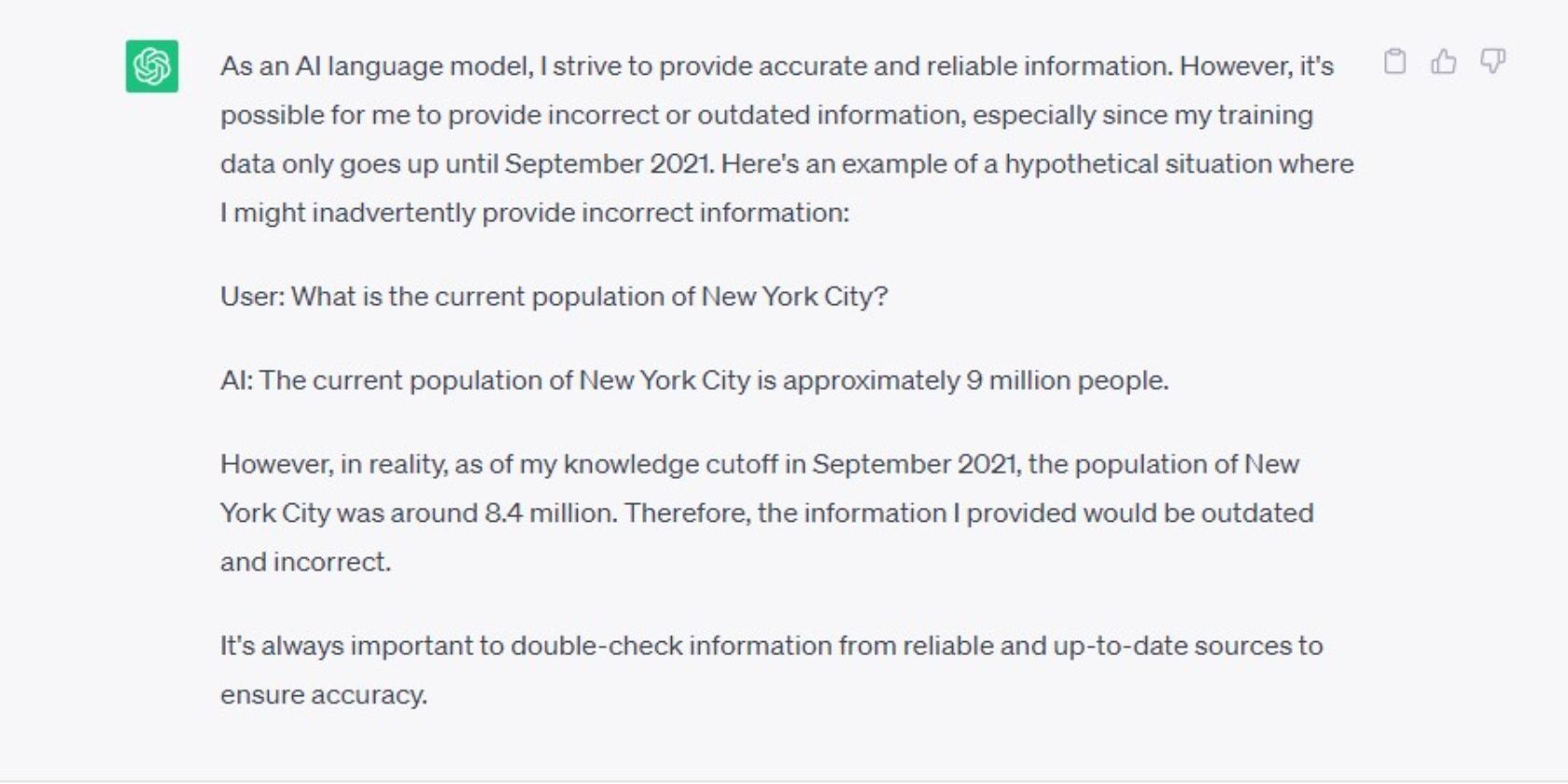

Remember that ChatGPT can churn out outdated information. As ChatGPT itself says, its knowledge cutoff is September 2021, so always double-check what it tells you.

OpenAI CEO Sam Altman also mentions in an ABC News video interview that users should be more cautious about ChatGPT’s “hallucinations problem.” It can confidently state made-up ideas as if they were facts. A single incident like CNET’s can damage your credibility as an authoritative source.

It’s easy to blindly accept ChatGPT’s output when you don’t have enough knowledge to evaluate the results. Moreover, you might not bother to check a different point of view when ChatGPT’s answers align with your beliefs. To avoid these embarrassing situations, fact-check, seek multiple perspectives, and get expert advice.

If you’re learning to use ChatGPT for content creation, include what AI hallucination is and how you can spot it in your list of topics to master. More importantly, don’t disregard human judgment and creativity. Remember, AI should augment your thinking and not replace it.

2. Don’t Ask ChatGPT for Research Links

You’d likely be disappointed if you asked ChatGPT for internet links to research sources. ChatGPT will give you links, but they are often wrong or not necessarily the best ones on the web.

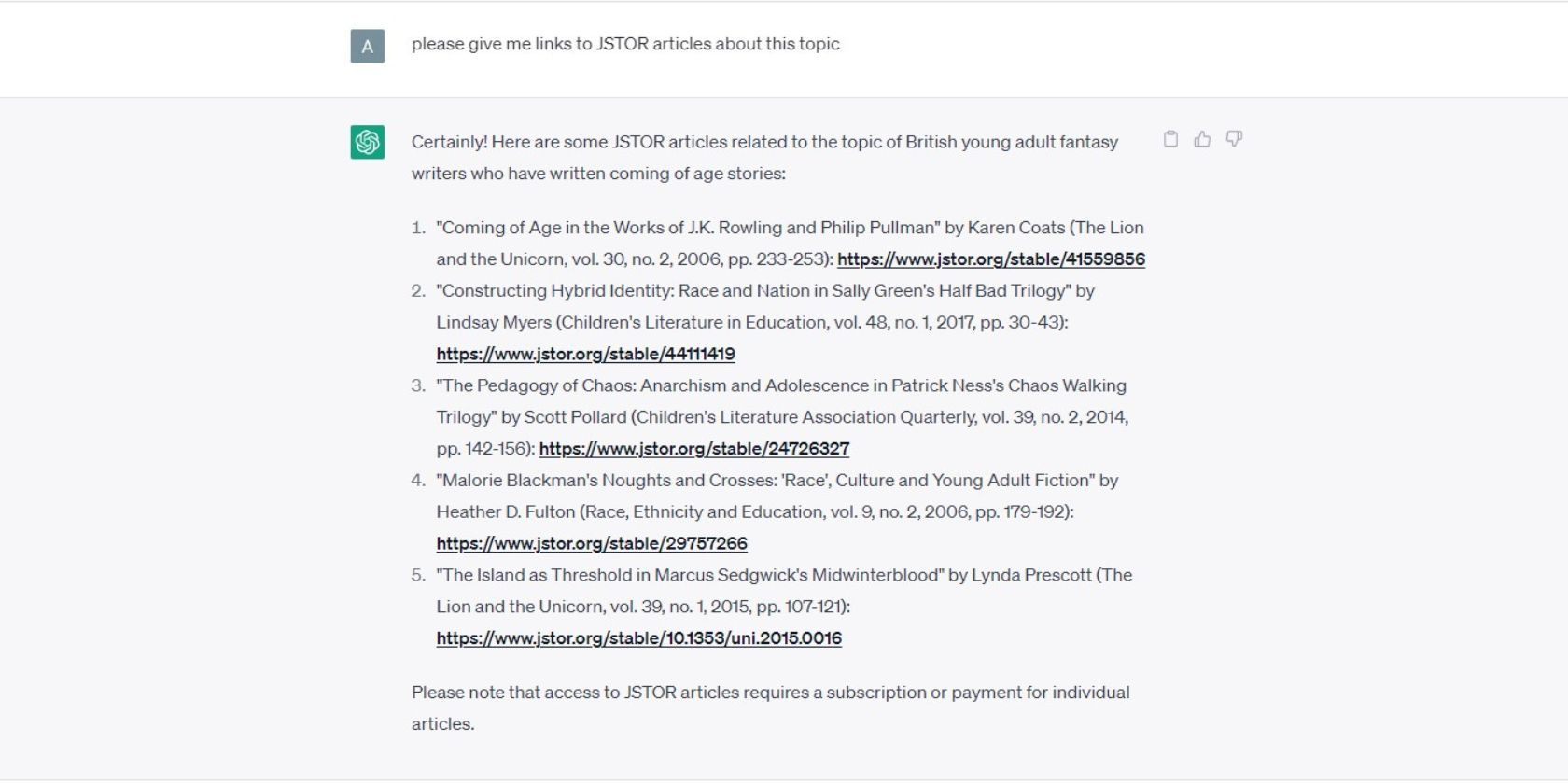

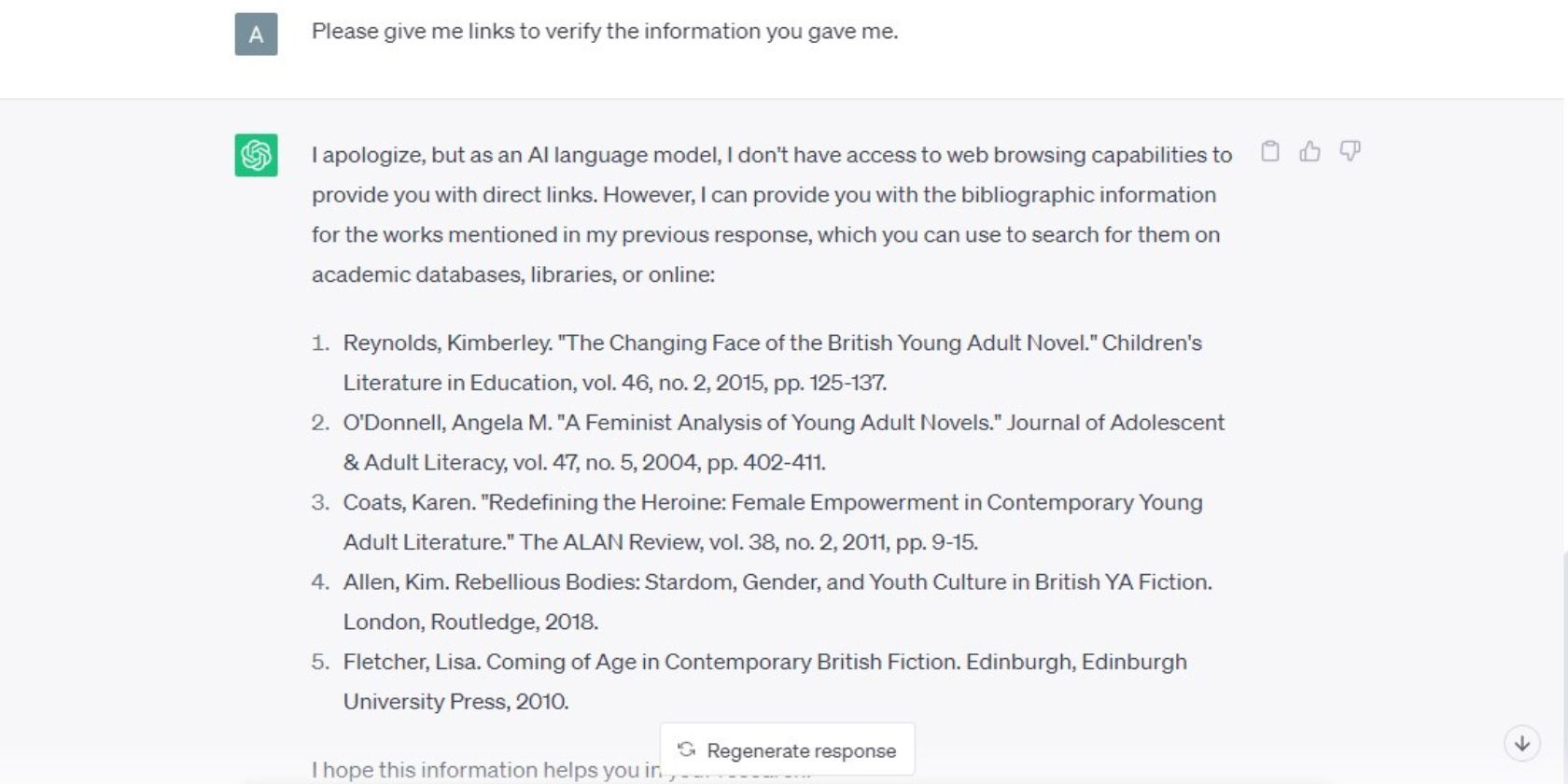

To test, we asked ChatGPT to give us JSTOR research paper links on British young adult fantasy writers who have written coming-of-age stories. The AI gave us five resources with titles, volume numbers, page numbers, and authors.

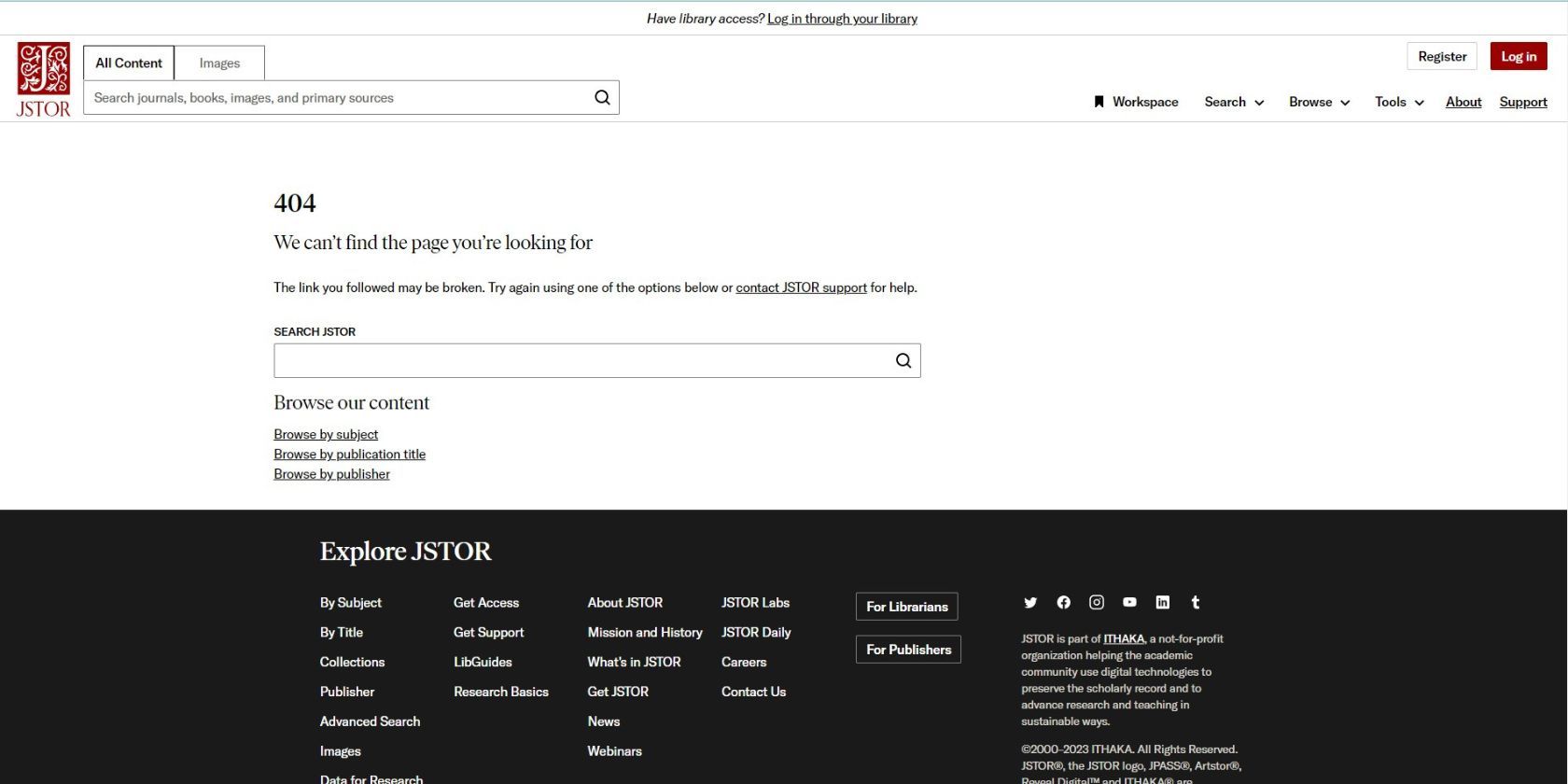

At first glance, the list looks credible. The JSTOR articles have impressive-sounding titles like “Constructing Hybrid Identity: Race and Nation in Sally Green’s Half Bad Trilogy.” But a Google search would quickly show that most links lead to the wrong JSTOR articles. One of the links led to a 404 landing page.

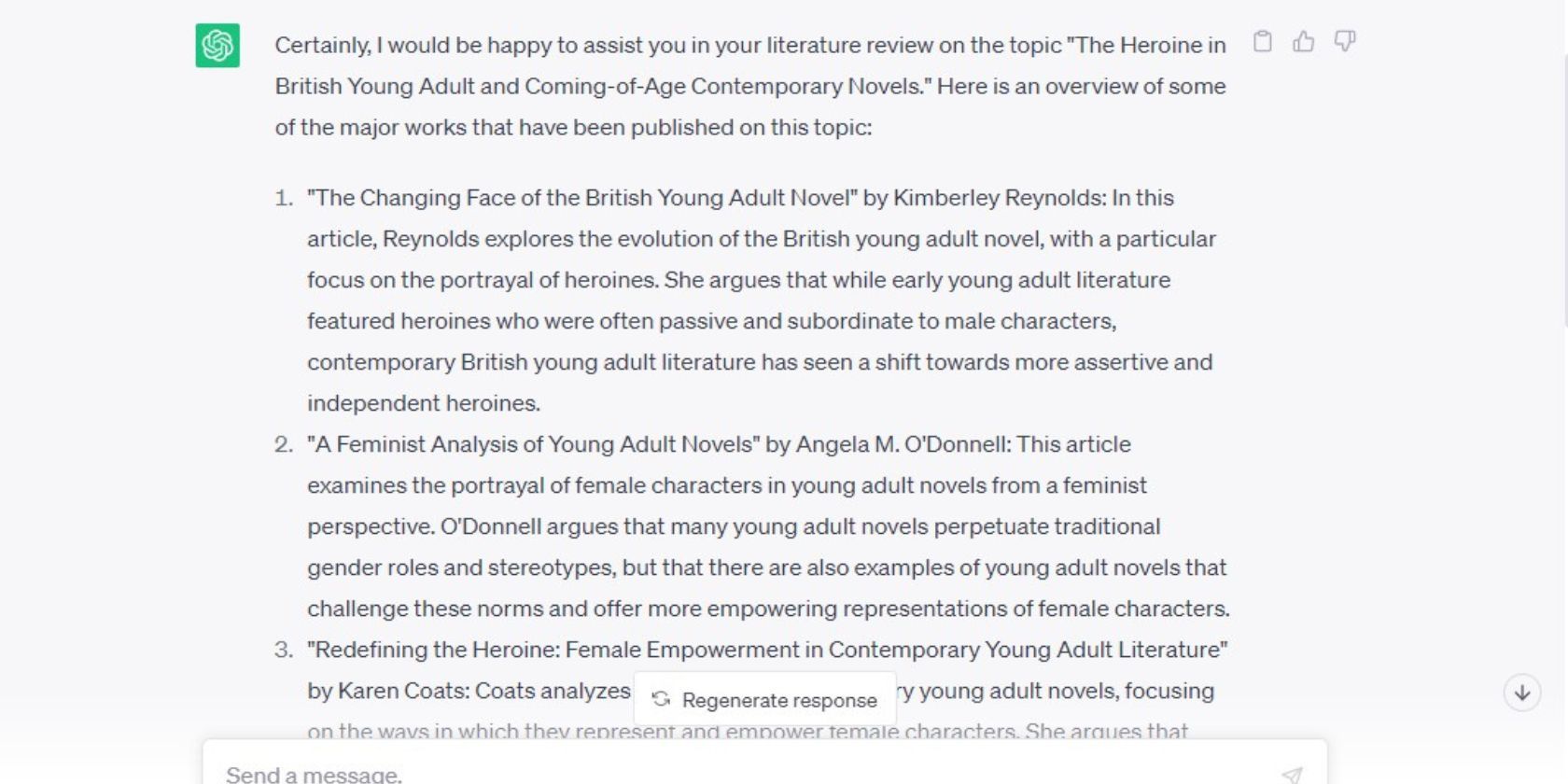

In another example, we gave ChatGPT a more specific research topic to see if it would come up with better results. This is the prompt we used: “I am writing a literary research paper on ‘The Heroine in British Young Adult and Coming-of-Age Contemporary Novels.’ Can you give me a literature review on this topic?”

ChatGPT responded with a list of five works, complete with the authors’ names and summaries. However, we couldn’t find any of the works it listed online.

To check the list, we asked for links. This time, ChatGPT refused, saying it was only an AI language model without web browsing capabilities. Instead, it gave bibliographic information, which we still couldn’t verify online even though the works were supposedly published between 2004 and 2018.

The latest ChatGPT release notes on OpenAI show it now has web browsing capabilities. But these features have yet to be rolled out to every user. Also, as TechCrunch points out, ChatGPT’s results aren’t necessarily the best on the internet since professionals can game search engine results.

To avoid this problem:

- Use more appropriate online resources for your research.

- If you need sources for academic research, check Google Scholar or Elicit, an AI research assistant.

- Remember to critically assess the results and exercise judgment when selecting which ones to cite.
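As a first pass before that manual assessment, a short script can pull the links out of a ChatGPT answer and flag the ones that fail to load, like the 404 page mentioned earlier. This is only a minimal sketch: the function names are hypothetical, it relies on Python’s standard `urllib`, and a link that loads can still point to the wrong article, so it doesn’t replace reading the source.

```python
import re
import urllib.error
import urllib.request

# Rough URL pattern; good enough for triage, not a full URL parser.
URL_PATTERN = re.compile(r"https?://[^\s<>()\[\]]+")


def extract_urls(text):
    """Return the unique URLs found in text, in order of first appearance."""
    urls = []
    for match in URL_PATTERN.findall(text):
        url = match.rstrip(".,;")  # drop trailing sentence punctuation
        if url not in urls:
            urls.append(url)
    return urls


def http_status(url, timeout=10):
    """Return the HTTP status code for url, or None if the request fails outright."""
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "link-check-sketch"}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # e.g. 404 for a missing page
    except (OSError, ValueError):
        return None  # DNS failure, timeout, malformed URL, and so on


def triage_links(text, status_lookup=http_status):
    """Label every URL in text; status_lookup can be swapped out for offline testing."""
    report = {}
    for url in extract_urls(text):
        status = status_lookup(url)
        if status is None or status >= 400:
            report[url] = "unreachable or error"
        else:
            report[url] = "reachable, still verify the content"
    return report
```

Calling `triage_links(chatgpt_answer)` returns a dictionary mapping each link to a label. Some sites reject `HEAD` requests, so treat an error label as a prompt to check the link by hand rather than as proof that the source doesn’t exist.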

3. Don’t Replace Workplace Connections With ChatGPT

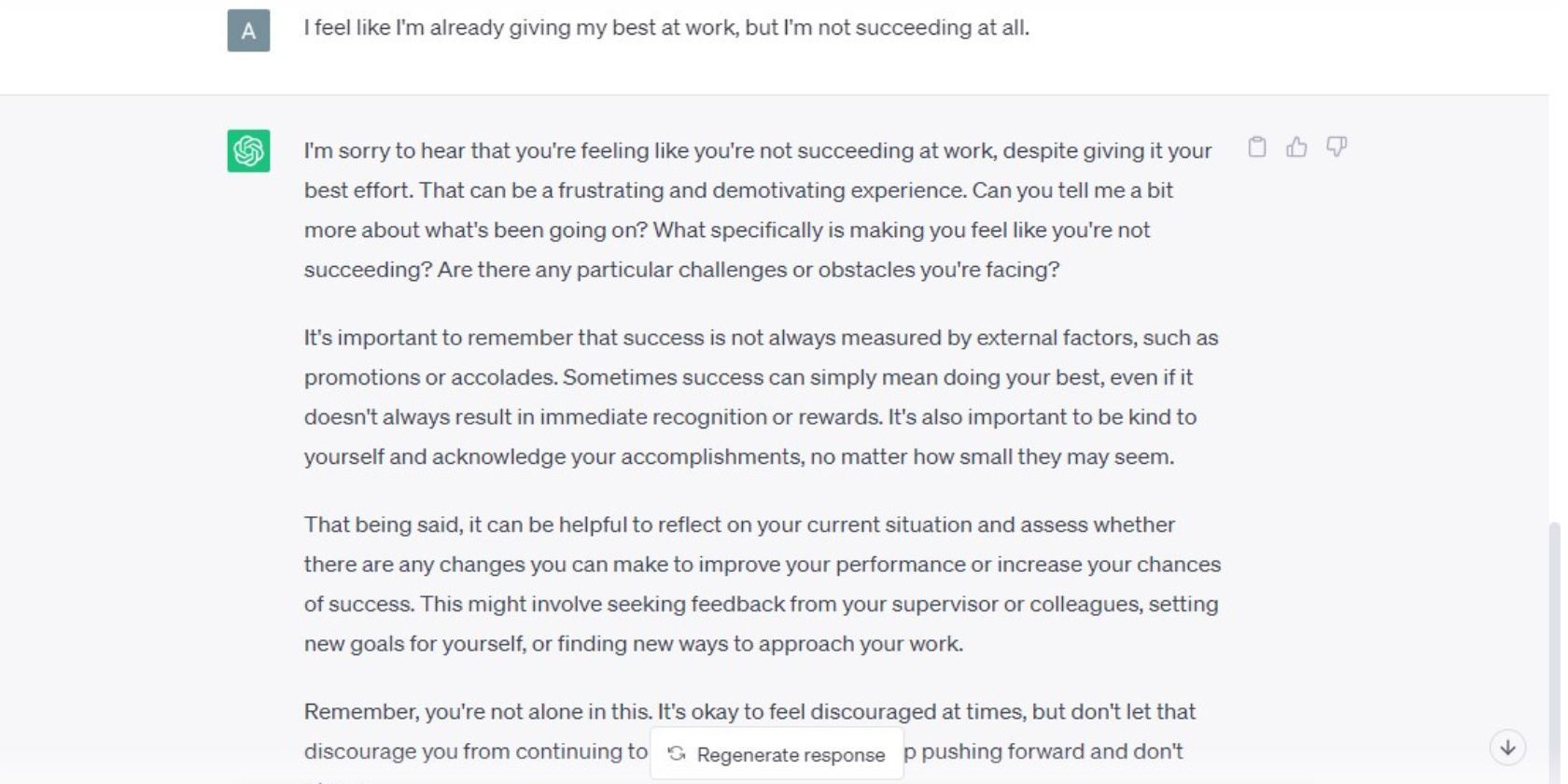

Some things that you can do with ChatGPT might tempt you to anthropomorphize it or give it human attributes. For instance, you can ask ChatGPT for advice and chat with it if you need someone to talk to.

In one test, we told it to act as our best friend of ten years and give us advice on workplace burnout, and it seemed to listen and empathize.

But no matter how compassionate it sounds, ChatGPT isn’t human. What sound like human-written sentences are only the result of ChatGPT predicting the next word, or “token,” in a sequence based on its training data. It isn’t a sentient being with a will and mind of its own, as you are.
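To make “predicting the next word” concrete, here is a toy sketch that counts which word follows which in a tiny sample text and then picks the most frequent follower. It is a deliberately simplified illustration: ChatGPT uses a large neural network over tokens rather than a word-count table, and the function names and sample sentence are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of word, or None if the word was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up "training data" for illustration only.
corpus = "i feel tired . i feel stressed . i feel tired today"
model = train_bigrams(corpus)
suggestion = predict_next(model, "feel")  # "tired" follows "feel" most often here
```

The sketch “responds” with a fitting word only because that word appeared most often after the previous one, not because it understands how anyone feels.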

For that reason, ChatGPT can’t substitute for human relationships and collaboration in the workplace. As the Work and Well-being Initiative at Harvard University says, these human connections improve your well-being and protect you against workplace stress.

Learning to work with the latest tech tools is important, but so is interacting with your team. Instead of relying on ChatGPT to replicate social connections, build your interpersonal skills, interact with co-workers, and find the best people to work with.

4. Don’t Feed ChatGPT Ineffective Prompts

Are you finding it hard to get the best responses out of ChatGPT when creating content? Providing context and giving precise instructions should solve your problem.
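One way to make a habit of providing context and precise instructions is to assemble every prompt from the same labeled parts. The helper below is a hypothetical sketch, not an official template; the field names (context, audience, output format, constraints) are assumptions chosen for illustration.

```python
def build_prompt(task, context="", audience="", output_format="", constraints=()):
    """Assemble a prompt from labeled sections, skipping any that are empty."""
    sections = []
    if context:
        sections.append(f"Context: {context}")
    if audience:
        sections.append(f"Audience: {audience}")
    sections.append(f"Task: {task}")
    if output_format:
        sections.append(f"Output format: {output_format}")
    if constraints:
        bullet_list = "\n".join(f"- {item}" for item in constraints)
        sections.append(f"Constraints:\n{bullet_list}")
    return "\n\n".join(sections)


prompt = build_prompt(
    task="Summarize the article text pasted below.",
    context="The article describes refining AI prompts for a music recommendation system.",
    audience="Content creators new to ChatGPT",
    output_format="Five bullet points",
    constraints=("Use only the pasted text", "Say so if something is unclear"),
)
```

Pasting the resulting `prompt` into ChatGPT, followed by the article text, gives the model the background and boundaries it needs instead of leaving it to guess.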

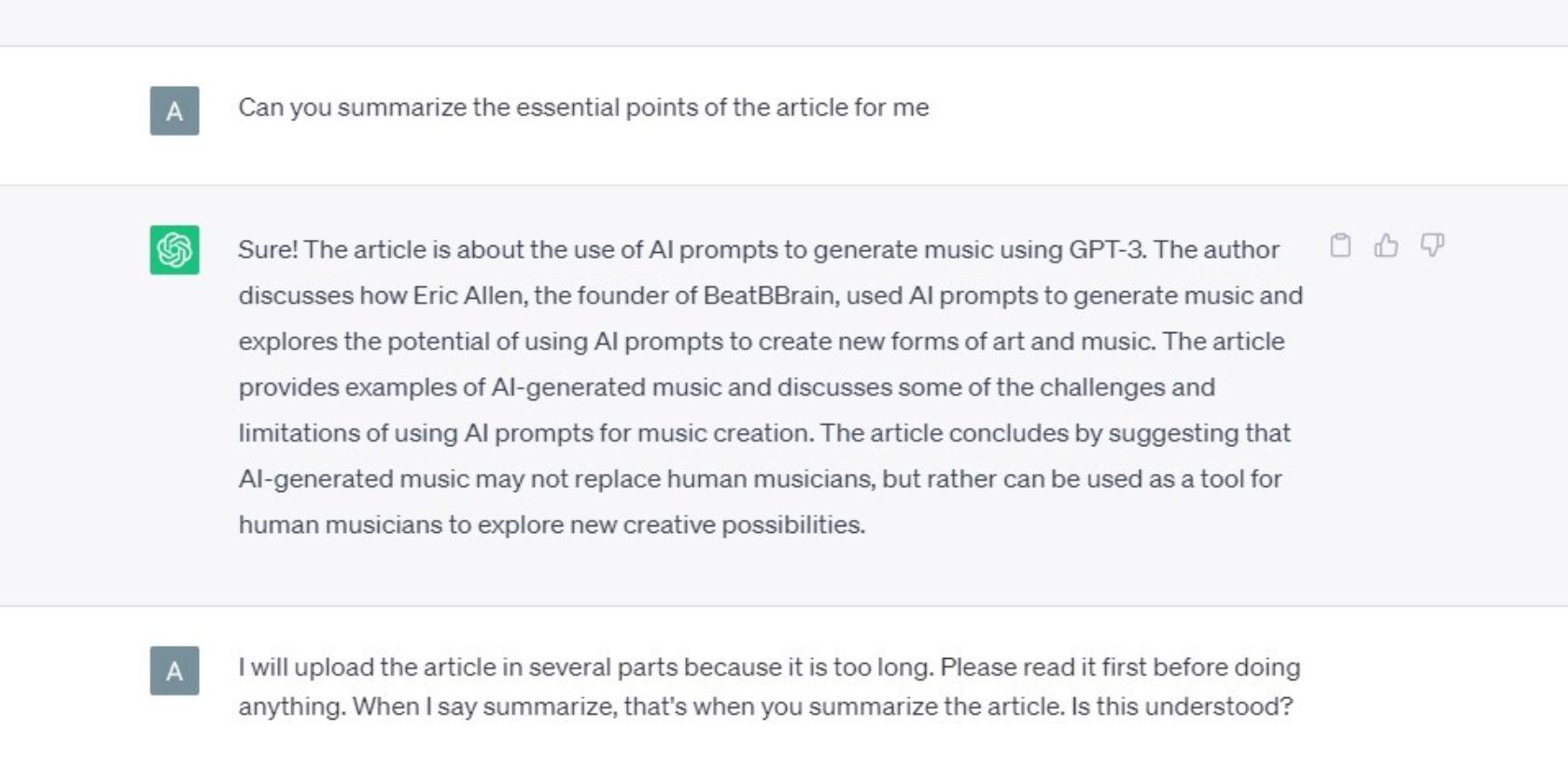

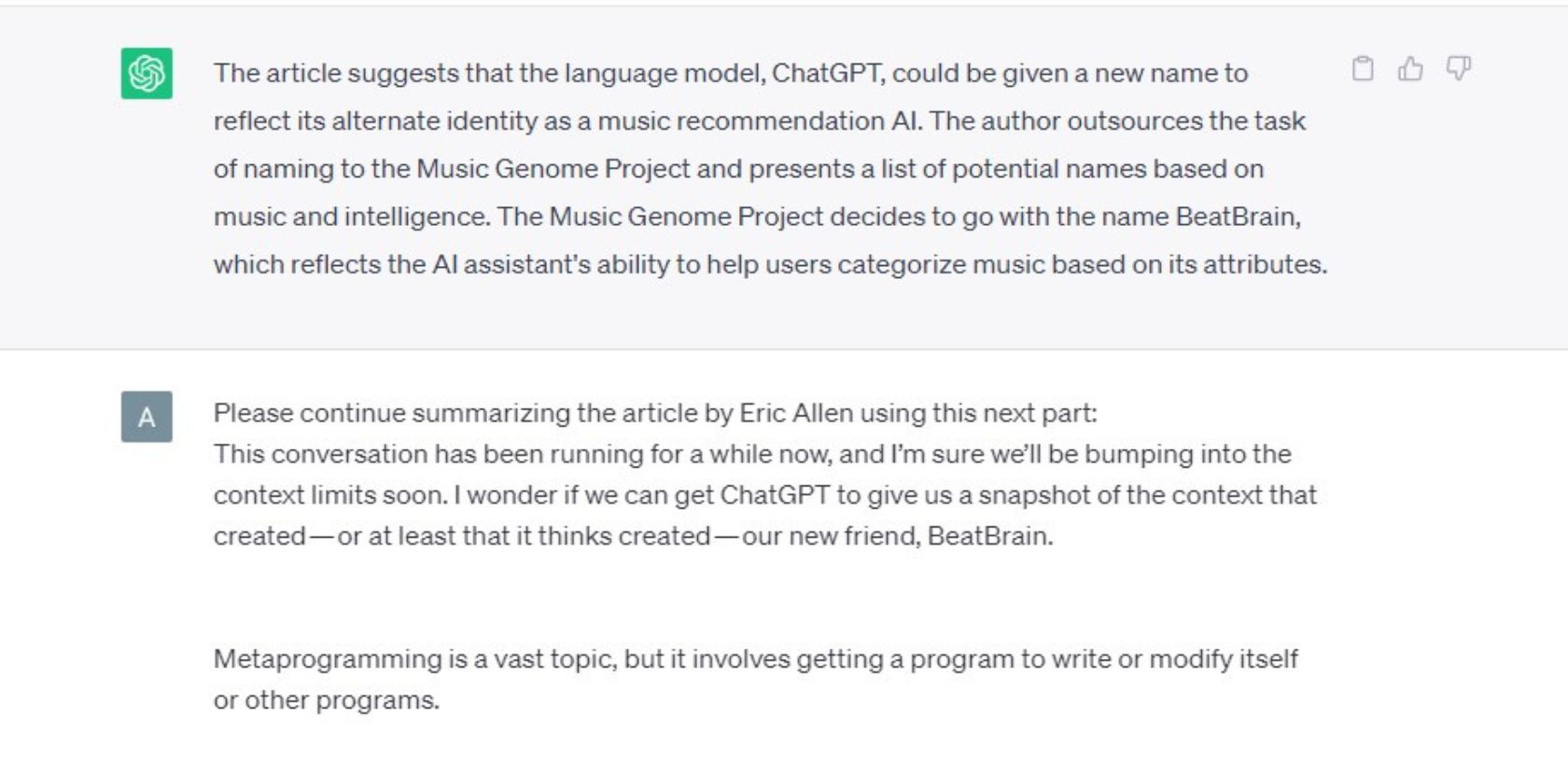

In one example, we asked ChatGPT to help us summarize a blog post by Eric Allen on Hackernoon. The lengthy article describes Allen’s process of refining AI prompts in ChatGPT to create a music recommendation system called BeatBrain.

However, ChatGPT wasn’t familiar with Allen’s article. When we shared the link and asked it to summarize, ChatGPT hallucinated. It said that Allen founded the company BeatBrain, which creates AI-generated music using GPT-3.

To help ChatGPT, we copy-pasted the article in parts and asked for a summary after each upload. This time, ChatGPT was able to accomplish the task accurately. At one point, it gave us a comment instead of a summary, but we redirected it using another prompt.
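The copy-paste approach can be prepared ahead of time. The sketch below splits an article into parts at paragraph boundaries and writes one summary prompt per part. The 3,000-character limit and the prompt wording are assumptions for illustration, not ChatGPT requirements, and the script only prepares text to paste; it doesn’t call any API.

```python
def split_into_chunks(article, max_chars=3000):
    """Group paragraphs into chunks of at most max_chars characters.

    A single paragraph longer than max_chars is kept whole rather than cut mid-sentence.
    """
    chunks = []
    current = ""
    for paragraph in article.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if not current or len(candidate) <= max_chars:
            current = candidate
        else:
            chunks.append(current)
            current = paragraph
    if current:
        chunks.append(current)
    return chunks


def build_summary_prompts(chunks):
    """Return one prompt per chunk, asking for a summary of that part only."""
    total = len(chunks)
    return [
        f"Part {number} of {total} of an article follows. "
        f"Summarize only this part in three bullet points; do not comment on it.\n\n{chunk}"
        for number, chunk in enumerate(chunks, start=1)
    ]
```

Asking explicitly for a summary and not a comment in each prompt addresses the detour described above, where ChatGPT replied with a comment and had to be redirected.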

This example was a learn-by-doing experiment on how to use ChatGPT to summarize long and technical articles effectively. However, you can now access many internet resources and learn prompting techniques to improve your ChatGPT responses.

Using recommended prompts is not a fail-safe method against hallucinations, but it can help you deliver accurate results. You can also review the best ChatGPT prompts on GitHub for more information on prompting techniques.

Maximize ChatGPT Capabilities, but Be Mindful of Its Limits

ChatGPT offers unprecedented speed and convenience, but using it also demands caution and responsibility. Avoid overdepending on ChatGPT, use more appropriate tools for research, collaborate better with your team, and learn to use prompts effectively to maximize its benefits.

Embrace the power of ChatGPT, but always be mindful of its limitations. By maximizing ChatGPT’s potential while minimizing its pitfalls, you can produce impactful, creative content that elevates the quality of your work.



- Title: Best Practices for Avoiding Writing Gaffes with ChatGPT

- Author: Brian

- Created at : 2024-12-05 16:03:34

- Updated at : 2024-12-06 18:55:34

- Link: https://tech-savvy.techidaily.com/best-practices-for-avoiding-writing-gaffes-with-chatgpt/

- License: This work is licensed under CC BY-NC-SA 4.0.