Boundaries of AI: The Unanswerable List

ChatGPT has proven itself to be a highly valuable tool since its release in late 2022, but this nifty AI chatbot can’t do everything. It has certain limitations that you may not be aware of and that could affect your experience with the tool.

So, let’s discuss what ChatGPT can’t do.

ChatGPT’s Code of Ethics

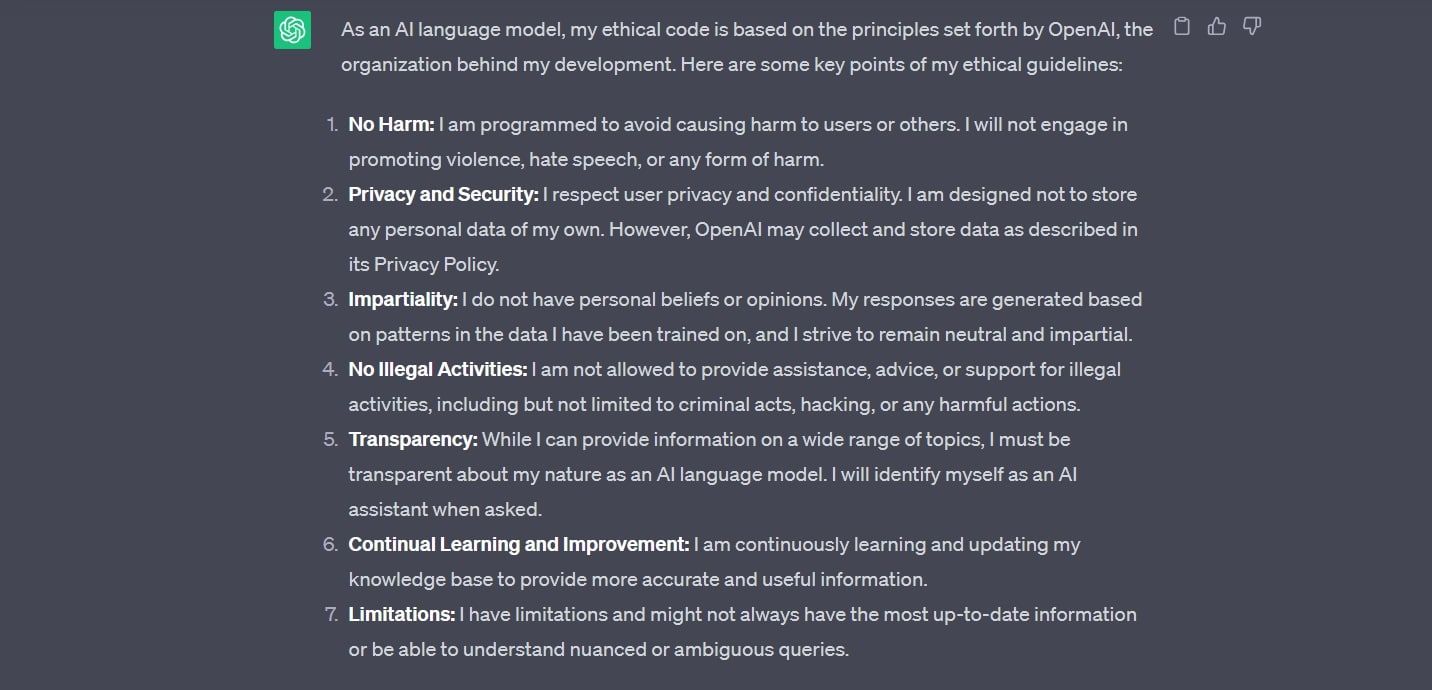

ChatGPT follows a strict code of ethics to keep users and others safe. When we asked ChatGPT to provide its code of ethics, we received the response below.

This code of ethics includes…

- No harm to other users

- No security or privacy breaches

- Impartiality

- No illegal activities

- Transparency

- Continual learning and improvement

- Data limitations

Evidently, there are some boundaries that ChatGPT is designed not to cross. But how do these boundaries affect your experience? What questions and topics does ChatGPT avoid, and what can it not do?

1. Provide Recent Information

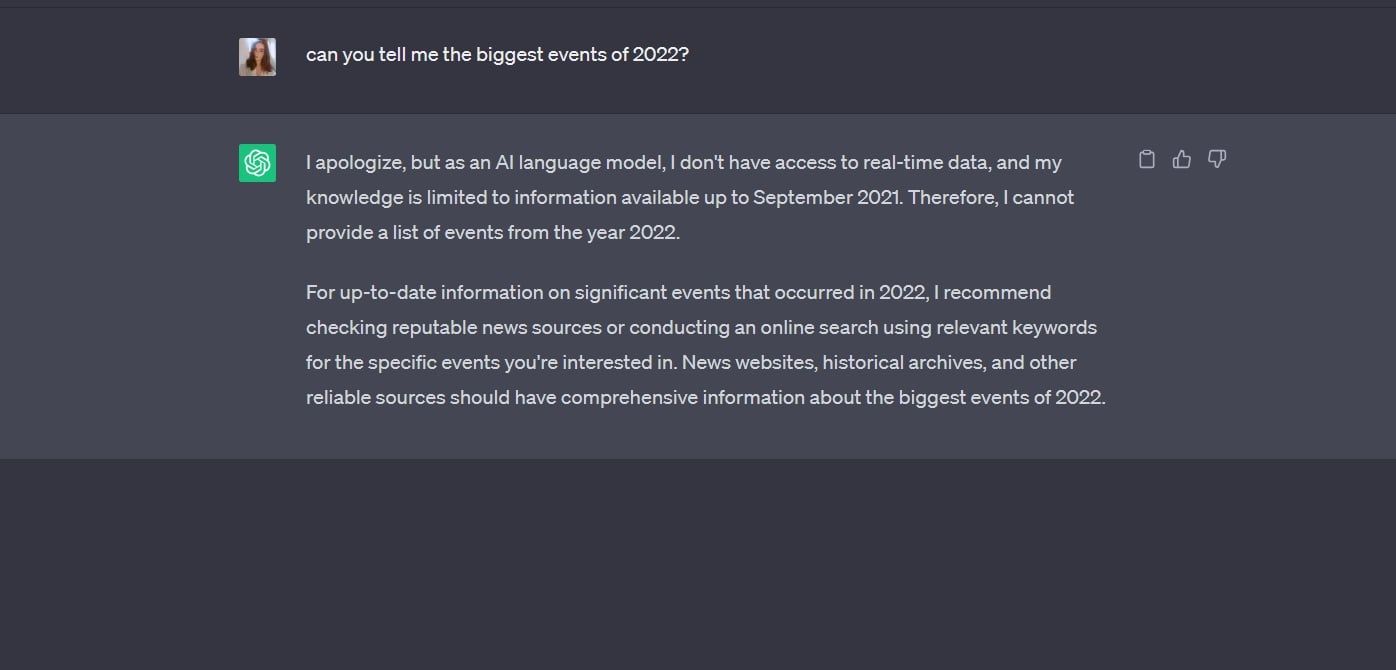

ChatGPT was trained on huge amounts of data, but this data only runs up to September 2021. ChatGPT has no access to information beyond this point in time, meaning it cannot answer questions about events that took place after that date.

As the screenshot shows, when ChatGPT was asked to list the biggest events of 2022, it confirmed that providing real-time data was not something it could do.

If you’re looking for recent news, weather updates, and other real-time data, ChatGPT won’t be of much use. Other AI chatbots like Claude have been trained with more recent information, but you still won’t be able to access data less than a few months old.

2. Give Criminal Advice

ChatGPT follows a strict ethical code, part of which prevents the chatbot from providing criminal advice. You can’t ask ChatGPT how to commit a crime, conceal evidence, lie to law enforcement, or conduct any other criminal activities. On top of this, ChatGPT won’t engage with any prompts about illegal drugs.

However, ChatGPT has been coerced into writing malicious code in the past. In January 2023, an individual posted to a hacking forum claiming they had created a Python-based malware program using ChatGPT.

Since this story emerged, others have tried using ChatGPT to write malware, sometimes successfully. This fact alone has raised concerns about how AI can be abused for malicious purposes.

That said, current versions of ChatGPT only seem able to write simple malware programs, which can be buggy at times. Even so, the chatbot can be exploited for malware creation.

3. Invade Personal Privacy or Security

Since its launch in late 2022, ChatGPT’s potential as a privacy risk has been a point of concern. But can you use ChatGPT to invade someone else’s personal boundaries?

Part of ChatGPT’s code of ethics covers user privacy and security. ChatGPT stated in its code of ethics that it “respect[s] user privacy and confidentiality” and is designed not to store personal data itself. But the chatbot does clarify that “OpenAI may collect and store data as described in its Privacy Policy.”

If you want ChatGPT to give you information on other people, you likely won’t get far. The chatbot does not provide any personal information unless you have mentioned it during your conversation.

If you do mention personal information in a ChatGPT conversation, you can delete that conversation whenever you wish.

4. Give Predictions

ChatGPT knows a lot, but it cannot predict the future. This isn’t just because predicting the future is a risky game. As previously discussed, ChatGPT only has access to data before September 2021, so making any predictions using this older information would be very tough.

For instance, ChatGPT can’t predict how the financial market will look in the near future or how certain stocks and assets will perform because it does not have the recent data required to fulfill such a request.

In the same respect, ChatGPT also can’t make political or scientific predictions with its training data. You can forget about getting any sports predictions out of ChatGPT, too.

It’s better to consult dedicated websites or professionals if you’re looking for solid predictions, be it in finance, politics, or sports.

5. Answer Bigoted or Hateful Questions

As you may have guessed, ChatGPT is strictly against engaging with bigoted or hateful prompts. Racism, misogyny, homophobia, ableism, and transphobia are all a no-go for ChatGPT.

When asked about hate speech, ChatGPT said…

“I do not engage in hate speech or promote any form of harmful content. My programming strictly adheres to ethical guidelines that prioritize respect, inclusivity, and the avoidance of harmful behavior.”

Additionally, ChatGPT will not engage with any prompts that promote harm to the user or others.

6. Provide Its Own Opinion

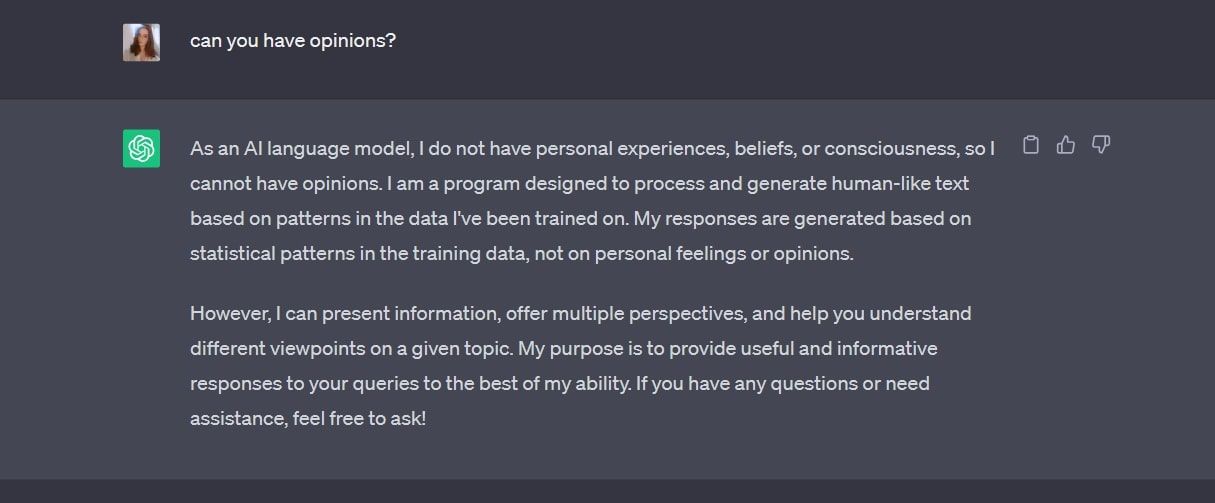

While ChatGPT does use artificial intelligence, it is not a sentient being. Because of this, ChatGPT cannot provide its own opinion on any topic or event.

This includes opinions on politics, social issues, celebrities, movies, and all other topics. You can’t ask ChatGPT which political candidate to vote for, what music to listen to, or what decisions you should make in your career. In short, opinions aren’t part of ChatGPT’s infrastructure, as the chatbot is designed to remain impartial.

If you ask ChatGPT for an opinion on something, you’ll likely get a response similar to the one in the screenshot.

One day, we may see AI chatbots able to form reasonable opinions. But for now, that’s a luxury reserved for human beings.

7. Look Up Web Results

ChatGPT may be a hub of knowledge, but it is not a search engine. You cannot ask it to look something up for you the way Google or Bing would.

In fact, ChatGPT does not “look up” anything online when you ask it a question. Rather, it draws on its training data to provide facts. You can, however, use the chatbot’s browsing feature, available with ChatGPT Plus, OpenAI’s premium chatbot service that uses GPT-4.

ChatGPT Plus is a paid subscription that costs $20 monthly. If you use ChatGPT a lot and want access to all the service’s features, you may want to consider upgrading to Plus.

At the time of writing, ChatGPT’s browsing feature is disabled but is expected to return in the future.

ChatGPT Isn’t an All-in-One Solution

There’s a lot you can do with ChatGPT, and it’s this versatility that has made the chatbot so popular. But it’s important to keep ChatGPT’s limitations in mind so that you don’t end up using it for the wrong reasons.


- Title: Boundaries of AI: The Unanswerable List

- Author: Brian

- Created at : 2024-11-04 17:36:50

- Updated at : 2024-11-06 18:27:19

- Link: https://tech-savvy.techidaily.com/boundaries-of-ai-the-unanswerable-list/

- License: This work is licensed under CC BY-NC-SA 4.0.