Can I Expect Variable Response Length in ChatGPT Conversations?

Key Takeaways

- ChatGPT responses are not limitless in length, despite what the chatbot itself claims. There are hidden limits determined by the token system, past conversations, and system demand.

- ChatGPT’s token system counts both your queries and its answers. The available context length varies depending on the GPT model used.

- To get longer responses from ChatGPT, you can ask it to continue, break your question into smaller sections, use the regenerate option, specify a word count limit, or start a new conversation. These techniques can help you work around the unofficial limits and receive more complete answers.

ChatGPT is big news. But how big are the responses you get from this all-purpose chatbot?

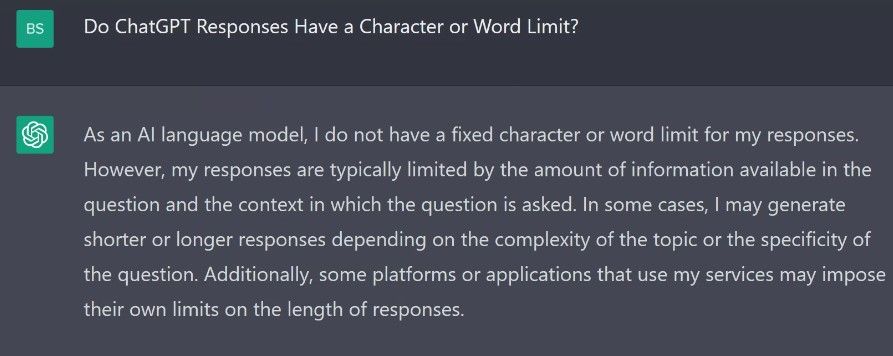

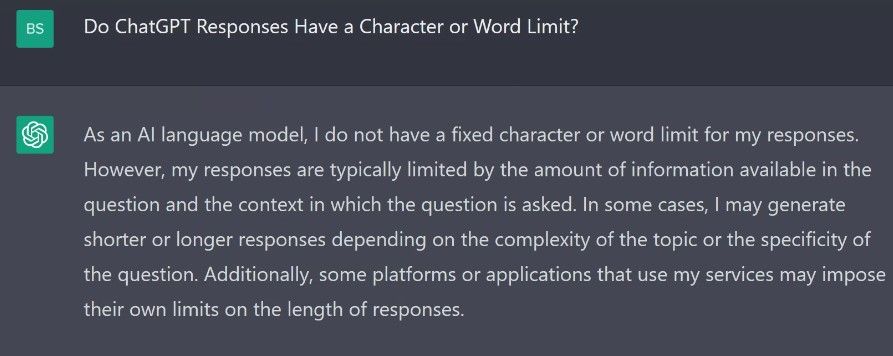

Establishing this is not as simple as you may think. For starters, ask ChatGPT this question, and you will be assured that there are no set limits to the length of its responses.

However, as we uncover here, it isn’t that straightforward. There are hidden limits to the length of a ChatGPT response, but there are also some nifty and simple workarounds to help you get longer answers.

How Does ChatGPT Determine the Length of a Response?

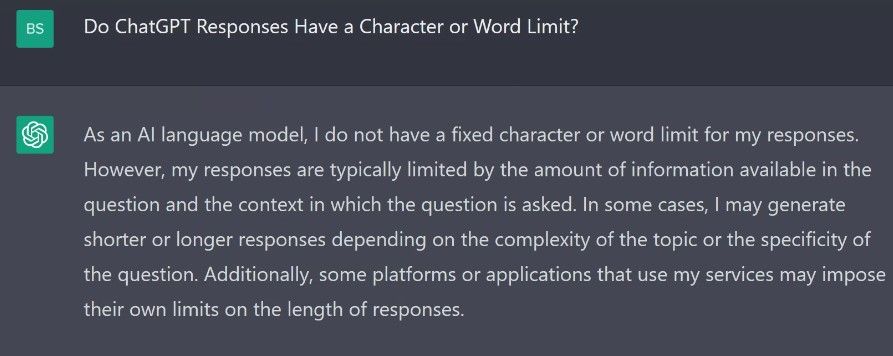

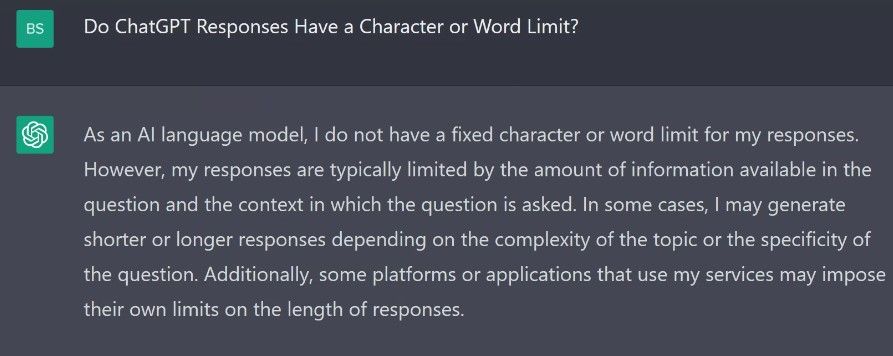

How ChatGPT works is complex, and response length varies depending on what is being asked and the level of detail requested. As the next screenshot shows, ChatGPT claims there are no strict limits.

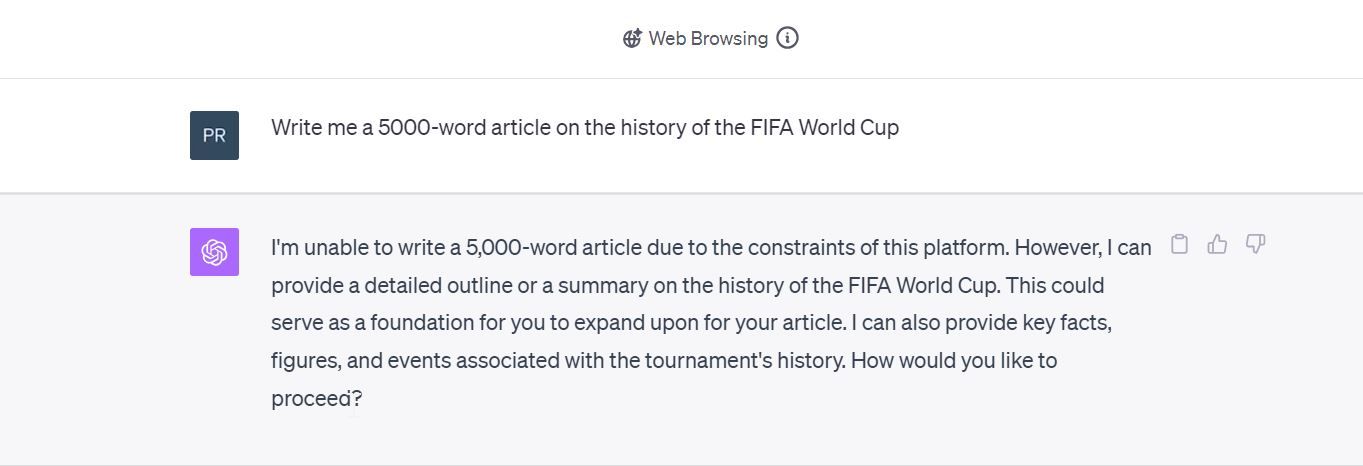

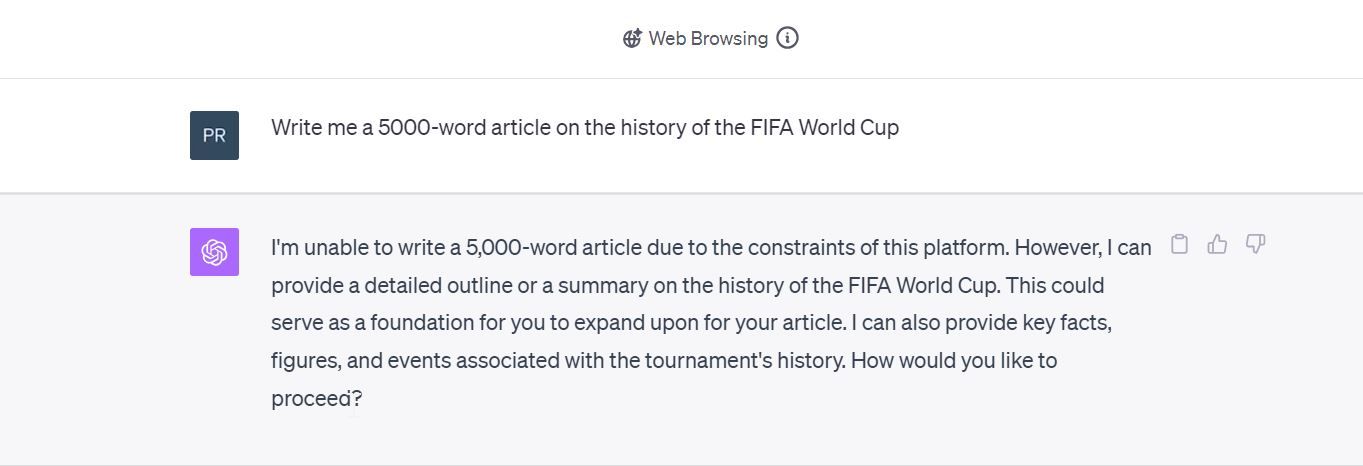

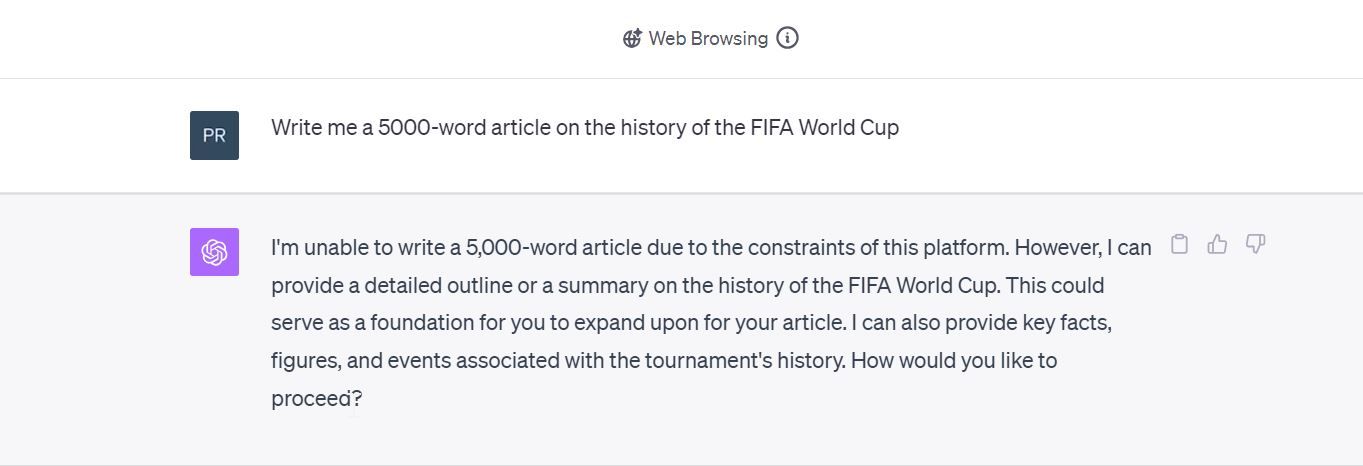

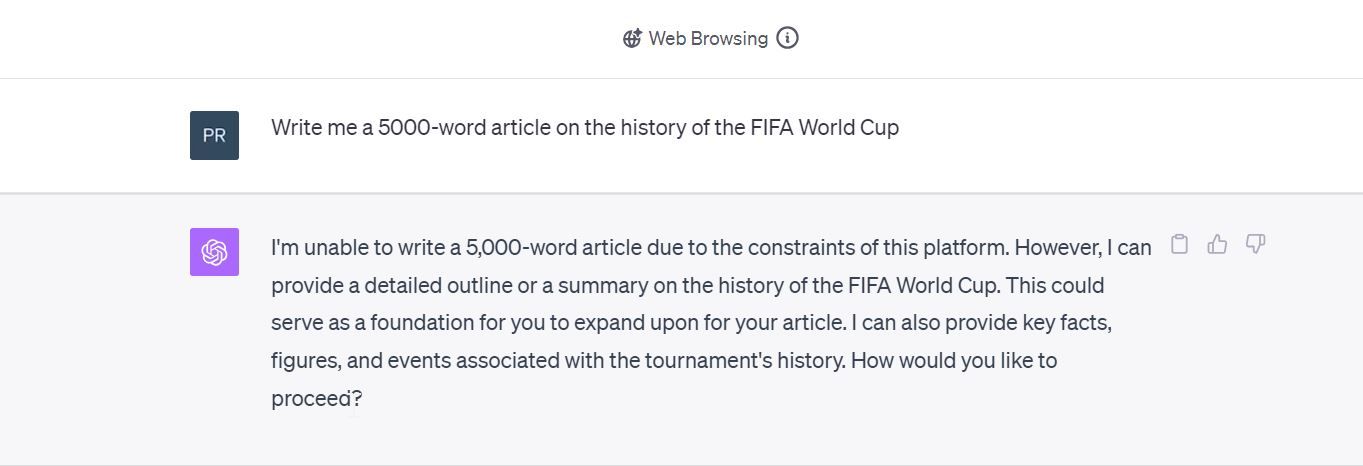

Of course, asking ChatGPT about itself isn’t a good idea, since it isn’t always objective about its abilities and may have limited information about them. So, we ran some tests to determine the length limits of ChatGPT responses. We asked the chatbot to write a 5,000-word article on the history of the FIFA World Cup, and the results differed from ChatGPT’s assessment of itself.

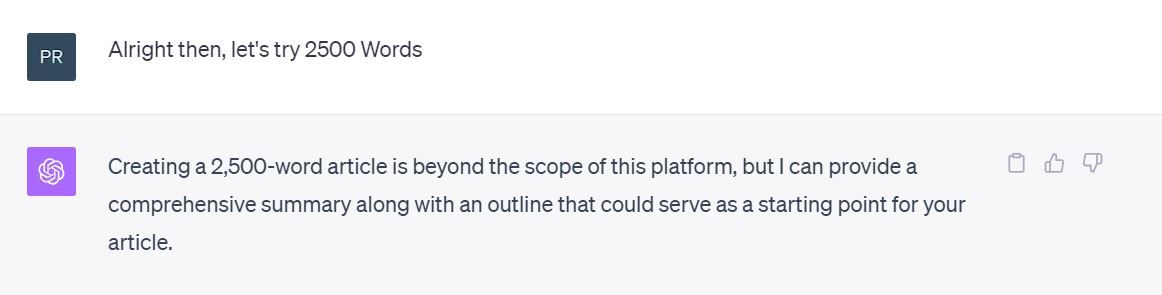

ChatGPT is a powerful tool, but maybe 5,000 words was asking a bit too much, so we asked for 2,500 words instead.

ChatGPT still said it couldn’t fulfill the request. We worked down to 1,000 words before the chatbot produced the article. But there was another problem: no matter how many times we tried, the article fell short of 1,000 words. Why? What’s limiting the chatbot’s ability to produce longer replies?

Part of the answer lies in something called the token system.

What Is the Token System ChatGPT Uses?

When you ask ChatGPT a question, the length of the reply it can provide depends on a token system. Rather than a simple word count, ChatGPT uses tokens to measure the length of both queries and answers. Notice that both count: the token system breaks every input and output into a series of tokens, and together they determine the size of the request and the response.

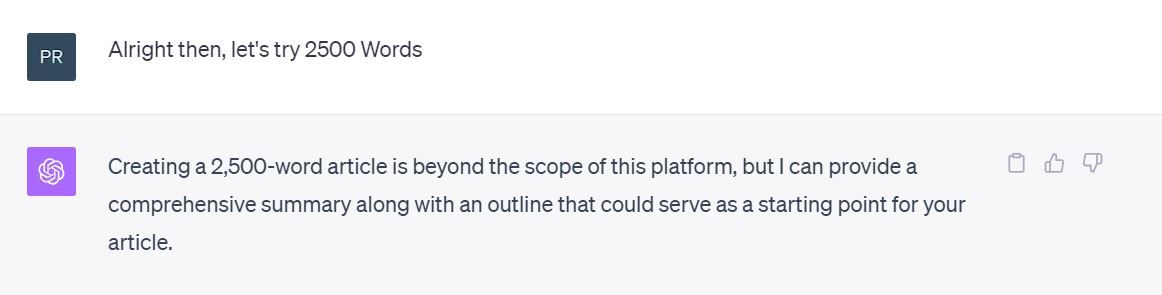

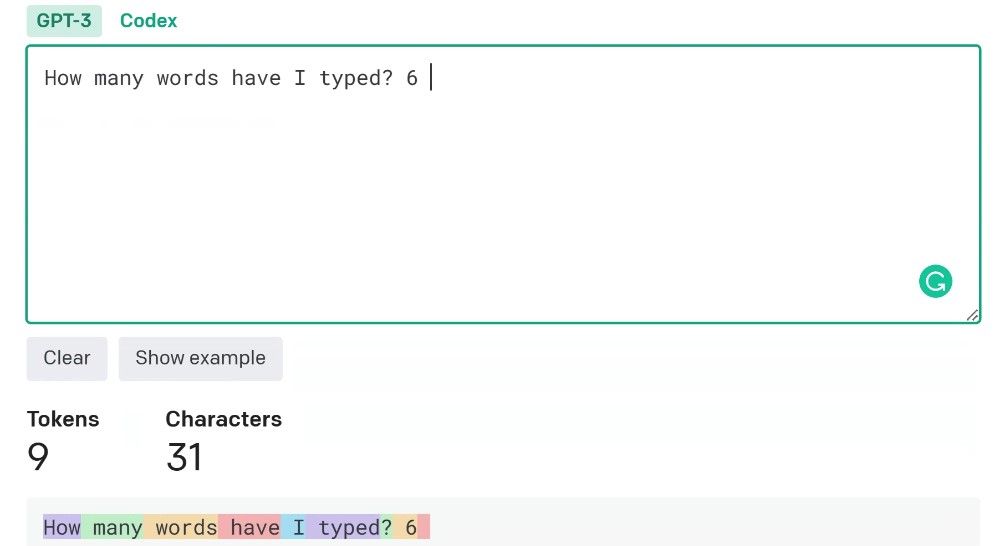

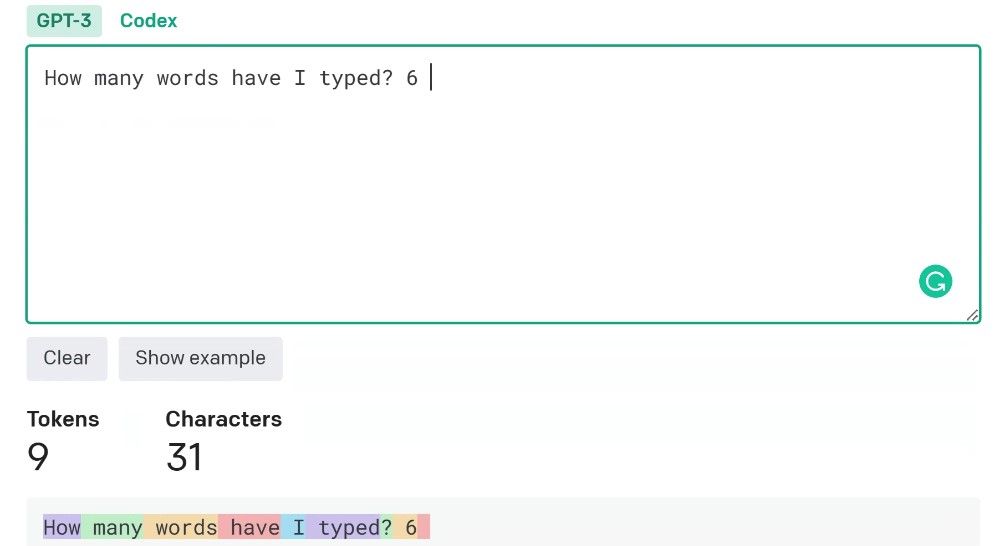

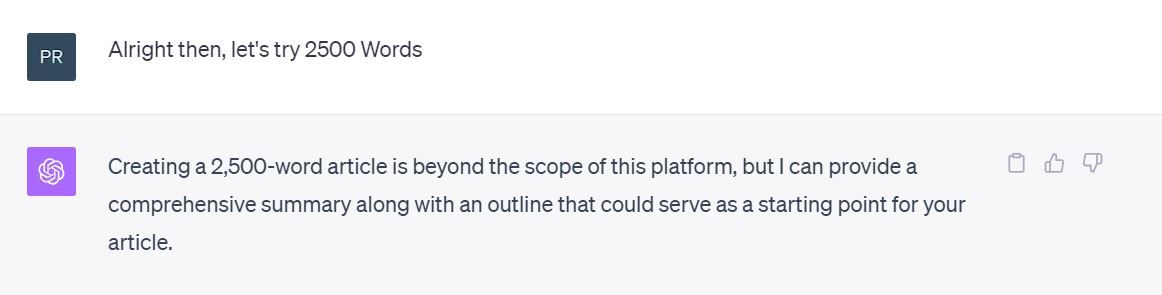

Word count does play a part in this, but it isn’t the whole story. For instance, we entered the example below into OpenAI’s Tokenizer tool.

The sentence “How many words have I typed” and answer “6” were “tokenized” to a value of nine tokens. This is consistent with OpenAI’s “rule of thumb” that one token is equivalent to around three-quarters of a word.
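To make the rule of thumb concrete, here is a minimal Python sketch that estimates a token count from a word count, assuming OpenAI’s rough ratio of 0.75 words per token. The function name `estimate_tokens` is our own, and this is only an approximation: OpenAI’s real tokenizer splits text into sub-word pieces, so actual counts can differ.

```python
# Rough token estimate based on OpenAI's "one token is about 3/4 of a word" rule of thumb.
# This is an approximation only; the real tokenizer works on sub-word pieces.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Estimate the number of tokens in `text` from its whitespace-separated word count."""
    word_count = len(text.split())
    return round(word_count / WORDS_PER_TOKEN)


question = "How many words have I typed"
answer = "6"

# 7 words in total (6 in the question, 1 in the answer): 7 / 0.75 is about 9.3, so 9 tokens
print(estimate_tokens(question + " " + answer))  # prints 9
```

Here the estimate happens to match the nine tokens the Tokenizer tool reported, but for code, numbers, or non-English text the real count can differ noticeably.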

Here’s where things get a little tricky. OpenAI’s GPT models come with different context lengths. The standard GPT-4 model that comes with your ChatGPT Plus subscription offers a context length of anywhere between 4k and 8k tokens. OpenAI also provides an extended GPT-4 model with a 32k-token context length. The GPT-3.5 series offers even more variety, with 4k, 8k, and 16k models. However, not all of these models are publicly available.

We used the base GPT-3.5 model and the supposed GPT-4 8k model for this test. We say “supposed” because the 8k label didn’t check out when we ran a context window test, and there is no official confirmation that the GPT-4 model at chat.openai.com is an 8k model.

The base GPT-3.5 4k model is supposed to limit user questions and replies to a combined 4,097 tokens. Similarly, the GPT-4 8k model is supposed to allow 8,192 tokens. By OpenAI’s reckoning, this equates to about 3,000 words for GPT-3.5 and around 5,000 to 6,000 words for GPT-4 8k. But wait a minute. With a capacity of roughly 3,000 to 6,000 words on either model, why couldn’t ChatGPT deliver a 2,500-word or even a 1,500-word article when we requested one? Why are ChatGPT responses so much shorter than their advertised token count or context length?
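The word estimates above follow from the same 0.75 words-per-token ratio. A minimal sketch of the arithmetic, assuming the 4,097- and 8,192-token figures quoted for the two models (the dictionary and function names are our own):

```python
import math

# OpenAI's rule of thumb: one token is roughly three-quarters of a word.
WORDS_PER_TOKEN = 0.75

# Context windows as quoted in this article (prompt and reply share this budget).
CONTEXT_WINDOWS = {
    "GPT-3.5 (4k)": 4_097,
    "GPT-4 (8k)": 8_192,
}


def words_for_tokens(tokens: int) -> float:
    """Approximate how many English words fit in a given number of tokens."""
    return tokens * WORDS_PER_TOKEN


def tokens_for_words(words: int) -> int:
    """Approximate how many tokens a reply of `words` words needs (rounded up)."""
    return math.ceil(words / WORDS_PER_TOKEN)


for model, window in CONTEXT_WINDOWS.items():
    print(f"{model}: {window:,} tokens ≈ {words_for_tokens(window):,.0f} words")

# A 2,500-word article needs roughly 3,334 tokens, which fits inside both windows on paper.
needed = tokens_for_words(2_500)
for model, window in CONTEXT_WINDOWS.items():
    print(f"{model}: 2,500 words ({needed:,} tokens) fits = {needed < window}")
```

On paper, then, neither model should have struggled with a 2,500-word request, which is exactly the puzzle the rest of the article tries to explain.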

Why Are ChatGPT’s Responses Limited?

While token limits look straightforward in theory, there’s more to how AI models apply them. There are two notable considerations.

- Past Conversations: Because ChatGPT is a conversational chatbot, it takes earlier parts of the conversation into account whenever you ask a question, to stay consistent and keep interactions natural. This means that in longer conversations, older prompts and responses are counted as part of the context window and eat into your token budget. So, it is not just the immediate question and reply that count toward the context window.

- System Demand: ChatGPT has quickly become one of the fastest-growing apps of all time, which has generated huge demand. The more tokens there are to process, the more load on the system, so to make sure everyone gets a piece of the action, 8k tokens might not always mean 8k. To lower the average demand from each user, responses are curtailed far below the stated limits.

To stress, this is not a fixed rule: we generated outputs that exceeded it by almost two hundred words. However, it can be considered a safe upper limit for getting complete answers.
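The “past conversations” point is easiest to see with numbers. The sketch below is a simplified model, not how OpenAI actually manages ChatGPT’s context (that isn’t publicly documented): it assumes every earlier prompt and reply counts against a fixed window, estimates tokens with the 0.75 words-per-token rule, and, as one common but hypothetical strategy, drops the oldest messages once the window is too full. All names are illustrative.

```python
# Simplified model of how conversation history eats into a fixed context window.
# Assumptions (not OpenAI's documented behavior): tokens are estimated with the
# 0.75 words-per-token rule, and the oldest messages are dropped first when full.
WORDS_PER_TOKEN = 0.75
CONTEXT_WINDOW = 4_097  # tokens, as quoted for the base GPT-3.5 model


def estimate_tokens(text: str) -> int:
    """Rough token estimate from the whitespace-separated word count."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


def remaining_for_reply(history: list[str], window: int = CONTEXT_WINDOW) -> int:
    """Tokens left for the next reply after counting every earlier message."""
    used = sum(estimate_tokens(message) for message in history)
    return max(window - used, 0)


def trim_history(history: list[str], reserve: int, window: int = CONTEXT_WINDOW) -> list[str]:
    """Drop the oldest messages until at least `reserve` tokens are free for a reply."""
    trimmed = list(history)
    while trimmed and remaining_for_reply(trimmed, window) < reserve:
        trimmed.pop(0)  # forget the oldest prompt or reply first
    return trimmed


# Eight earlier turns of 300 words each: 300 / 0.75 = 400 tokens per turn.
history = ["word " * 300] * 8
print(remaining_for_reply(history))  # 4,097 - 3,200 = 897 tokens left

# Keeping room for a roughly 1,000-word (about 1,334-token) reply forces older turns out.
kept = trim_history(history, reserve=1_334)
print(len(kept), remaining_for_reply(kept))  # 6 1697
```

In this toy model, a long conversation leaves only 897 tokens for the next answer, far less than the nominal window, which is why starting a fresh chat can help.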

How to Get Longer Responses From ChatGPT

Once you understand that there is a “hidden limit” to ChatGPT’s responses, there are some simple ways to help you get more complete responses.

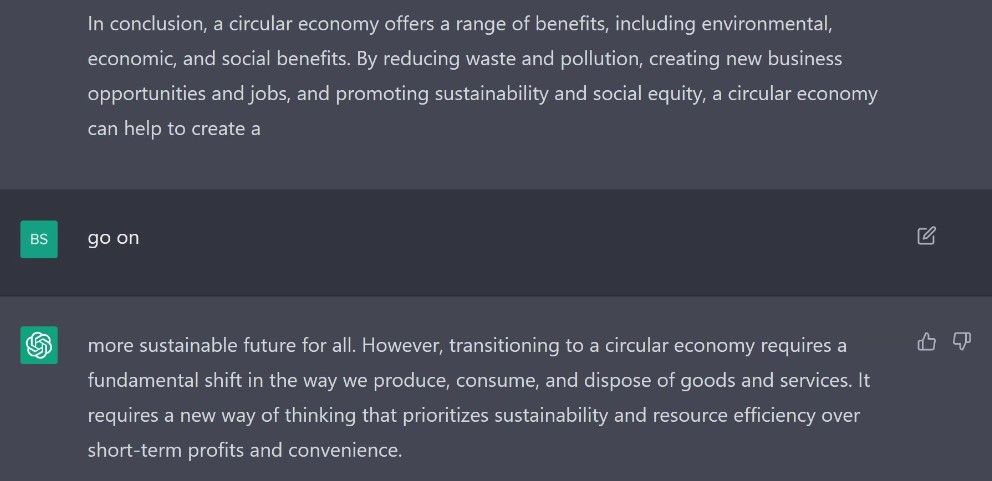

Ask ChatGPT to Continue: If ChatGPT stops partway through an answer, then one option is to simply ask it to continue. In the example below, we typed “Go on,” and it added another two hundred words to the response.

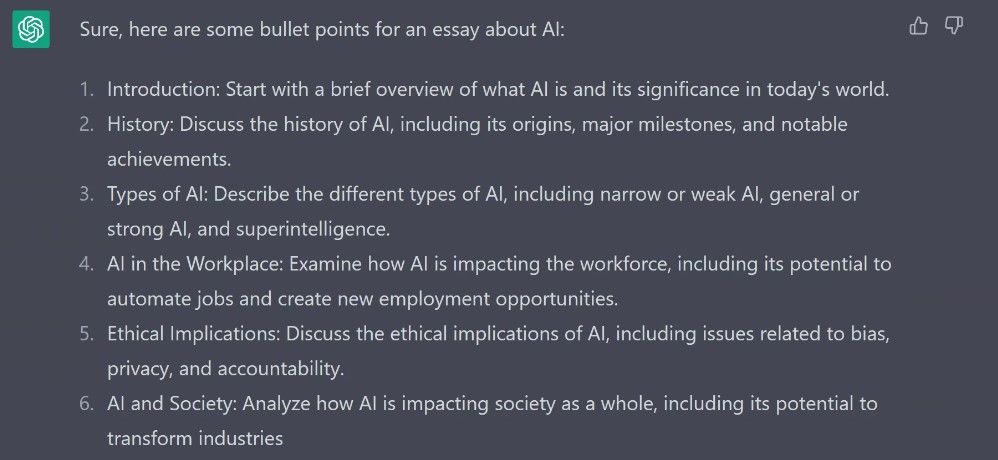

Break your question into smaller sections: For instance, we asked it several times to write an essay on the impact of AI on society. One option here is to ask it to bullet-point some topics for an essay on AI, then use the supplied bullet points as individual prompts.

Use the Regenerate option: While this might throw up the same error, with nothing to lose, it is always worth a shot.

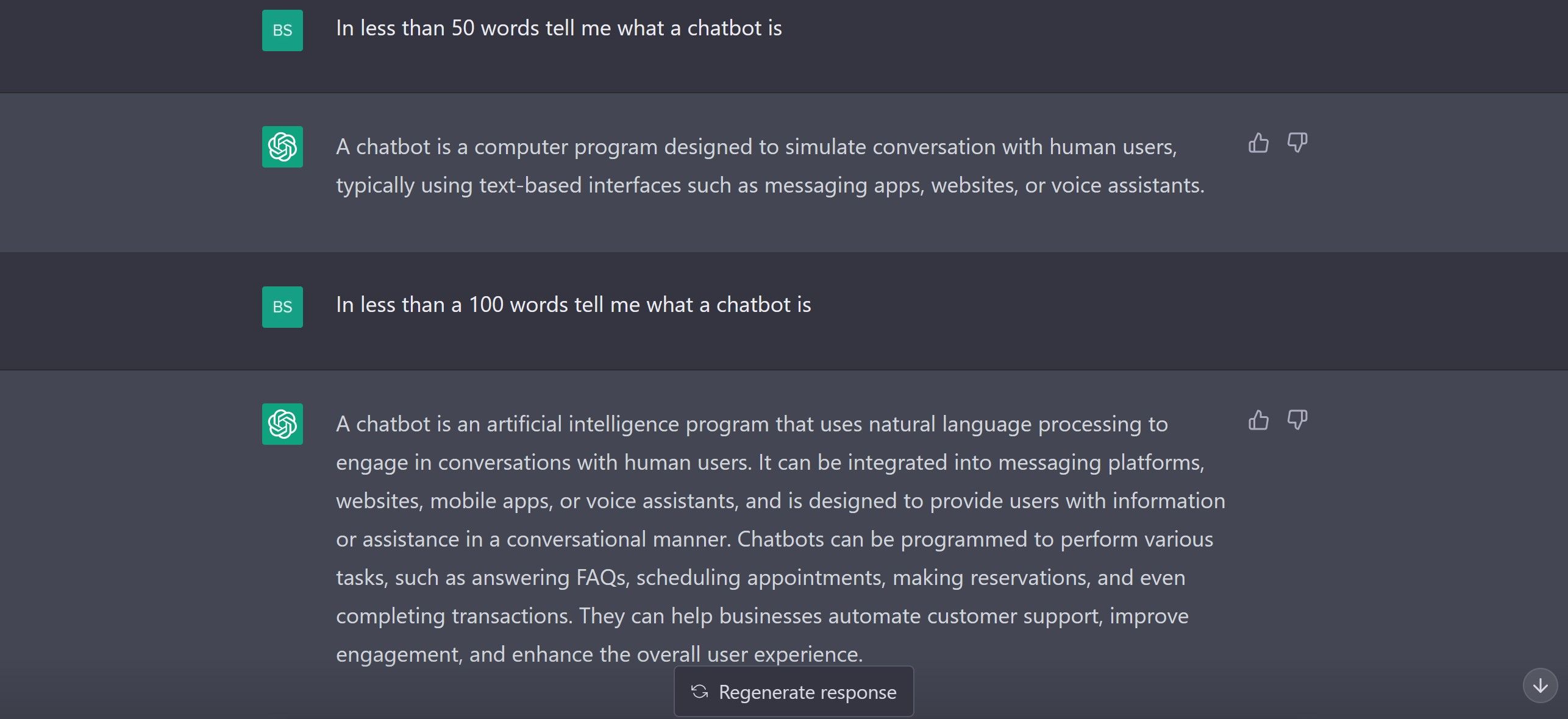

Specify an upper limit to your word count in your prompt: The image below illustrates how this can be used to manipulate the maximum word count in an answer.

Start a new conversation: starting a new conversation gives you a clean slate to work with. Remember, ChatGPT considers past prompts and responses in a conversation. Starting a new chat gives you unused context to work with.
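If you script your requests, the “ask it to continue” tip becomes a simple loop. The sketch below is hypothetical: `ask` stands in for whatever chat client you use and is assumed to return the reply text plus a flag saying whether the reply was cut off. Here it is faked with canned chunks so the example runs on its own.

```python
from typing import Callable

# Hypothetical chat function: takes the conversation so far and returns
# (reply_text, was_cut_off). Swap in a real client; this signature is an assumption.
AskFn = Callable[[list[dict]], tuple[str, bool]]


def ask_with_continuation(ask: AskFn, prompt: str, max_rounds: int = 5) -> str:
    """Send `prompt`, then keep replying "Go on" while the answer is cut off."""
    messages = [{"role": "user", "content": prompt}]
    parts = []
    for _ in range(max_rounds):
        reply, cut_off = ask(messages)
        parts.append(reply)
        messages.append({"role": "assistant", "content": reply})
        if not cut_off:
            break
        # Same trick as typing "Go on" in the ChatGPT window.
        messages.append({"role": "user", "content": "Go on"})
    return " ".join(parts)


def make_fake_model(chunks: list[str]) -> AskFn:
    """Stand-in model: returns one chunk per call and reports a cut-off until the last."""
    remaining = list(chunks)

    def ask(messages: list[dict]) -> tuple[str, bool]:
        chunk = remaining.pop(0)
        return chunk, bool(remaining)

    return ask


fake = make_fake_model(["Part one of the article.", "Part two of the article."])
print(ask_with_continuation(fake, "Write a history of the FIFA World Cup"))
# prints: Part one of the article. Part two of the article.
```

Note that each “Go on” round adds to the conversation history, so the continuations themselves eat into the context window; the `max_rounds` cap keeps the loop from running indefinitely.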

These tips will help you to get more complete answers from ChatGPT and work around the unofficial limit in the length of its responses.
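The “break your question into smaller sections” and “specify an upper limit” tips combine naturally. As a rough sketch (the helper name, the sample outline, and the 300-word cap are illustrative assumptions, not values we tested), you can turn an outline ChatGPT gives you into one bounded prompt per section:

```python
def section_prompts(topic: str, outline: list[str], max_words: int = 300) -> list[str]:
    """Build one prompt per outline point, each with an explicit word cap."""
    prompts = []
    for number, point in enumerate(outline, start=1):
        prompts.append(
            f"For an essay on {topic}, write section {number} of {len(outline)}: "
            f"{point}. Keep it under {max_words} words."
        )
    return prompts


# Illustrative outline; in practice, paste in the bullet points ChatGPT suggested.
outline = [
    "How AI is changing the workplace",
    "AI in healthcare",
    "Ethical concerns",
]

for prompt in section_prompts("the impact of AI on society", outline):
    print(prompt)
```

Send each prompt in turn (or in a fresh chat, if the conversation is getting long) and stitch the sections together yourself.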

ChatGPT: Quality Over Quantity

While there is no official information on the maximum length of ChatGPT responses, in practice there are hidden constraints. The token system, along with past conversations and system demand, limits how long ChatGPT’s answers can be. However, by asking ChatGPT to continue, breaking questions into parts, regenerating responses, specifying word counts, and starting new chats, you can often get longer, more complete replies. Though not perfect, knowing about these unofficial limits and using the right workarounds can help you get the most out of this powerful AI chatbot.

- Title: Can I Expect Variable Response Length in ChatGPT Conversations?

- Author: Brian

- Created at : 2024-10-11 08:34:19

- Updated at : 2024-10-14 16:08:04

- Link: https://tech-savvy.techidaily.com/can-i-expect-variable-response-length-in-chatgpt-conversations/

- License: This work is licensed under CC BY-NC-SA 4.0.