Charting the Boundaries of ChatGPT's Token Count

Charting the Boundaries of ChatGPT’s Token Count

ChatGPT has taken the technological world by storm, and there is no shortage of advancements and updates. But despite the use of advanced technology, there are quite a few limiting factors within the current version.

MUO VIDEO OF THE DAY

SCROLL TO CONTINUE WITH CONTENT

One such factor is the token system, which continues to break the evolution cycle, bringing the app’s utility to its knees. If you use ChatGPT, you might want to know about ChatGPT’s tokens and what to do when you run out of tokens.

Let’s take a step back and give you an insight into all there is to know about the app’s underlying working mechanisms.

What’s a ChatGPT Token?

If you go by the app’s façade, all you see is a computer language that obeys your commands and gives you the answers you seek. However, the backend is just lots of code, learning every minute, and getting up to speed with the questions it answers.

ChatGPT converts each word into a legible token whenever you ask a question. To break it down further, tokens are text fragments, and each programming language uses a different set of token values to understand the requirements.

Since computers don’t understand text values directly, they break them down into a combination of numbers, often called embeddings. You can even consider embeddings like Python lists , which resemble a list of related numbers, such as [1.1,2.1,3.1,4.1…n].

Once ChatGPT takes the initial input, it tries to predict the next possible input based on the previous information. It picks the entire list of prior tokens and, using its coding synthesis, tries to preempt the subsequent input by the user. It utilizes and predicts one token at a time to make it simpler for the language to comprehend the usage.

To enhance predictions and improve accuracy, it picks up the entire list of embeddings and passes it through transformer layers to establish associations between words. For example, when you ask a question like “Who’s Thomas Edison?”, ChatGPT picks up the most valuable words in the list: Who and Edison.

Transformer layers have their role to play in the entire process. These layers are a form of neural network architecture trained to pick up the most relevant keywords from a string of words. However, the training process isn’t as simple as it sounds, for it takes a long time to train transformer layers on gigabytes of data.

Even though ChatGPT predicts only one token at a time, its auto-regressive technology is programmed to predict and feed back into the primary model to release the output. The model is tuned to run only once for every token, so the result is printed one word at a time. The output stops automatically when the application encounters a stop token command.

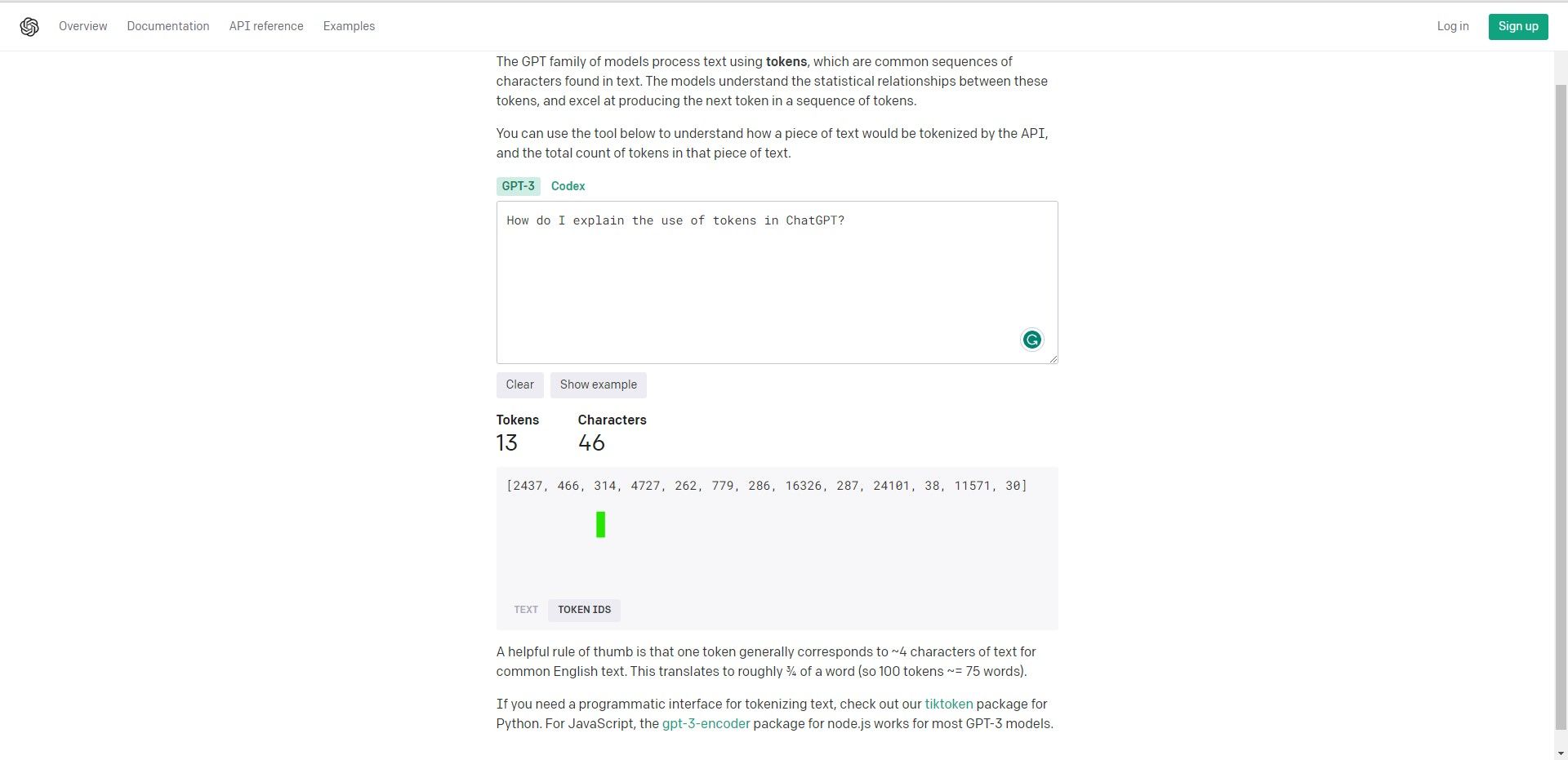

For example, if you look at the token calculator on the ChatGPT website, you can calculate your token usage basis your questions.

Let’s enter some sample text, such as:

How do I explain the use of tokens in ChatGPT?

As per the calculator, there are 46 characters in this string, which will consume 13 tokens. If you break it down into Token IDs, it looks something like this:

[2437, 466, 314, 4727, 262, 779, 286, 16326, 287, 24101, 38, 11571, 30]

dotConnect for Oracle is an ADO.NET data provider for Oracle with Entity Framework Support.

dotConnect for Oracle is an ADO.NET data provider for Oracle with Entity Framework Support.

As per the OpenAI token calculator :

A helpful rule of thumb is that one token generally corresponds to ~4 text characters for common English text. This translates to roughly ¾ of a word (100 tokens ~= 75 words).

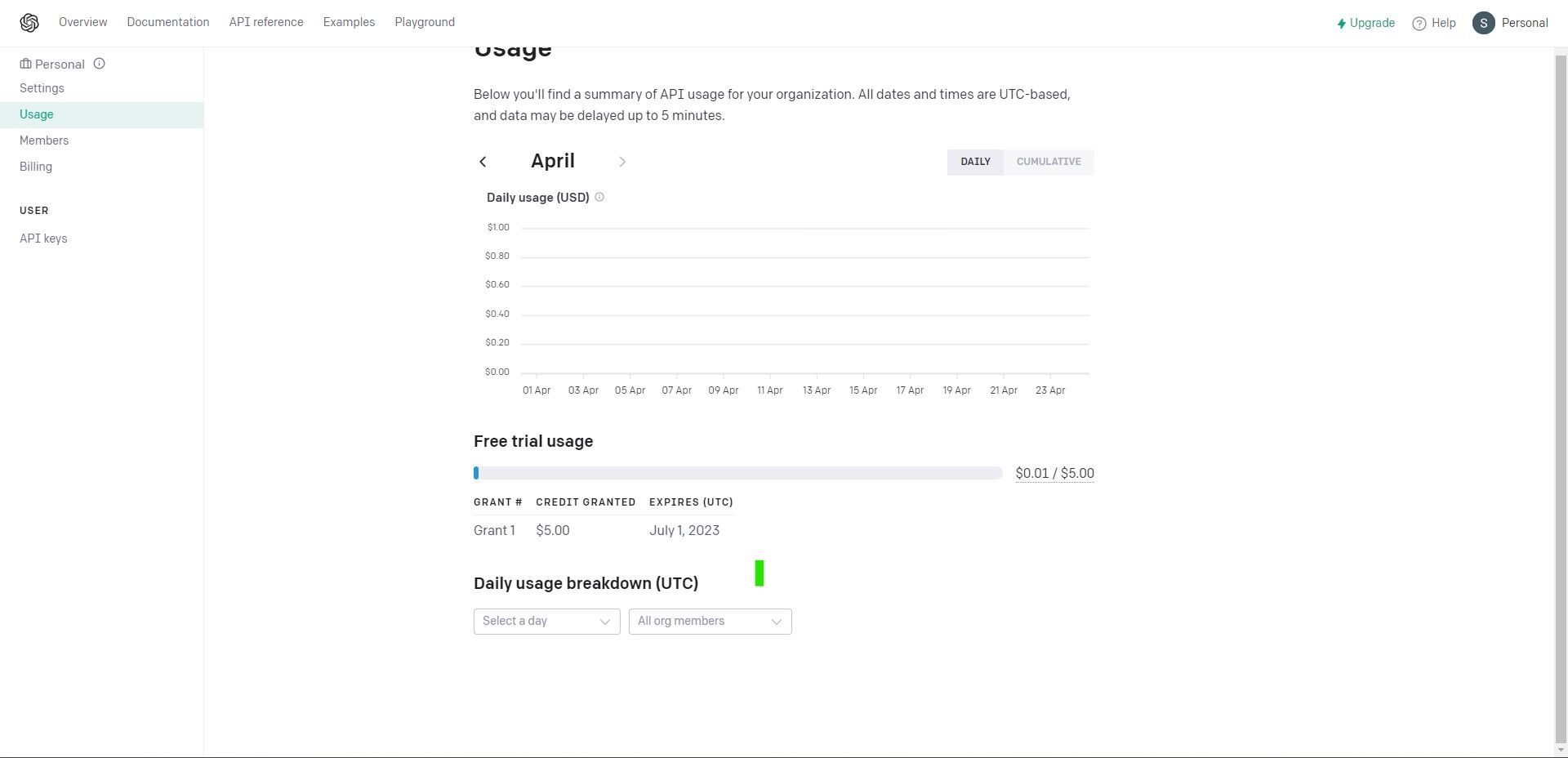

Free vs. Paid Tokens

To give you a flavor of what’s what within the ChatGPT application, OpenAI offers you a free limited token subscription. If you want to experiment with the ChatGPT API, use the free $5 credit, which is valid for three months. Once the limit is exhausted (or the trial period is up), you can pay-as-you-go, which increases the maximum quota to $120.

ChatGPT Plus: Is the Paid Subscription Worth It?

To take it up a notch, you can even sign up for the ChatGPT Plus Offer , which sets you back by $20 a month. Given the extensive usage and popularity of the entire AI model, here are the top features of the paid subscription model:

- Access to ChatGPT, even when the website’s experiencing downtime due to an influx of users

- Improved response rates

- First-hand access to new features and releases

If you feel these features are entirely worth it and up your alley, you can enroll for the paid subscription and benefit from them immediately.

ChatGPT Token Pricing Structure

Project Manager - Asset Browser for 3Ds Max

Project Manager - Asset Browser for 3Ds Max

There are a few different application models available, which you can choose based on your requirements:

| Model | Input Price for 1000 tokens (prompt) | Output Price for 1000 tokens (completion) |

|---|---|---|

| Ada | $0.0004 | $0.0016 |

| Babbage | $0.0005 | $0.0024 |

| Curie | $0.0020 | $0.0120 |

| DaVinci | $0.0200 | $0.1200 |

| ChatGPT | $0.0020 | $0.0020 |

| Chat 4K context | $0.0015 | $0.002 |

| GPT-4 8k context | $0.03 | $0.06 |

| Chat 16K context | $0.003 | $0.004 |

| GPT-4 32k context | $0.06 | $0.12 |

Ada is the fastest, while DaVinci is the most powerful model from this list of models. The prompt is the question, while the completion deals with the answers.

Maximum Token Limits

Each model allows you to set the maximum number of tokens within each query. This method controls the maximum number of tokens the application generates in a single call, limiting the output. The max_token feature is quite useful, especially when you want to control the length of your output while avoiding overusing your token usage and credits. The default length is fixed at 2,048 tokens, while the maximum can be set at 4,096 tokens.

Restricting the token usage can result in short answers, which might limit the output and mar your usage experience.

ChatGPT-3 has an upper limit of 4,096; however, with the introduction of ChatGPT-4, the token limits are increased as follows:

| Model | Token Limit |

|---|---|

| Ada | 2048 |

| Babbage | 2048 |

| Curie | 2048 |

| DaVinci | 4096 |

| ChatGPT | 4096 |

| GPT-4 8k context | 8192 |

| GPT-4 32k context | 32768 |

If your output is truncated, you must increase the specified maximum limit from your dashboard. Remember, the sum of your prompt and maximum tokens should always be less than equal to the model’s maximum token limit.

For example, within the ChatGPT model, the prompt_tokens + max_tokens <= 4096 tokens**.**

Learn to Control Your ChatGPT Prompts for Best Results

No matter how you use the AI-driven application, chances are you will have to devise ways to play smartly with your questions and phrases. Depending on your usage patterns, you will likely use ChatGPT extensively for every task.

To get the best results, you must learn how to work with the token methodology and enhance your skills to ensure your usage limits remain intact while giving you the best possible answers.

SCROLL TO CONTINUE WITH CONTENT

One such factor is the token system, which continues to break the evolution cycle, bringing the app’s utility to its knees. If you use ChatGPT, you might want to know about ChatGPT’s tokens and what to do when you run out of tokens.

Let’s take a step back and give you an insight into all there is to know about the app’s underlying working mechanisms.

- Title: Charting the Boundaries of ChatGPT's Token Count

- Author: Brian

- Created at : 2024-08-10 02:17:49

- Updated at : 2024-08-11 02:17:49

- Link: https://tech-savvy.techidaily.com/charting-the-boundaries-of-chatgpts-token-count/

- License: This work is licensed under CC BY-NC-SA 4.0.

vMix HD - Software based live production. vMix HD includes everything in vMix Basic HD plus 1000 inputs, Video List, 4 Overlay Channels, and 1 vMix Call

vMix HD - Software based live production. vMix HD includes everything in vMix Basic HD plus 1000 inputs, Video List, 4 Overlay Channels, and 1 vMix Call SwifDoo PDF 2-Year Plan

SwifDoo PDF 2-Year Plan