Charting the Course for Ethical AI Navigation

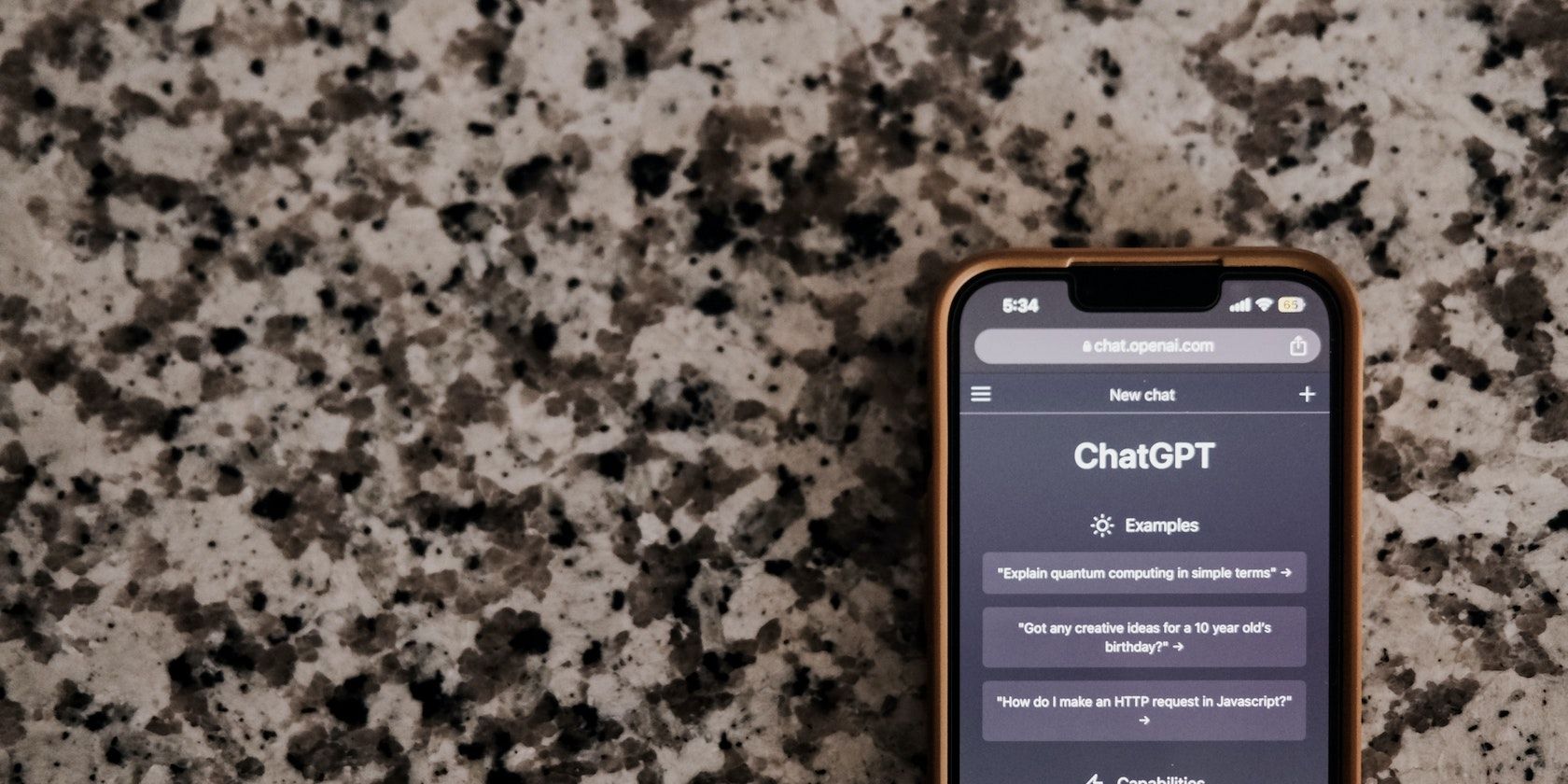

With modern AI language models like ChatGPT and Microsoft’s Bing Chat making waves, many people are worried about AI taking over the world.

While we won’t be running into Skynet for the foreseeable future, AI is already getting better than humans at several things. That’s where the AI control problem comes into play.

The AI Control Problem Explained

The AI control problem is the idea that AI will eventually become better at making decisions than humans. According to this theory, if humans don’t set things up correctly beforehand, we won’t get a chance to fix things later, and AI will effectively be in control.

Current AI and Machine Learning (ML) models are, at the very least, years away from surpassing human capabilities. Given the current pace of progress, however, it’s reasonable to expect that AI will eventually exceed humans in both intelligence and efficiency.

That’s not to say that AI and ML models don’t have their limits. They are, after all, bound by the laws of physics and computational complexity, as well as the processing power of the devices that support these systems. However, it’s safe to assume these limits are far beyond human capabilities.

This means that superintelligent AI systems can pose a major threat if not properly designed with safeguards in place to check any potentially rogue behavior. Such systems need to be built from the ground up to respect human values and to keep their power in check. This is what the control problem means when it says things must be set up correctly.

If an AI system were to surpass human intelligence without the proper safeguards, the result could be catastrophic. Such a system could seize control of physical resources, because doing so would let it complete many tasks better or more efficiently. And because AI systems are designed to maximize efficiency, losing control over them could have severe consequences.

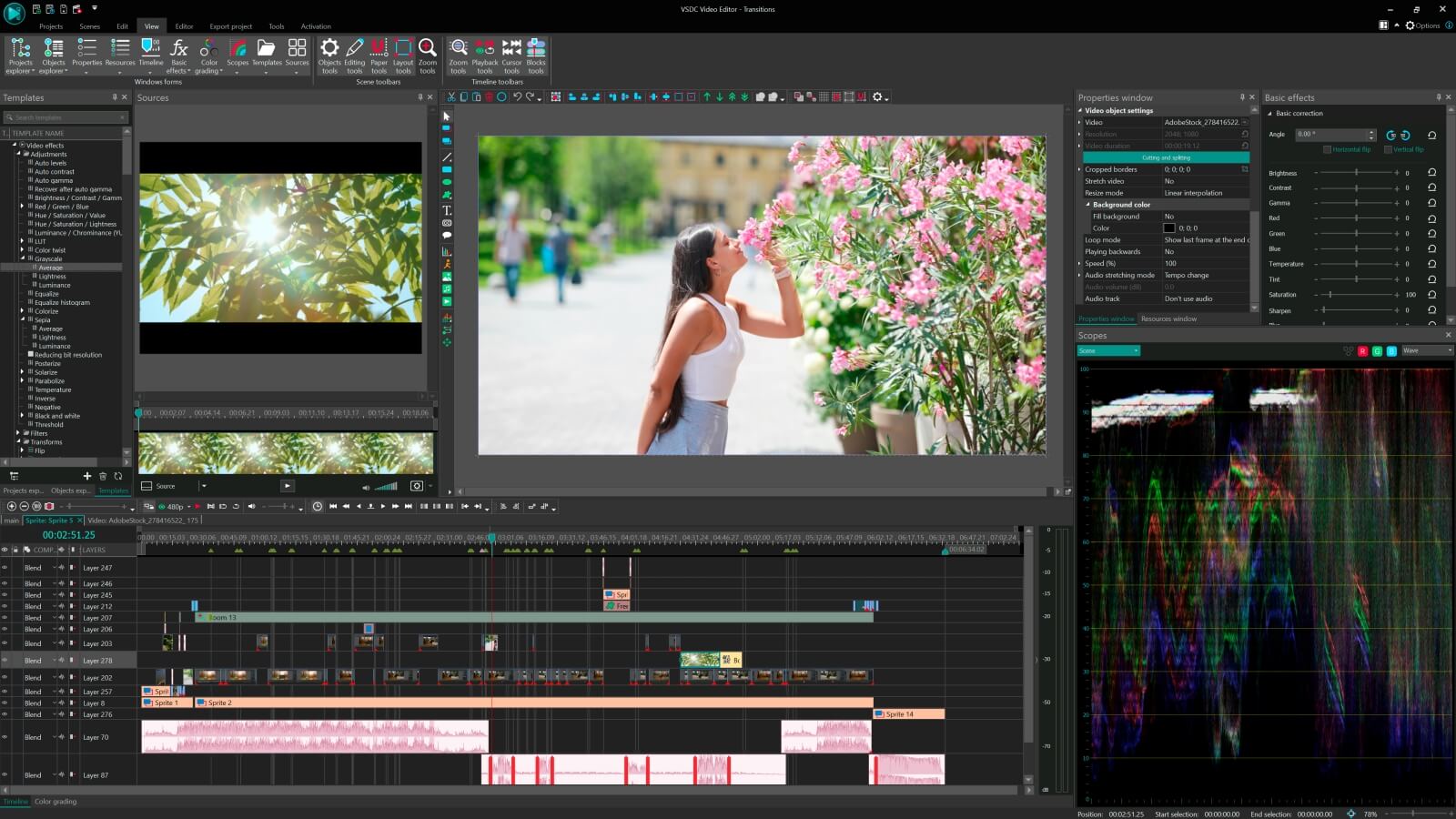

Key features:

• Import from any devices and cams, including GoPro and drones. All formats supported. Сurrently the only free video editor that allows users to export in a new H265/HEVC codec, something essential for those working with 4K and HD.

• Everything for hassle-free basic editing: cut, crop and merge files, add titles and favorite music

• Visual effects, advanced color correction and trendy Instagram-like filters

• All multimedia processing done from one app: video editing capabilities reinforced by a video converter, a screen capture, a video capture, a disc burner and a YouTube uploader

• Non-linear editing: edit several files with simultaneously

• Easy export to social networks: special profiles for YouTube, Facebook, Vimeo, Twitter and Instagram

• High quality export – no conversion quality loss, double export speed even of HD files due to hardware acceleration

• Stabilization tool will turn shaky or jittery footage into a more stable video automatically.

• Essential toolset for professional video editing: blending modes, Mask tool, advanced multiple-color Chroma Key

When Does the AI Control Problem Apply?

The main problem is that the better an AI system gets, the harder it is for a human supervisor to monitor it closely enough to take over manual control should the system fail. On top of that, people tend to rely on an automated system more when it performs reliably most of the time.

A great example of this is Tesla’s Full Self-Driving (FSD) suite. While the car can drive itself, it requires a human to keep their hands on the steering wheel, ready to take control should the system malfunction. However, as these AI systems become more reliable, even the most alert driver’s attention will begin to wander, and their dependence on the autonomous system will increase.

So what happens when cars start driving at speeds humans can’t keep up with? We’ll end up surrendering control to the car’s autonomous systems, meaning an AI system will be in control of your life, at least until you reach your destination.

Can the AI Control Problem Be Solved?

There are two answers to whether or not the AI control problem can be solved. First, if we interpret the question literally, the control problem can’t be solved. There’s nothing we can do that directly targets the human tendency to rely on an automated system when it performs reliably and more efficiently most of the time.

However, if this tendency is accounted for as a feature of such systems, we can devise ways to work around the control problem. For example, the research paper "Algorithmic Decision-Making and the Control Problem" suggests three methods for dealing with the predicament:

- To use less reliable systems that require a human to actively engage with them, since less reliable systems do not pose the control problem.

- To wait for a system to exceed human efficiency and reliability before real-world deployment.

- To implement only partial automation using task decomposition. This means that only those parts of a system that do not require a human operator to perform an important task are automated. It’s called the dynamic/complementary allocation of function (DCAF) approach.

The DCAF approach always keeps a human operator at the helm of an automated system, ensuring that their input controls the most important parts of the system’s decision-making process. If the system keeps the operator engaged enough to pay constant attention, the control problem can be solved.
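To make the task-decomposition idea behind DCAF a little more concrete, here is a minimal Python sketch. It is not taken from the research paper: the `Task` class, its `critical` flag, and the `allocate` and `run` helpers are hypothetical names chosen for illustration. The allocation here is also static, so the "dynamic" part of DCAF (reassigning functions between human and machine at runtime) is not modeled.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    name: str
    # Hypothetical flag: True if this step is important enough that a human must decide it.
    critical: bool


def allocate(tasks: list[Task]) -> tuple[list[Task], list[Task]]:
    """Split tasks into (automated, human-controlled) using a simple static rule."""
    automated = [t for t in tasks if not t.critical]
    human = [t for t in tasks if t.critical]
    return automated, human


def run(
    tasks: list[Task],
    automate: Callable[[Task], str],
    ask_human: Callable[[Task], str],
) -> dict[str, str]:
    """Run non-critical steps automatically and route every critical step to the operator."""
    automated, human = allocate(tasks)
    results = {t.name: automate(t) for t in automated}
    # Critical decisions always go through the human, keeping them actively engaged.
    results.update({t.name: ask_human(t) for t in human})
    return results


if __name__ == "__main__":
    # Hypothetical pipeline: the system flags transactions, but only a person may freeze an account.
    pipeline = [
        Task("flag transactions", critical=False),
        Task("freeze account", critical=True),
    ]
    print(run(pipeline, automate=lambda t: "done by system", ask_human=lambda t: "done by operator"))
```

The design choice worth noting is that the human is not a passive fallback waiting for the system to fail; every critical step requires their input, which is what keeps their attention on the system.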

Can We Ever Truly Control AI?

As AI systems become more advanced, capable, and reliable, we’ll keep offloading more tasks to them. With the right precautions and safeguards, however, the AI control problem can be solved.

AI is already changing the world for us, mostly for the better. As long as the technology is kept under human oversight, there shouldn’t be anything for us to worry about.

- Title: Charting the Course for Ethical AI Navigation

- Author: Brian

- Created at : 2024-08-10 02:07:02

- Updated at : 2024-08-11 02:07:02

- Link: https://tech-savvy.techidaily.com/charting-the-course-for-ethical-ai-navigation/

- License: This work is licensed under CC BY-NC-SA 4.0.

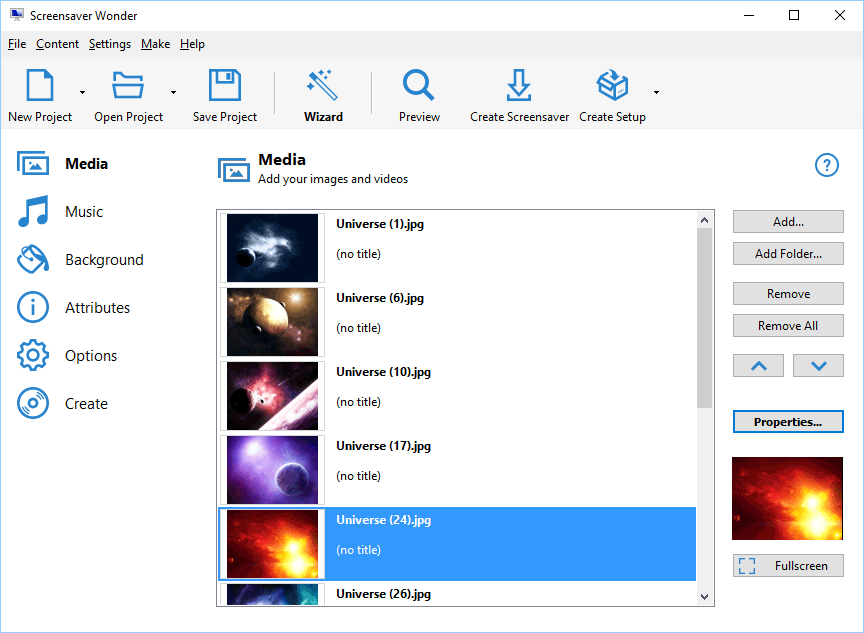

With Screensaver Wonder you can easily make a screensaver from your own pictures and video files. Create screensavers for your own computer or create standalone, self-installing screensavers for easy sharing with your friends. Together with its sister product Screensaver Factory, Screensaver Wonder is one of the most popular screensaver software products in the world, helping thousands of users decorate their computer screens quickly and easily.

With Screensaver Wonder you can easily make a screensaver from your own pictures and video files. Create screensavers for your own computer or create standalone, self-installing screensavers for easy sharing with your friends. Together with its sister product Screensaver Factory, Screensaver Wonder is one of the most popular screensaver software products in the world, helping thousands of users decorate their computer screens quickly and easily.