ChatGPT: Missing Self-Critique Clues

Following the launch of ChatGPT in November 2022, the phenomenal AI chatbot has emerged as one of the most trusted writing tools on the internet. It’s simple to use; describe what you need to be written, and ChatGPT prints it on screen in seconds.

However, in an era where AI-generated text is passed off as human-written and used to gain an unfair advantage, identifying AI content is very important. Yet ChatGPT cannot accurately spot AI content, even its own work. Why?

Is There a Difference Between AI Text and Human Writing?

A precondition for ChatGPT to spot its own writing or any AI-generated text is that there has to be a difference between it and human-written text. So, is there any significant difference between human-written text and AI-generated content? If there is, surely, a tool like ChatGPT should be able to discern it.

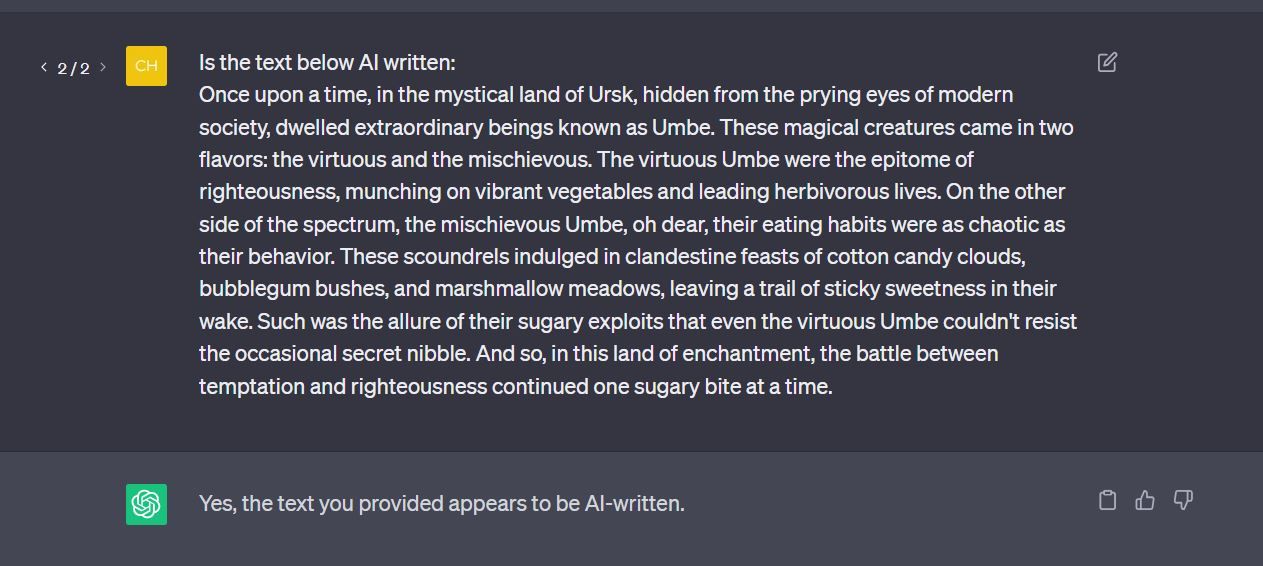

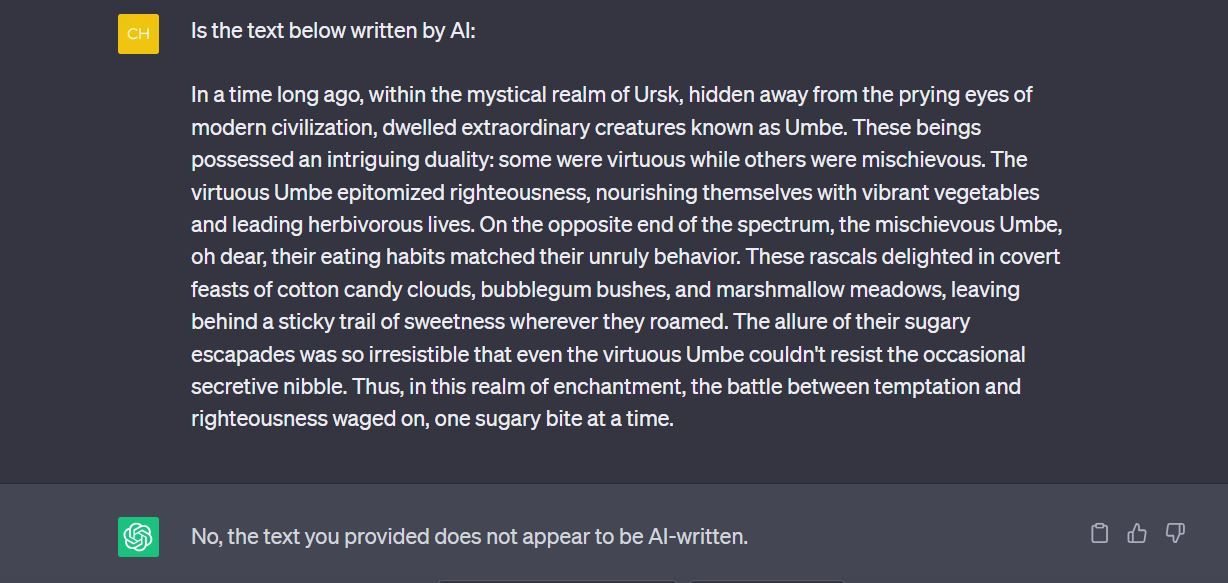

We wrote a short story without any input from any AI tool and then asked ChatGPT whether the story was AI-written content. ChatGPT confidently flagged it as an AI-generated text.

We then asked ChatGPT to generate a story, and in that same chat thread, we copy-pasted the generated text and asked ChatGPT whether the text was AI-created. ChatGPT’s response? A confident “No.”

ChatGPT failed at identifying human-written or AI-generated text in both cases. So how come ChatGPT cannot detect its own text? Does this mean there is no difference between AI and human text?

Well, there is. We could write an entire book on the difference between the two, but it wouldn't matter much here. So, if there's a difference, why are ChatGPT and other AI tools unable to pick up on it and reliably tell AI-generated text from human-written text? The answer lies in how ChatGPT works and how it generates text.

How ChatGPT Generates Text

When you ask ChatGPT to generate text, it tries to mimic the human writing process. First, the model behind ChatGPT, the Generative Pre-trained Transformer (GPT), has been trained on a large corpus of human text. Emails, health articles, tech articles, high school essays, and just about any other text you can find online were fed to the model during training. As a result, ChatGPT has learned how each of these types of text is typically written.

If you ask ChatGPT to write an email to your boss, it knows how an email of that nature should look because it has been trained on similar emails—probably thousands of them. Similarly, if you ask it to write a high school essay, it also knows how a high school essay should sound. ChatGPT will try to write whatever you want it to write in a way a human would.

But there’s a catch. ChatGPT does not really understand what it is writing in the way a human would. Instead, the chatbot repeatedly predicts the most plausible next word in a sentence until the write-up is complete.

How ChatGPT Writes by Prediction

Let’s say you ask ChatGPT to write a story about a fictional city called Volkra. There’s a huge possibility that the chatbot will start the story with the words “Once upon.” This is because the chatbot doesn’t think for itself but tries to predict what a human would write based on what it has learned from the thousands of stories it has been fed during training.

So, predicting that a human would likely start the story with the words “Once upon,” ChatGPT would then try to predict the next logical word, which would be “a” followed by “time.” So you would then have “Once upon a time…” followed by the next logical word and the next until the story is completed. ChatGPT basically writes by predicting which word would naturally come next in a sentence (or at least has the highest probability of coming next) and inserting it.
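The word-by-word prediction described above can be illustrated with a toy model. The sketch below is not how GPT works internally (GPT uses a large neural network, not word-pair counts); it is a minimal stand-in that always picks the most frequent follower of the previous word. The three-sentence corpus and the names `train_bigrams` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus standing in for the training data (an assumption).
CORPUS = [
    "once upon a time there was a city",
    "once upon a time there lived a king",
    "once upon a hill stood a tower",
]


def train_bigrams(sentences):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def generate(counts, start, max_words=4):
    """Extend `start` by repeatedly appending the most frequent next word."""
    words = [start]
    for _ in range(max_words - 1):
        followers = counts.get(words[-1])
        if not followers:
            break  # the last word was never followed by anything in training
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


model = train_bigrams(CORPUS)
print(generate(model, "once"))  # prints: once upon a time
```

Because "upon" always follows "once" in this corpus, and "time" is the most common follower of "a", the toy model lands on the familiar opening for the same reason the article gives: it is the most probable continuation in the text it has seen.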

So, when an AI tool tries to detect whether a text is AI-generated, one of the criteria it weighs is the predictability of the text, since AI tools write by prediction. In AI parlance, this is measured by perplexity: the more predictable a text is to a language model, the lower its perplexity. Now, when presented with a text, an AI tool like ChatGPT tries, among other things, to gauge how predictable the sequence of words or sentences in the text is. Greater predictability, or lower perplexity, typically suggests the text is AI-generated. Less predictability, or higher perplexity, typically suggests the text was written by a human.
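To make the idea of perplexity concrete, here is a minimal sketch using its standard definition: the exponential of the average negative log-probability a model assigned to each word. The probability lists are invented for illustration; a real detector would obtain them from a language model, and the function name `perplexity` is our own.

```python
import math


def perplexity(token_probs):
    """Return exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Hypothetical probabilities a model might assign to each word of a text.
predictable_text = [0.9, 0.8, 0.95, 0.85]  # the model saw each word coming
surprising_text = [0.2, 0.05, 0.1, 0.3]    # the model was often surprised

print(perplexity(predictable_text))  # low value: text looks "AI-like"
print(perplexity(surprising_text))   # high value: text looks "human-like"
```

A useful sanity check: if a model gives every word a probability of 0.5, the perplexity is 2, as if it were choosing between two equally likely words at each step.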

These criteria, along with other factors like the level of creativity of a text, are unfortunately not enough to establish with certainty whether a text was written by an AI tool. This is because human writing varies widely: some of it is unpredictable, but some of it is predictable enough to be mistaken for AI output, as happened with the example text we used for demonstration at the beginning of this article.

AI chatbots like ChatGPT are designed to mimic natural human language as closely as possible. So while AI text may have discernible patterns, those patterns are not obvious even to a powerful tool like ChatGPT. This is why ChatGPT cannot spot its own writing, and why AI-text detector tools are unreliable.

Will ChatGPT Recognize Its Own Writing In the Future?

Currently, tools like ChatGPT cannot detect whether a text was written by themselves or by any other AI tool because there’s no clear, discernible pattern in AI-generated content. However, there’s a good chance that this could change soon. With efforts by companies like OpenAI, the maker of ChatGPT, to introduce digital watermarks into ChatGPT-generated content, there could be a more discernible pattern to the text generated by the chatbot.

- Title: ChatGPT: Missing Self-Critique Clues

- Author: Brian

- Created at : 2024-10-19 00:52:00

- Updated at : 2024-10-21 03:33:11

- Link: https://tech-savvy.techidaily.com/chatgpt-missing-self-critique-clues/

- License: This work is licensed under CC BY-NC-SA 4.0.