Content Stealthy Escape: Detectors at a Standstill


Artificial Intelligence (AI) will transform entire segments of our society whether we like it or not, and that includes the World Wide Web. With software like ChatGPT available to anyone with an internet connection, it’s becoming increasingly difficult to separate AI-generated content from that created by a human being. Good thing we have AI content detectors, right?



Do AI Content Detectors Work?

AI content detectors are specialized tools that determine whether something was written by a computer program or a human. If you just Google the words “AI content detector,” you’ll see there are dozens of detectors out there, all claiming they can reliably differentiate between human and non-human text.

The way they work is fairly simple: you paste in a piece of writing, and the tool tells you whether or not it was generated by AI. In more technical terms, AI content detectors use a combination of natural language processing techniques and machine learning algorithms to look for patterns and predictability in the text, and make a call based on that.
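As a rough illustration of what “predictability” can mean here, the sketch below scores text by its perplexity under a tiny bigram language model: the less surprised the model is by each word given the previous one, the lower the score. Commercial detectors rely on far larger neural models and generally don’t publish their exact methods, so treat this only as a toy. The reference corpus, the `train_bigram`/`perplexity`/`looks_machine_written` helpers, and the threshold are all hypothetical stand-ins chosen for the example.

```python
import math
from collections import Counter


def train_bigram(corpus: str):
    """Count unigrams and bigrams in a (hypothetical) reference corpus."""
    tokens = corpus.lower().split()
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def perplexity(text: str, unigrams: Counter, bigrams: Counter) -> float:
    """Bigram perplexity with add-one (Laplace) smoothing; lower means more predictable."""
    tokens = text.lower().split()
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        return float("inf")  # too short to score
    vocab_size = len(unigrams) + 1  # +1 reserves probability mass for unseen words
    log_prob = 0.0
    for prev, word in pairs:
        # Counter returns 0 for unseen keys, so smoothing keeps p > 0.
        p = (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)
        log_prob += math.log(p)
    return math.exp(-log_prob / len(pairs))


def looks_machine_written(text, unigrams, bigrams, threshold=50.0) -> bool:
    """Flag text whose perplexity falls below an arbitrary, hypothetical threshold."""
    return perplexity(text, unigrams, bigrams) < threshold


if __name__ == "__main__":
    uni, bi = train_bigram("the cat sat on the mat the cat sat on the mat")
    for sample in ["the cat sat on the mat", "zebra quantum violin"]:
        score = perplexity(sample, uni, bi)
        print(f"{sample!r}: perplexity={score:.2f}, flagged={score < 4.0}")
```

The toy also hints at one reason false positives can happen: human writing that follows familiar, formulaic patterns scores as predictable too, so a predictability score alone can’t tell who wrote it.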

This sounds great on paper, but if you’ve ever used an AI detection tool, you know very well they are hit-and-miss, to put it mildly. Quite often, they flag human-written content as AI-generated, or AI-generated text as human-written. In fact, some are embarrassingly bad at what they’re supposed to do.

How Accurate Are AI Content Detectors?

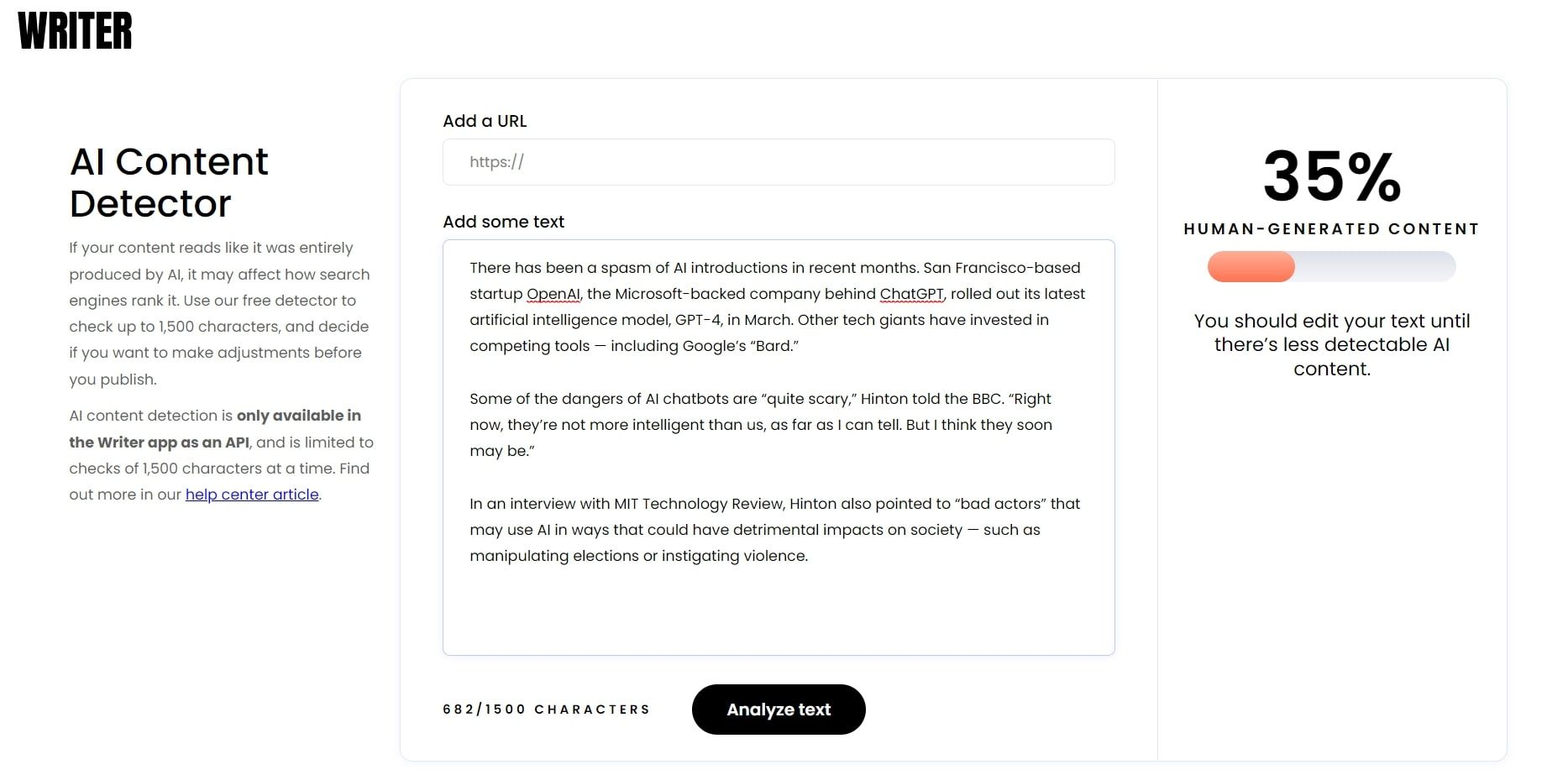

If you’re in the United States, the first Google search result for “AI content detector” is writer.com (previously known as Qordoba; this is an AI content platform that also has its own detector). But when you paste a section of a random Associated Press article into the tool, it claims there’s a very good chance the text was generated by artificial intelligence.

So, writer.com has got it wrong.

To be fair, other AI content detectors are hardly any better. Not only do they produce false positives, but they also mark AI content as human. And even when they don’t, making minor tweaks to AI-generated text is enough to pass with flying colors.

In February 2023, University of Wollongong lecturer Armin Alimardani and UNSW Sydney associate professor Emma A. Jane tested a number of popular AI content detectors and found that none of them were reliable. In their analysis, published in The Conversation, Alimardani and Jane concluded that this AI “arms race” between text generators and detectors will pose a significant challenge in the future, especially for educators.

But it’s not just educators and teachers who have reason for concern: everyone does. As AI-generated text becomes ubiquitous, differentiating between what’s “real” and what isn’t, i.e. actually spotting when something’s written by AI, will become more difficult. This will have a massive impact on virtually all industries and areas of society, even personal relationships.

AI’s Implications for Cybersecurity and Privacy

The fact that there are no reliable mechanisms to determine whether something was created by software or a human being has serious implications for cybersecurity and privacy.

Threat actors are already using ChatGPT to write malware, generate phishing emails, write spam, create scam sites, and more. And while there are ways to defend against that, it’s certainly worrying that there is no software capable of reliably differentiating between organic and bot content.

Fake news, too, is already a massive problem. With generative AI in the picture, disinformation agents are able to scale their operations in an unprecedented way. A regular person, meanwhile, has no way of knowing if something they’re reading online was created by software or a human being.

Privacy is a whole different matter. Take ChatGPT, for example. It was fed more than 300 billion words before its launch. This content was lifted from books, blog and forum posts, articles, and social media. It was gathered without anyone’s consent, and with seemingly complete disregard for privacy and copyright protections.

Then there’s also the issue of false positives. If content is mistakenly flagged as AI-generated, couldn’t that lead to censorship, which is already a massive issue? Not to mention the damage that being accused of using AI-generated text could do to one’s reputation, both online and in real life.

If there is indeed an arms race between generative AI and content detectors, the former is winning. What’s worse, there seems to be no solution. All we have are half-baked products that don’t even work half the time, or that can be tricked very easily.

How to Detect AI Content: Potential Solutions

That we currently don’t seem to have real answers to this problem doesn’t mean we won’t have any in the future. In fact, there are already several serious proposals that could work. Watermarking is one.

When it comes to AI and deep language models, watermarking refers to embedding a secret code of sorts into AI-generated text (e.g., a word pattern or punctuation style). Such a watermark would be invisible to the naked eye, and thus next to impossible to remove, but specialized software would be able to detect it.
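To make the idea more concrete, here is a toy sketch of one family of text-watermarking schemes, sometimes described as a “green list” watermark. It is an illustration under loud assumptions, not necessarily the method any particular company or research team uses: the vocabulary (`w0` to `w999`), the 50% green fraction, the random word picker standing in for a language model, and the detection threshold are all hypothetical. At each step, the previous word seeds a pseudo-random split of the vocabulary; the generator picks only “green” words, and the detector counts how many green words appear and measures how far that count sits above chance (a z-score).

```python
import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(1000)]  # hypothetical toy vocabulary
GAMMA = 0.5  # hypothetical fraction of the vocabulary on each step's green list


def green_list(prev_token: str) -> set:
    """Derive a pseudo-random green list from the previous token via a hash seed."""
    seed = int.from_bytes(hashlib.sha256(prev_token.encode()).digest()[:8], "big")
    rng = random.Random(seed)
    return set(rng.sample(VOCAB, int(GAMMA * len(VOCAB))))


def generate(n_tokens: int, watermark: bool, seed: int = 0) -> list:
    """Stand-in for a language model: pick random words, optionally only green ones."""
    rng = random.Random(seed)
    tokens = [rng.choice(VOCAB)]
    for _ in range(n_tokens - 1):
        # sorted() gives a stable order, since set iteration order can vary per run.
        candidates = sorted(green_list(tokens[-1])) if watermark else VOCAB
        tokens.append(rng.choice(candidates))
    return tokens


def z_score(tokens: list) -> float:
    """Compare the green-token count with what unwatermarked text would give by chance."""
    t = len(tokens) - 1
    if t <= 0:
        raise ValueError("need at least two tokens")
    greens = sum(tok in green_list(prev) for prev, tok in zip(tokens, tokens[1:]))
    return (greens - GAMMA * t) / math.sqrt(t * GAMMA * (1 - GAMMA))


if __name__ == "__main__":
    THRESHOLD = 4.0  # hypothetical cut-off for "watermark detected"
    for label, wm in [("watermarked", True), ("plain", False)]:
        z = z_score(generate(200, watermark=wm))
        print(f"{label}: z={z:.1f}, detected={z > THRESHOLD}")
```

The pattern is invisible to a reader because the green lists only change which words get chosen, not how the text looks. Schemes of this kind typically nudge the model’s word probabilities toward the green list rather than forbidding other words outright, which keeps the text fluent; the hard restriction here just makes the signal easy to see.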

In fact, back in 2022, University of Maryland researchers developed a new watermarking method for artificial neural networks. Lead researcher Tom Goldstein said at the time that his team had managed to “prove mathematically” that their watermark cannot be removed entirely.

For the time being, what a regular person can do is rely on their instincts and common sense. If there is something off about the content you’re reading—if it feels unnatural, repetitive, unimaginative, banal—it might have been created by software. Of course, you should also verify any information you see online, double-check the source, and stay away from shady websites.

The AI Revolution Is Underway

Some argue that the fifth industrial revolution is already here, as artificial intelligence takes center stage in what is being described as a convergence of the digital and physical. Whether that’s really the case or not, all we can do is adapt.

The good news is, the cybersecurity industry is adjusting to this new reality, and implementing new defense strategies with AI and machine learning at the forefront.



- Title: Content Stealthy Escape: Detectors at a Standstill

- Author: Brian

- Created at : 2024-11-29 20:40:26

- Updated at : 2024-12-06 23:51:26

- Link: https://tech-savvy.techidaily.com/content-stealthy-escape-detectors-at-a-standstill/

- License: This work is licensed under CC BY-NC-SA 4.0.