Data-Driven Discourse: Your Own AI Model Blueprints

Providing GPT technology in a powerful and easy-to-use chatbot, ChatGPT has become the world’s most popular AI tool. Many people use ChatGPT for engaging conversations, answering queries, creative suggestions, and help with coding and writing. However, ChatGPT has two notable limitations: you cannot store your own data in it for long-term personal use, and its knowledge stops at a September 2021 cutoff.

As a workaround, we can use OpenAI’s API and LangChain to provide ChatGPT with custom data and information from after 2021, creating a custom ChatGPT instance.

Disclaimer: This post includes affiliate links

If you click on a link and make a purchase, I may receive a commission at no extra cost to you.

Why Provide ChatGPT with Custom Data?

Feeding ChatGPT custom data, including information from beyond its knowledge cutoff date, has several benefits over using ChatGPT as usual. Here are a few of them:

- Personalized Interactions: By providing ChatGPT with custom data, users can create a more customized experience. The model can draw on datasets relevant to individual users or organizations, resulting in responses tailored to their unique needs and preferences.

- Domain-Specific Expertise: Custom data integration allows ChatGPT to specialize in particular domains or industries. By giving it industry-specific knowledge, terminology, and trends to work from, you can get more accurate and insightful responses within those areas.

- Current and Accurate Information: Access to updated information ensures that ChatGPT stays current with the latest developments and knowledge. It can provide accurate responses based on recent events, news, or research, making it a more reliable source of information.

Now that you understand why you might provide custom data to ChatGPT, here’s a step-by-step guide to doing so on your local computer.

Step 1: Install and Download Software and Pre-Made Script

Please note the following instructions are for a Windows 10 or Windows 11 machine.

To provide custom data to ChatGPT, you’ll need to download and install the latest versions of Python3, Git, and the Microsoft C++ build tools, plus the chatgpt-retrieval script from GitHub. If you already have some of this software installed on your PC, make sure it is updated to the latest version to avoid hiccups during the process.

Start by installing:

- Download: Python3 (Free)

- Download: Git (Free)

- Download: Microsoft Visual Studio Build Tools (Free)

Python3 and Microsoft C++ Installation Notes

When installing Python3, make sure you tick the Add python.exe to PATH option before clicking Install Now. This is important because it lets you run Python from any directory on your computer.

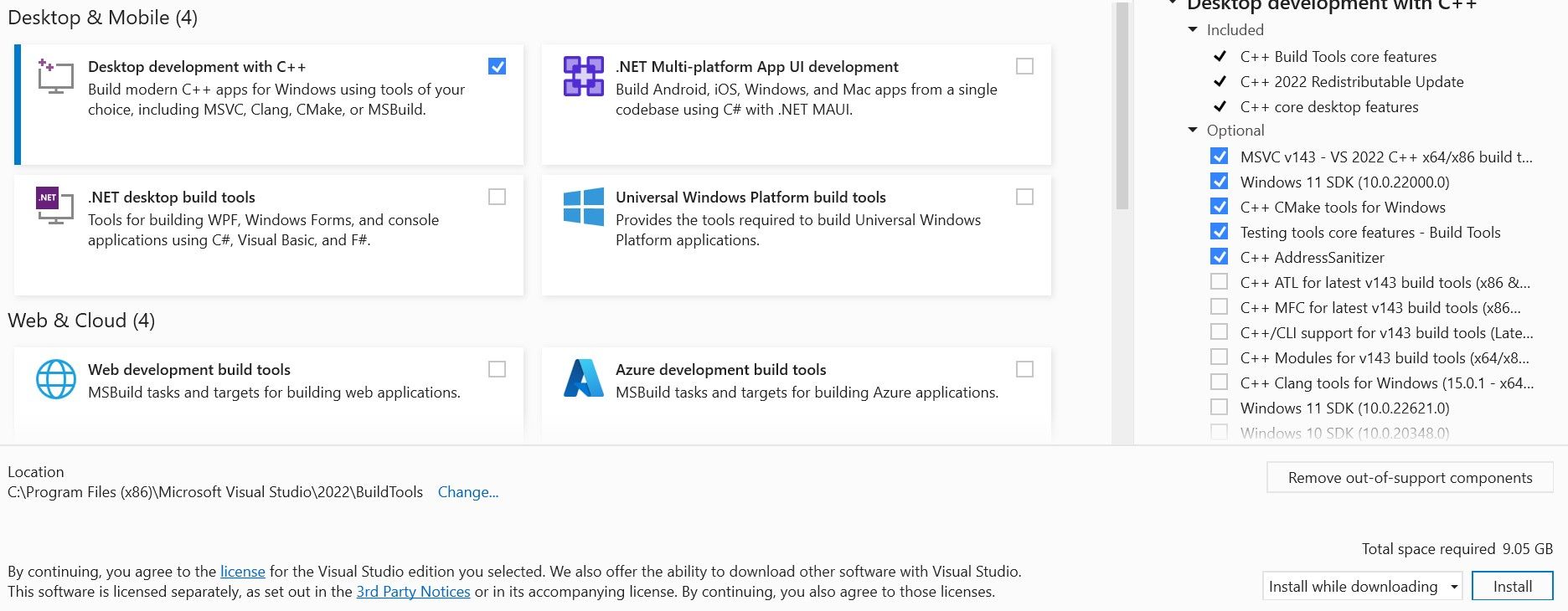

When installing Microsoft C++, you’ll want to install Microsoft Visual Studio Build Tools first. Once it is installed, tick the Desktop development with C++ option and click Install, leaving the optional tools that are automatically ticked in the right sidebar selected.
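Before moving on, you can confirm that Python and Git are reachable from a terminal. The short Python sketch below is not part of the chatgpt-retrieval script; the helper name `check_environment` and the minimum version of Python 3.8 are assumptions for illustration, and it does not check the C++ build tools.

```python
"""Quick sanity check for the prerequisites (illustrative sketch only)."""
import shutil
import sys


def check_environment(min_python=(3, 8)):
    """Return a dict describing whether Python and Git look usable.

    min_python is an assumed minimum version, not one the script documents.
    The Microsoft C++ build tools are not checked here.
    """
    return {
        # sys.version_info compares element-wise against a (major, minor) tuple
        "python_ok": sys.version_info[:2] >= min_python,
        "python_version": "%d.%d.%d" % sys.version_info[:3],
        # shutil.which returns the executable's full path, or None if not on PATH
        "git_path": shutil.which("git"),
    }


if __name__ == "__main__":
    for name, value in check_environment().items():
        print(f"{name}: {value}")
```

Save it as a `.py` file and run it with `python`; a `git_path` of `None` means Git is not on your PATH yet.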

Now that you have installed the latest versions of Python3, Git, and Microsoft C++, you can download the Python script to easily query custom local data.

Download: ChatGPT-retrieval script (Free)

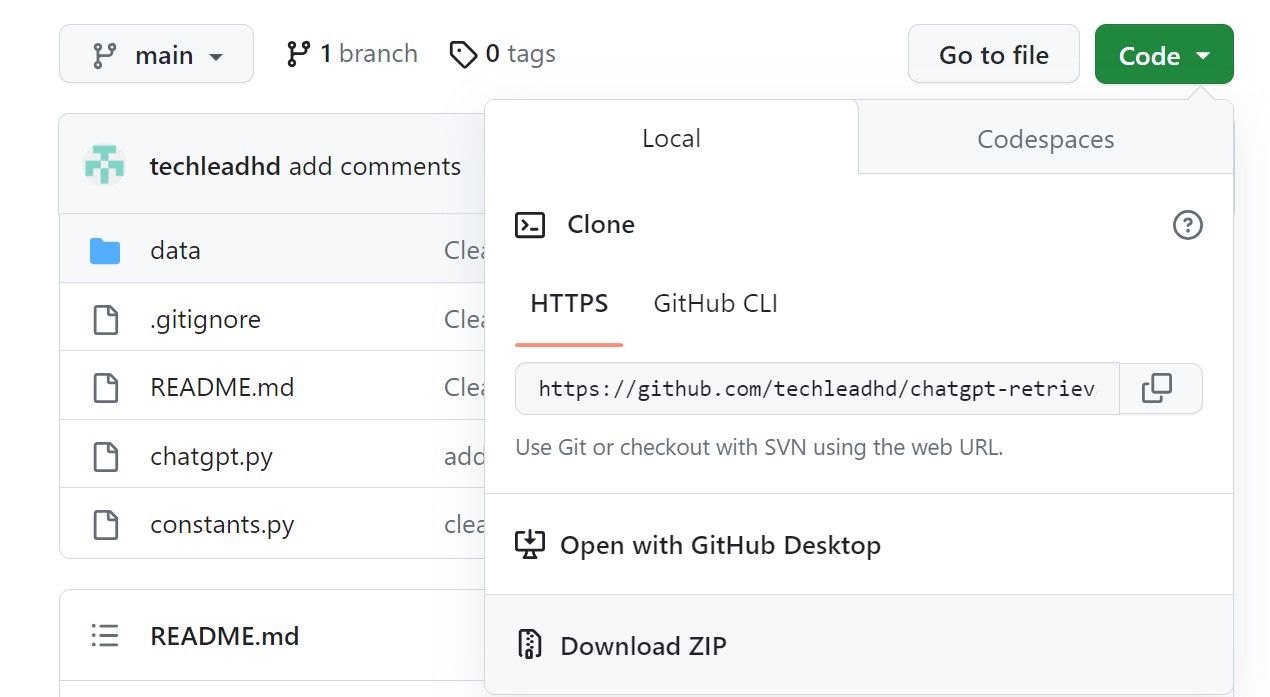

To download the script, click on Code, then select Download ZIP. This should download the Python script into your default or selected directory.

Once downloaded, extract the ZIP file, which contains a folder named chatgpt-retrieval-main. We can now set up a local environment.
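If you'd rather unpack the download from a script than from File Explorer, Python's standard `zipfile` module can do it. This is a minimal sketch; the helper `extract_zip` and any paths you pass to it are hypothetical.

```python
import zipfile
from pathlib import Path


def extract_zip(zip_path, dest_dir):
    """Extract zip_path into dest_dir and return the sorted top-level entries.

    Hypothetical helper; only extract archives from sources you trust.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest)
        # ZIP entry names always use "/" separators, so the first part of each
        # name is its top-level folder (for a GitHub ZIP, e.g. "chatgpt-retrieval-main")
        return sorted({name.split("/")[0] for name in zf.namelist()})
```

For example, assuming your browser saved the archive as `chatgpt-retrieval-main.zip` in your Downloads folder, `extract_zip(Path.home() / "Downloads" / "chatgpt-retrieval-main.zip", Path.home() / "Downloads")` would unpack it next to the ZIP and report the folder it created.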

Step 2: Set Up the Local Environment

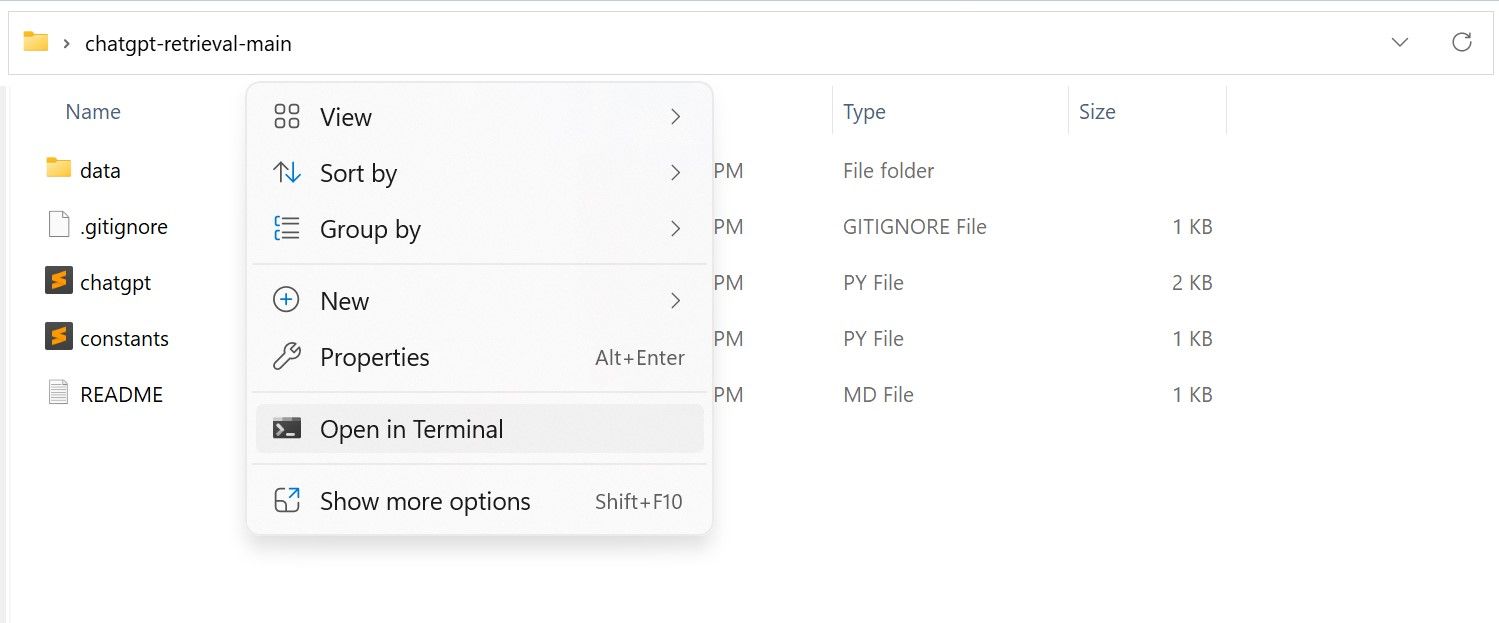

To set up the environment, you’ll need to open a terminal in the chatgpt-retrieval-main folder you downloaded. To do that, open the chatgpt-retrieval-main folder, right-click an empty area, and select Open in Terminal.

Once the terminal is open, copy and paste this command:

pip install langchain openai chromadb tiktoken unstructured

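This command uses pip, Python’s package manager, to install the libraries the script needs: LangChain, the OpenAI client library, the Chroma vector database (chromadb), OpenAI’s tiktoken tokenizer, and unstructured, which parses documents such as PDFs.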
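To confirm the install worked, you can ask Python whether it can find each package without importing it. This is a small sketch using `importlib.util.find_spec`; the helper name `missing_packages` is hypothetical, and the list relies on each package's import name matching its pip name, which holds for these five.

```python
import importlib.util

# Import names of the packages installed by the pip command above
REQUIRED = ["langchain", "openai", "chromadb", "tiktoken", "unstructured"]


def missing_packages(names=REQUIRED):
    """Return the names that Python cannot locate (find_spec returns None)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All packages found.")
```

If anything is reported missing, rerun the pip command and check its output for errors.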

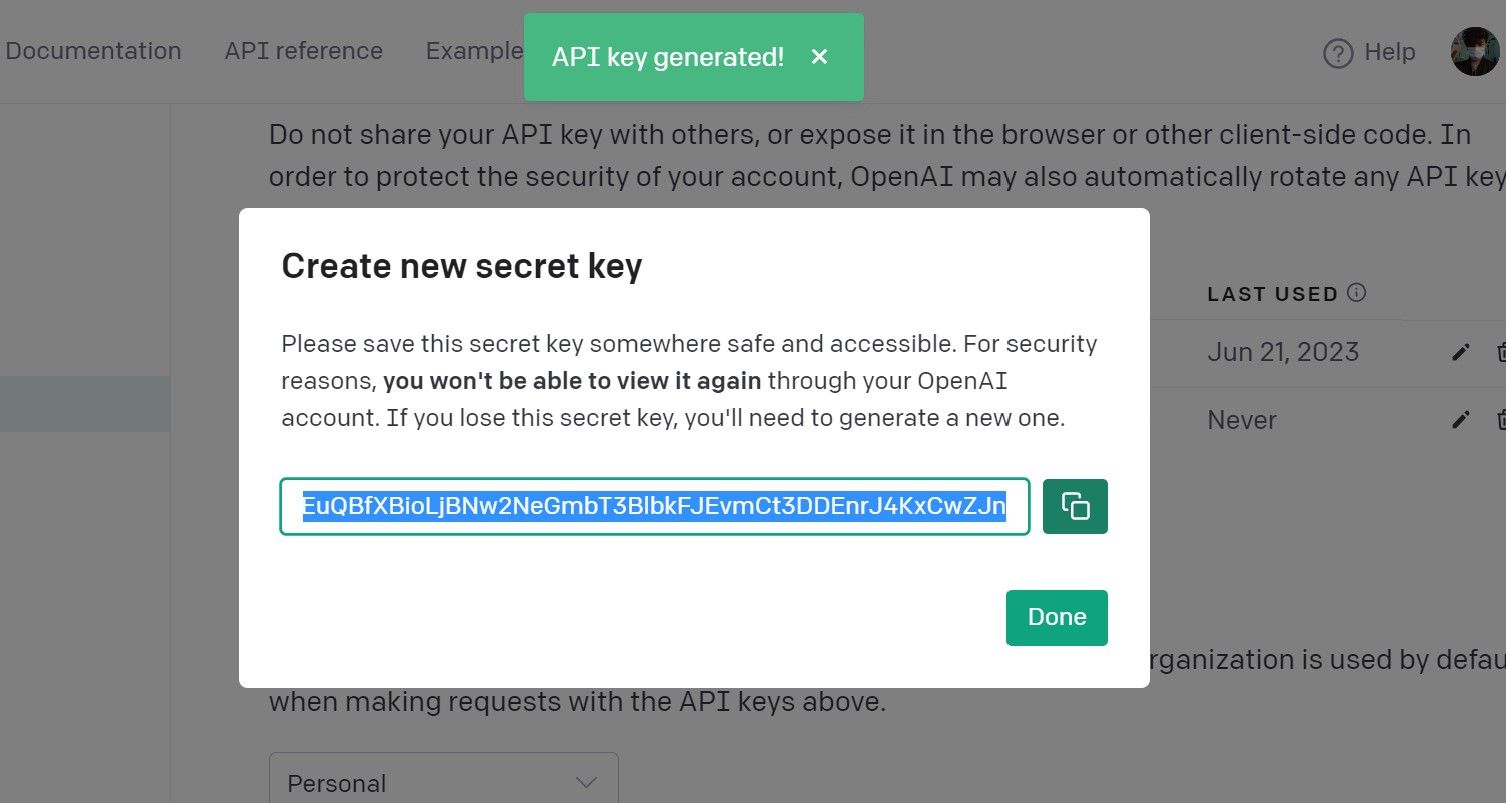

After installing the packages, we need to supply an OpenAI API key to access OpenAI’s services. First, generate an API key from the OpenAI API keys page by clicking Create new secret key, adding a name for the key, and then hitting the Create secret key button.

You will be given a string of characters: this is your OpenAI API key. Copy it by clicking the copy icon next to the key. Keep this API key secret, and do not share it with others unless you really intend for them to use it on your account.
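If you ever need to check which key a script has loaded, print a masked version rather than the key itself so it doesn't end up in screenshots or logs. A minimal sketch; `mask_key` is a hypothetical helper, not part of the downloaded script.

```python
def mask_key(key, visible=4):
    """Return a copy of an API key that is safer to print or log.

    Keeps only the last `visible` characters and replaces the rest with '*'.
    Short keys, or visible <= 0, are masked completely.
    """
    key = key.strip()
    if visible <= 0 or len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]


print(mask_key("sk-abcdef123456"))  # ***********3456
```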

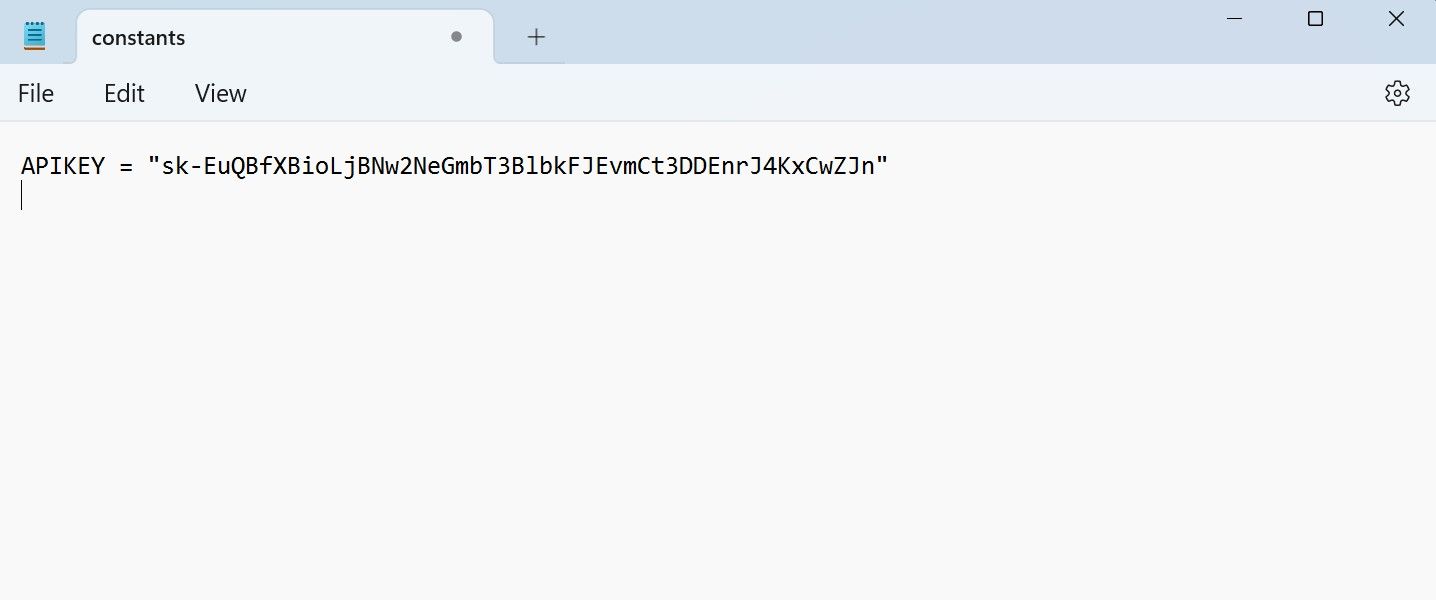

Once copied, return to the chatgpt-retrieval-main folder and open the constants file with Notepad. Replace the placeholder with your API key, and remember to save the file!
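The exact contents of the constants file depend on the version of the repository you downloaded. As a sketch, assuming the file defines a single string variable named `APIKEY` (check your copy), the snippet below shows the file's likely shape and one way a script can hand the key to the `openai` library, which by default reads it from the `OPENAI_API_KEY` environment variable. `apply_api_key` is a hypothetical helper.

```python
import os

# --- constants file (sketch) -------------------------------------------
# Assumed variable name; replace the placeholder with your real key.
APIKEY = "<your-openai-api-key>"


# --- how a script might consume it --------------------------------------
def apply_api_key(key):
    """Expose the key where the openai library looks for it by default.

    Rejects an empty value or an unreplaced "<...>" placeholder.
    """
    key = key.strip()
    if not key or key.startswith("<"):
        raise ValueError("Replace the placeholder in constants with your real API key.")
    os.environ["OPENAI_API_KEY"] = key
```

Setting the variable only affects the running Python process, so the key is not written anywhere else on your system.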

Now that you have installed the required packages and added your OpenAI API key to the constants file, you can provide your custom data to ChatGPT.

Step 3: Adding Custom Data

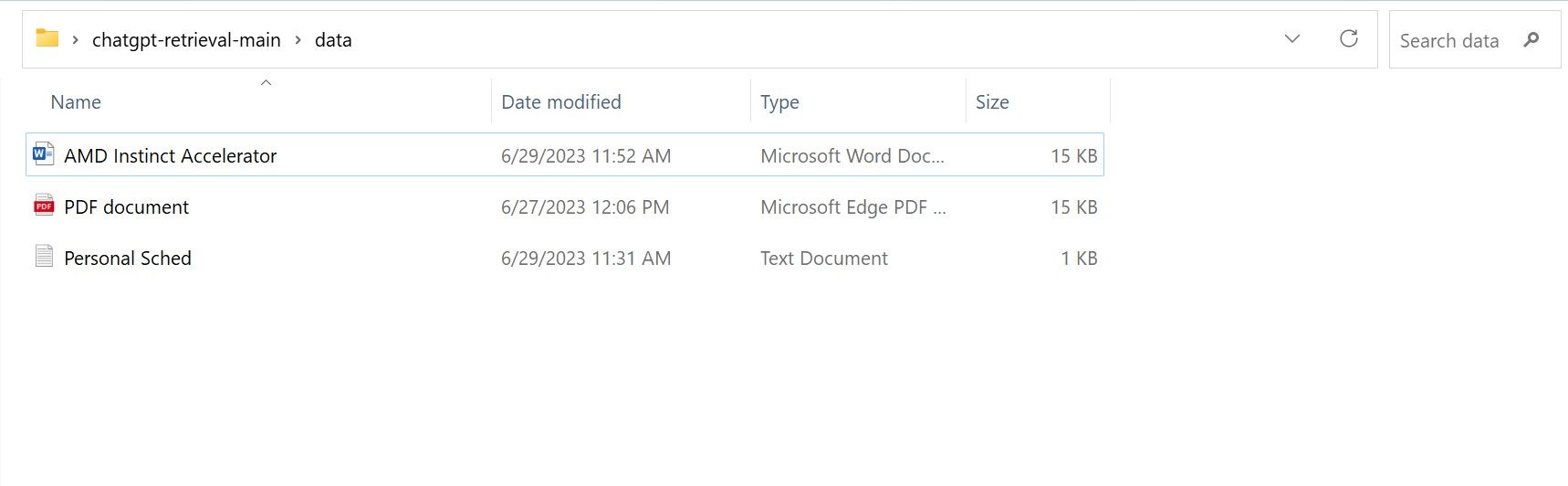

To add custom data, place all your custom text data in the data folder within chatgpt-retrieval-main. The data can be in PDF, TXT, or DOC format.

As you can see from the screenshot above, I’ve added a text file containing a made-up personal schedule, an article I wrote on AMD’s Instinct accelerators, and a PDF document.
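Before querying, it can help to see which files in the data folder match the formats listed above. This is a sketch; the `scan_data_folder` helper and the extension list (including `.docx`, which the article does not mention) are assumptions, and which formats actually load depends on the document loaders installed.

```python
from pathlib import Path

# Assumed extension list: PDF, TXT and DOC from the article, plus DOCX.
SUPPORTED = {".pdf", ".txt", ".doc", ".docx"}


def scan_data_folder(folder="data"):
    """Split the files in `folder` into (supported, skipped) lists of names.

    Subfolders are ignored; extensions are compared case-insensitively.
    """
    supported, skipped = [], []
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file():
            continue
        target = supported if path.suffix.lower() in SUPPORTED else skipped
        target.append(path.name)
    return supported, skipped
```

Run from inside chatgpt-retrieval-main, `scan_data_folder()` checks the `data` folder next to the script.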

Step 4: Querying ChatGPT Through Terminal

The Python script lets us query the custom data we’ve added to the data folder alongside the model’s existing knowledge. In other words, you have access to the usual ChatGPT backend plus all the data stored locally in the data folder.
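Under the hood, LangChain-based scripts like this one typically split your documents into chunks, turn each chunk into an embedding, store them in a vector database (likely Chroma here, given the chromadb package), retrieve the chunks most relevant to your question, and send them to the model together with the question. The toy sketch below shows that retrieve-then-prompt flow in plain Python. It swaps embeddings for simple word-overlap scoring so it runs without an API key, and none of its functions are LangChain's API.

```python
import re


def tokenize(text):
    """Lowercase word tokens: a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def chunk(text, size=40):
    """Split text into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(question, documents, k=2):
    """Return the k chunks that share the most words with the question."""
    q = tokenize(question)
    chunks = [c for doc in documents for c in chunk(doc)]
    # Highest overlap first; sorted() is stable, so ties keep document order
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question, context_chunks):
    """Assemble the text that would be sent to the chat model."""
    context = "\n---\n".join(context_chunks)
    return (
        "Answer using the context below. If it is not relevant, "
        "answer from general knowledge.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


docs = [
    "Monday: dentist at 9am. Tuesday: gym at 6pm.",
    "The AMD Instinct MI250X is a data center accelerator.",
]
question = "What is the MI250X?"
print(build_prompt(question, retrieve(question, docs, k=1)))
```

Real embeddings match on meaning rather than exact words, which is why the actual script can find relevant passages even when your question is phrased differently from the source text.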

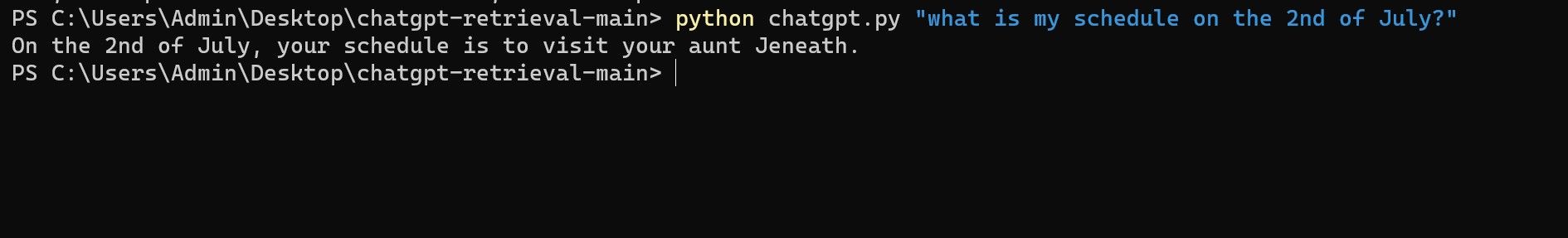

To use the script, run chatgpt.py with Python and pass your question or query as the argument:

python chatgpt.py "YOUR QUESTION"

Make sure to put your questions in quotation marks.
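The quotation marks matter because the terminal splits a command line on spaces. The sketch below uses Python's `shlex.split`, which follows POSIX shell rules (close to, but not identical to, how Windows terminals split arguments), and assumes the script reads only its first argument, `sys.argv[1]`; `question_from_argv` is a hypothetical helper.

```python
import shlex


def question_from_argv(argv):
    """Return the question as a script reading argv[1] would see it."""
    if len(argv) < 2:
        raise SystemExit('Usage: python chatgpt.py "YOUR QUESTION"')
    return argv[1]


quoted = shlex.split('python chatgpt.py "What is on my schedule today?"')
unquoted = shlex.split("python chatgpt.py What is on my schedule today?")

# Drop the leading "python" so each list matches what the script sees as sys.argv
print(question_from_argv(quoted[1:]))    # What is on my schedule today?
print(question_from_argv(unquoted[1:]))  # What
```

Without quotes, only the first word of the question reaches a script that reads a single argument.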

To test if we have successfully fed ChatGPT our data, I’ll ask a personal question regarding the Personal Sched.txt file.

It worked! This means ChatGPT was able to read the Personal Sched.txt provided earlier. Now let’s see if we have successfully fed ChatGPT with information it does not know due to its knowledge cutoff date.

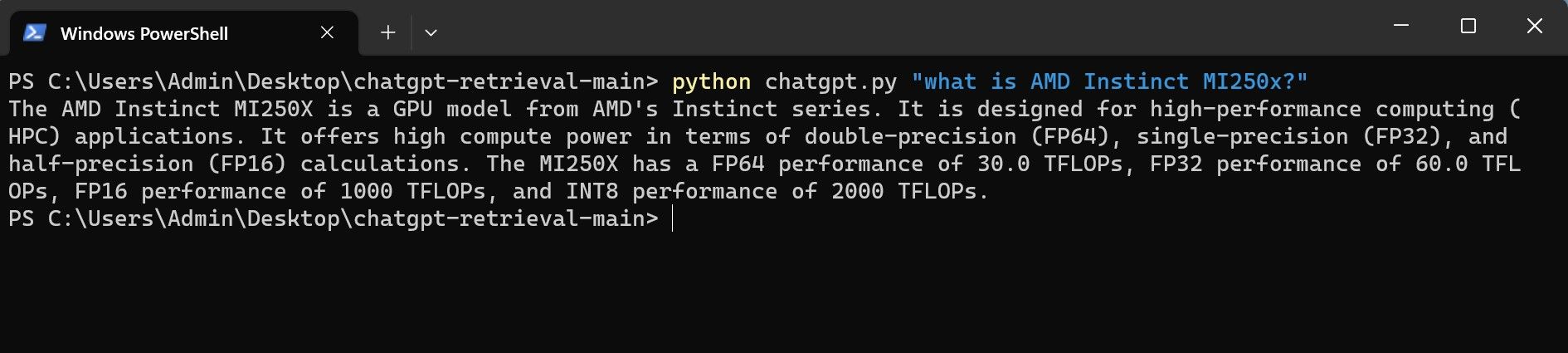

As you can see, it correctly described the AMD Instinct MI250X, which was released after ChatGPT’s knowledge cutoff date.

Limitations of Custom ChatGPT

Although feeding GPT-3.5 with custom data opens more ways to apply and use the LLM, there are a few drawbacks and limitations.

Firstly, you need to provide all the extra data yourself. You can still access all of GPT-3.5’s knowledge up to its cutoff date, but anything beyond that must come from you. This means that if you want your local model to know about a subject that GPT-3.5 doesn’t already cover, you’ll have to find the information online, scrape it yourself, and save it as a text file in the data folder of chatgpt-retrieval-main.
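The article doesn't prescribe a scraping method. As one possible approach, here is a minimal sketch using only Python's standard library that fetches a page, strips the HTML tags, and writes the text into the data folder. The helper names are hypothetical, pages that build their content with JavaScript will need a different tool, and you should check a site's terms before scraping it.

```python
from html.parser import HTMLParser
from pathlib import Path
from urllib.request import urlopen


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0  # depth inside script/style elements

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.parts.append(data.strip())


def html_to_text(html):
    """Return the page's text, one text fragment per line."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


def save_page(url, filename, data_dir="data"):
    """Fetch url, strip the HTML, and save it as a text file in data_dir."""
    with urlopen(url, timeout=30) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        html = resp.read().decode(charset, errors="replace")
    out = Path(data_dir) / filename
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text(html_to_text(html), encoding="utf-8")
    return out
```

For example, `save_page("https://example.com/article", "article.txt")` would write `data/article.txt` relative to the current directory, ready for the script to pick up.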

Another issue is that querying ChatGPT this way takes longer than asking ChatGPT directly.

Lastly, the only model currently available is GPT-3.5 Turbo. So even if you have access to GPT-4, you won’t be able to use it to power your custom ChatGPT instance.

Custom ChatGPT Is Awesome But Limited

Providing custom data to ChatGPT is a powerful way to get more out of the model. With this method, you can feed the model any text data you want and prompt it just like regular ChatGPT, albeit with some limitations. However, this may change as it becomes easier to integrate our own data with the LLM and as access to the latest GPT-4 model opens up.

- Title: Data-Driven Discourse: Your Own AI Model Blueprints

- Author: Brian

- Created at : 2024-10-08 19:29:39

- Updated at : 2024-10-15 13:07:10

- Link: https://tech-savvy.techidaily.com/data-driven-discourse-your-own-ai-model-blueprints/

- License: This work is licensed under CC BY-NC-SA 4.0.