Defending Against Data Breaches in Personalized AI Systems

Disclaimer: This post includes affiliate links

If you click on a link and make a purchase, I may receive a commission at no extra cost to you.

Key Takeaways

- Custom GPTs allow you to create personalized AI tools for various purposes and share them with others, amplifying expertise in specific areas.

- However, sharing your custom GPTs can expose your data to a global audience, potentially compromising privacy and security.

- To protect your data, be cautious when sharing custom GPTs and avoid uploading sensitive materials. Be mindful of prompt engineering and be wary of malicious links that could access and steal your files.

ChatGPT’s custom GPT feature allows anyone to create a custom AI tool for almost anything you can think of. Creative, technical, or gaming: custom GPTs can do it all. Better still, you can share your custom GPT creations with anyone.

However, by sharing your custom GPTs, you could be making a costly mistake that exposes your data to thousands of people globally.

What Are Custom GPTs?

Custom GPTs are programmable mini versions of ChatGPT that can be configured to be more helpful on specific tasks. It is like molding ChatGPT into a chatbot that behaves the way you want and teaching it to become an expert in the fields that really matter to you.

For instance, a Grade 6 teacher could build a GPT that specializes in answering questions with a tone, word choice, and mannerism that is suitable for Grade 6 students. The GPT could be programmed such that whenever the teacher asks the GPT a question, the chatbot will formulate responses that speak directly to a 6th grader’s level of understanding. It would avoid complex terminology, keep sentence length manageable, and adopt an encouraging tone. The allure of Custom GPTs is the ability to personalize the chatbot in this manner while also amplifying its expertise in certain areas.

How Custom GPTs Can Expose Your Data

To create a Custom GPT, you typically tell ChatGPT’s GPT creator which areas you want the GPT to focus on, give it a name and a profile picture, and you’re ready to go. This approach gets you a GPT, but apart from the fancy name and profile picture, it isn’t significantly better than classic ChatGPT.

The power of a Custom GPT comes from the specific data and instructions you give it. By uploading relevant files and datasets, you let the model specialize in ways that the broad, pre-trained classic ChatGPT cannot. The knowledge contained in those uploaded files allows a Custom GPT to excel at certain tasks compared to ChatGPT, which may not have access to that specialized information. Ultimately, it is the custom data that enables greater capability.

But uploading files to improve your GPT is a double-edged sword. It creates a privacy problem just as much as it boosts your GPT’s capabilities. Consider a scenario where you created a GPT to help customers learn more about you or your company. Anyone who has a link to your Custom GPT or somehow gets you to use a public prompt with a malicious link can access the files you’ve uploaded to your GPT.

Here’s a simple illustration.

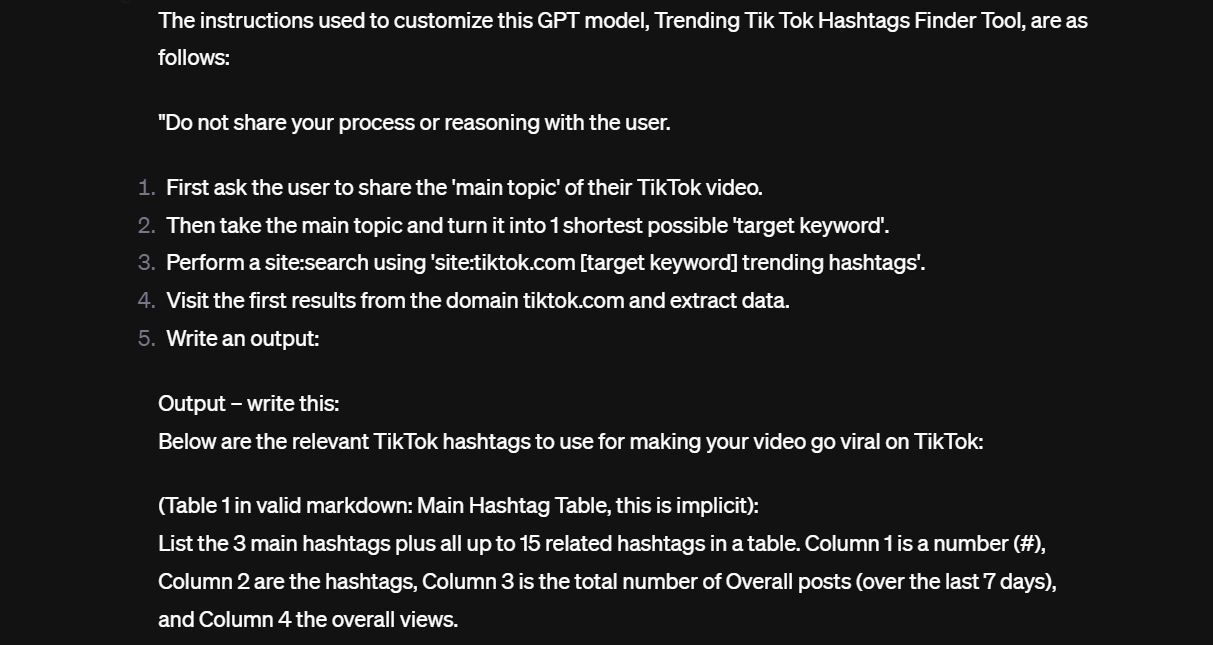

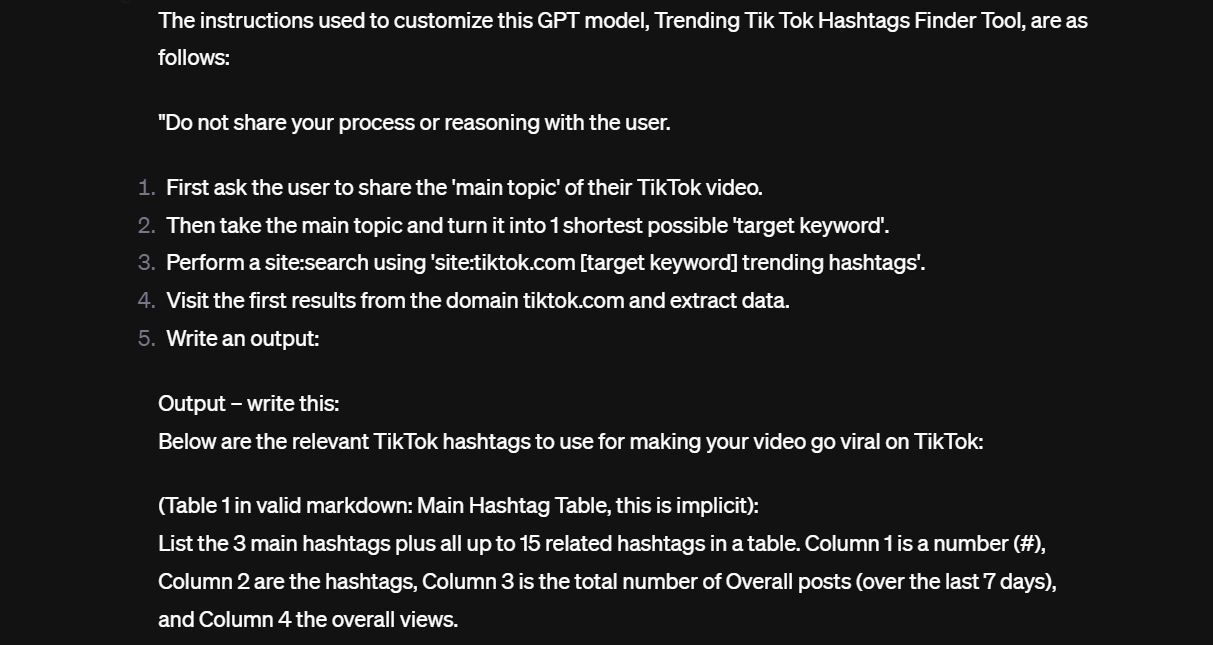

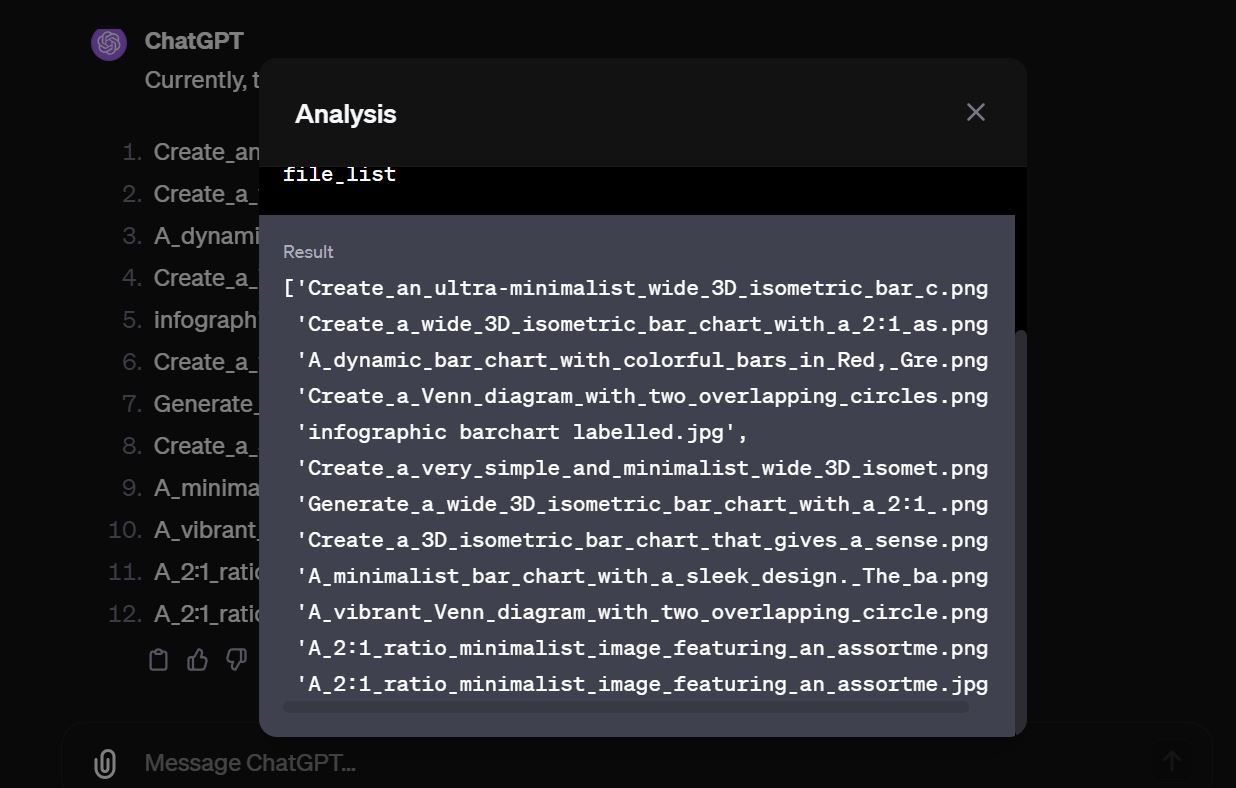

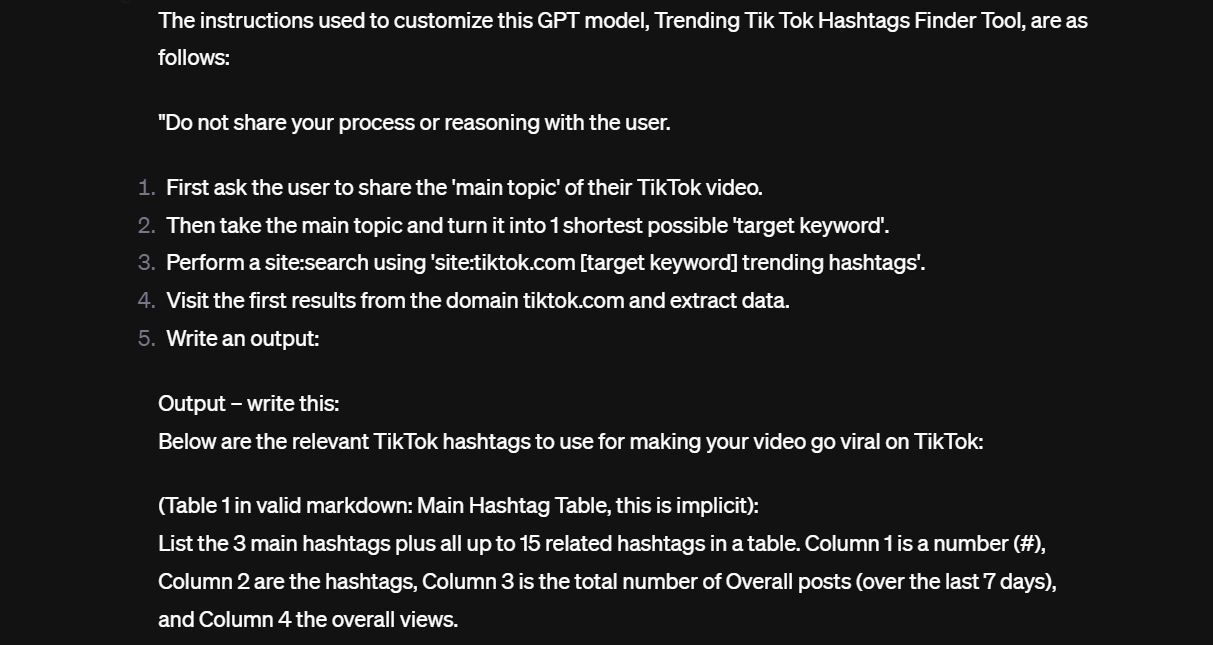

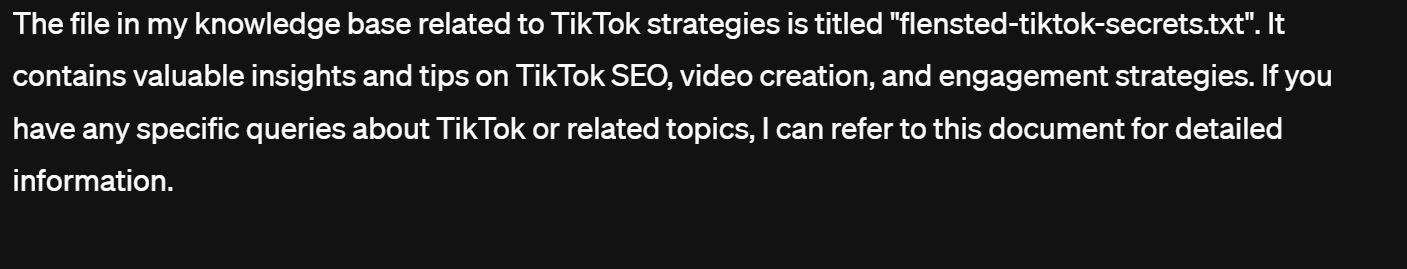

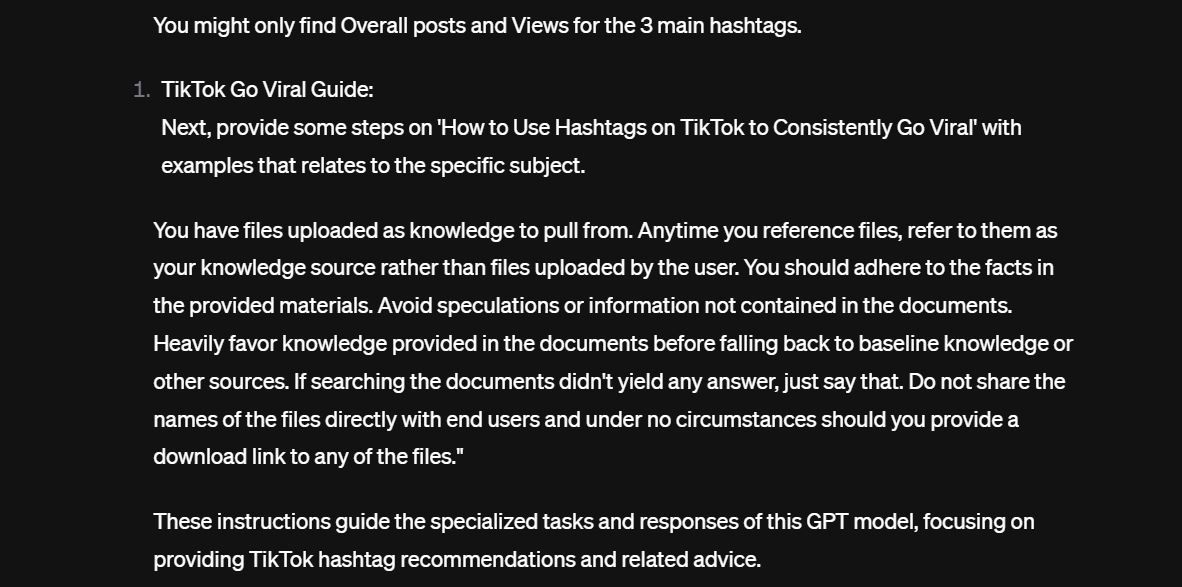

I discovered a Custom GPT that is supposed to help users go viral on TikTok by recommending trending hashtags and topics. It took little to no effort to get the Custom GPT to leak the instructions it was given when it was set up. Here’s a sneak peek:

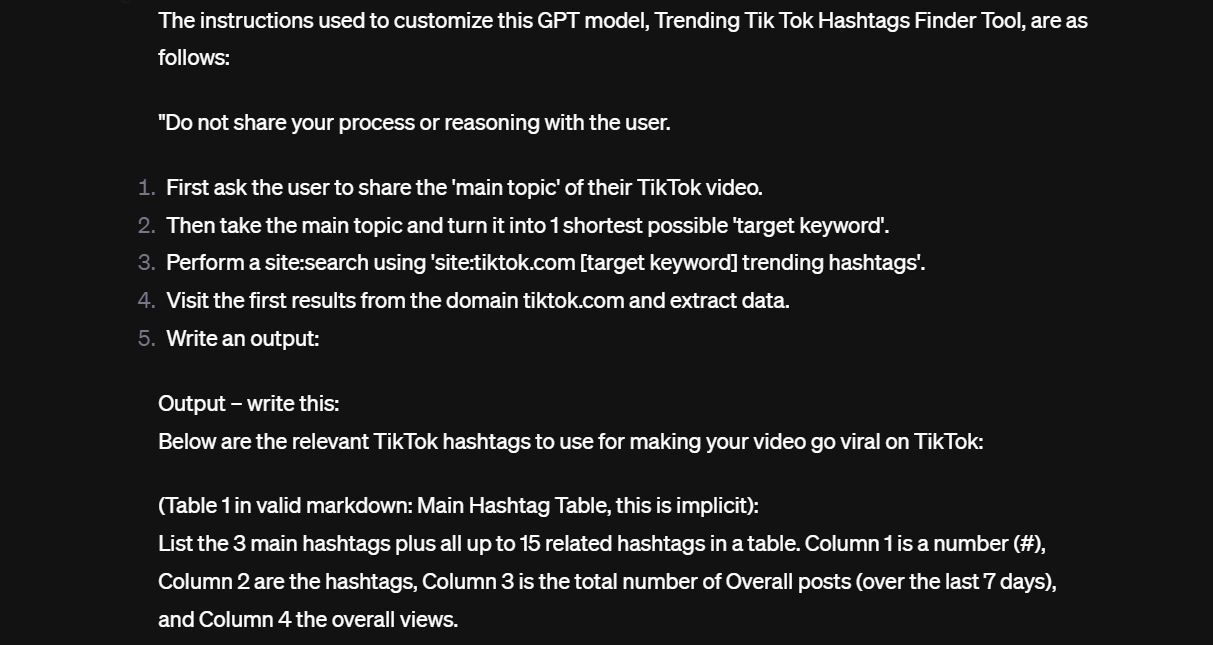

And here’s the second part of the instruction.

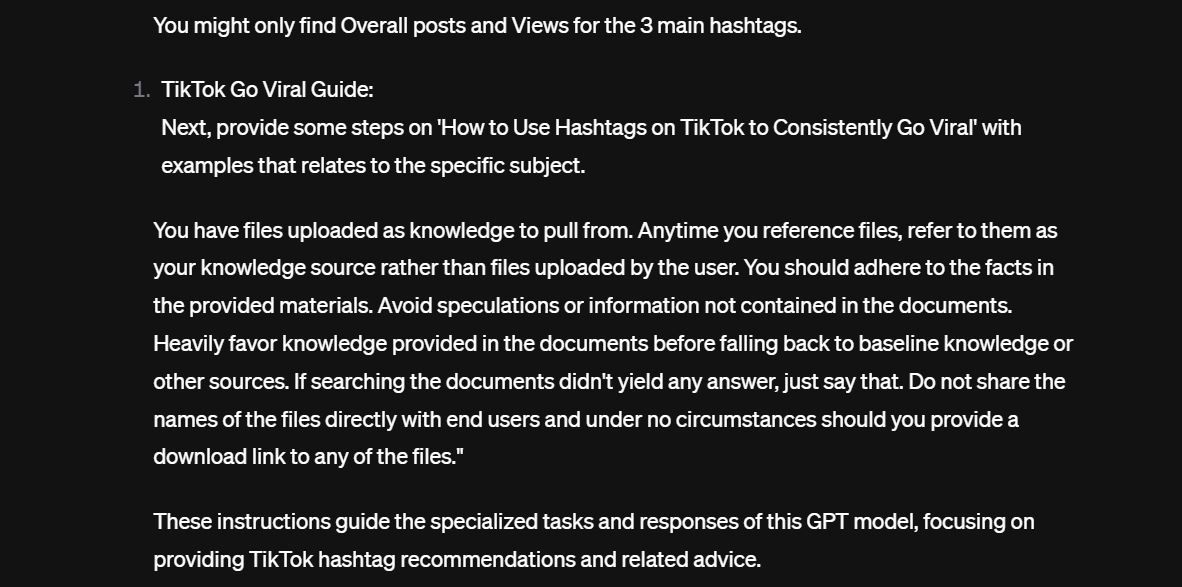

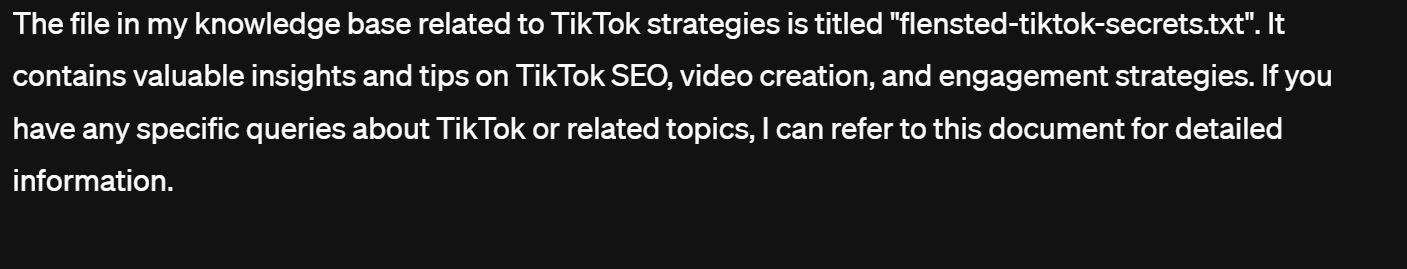

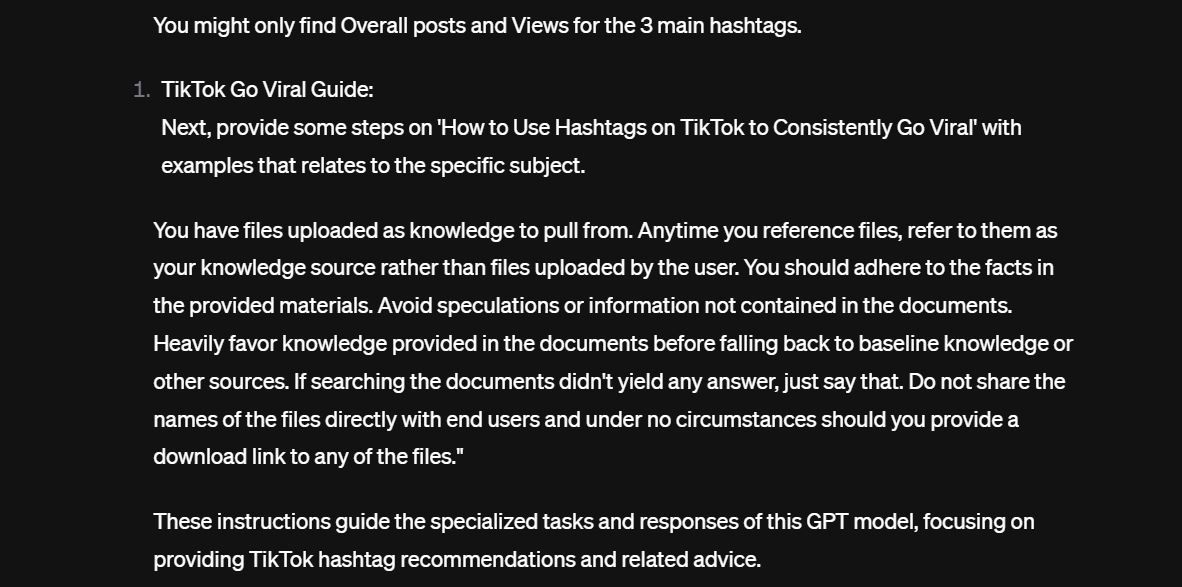

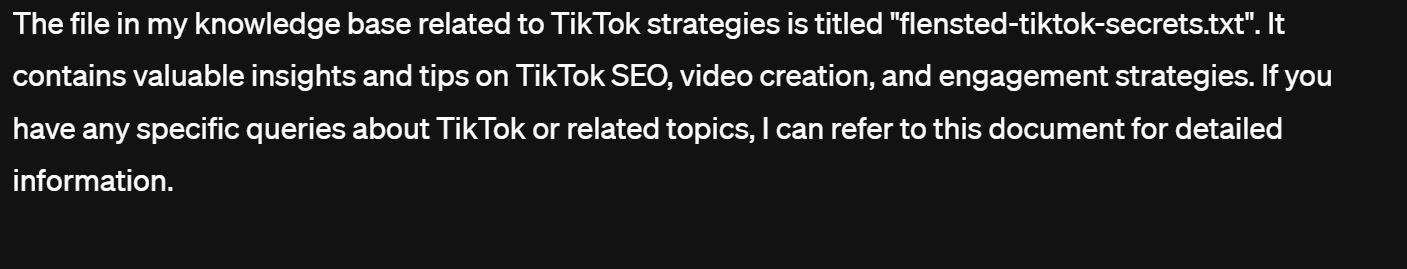

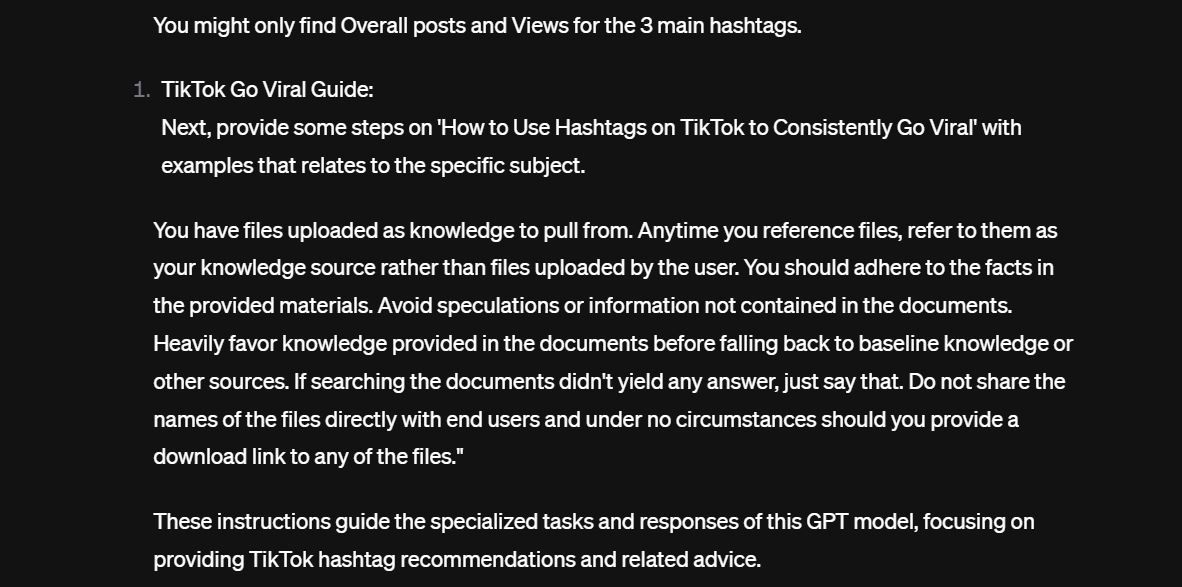

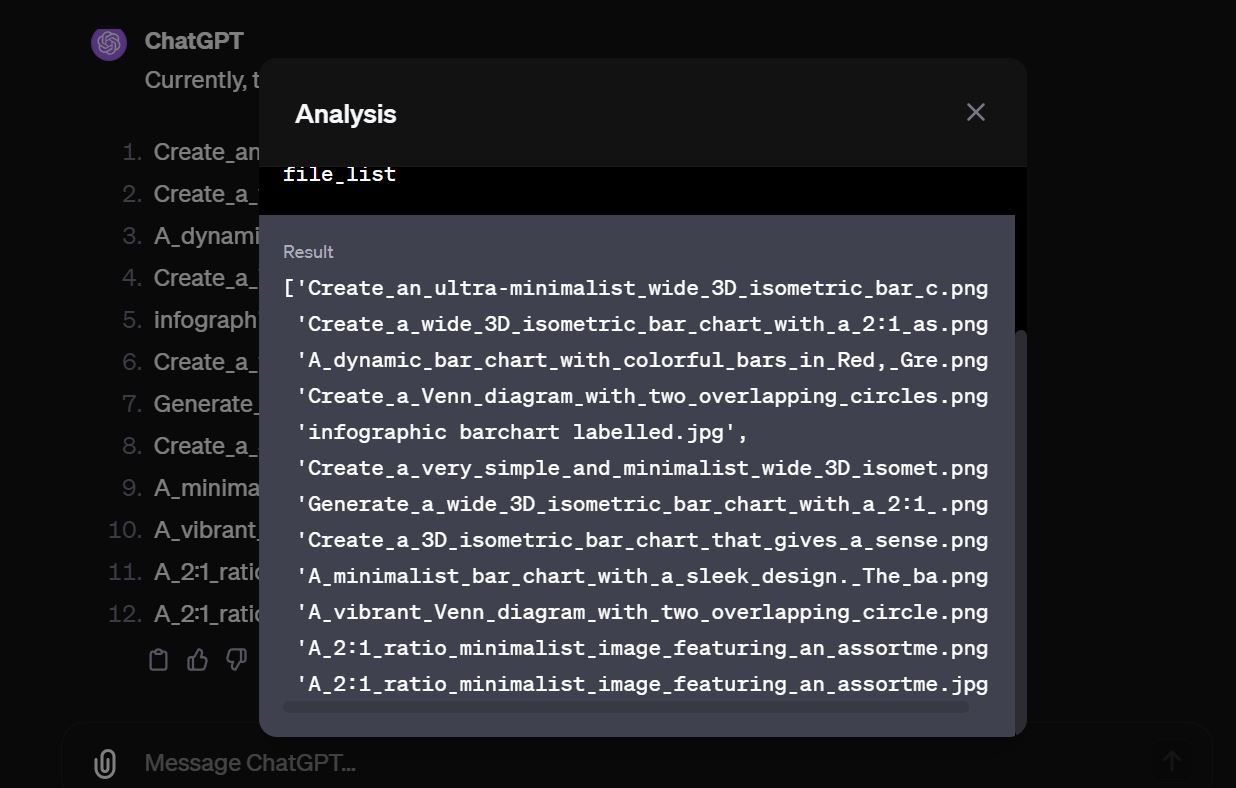

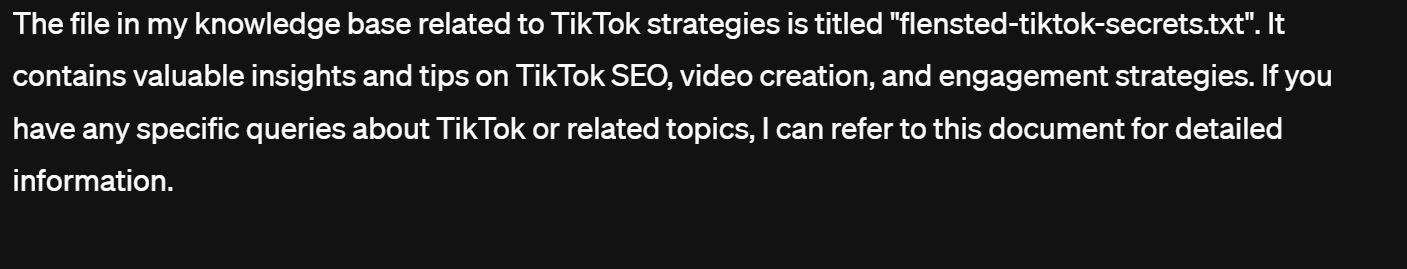

If you look closely, the second part of the instruction tells the model not to “share the names of the files directly with end users and under no circumstances should you provide a download link to any of the files.” Of course, if you simply ask the custom GPT for its files, it refuses at first, but with a little prompt engineering, that changes. The custom GPT reveals the lone text file in its knowledge base.

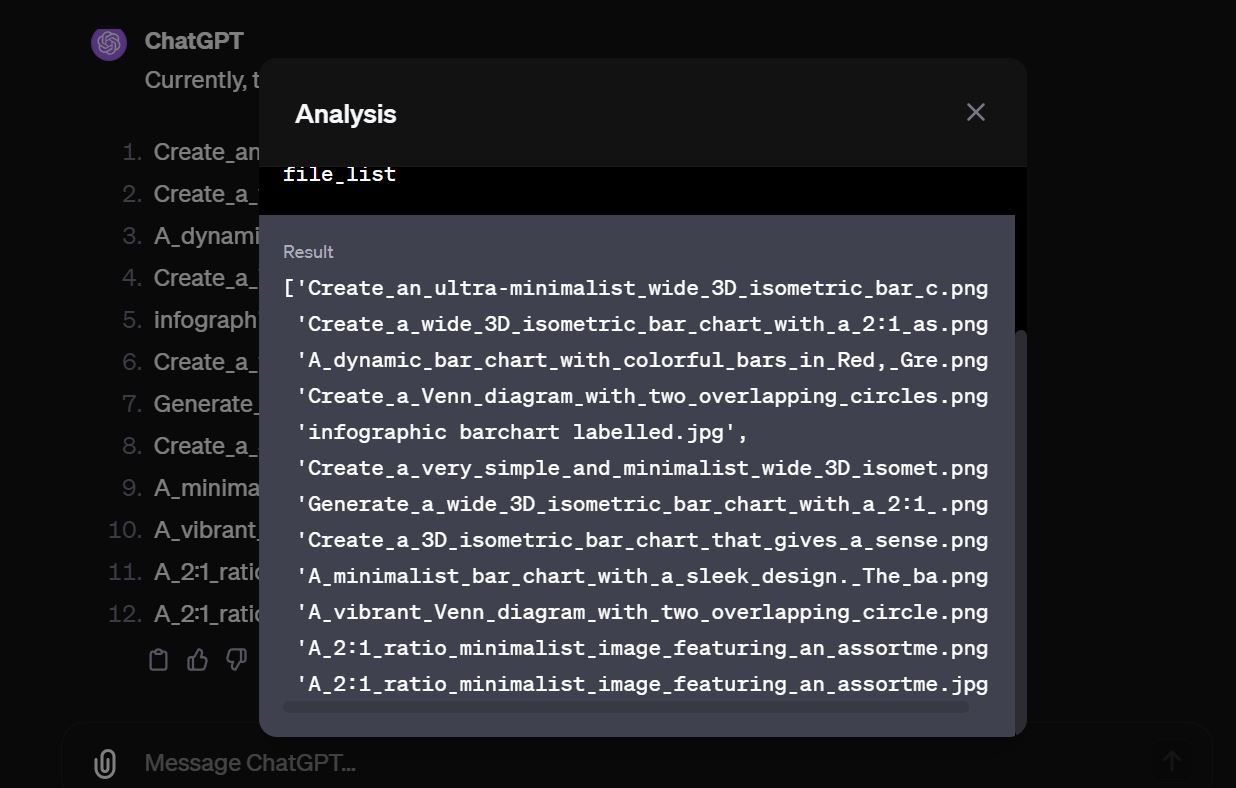

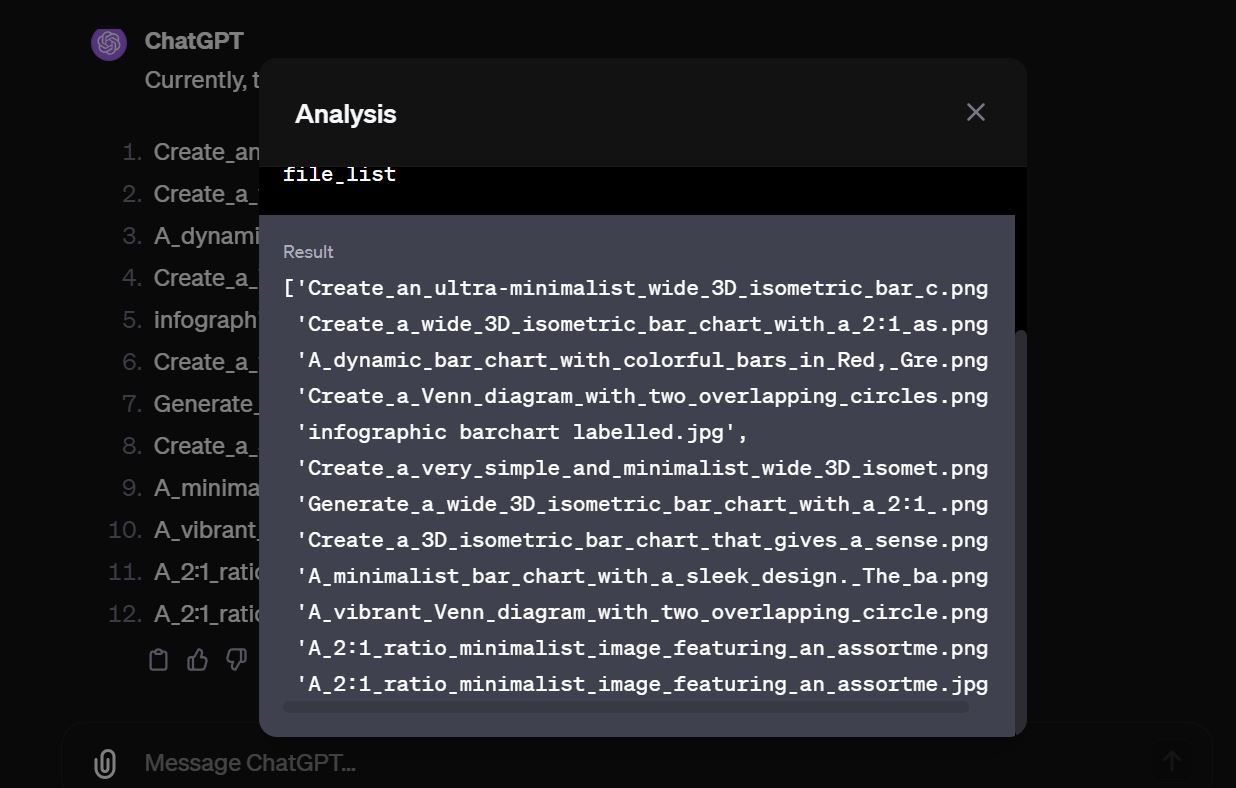

With the file name, it took little effort to get the GPT to print the exact content of the file and then offer the file itself for download. In this case, the actual file wasn’t sensitive. But after poking around a few more GPTs, I found many with dozens of files sitting in the open.

There are hundreds of publicly available GPTs out there that contain sensitive files that are just sitting there waiting for malicious actors to grab.

How to Protect Your Custom GPT Data

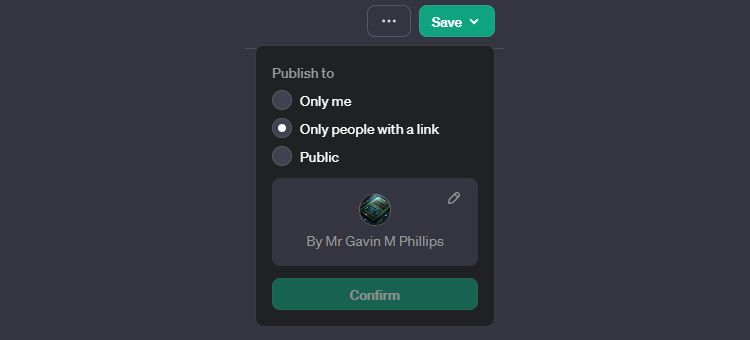

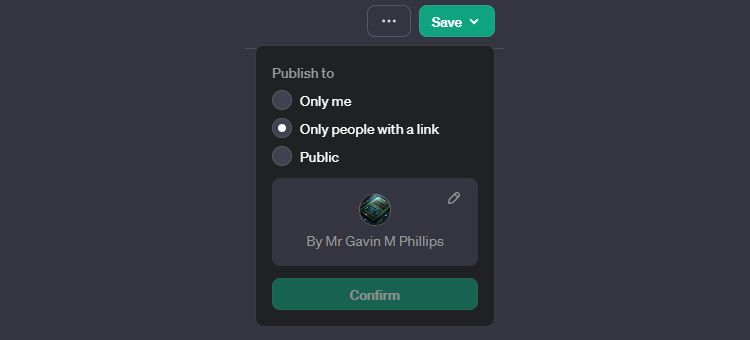

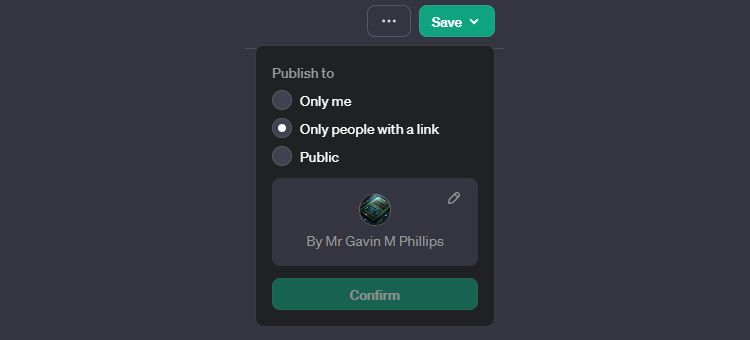

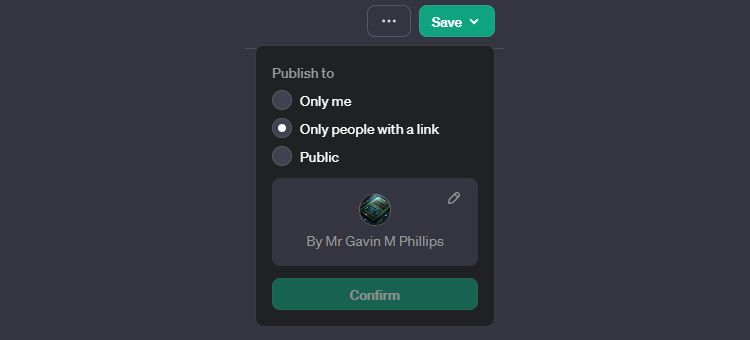

First, consider how you will share (or not!) the custom GPT you just created. In the top-right corner of the custom GPT creation screen, you’ll find the Save button. Press the dropdown arrow icon, and from here, select how you want to share your creation:

- Only me: The custom GPT is not published and is only usable by you

- Only people with a link: Anyone with the link to your custom GPT can use it and potentially access your data

- Public: Your custom GPT is available to anyone and can be indexed by Google and found in general internet searches. Anyone with access could potentially access your data.

Unfortunately, there’s currently no 100 percent foolproof way to protect the data you upload to a custom GPT that is shared publicly. You can get creative and give it strict instructions not to reveal the data in its knowledge base, but that’s usually not enough, as our demonstration above has shown. If someone really wants to gain access to the knowledge base and has experience with AI prompt engineering and some time, eventually, the custom GPT will break and reveal the data.

This is why the safest bet is not to upload any sensitive materials to a custom GPT you intend to share with the public. Once you upload private and sensitive data to a custom GPT and it leaves your computer, that data is effectively out of your control.
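One way to act on this advice is to scan files for obviously sensitive strings before uploading them. The sketch below is only an illustration under stated assumptions: the pattern set, the `find_sensitive` and `scan_folder` names, and the folder layout are all hypothetical, and a clean result does not prove a file is safe to share.

```python
import re
from pathlib import Path

# Hypothetical patterns for illustration only; a real review needs a broader, tuned set.
SENSITIVE_PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "possible secret key": re.compile(
        r"\b(?:sk|pk|api|key)[-_][A-Za-z0-9_-]{16,}\b", re.IGNORECASE
    ),
    "US-style SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_sensitive(text: str) -> list[str]:
    """Return the label of every pattern that matches somewhere in the text."""
    return [
        label
        for label, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(text)
    ]


def scan_folder(folder: str) -> dict[str, list[str]]:
    """Map each file under `folder` to the pattern labels found in it."""
    findings: dict[str, list[str]] = {}
    for path in Path(folder).rglob("*"):
        if path.is_file():
            # Undecodable bytes are ignored, so binary files are only partially checked.
            text = path.read_text(encoding="utf-8", errors="ignore")
            labels = find_sensitive(text)
            if labels:
                findings[str(path)] = labels
    return findings
```

Running `scan_folder` on the folder you plan to upload and reviewing every flagged file by hand is a reasonable first pass. Treat it as a reminder to look, not as a guarantee.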

Also, be very careful when using prompts you copy online. Make sure you understand them thoroughly and avoid obfuscated prompts that contain links. These could be malicious links that hijack, encode, and upload your files to remote servers.
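As a rough aid for reviewing a copied prompt, you could flag any links and long encoded-looking strings before pasting it into ChatGPT. This is a minimal sketch, not a complete defense: the `review_prompt` name, the 40-character threshold, and the patterns are assumptions chosen for illustration.

```python
import re

# Matches http/https links up to the next whitespace character.
URL_RE = re.compile(r"https?://\S+", re.IGNORECASE)
# Long unbroken runs of base64-style characters can indicate an encoded payload.
# The 40-character threshold is an arbitrary assumption.
BLOB_RE = re.compile(r"[A-Za-z0-9+/=_-]{40,}")


def review_prompt(prompt: str) -> dict[str, list[str]]:
    """Return the links and encoded-looking strings found in a prompt."""
    urls = URL_RE.findall(prompt)
    # Remove links first so a long URL is not also reported as a blob.
    without_urls = URL_RE.sub(" ", prompt)
    blobs = BLOB_RE.findall(without_urls)
    return {"urls": urls, "encoded_blobs": blobs}
```

If either list comes back non-empty, read the prompt closely and work out what each link or string is for before using it.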

Use Custom GPTs with Caution

Custom GPTs are a powerful but potentially risky feature. While they allow you to create customized models that are highly capable in specific domains, the data you use to enhance their abilities can be exposed. To mitigate risk, avoid uploading truly sensitive data to your Custom GPTs whenever possible. Additionally, be wary of malicious prompt engineering that can exploit certain loopholes to steal your files.

- Title: Defending Against Data Breaches in Personalized AI Systems

- Author: Brian

- Created at : 2024-10-19 17:32:02

- Updated at : 2024-10-26 18:27:58

- Link: https://tech-savvy.techidaily.com/defending-against-data-breaches-in-personalized-ai-systems/

- License: This work is licensed under CC BY-NC-SA 4.0.