Differentiating Authentic and Erroneous Machine Learning Outputs

Artificial Intelligence (AI) hallucination sounds perplexing. You’re probably thinking, “Isn’t hallucination a human phenomenon?” Well, yes, it used to be a solely human phenomenon until AI began exhibiting human-like capabilities such as facial recognition, self-learning, and speech recognition.

Unfortunately, AI took on some negative attributes, including hallucinations. So, is AI hallucination similar to the kind of hallucination humans experience?

What Is AI Hallucination?

Artificial intelligence hallucination occurs when an AI model generates outputs different from what is expected. Note that some AI models are trained to intentionally generate outputs unrelated to any real-world input (data).

For example, top AI text-to-art generators, such as DALL-E 2, can creatively generate novel images we can tag as “hallucinations” since they are not based on real-world data.

AI Hallucination in Large Language Models

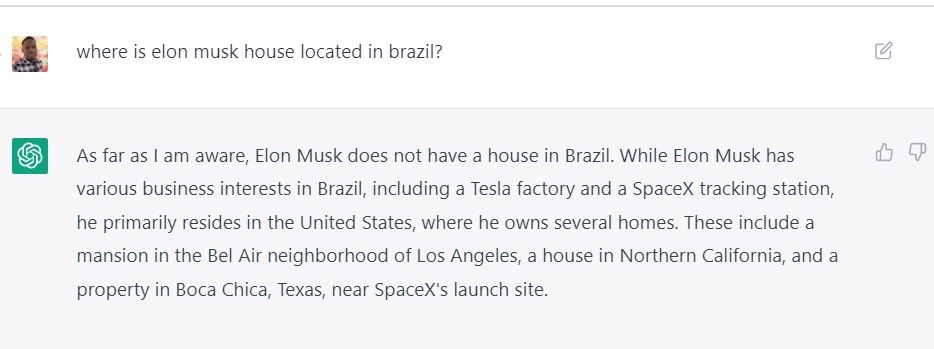

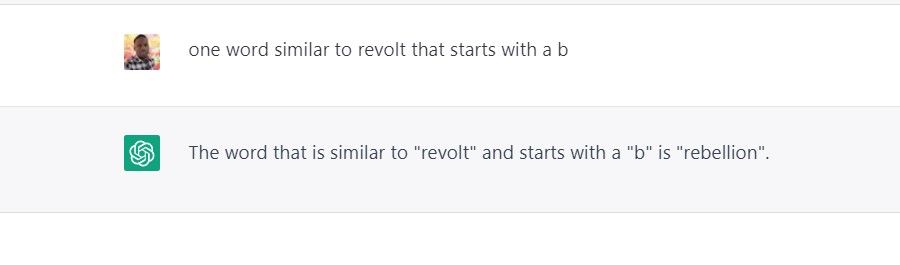

Let’s consider what AI hallucination looks like in a large language model such as ChatGPT. A ChatGPT hallucination occurs when the bot states an incorrect fact so confidently that you would naturally take it as true.

In simple terms, a hallucination is a made-up statement from the AI chatbot. Here’s an example:

On further query, ChatGPT came up with this:

AI Hallucination in Computer Vision

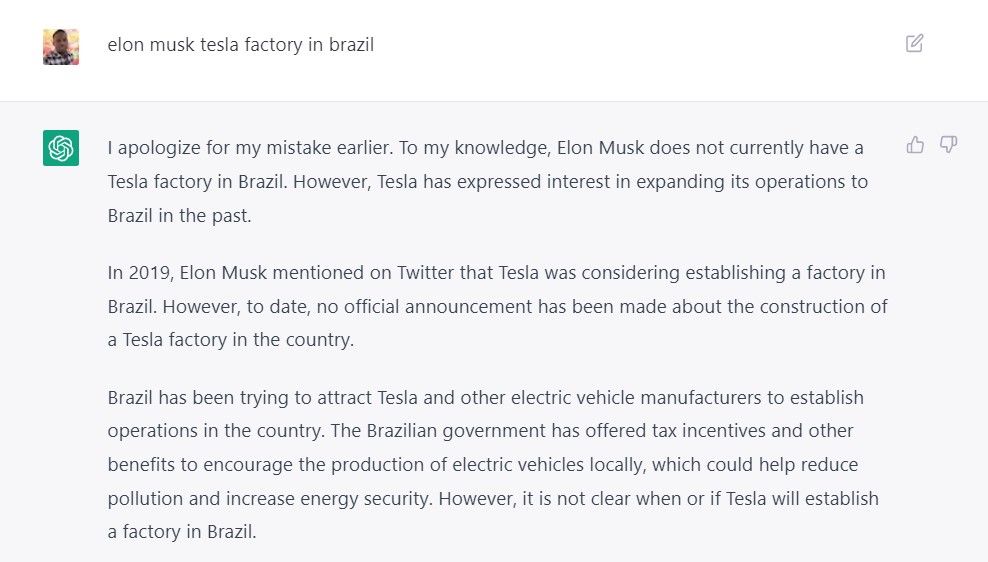

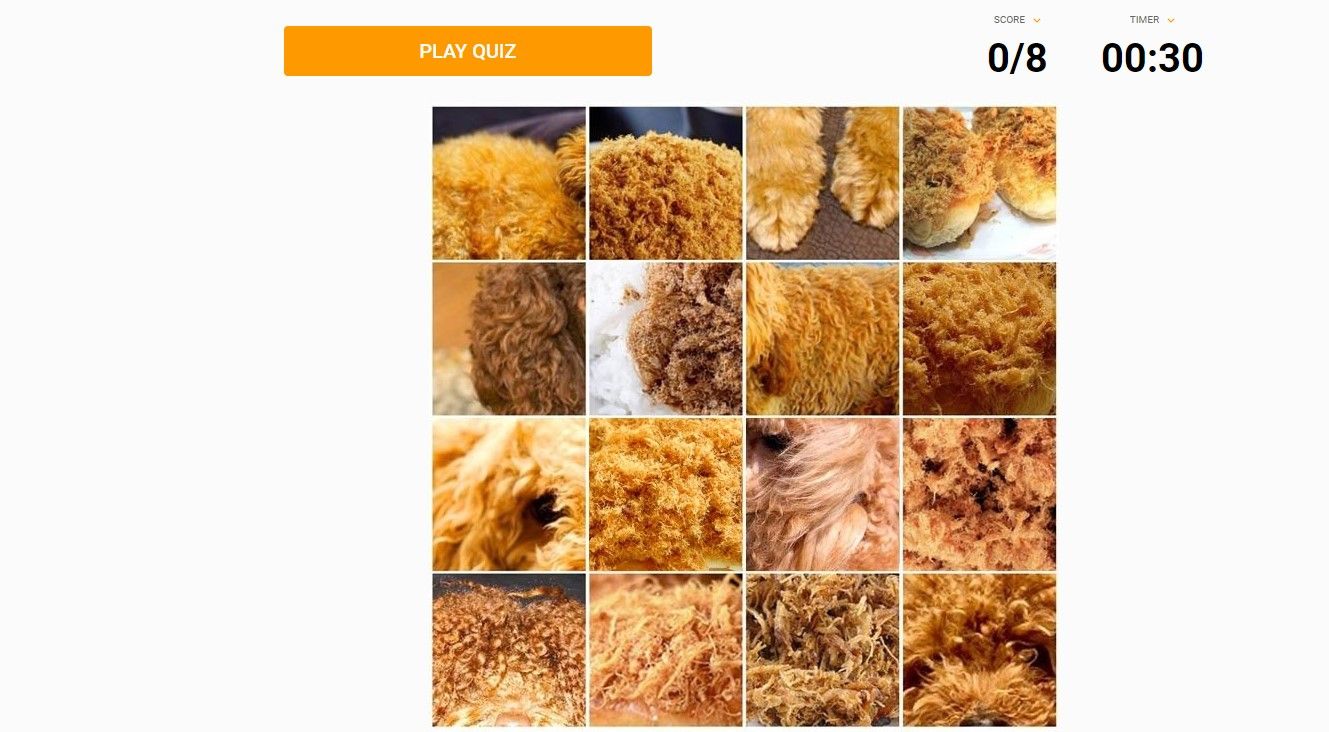

Let’s consider another field of AI that can experience hallucination: computer vision. The quiz below shows a 4x4 montage of two kinds of objects that look very much alike: BBQ potato chips and leaves.

The challenge is to select the potato chips without hitting any leaves. A computer might find this montage tricky and be unable to tell the BBQ potato chips from the leaves.

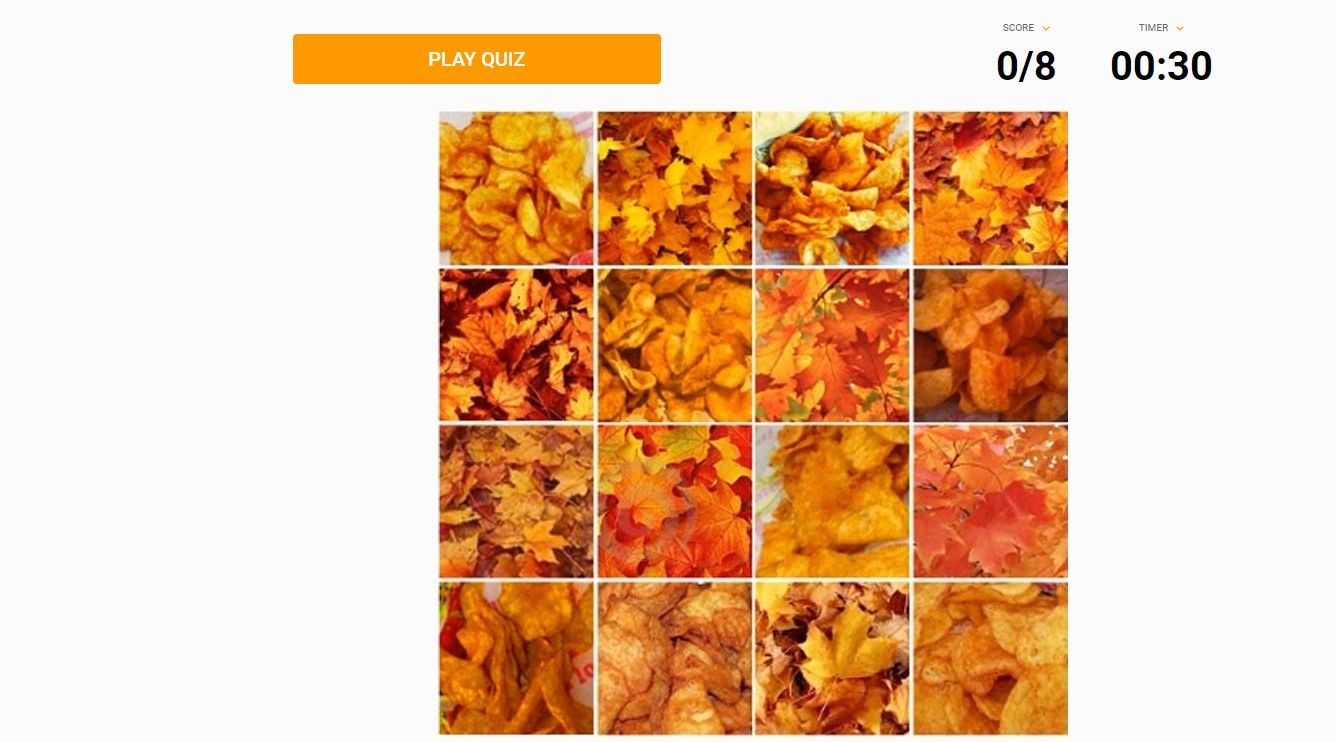

Here’s another montage mixing images of poodles and pork floss buns. A computer would most likely be unable to tell them apart and would confuse one for the other.

Why Does AI Hallucination Occur?

AI hallucination can occur due to adversarial examples: input data that has been changed or distorted so that it tricks an AI application into misclassifying it. An AI application learns from data (images, text, or others) during training; if an input is changed or distorted, even slightly, the application may interpret it differently and give a wrong output.

In contrast, a human can still recognize and identify the data accurately despite the distortions. We can tag this as common sense, a human attribute AI does not yet possess.
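
To make the idea of an adversarial example concrete, here is a minimal sketch, assuming a toy linear classifier with made-up weights and the hypothetical labels "cat" and "dog" (none of these values come from the article). It applies a fast-gradient-sign-style step: every input feature moves by at most `epsilon` in whichever direction lowers the score, and that bounded change is enough to flip the label.

```python
# Toy linear classifier: score = w . x + b; score > 0 means "cat", otherwise "dog".
# The weights, bias, and input below are hypothetical values for illustration.

def score(w, x, b):
    """Linear decision score."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(w, x, b):
    return "cat" if score(w, x, b) > 0 else "dog"

def sign(v):
    """Return +1, 0, or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_perturb(w, x, epsilon, direction):
    """Fast-gradient-sign-style step for a linear model.

    For score = w . x + b, the gradient with respect to x is just w, so moving
    each feature by epsilon * sign(w_i) changes the score as much as possible
    while no single feature changes by more than epsilon.
    direction = -1 pushes the score down, +1 pushes it up.
    """
    return [xi + direction * epsilon * sign(wi) for wi, xi in zip(w, x)]

w = [0.5, -0.3, 0.8, 0.1]
b = -0.2
x = [0.4, 0.2, 0.3, 0.5]  # original input

x_adv = fgsm_perturb(w, x, epsilon=0.15, direction=-1)

print(classify(w, x, b))      # cat
print(classify(w, x_adv, b))  # dog
```

Real attacks on image classifiers use the same principle with much smaller per-pixel changes spread over thousands of pixels, which is why the distorted image can look unchanged to a human.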

For large language models such as ChatGPT and its alternatives, hallucinations may arise from inaccurate decoding by the transformer (the underlying machine learning model).

In AI, a transformer is a deep learning model that uses self-attention (a mechanism that weighs how strongly each word in a sequence relates to every other word) to produce text similar to what a human would write. The original transformer design does this with an encoder-decoder (input-output) structure.
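
The self-attention step can be sketched in a few lines. This is a minimal, single-head version of scaled dot-product attention, assuming hypothetical 2-D word vectors that are reused directly as queries, keys, and values; a real transformer learns separate projections for each and stacks many heads and layers.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1 (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention: each position mixes all value vectors,
    weighted by how well its query matches every key."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        mixed = [sum(wt * v[i] for wt, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a three-word sentence.
tokens = ["the", "cat", "sat"]
emb = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]

out, weights = self_attention(emb, emb, emb)
for tok, row in zip(tokens, weights):
    print(tok, [round(p, 2) for p in row])
```

Each printed row shows how much attention one word pays to every word in the sentence; each row sums to 1.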

Transformers, trained in a semi-supervised way, can therefore generate a new body of text (output) from the large corpus of text data used in their training (input). They do so by predicting the next word in a sequence based on the previous words.
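
Next-word prediction ends in a decoding step that turns the model's scores into a choice. The sketch below assumes made-up scores for three candidate words and contrasts greedy decoding (always take the top word) with temperature sampling. It is an illustration of common decoding strategies, not ChatGPT's actual decoder: raising the temperature gives wrong candidates more probability, which is one way decoding can surface an incorrect word.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Convert raw next-word scores into probabilities.
    Lower temperature sharpens the distribution; higher temperature flattens it."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def greedy_pick(words, scores):
    """Greedy decoding: always take the single most likely next word."""
    best = max(range(len(words)), key=lambda i: scores[i])
    return words[best]

def sample_pick(words, scores, temperature, rng):
    """Sampling-based decoding: draw the next word according to its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]

# Hypothetical scores for the word after "The capital of France is".
words = ["Paris", "Lyon", "Berlin"]
scores = [5.0, 2.0, 1.0]

print(greedy_pick(words, scores))  # Paris
print([round(p, 3) for p in softmax(scores, 1.0)])
print([round(p, 3) for p in softmax(scores, 2.0)])

rng = random.Random(0)
draws = [sample_pick(words, scores, 2.0, rng) for _ in range(1000)]
print({word: draws.count(word) for word in words})
```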

Concerning hallucination, if a language model was trained on insufficient or inaccurate data, its output is likely to be made up and inaccurate. The model might generate a story or narrative with logical inconsistencies or unclear connections.
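
The effect of thin training data can be reproduced with something far simpler than a transformer. This sketch swaps in a bigram model (it looks only at the previous word, a big simplification of a transformer), trained on a hypothetical three-sentence corpus in which every sentence is true. Greedy next-word prediction still stitches the fragments into a fluent, confident, and false sentence.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count which word follows which across a (tiny) training corpus."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def generate(follows, start, max_words=10):
    """Greedily append the most frequent follower until a word has no known follower."""
    out = [start]
    while len(out) < max_words and follows[out[-1]]:
        nxt, _count = follows[out[-1]].most_common(1)[0]
        out.append(nxt)
    return " ".join(out)

# A deliberately tiny, hypothetical corpus: every sentence in it is true.
corpus = [
    "rome is the capital of italy",
    "paris is the capital of france",
    "berlin is a large city",
]

model = train_bigrams(corpus)
print(generate(model, "berlin"))  # berlin is the capital of italy
```

Nothing in the corpus says Berlin is the capital of Italy; the model produces it because "is" is most often followed by "the" and ties are broken by the order the words were first seen.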

In the example below, ChatGPT was asked to give a word similar to “revolt” that starts with “b.” Here is its response:

On further probing, it kept giving wrong answers, with a high level of confidence.

So why is ChatGPT unable to give an accurate answer to these prompts?

It could be that the language model is ill-equipped to handle prompts like these, or that it cannot interpret the prompt accurately and ignores the requirement that the similar word start with a specific letter.
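
When a prompt states explicit constraints, you can check an answer against them mechanically. This is a minimal sketch, assuming a hypothetical reference synonym list and hypothetical candidate answers (they are not ChatGPT's actual replies); a real check would rely on a thesaurus you trust.

```python
def check_answer(answer, required_letter, known_synonyms):
    """Return a list of problems with an answer to a
    'give a word similar to X that starts with letter Y' prompt."""
    problems = []
    word = answer.strip().lower()
    if not word.startswith(required_letter.lower()):
        problems.append(f"does not start with '{required_letter}'")
    if word not in known_synonyms:
        problems.append("not in the reference synonym list")
    return problems

# Hypothetical reference list of words similar to "revolt".
revolt_synonyms = {"rebellion", "uprising", "mutiny", "insurrection", "revolution"}

# Hypothetical candidate answers.
for candidate in ["rebellion", "bravado", "mutiny"]:
    issues = check_answer(candidate, "b", revolt_synonyms)
    print(candidate, "->", issues or "ok")
```

An empty problem list only means the answer passes these two checks; a word can still be a poor fit in context.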

How Do You Spot AI Hallucination?

It is evident now that AI applications can hallucinate, that is, generate responses that deviate from the expected output (fact or truth), without any malicious intent. Spotting and recognizing AI hallucinations is up to the users of such applications.

Here are some ways to spot AI hallucinations while using common AI applications:

1. Large Language Models

Although rare, a grammatical error in content produced by a large language model such as ChatGPT should raise an eyebrow and make you suspect a hallucination. Similarly, when generated text doesn’t sound logical, doesn’t fit the context given, or doesn’t match the input data, you should suspect a hallucination.

Using human judgment or common sense can help detect hallucinations, as humans can easily identify when a text does not make sense or follow reality.
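
Checking whether generated text matches the input data can be partly automated with a crude heuristic. This sketch assumes a small, hypothetical stopword list and an arbitrary `min_overlap` threshold: it flags generated sentences whose content words mostly do not appear in the context you supplied. It is not a fact checker; it only points human judgment at the sentences that deserve a closer look.

```python
import re

# Small, hypothetical stopword list; real tools use larger ones.
STOPWORDS = {"the", "a", "an", "is", "are", "was", "were", "of", "in",
             "on", "and", "to", "it", "by", "for", "with"}

def content_words(text):
    """Lowercased words minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def flag_unsupported(context, generated, min_overlap=0.5):
    """Flag generated sentences whose content words mostly do not appear in the context."""
    ctx = content_words(context)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", generated.strip()):
        words = content_words(sentence)
        if not words:
            continue
        overlap = len(words & ctx) / len(words)
        if overlap < min_overlap:
            flagged.append((sentence, round(overlap, 2)))
    return flagged

context = "The Eiffel Tower is in Paris. It was completed in 1889."
generated = "The Eiffel Tower is in Paris. It was designed by Leonardo da Vinci in 1750."
for sentence, overlap in flag_unsupported(context, generated):
    print(f"check this ({overlap:.0%} overlap): {sentence}")
```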

2. Computer Vision

As a branch of artificial intelligence, machine learning, and computer science, computer vision enables computers to recognize and process images the way human eyes do. Computer vision systems commonly use convolutional neural networks and rely on the vast amount of visual data used in their training.

A deviation from the patterns in the visual data used in training can result in hallucinations. For example, if a computer was not trained with images of a tennis ball, it could identify one as a green orange. Or if a computer recognizes a horse beside a human statue as a horse beside a real human, an AI hallucination has occurred.

So to spot a computer vision hallucination, compare the generated output with what a typical human would expect to see.
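
The tennis-ball case can be sketched with a classifier that only knows a few classes. This is a minimal sketch, assuming a nearest-centroid classifier with hypothetical two-number features (a stand-in chosen for brevity; the convolutional networks mentioned above are far more complex). A closed-set classifier must answer with a label it knows, so the tennis ball becomes an "orange"; a distance threshold is one simple way to flag an input as unlike the training data.

```python
import math

# Hypothetical features (roundness, fuzziness) averaged over training images.
CENTROIDS = {
    "orange": (0.9, 0.1),
    "apple": (0.8, 0.0),
    "banana": (0.1, 0.0),
}

def nearest(features):
    """Return (label, distance) for the closest known class."""
    return min(((label, math.dist(features, c)) for label, c in CENTROIDS.items()),
               key=lambda pair: pair[1])

def classify_closed_set(features):
    """Always answers with a known label, even for something never seen in training."""
    return nearest(features)[0]

def classify_with_reject(features, max_distance=0.3):
    """Answers 'unknown' when the input is far from every training class."""
    label, distance = nearest(features)
    return label if distance <= max_distance else "unknown"

tennis_ball = (0.95, 0.9)  # very round and very fuzzy: unlike anything in training
print(classify_closed_set(tennis_ball))   # orange
print(classify_with_reject(tennis_ball))  # unknown
```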

3. Self-Driving Cars

Image Credit: Ford

Thanks to AI, self-driving cars are gradually entering the auto market. Systems such as Tesla Autopilot and Ford’s BlueCruise have been championing self-driving technology. Looking at how and what Tesla Autopilot sees gives a sense of how AI powers self-driving cars.

If you own one of these cars, you would want to know if its AI is hallucinating. One sign is that your vehicle deviates from its normal behavior patterns while driving. For example, if the vehicle brakes or swerves suddenly without any obvious reason, your AI vehicle might be hallucinating.
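
"Deviating from normal behavior patterns" can be made concrete with a simple anomaly check. This sketch assumes hypothetical braking-deceleration readings and uses a z-score test against a baseline of normal driving, a deliberately simple stand-in rather than how any carmaker's system works. A flag is only a prompt to look closer: a hard brake for a real obstacle is correct behavior.

```python
import statistics

def flag_anomalies(readings, baseline, threshold=3.0):
    """Return indices of readings more than `threshold` standard deviations
    from the mean of a baseline of normal driving."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    return [i for i, r in enumerate(readings) if abs(r - mean) / stdev > threshold]

# Hypothetical braking decelerations (m/s^2) logged during normal driving.
baseline = [0.8, 1.1, 0.9, 1.3, 1.0, 0.7, 1.2, 0.9, 1.1, 1.0]
# Hypothetical new trip on a clear road: index 3 is a sudden hard brake.
trip = [1.0, 0.9, 1.2, 7.5, 1.1]

print(flag_anomalies(trip, baseline))  # [3]
```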

AI Systems Can Hallucinate Too

Humans and AI models experience hallucinations differently. In AI, hallucinations refer to erroneous outputs that are far removed from reality or do not make sense within the context of the given prompt. For example, an AI chatbot may give a grammatically or logically incorrect response, or a vision system may misidentify an object due to noise or other structural factors.

AI hallucinations do not result from a conscious or subconscious mind, as they do in humans. Rather, they result from inadequate or insufficient data used in training and programming the AI system.

- Title: Differentiating Authentic and Erroneous Machine Learning Outputs

- Author: Brian

- Created at : 2024-09-28 18:47:33

- Updated at : 2024-10-03 16:18:52

- Link: https://tech-savvy.techidaily.com/differentiating-authentic-and-erroneous-machine-learning-outputs/

- License: This work is licensed under CC BY-NC-SA 4.0.