Discovering AI's Roots: An Illustrative History

While the AI race only recently started, artificial intelligence and machine learning have been around longer than consumers realize. AI technologies play a crucial role in various industries. They accelerate research and development in healthcare, national security, logistics, finance, and retail, among other sectors.

AI has a rich, complex history. Here are some of the most notable breakthroughs that have shaped today’s most sophisticated AI models.

1300-1900: Tracing the Roots of AI

Electronic computers emerged in the 1940s, but historians trace the earliest references to AI back to the late Middle Ages. Scholars often speculated about future innovations, but they lacked the technological resources and skills to realize their ideas.

- 1305: Catalan theologian and mystic Ramon Llull began the Ars Generalis Ultima, the final version of his Ars Magna. The work details mechanical techniques for logical interreligious dialogue, including diagrams for deriving new propositions by combining existing concepts. This bears a loose resemblance to AI training.

- 1666: Gottfried Leibniz’s Dissertatio de arte combinatoria draws inspiration from Ars Magna. Rather than a physical device, it proposes deconstructing ideas into their simplest components so they can be combined and analyzed systematically. These simple building blocks are loosely comparable to the datasets that AI developers use.

- 1726: Jonathan Swift’s Gulliver’s Travels introduces the Engine, a fictional device that generates sets and permutations of words, enabling even “the most ignorant person” to write scholarly works on various subjects. Generative AI performs a strikingly similar function.

- 1854: In The Laws of Thought, English mathematician George Boole treats logical reasoning as a form of algebra. He argues that hypotheses can be formulated and problems analyzed through systematic equations. Likewise, generative AI relies on complex algorithms to produce output.

Although this first era spans six centuries, a few key moments stand out.

1900-1950: The Dawn of Modern AI

Technological development accelerated during this period. Growing access to computing resources enabled researchers to turn theories, imagined concepts, and speculation into working machines and models, laying the foundation for cybernetics.

- 1914: Spanish civil engineer Leonardo Torres y Quevedo unveiled El Ajedrecista, which translates to The Chess Player in English. It’s an early example of automation: the machine played a king-and-rook endgame against a human opponent’s lone king and could deliver checkmate automatically.

- 1943: Walter Pitts and Warren McCulloch developed a mathematical model of the biological neuron that could perform simple logical functions. Researchers continued building on this model for decades, and it paved the way for today’s neural networks and deep learning technologies.

- 1950: Alan Turing published Computing Machinery and Intelligence, one of the first research papers to tackle artificial intelligence, although he didn’t use the term AI. He referred instead to “machines” and “computing machinery.” The paper primarily examines whether machines can exhibit intelligence and logical reasoning.

- 1950: In the same paper, Turing proposed the “imitation game,” now known as the Turing Test. It’s one of the earliest and best-known methods for judging whether a machine’s behavior is indistinguishable from a human’s.
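The 1943 neuron model is simple enough to sketch in a few lines. The Python below is a minimal, illustrative threshold unit in the McCulloch-Pitts style, not a reproduction of the original paper’s notation: it takes binary inputs, multiplies them by fixed weights, and fires (outputs 1) only when the weighted sum reaches a threshold. The names `mp_neuron`, `and_gate`, `or_gate`, and `not_gate` are hypothetical, and representing inhibition as a negative weight is an assumption; in the 1943 model, any active inhibitory input blocked firing outright.

```python
def mp_neuron(inputs, weights, threshold):
    """Hypothetical McCulloch-Pitts style unit: fire (1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    # Both inputs must be active for the sum to reach a threshold of 2.
    return mp_neuron([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mp_neuron([a, b], [1, 1], threshold=1)


def not_gate(a):
    # Assumption: inhibition modeled as a negative weight with threshold 0,
    # a common simplification of the 1943 paper's absolute inhibitory inputs.
    return mp_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    # Print the truth tables produced by the same unit with different settings.
    for a in (0, 1):
        for b in (0, 1):
            print(f"a={a} b={b}  AND={and_gate(a, b)}  OR={or_gate(a, b)}")
    print(f"NOT 0 = {not_gate(0)}, NOT 1 = {not_gate(1)}")
```

Changing only the weights and threshold of one unit yields different logic gates, which is the sense in which these early model neurons “perform simple logical functions.”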

Fittingly, the dawn of modern AI culminates in Alan Turing’s paper and the Turing Test, which attempt to answer the question, “Can machines think?”

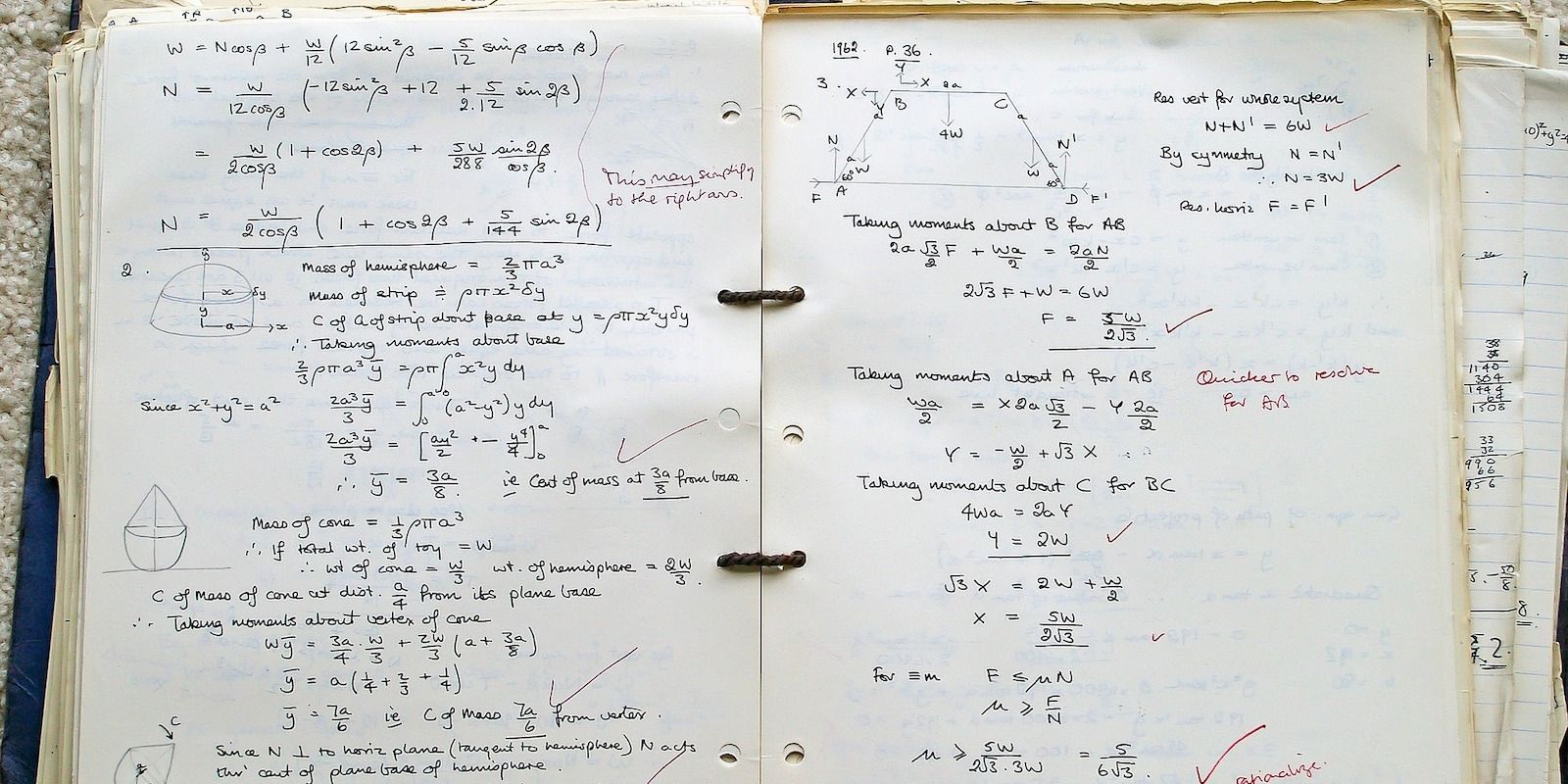

1951-2000: Exploring the Applications of AI Technologies

Image Credit: Ik T/Wikimedia Commons

The term “artificial intelligence” was coined during this period. After laying the groundwork for AI, researchers began exploring use cases, and various sectors experimented with the technology. It wasn’t commercially available yet; researchers focused on medical, industrial, and logistical applications.

- 1956: Scholars like Alan Turing and John von Neumann had already been researching ways to give machines logical reasoning, but the term AI comes from John McCarthy. It first appeared in the 1955 proposal for the Dartmouth Summer Research Project on Artificial Intelligence, written by McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. The workshop itself took place in the summer of 1956.

- 1966: Charles Rosen led the team at the Stanford Research Institute (SRI) that began building Shakey the robot. It’s arguably the first “intelligent” mobile robot, capable of executing simple tasks, recognizing patterns, and planning routes.

- 1997: IBM’s Deep Blue, a chess-playing system running on a specialized supercomputer, defeated reigning world champion Garry Kasparov in a six-game match. It was the first time a computer had beaten a world chess champion in a match under standard tournament time controls.

The middle period of AI development saw one of the most important moments of all: the coining of the term “artificial intelligence.”

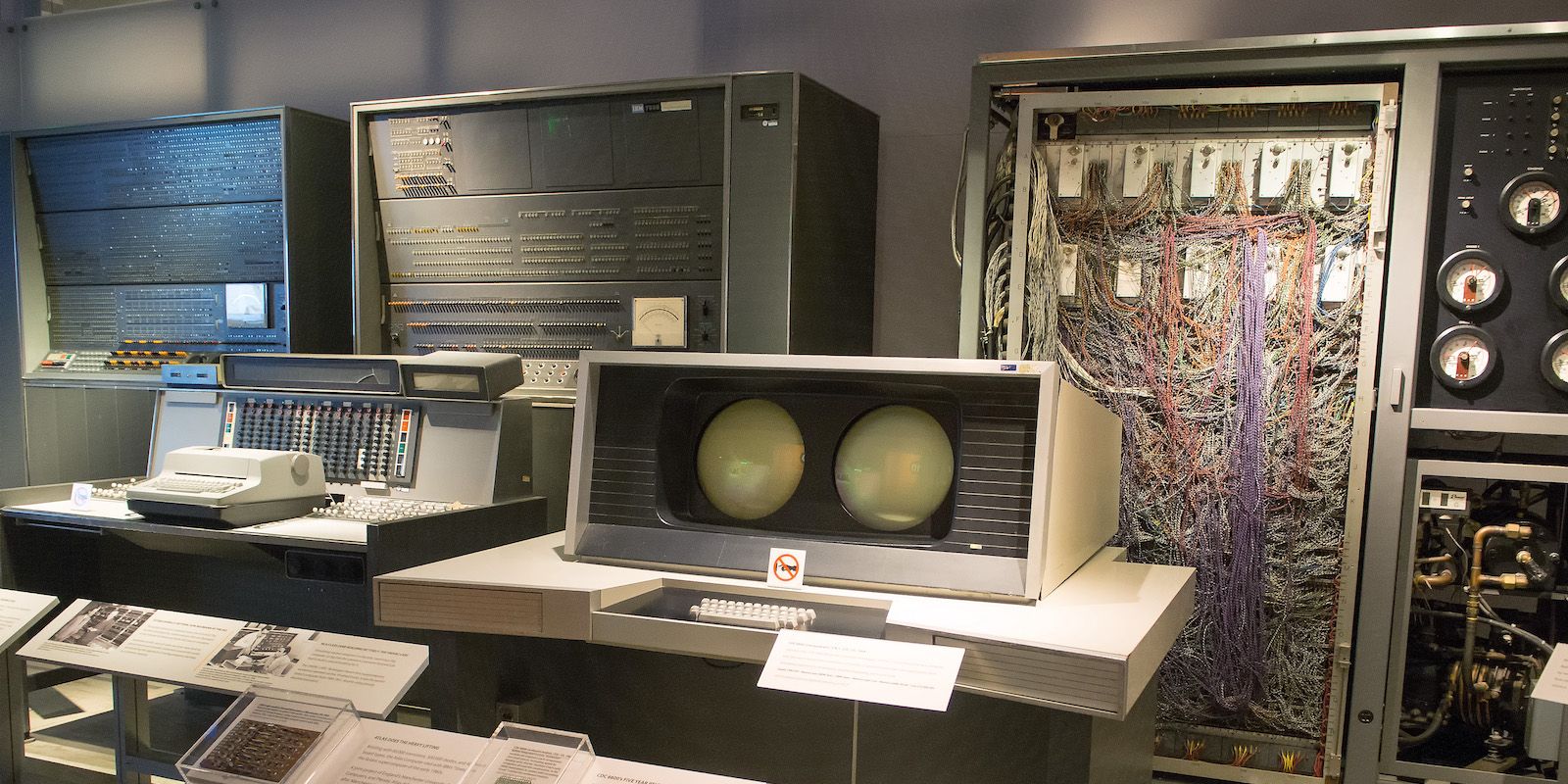

2001-2010: Integrating AI Into Modern Technologies

Image Credits: Carl Berkeley/Wikimedia Commons

Consumers gained access to groundbreaking technologies that made their lives more convenient, and they gradually adopted these new gadgets. The iPod replaced the Sony Walkman, gaming consoles put arcades out of business, and Wikipedia beat Encyclopædia Britannica.

- 2001: Honda developed ASIMO, a bipedal, AI-driven humanoid robot that could walk and, in later versions, run. ASIMO was never sold commercially; Honda primarily used it as a research platform for mobility, machine learning, and robotics.

- 2002: iRobot launched the Roomba, a floor-vacuuming robot. Despite its simple function, it relied on navigation algorithms far more sophisticated than those its predecessors used.

- 2006: Turing Center researchers Michele Banko, Oren Etzioni, and Michael Cafarella published a seminal paper on machine reading, which they define as a system’s capacity to understand text autonomously.

- 2008: Google released an iPhone app with speech recognition. It reportedly had a 92 percent accuracy rate, while its predecessors topped out at around 80 percent.

- 2009: Google began developing its driverless car. In 2012, one of its test vehicles received Nevada’s first autonomous vehicle license after passing the state’s self-driving test. Competitors would later enhance driverless vehicles with AI as well.

Interestingly, despite this period featuring some of the most iconic tech from recent decades, AI wasn’t fully on the radar for most consumers, with personal and home assistant tools like Siri and Alexa only appearing in the next period.

2011-2020: The Spread and Development of AI-Driven Applications

Companies began developing stable AI-driven solutions during this period, integrating AI into software and hardware such as virtual assistants, grammar checkers, laptops, smartphones, and augmented reality apps.

- 2011: IBM’s Watson, a question-answering computer system, competed on Jeopardy! against two former champions, Ken Jennings and Brad Rutter, to demonstrate its capabilities. Watson won.

- 2011: Apple released Siri. It’s a sophisticated AI-driven virtual assistant that iPhone owners still use regularly.

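- 2012: University of Toronto researchers developed AlexNet, an image recognition system that achieved nearly 85 percent top-5 accuracy in the ImageNet Large Scale Visual Recognition Challenge. By comparison, older approaches had error rates of around 25 percent.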

- 2016: Go grandmaster Lee Sedol, one of the world’s top players, played five games against AlphaGo, a Go-playing computer system developed by Google DeepMind. Lee lost four of the five games. The match demonstrated that properly trained AI systems can outperform even highly skilled professionals in specific domains.

- 2018: OpenAI developed GPT-1, the first language model in the GPT family. It was trained on the BookCorpus dataset and could generate natural-language text and, after fine-tuning, handle tasks such as question answering.

During this period, consumers often used AI applications without realizing it, although visual and voice recognition tools were still new to most people. Toward the end of the decade, AI development kicked up a notch, but still not as dramatically as what was to come.

2021-Present: Global Tech Leaders Kick off the Great AI Race

The great AI race has begun. Developers are releasing language models, and companies are researching ways to integrate AI with their products. At this rate, almost every consumer product will have an AI component.

- 2022: OpenAI made waves with ChatGPT, a sophisticated AI-driven chatbot powered by GPT-3.5, an iteration of the GPT model it first developed in 2018. Its GPT-3 predecessor was trained on roughly 300 billion tokens (word fragments) of text.

- 2023: Other global tech companies followed suit. Google launched Bard, Microsoft released Bing Chat, Meta released its LLaMA language model to researchers, and OpenAI released its upgraded GPT-4 model.

Numerous other AI web apps and AI-based health apps are also available or in development, with many more to come.

How AI Will Shape the Future

AI technologies go beyond chatbots and image generators. They contribute to the advancement of various fields, from global security to consumer technology. You benefit from AI in more ways than you realize. So instead of rejecting publicly available AI systems, learn to utilize them yourself.

Jumpstart your research with simple AI tools like ChatGPT or Bing Chat. Incorporate them into your daily life. Powerful language models can compose challenging emails, research SEO keywords, solve math questions, and answer general knowledge questions.

- Title: Discovering AI's Roots: An Illustrative History

- Author: Brian

- Created at : 2024-12-02 19:14:51

- Updated at : 2024-12-06 17:43:02

- Link: https://tech-savvy.techidaily.com/discovering-ais-roots-an-illustrative-history/

- License: This work is licensed under CC BY-NC-SA 4.0.