Exploring the Evolution of OpenAI's GPT

OpenAI has made significant strides in natural language processing (NLP) through its GPT models. From GPT-1 to GPT-4, these models have been at the forefront of AI-generated content, from creating prose and poetry to chatbots and even coding.

But what are the differences between the GPT models, and what has been their impact on the field of NLP?

What Are Generative Pre-Trained Transformers?

Generative Pre-trained Transformers (GPTs) are a type of machine learning model used for natural language processing tasks. These models are pre-trained on massive amounts of data, such as books and web pages, to generate contextually relevant and semantically coherent language.

In simpler terms, GPTs are computer programs that can create human-like text without being explicitly programmed to do so. Because they learn general language patterns during pre-training, they can then be fine-tuned for a range of natural language processing tasks, including question-answering, language translation, and text summarization.
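To make the "pre-train, then generate" idea concrete, here is a deliberately tiny sketch. It is not how GPT works internally: it swaps the Transformer for simple word-pair counts, and the function names `pretrain` and `generate` are made up for illustration. What it does share with GPT is the overall loop of learning from raw text first and then producing new text one token at a time.

```python
from collections import Counter, defaultdict

def pretrain(corpus: str) -> dict:
    """Count which word follows which: a toy stand-in for pre-training."""
    counts = defaultdict(Counter)
    words = corpus.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def generate(counts: dict, prompt: str, max_new_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        candidates = counts.get(words[-1])
        if not candidates:
            break  # the last word never appeared in the training text
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Assumption: a three-sentence "training set" stands in for books and web pages.
corpus = "the model reads text . the model writes text . the model reads text ."
counts = pretrain(corpus)
print(generate(counts, "the", max_new_words=3))  # prints "the model reads text"
```

A real GPT model replaces the count table with billions of learned parameters and predicts subword tokens rather than whole words, but the generation loop has the same shape.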

So, why are GPTs important? GPTs represent a significant breakthrough in natural language processing, allowing machines to understand and generate language with unprecedented fluency and accuracy. Below, we explore the four GPT models, from the first version to the most recent GPT-4, and examine their performance and limitations.

GPT-1

GPT-1 was released in 2018 by OpenAI as its first language model based on the Transformer architecture. It had 117 million parameters and improved significantly on previous state-of-the-art language models.

One of the strengths of GPT-1 was its ability to generate fluent and coherent language when given a prompt or context. The model was trained on the BookCorpus dataset, a collection of thousands of unpublished books (over 7,000, according to the GPT-1 paper) spanning a variety of genres. The long stretches of contiguous text in these books allowed GPT-1 to develop strong language modeling abilities.

While GPT-1 was a significant achievement in natural language processing (NLP), it had certain limitations. For example, the model was prone to generating repetitive text, especially when given prompts outside the scope of its training data. It also failed to reason over multiple turns of dialogue and could not track long-term dependencies in text. Additionally, its fluency held up only over shorter text sequences, and longer passages would lack cohesion.

Despite these limitations, GPT-1 laid the foundation for larger and more powerful models based on the Transformer architecture.

GPT-2

GPT-2 was released in 2019 by OpenAI as a successor to GPT-1. It contained 1.5 billion parameters, more than ten times as many as GPT-1. The model was trained on WebText, a dataset of roughly 8 million web pages that OpenAI collected from outbound links shared on Reddit, which made its training data much larger and more diverse than GPT-1’s.

One of the strengths of GPT-2 was its ability to generate coherent and realistic sequences of text. In addition, it could generate human-like responses, making it a valuable tool for various natural language processing tasks, such as content creation and translation.

However, GPT-2 was not without its limitations. It struggled with tasks that required more complex reasoning and understanding of context. While GPT-2 excelled at short paragraphs and snippets of text, it failed to maintain context and coherence over longer passages.

These limitations paved the way for the development of the next iteration of GPT models.

GPT-3

Natural language processing models took a major leap with the release of GPT-3 in 2020. With 175 billion parameters, GPT-3 is roughly 1,500 times larger than GPT-1 and over 100 times larger than GPT-2.
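The size comparison can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the parameter counts quoted in this article; the `PARAMS` table and `size_ratio` helper are illustrative names, not part of any library.

```python
# Parameter counts as quoted in this article.
PARAMS = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}

def size_ratio(larger: str, smaller: str) -> float:
    """Return how many times more parameters `larger` has than `smaller`."""
    return PARAMS[larger] / PARAMS[smaller]

for older in ("GPT-1", "GPT-2"):
    ratio = size_ratio("GPT-3", older)
    print(f"GPT-3 has about {ratio:,.0f}x the parameters of {older}")
# GPT-3 has about 1,496x the parameters of GPT-1
# GPT-3 has about 117x the parameters of GPT-2
```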

GPT-3 was trained on a diverse range of data sources, including a filtered version of Common Crawl, WebText2, two book corpora, and English-language Wikipedia. Together, these sources amount to hundreds of billions of tokens, allowing GPT-3 to generate sophisticated responses on a wide range of NLP tasks, often with only a few examples in the prompt or none at all.

One of the main improvements of GPT-3 over its predecessors is its ability to generate coherent text over longer passages and to write computer code. Compared with the previous models, GPT-3 is far better at picking up the context of a given text and generating appropriate responses. The ability to produce natural-sounding text has huge implications for applications like chatbots, content creation, and language translation. One such example is ChatGPT, a conversational AI bot built on the GPT-3.5 series, which went from obscurity to fame almost overnight.

While GPT-3 can do some incredible things, it still has flaws. For example, the model can return biased, inaccurate, or inappropriate responses. This issue arises because GPT-3 is trained on massive amounts of text that may contain biased and inaccurate information. There are also instances when the model generates text that is entirely irrelevant to the prompt, indicating that it still has difficulty understanding context and background knowledge.

The capabilities of GPT-3 also raised concerns about the ethical implications and potential misuse of such powerful language models. Experts worry about the possibility of the model being used for malicious purposes, like generating fake news, phishing emails, and malware. Indeed, we’ve already seen criminals use ChatGPT to create malware.

OpenAI also released an improved version of GPT-3, GPT-3.5, before officially launching GPT-4.

GPT-4

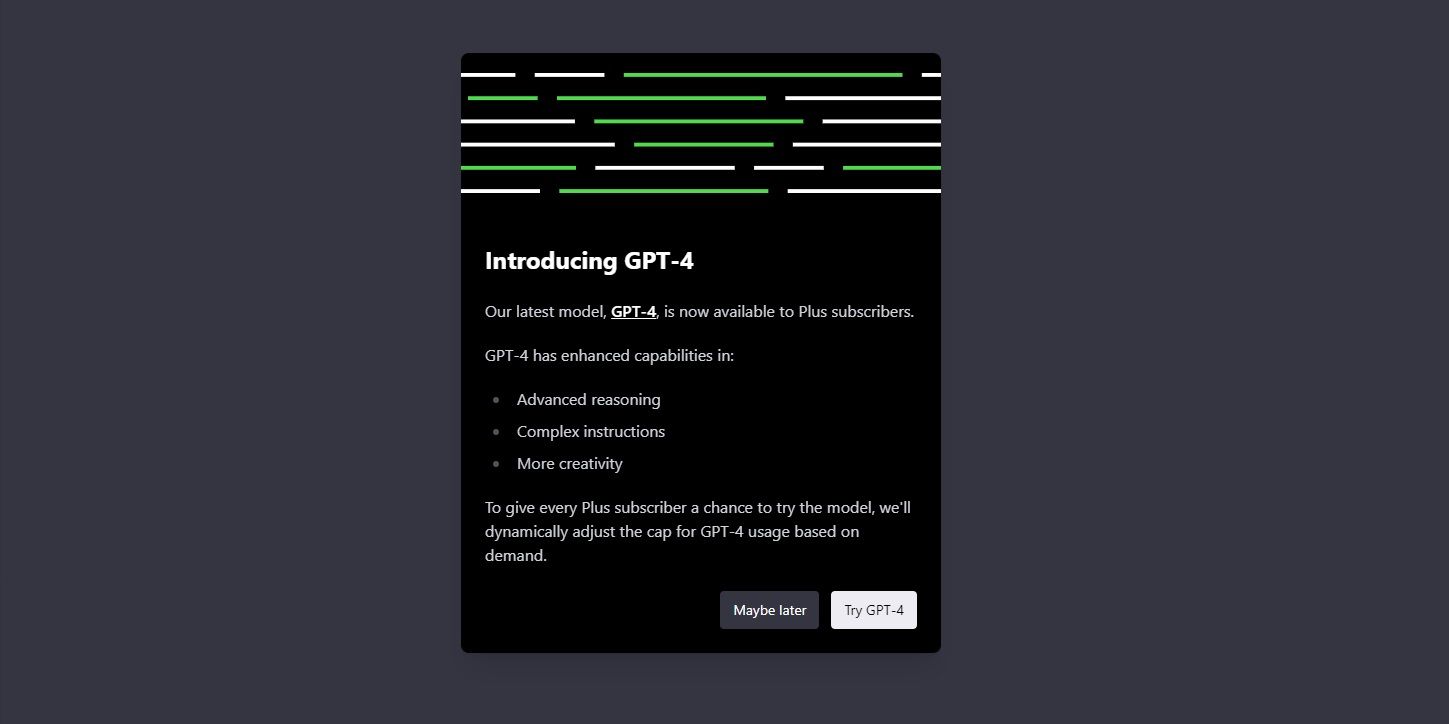

GPT-4 is the latest model in the GPT series, launched on March 14, 2023. It’s a significant step up from its predecessors, GPT-3 and GPT-3.5, which were already impressive. While OpenAI has not disclosed the specifics of the model’s training data and architecture, GPT-4 builds upon the strengths of GPT-3 and overcomes some of its limitations.

GPT-4 is available to ChatGPT Plus users, though usage is capped. You can also gain access to it by joining the GPT-4 API waitlist, which might take some time due to the high volume of applications. However, the easiest way to get your hands on GPT-4 is by using Microsoft Bing Chat. It’s completely free and there’s no need to join a waitlist.

A standout feature of GPT-4 is its multimodal capabilities. This means that the model can now accept an image as input and interpret it much like a text prompt. For example, during the GPT-4 launch live stream, an OpenAI engineer fed the model an image of a hand-drawn website mockup, and the model produced working code for the website.

The model also better understands complex prompts and exhibits human-level performance on several professional and academic benchmarks. Additionally, it has a larger context window, which refers to the amount of text (measured in tokens) the model can take into account at once during a chat session.
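The practical effect of a context window can be sketched in a few lines: once a conversation grows past the limit, the oldest messages no longer fit and fall out of view. The example below is only an illustration under stated assumptions. It counts one token per whitespace-separated word, whereas real GPT models use subword tokenizers, and the helper names `count_tokens` and `fit_to_context` are hypothetical.

```python
def count_tokens(text: str) -> int:
    # Assumption: one whitespace-separated word counts as one token.
    return len(text.split())

def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits the window."""
    kept = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))

history = ["hello there", "how are you today", "tell me about GPT models"]
print(fit_to_context(history, max_tokens=9))
# ['how are you today', 'tell me about GPT models']
```

With a larger `max_tokens`, the first message would survive as well, which is the sense in which a bigger context window lets a model "remember" more of a session.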

GPT-4 is pushing the boundaries of what is currently possible with AI tools, and it will likely have applications in a wide range of industries. However, as with any powerful technology, there are concerns about its potential misuse and ethical implications.

| Model | Launch Date | Training Data | No. of Parameters | Max. Sequence Length (tokens) |
|---|---|---|---|---|
| GPT-1 | June 2018 | BookCorpus | 117 million | 512 |
| GPT-2 | February 2019 | WebText | 1.5 billion | 1,024 |
| GPT-3 | June 2020 | Common Crawl (filtered), WebText2, Books1, Books2, Wikipedia | 175 billion | 2,048 |
| GPT-4 | March 2023 | Not disclosed | Not disclosed | 8,192 (32,768 in the extended version) |

A Journey Through GPT Language Models

GPT models have revolutionized the field of AI and opened up a new world of possibilities. Moreover, the sheer scale, capability, and complexity of these models have made them incredibly useful for a wide range of applications.

However, as with any technology, there are potential risks and limitations to consider. The ability of these models to generate highly realistic text and working code raises concerns about potential misuse, particularly in areas such as malware creation and disinformation.

Nonetheless, as GPT models evolve and become more accessible, they’ll play a notable role in shaping the future of AI and NLP.

- Title: Exploring the Evolution of OpenAI's GPT

- Author: Brian

- Created at : 2024-10-01 19:15:16

- Updated at : 2024-10-03 16:58:54

- Link: https://tech-savvy.techidaily.com/exploring-the-evolution-of-openais-gpt/

- License: This work is licensed under CC BY-NC-SA 4.0.