Exploring the Finest Free AI Creation Software

Disclaimer: This post includes affiliate links

If you click on a link and make a purchase, I may receive a commission at no extra cost to you.

AI-based text-to-image generation models are everywhere and becoming easier to access daily. While it’s easy just to visit a website and generate the image you’re looking for, open-source text-to-image generators are your best bet if you want more control over the generation process.

There are dozens of free and open-source AI text-to-image generators available on the internet that specialize in specific kinds of images. So, we’ve sifted through the pile and found the best open-source AI text-to-image generators you can try right now.

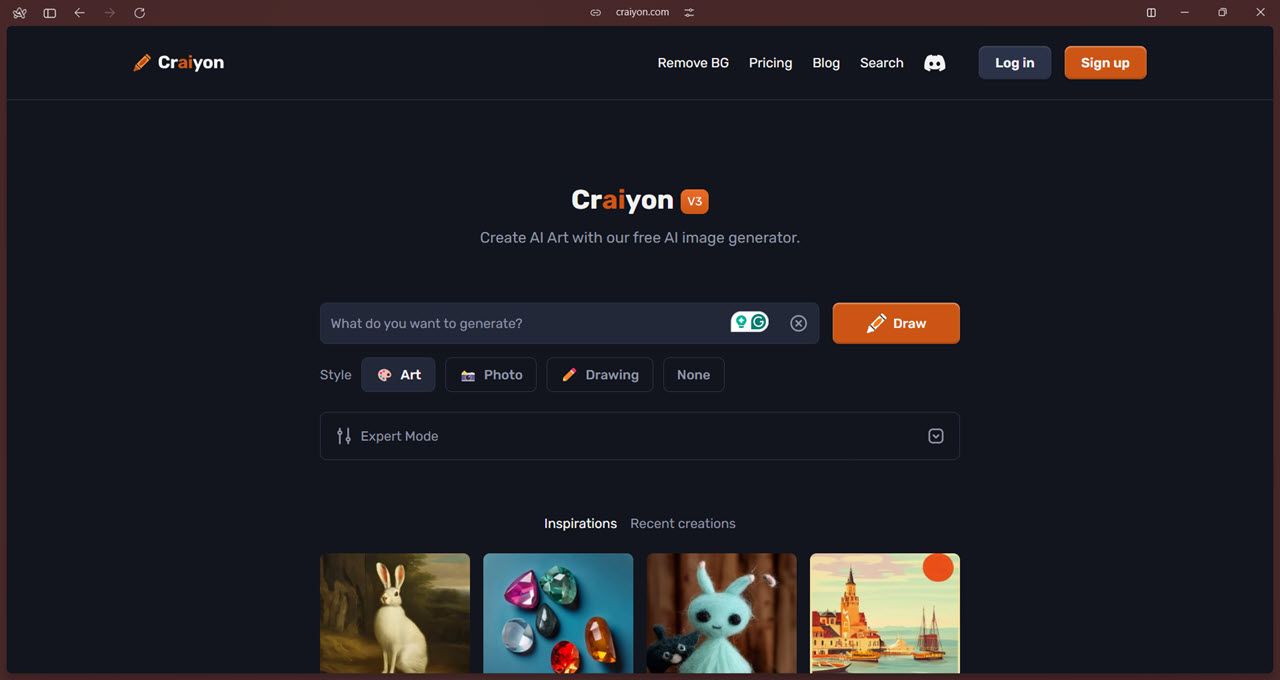

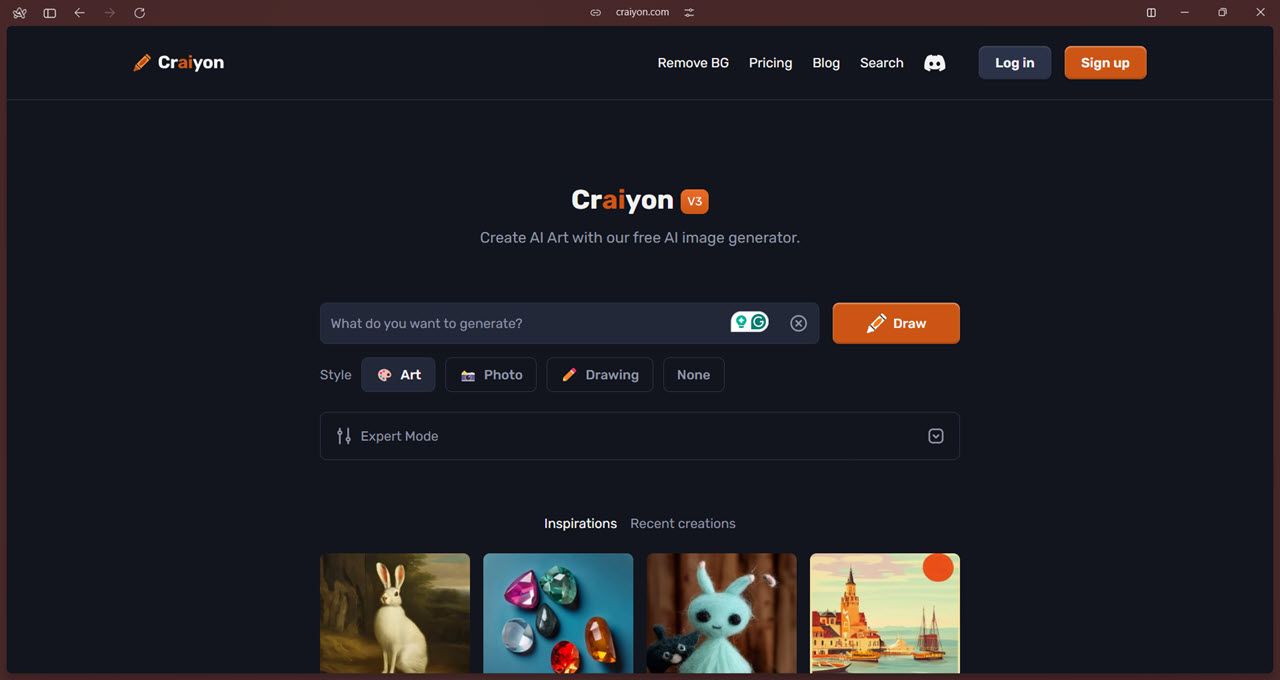

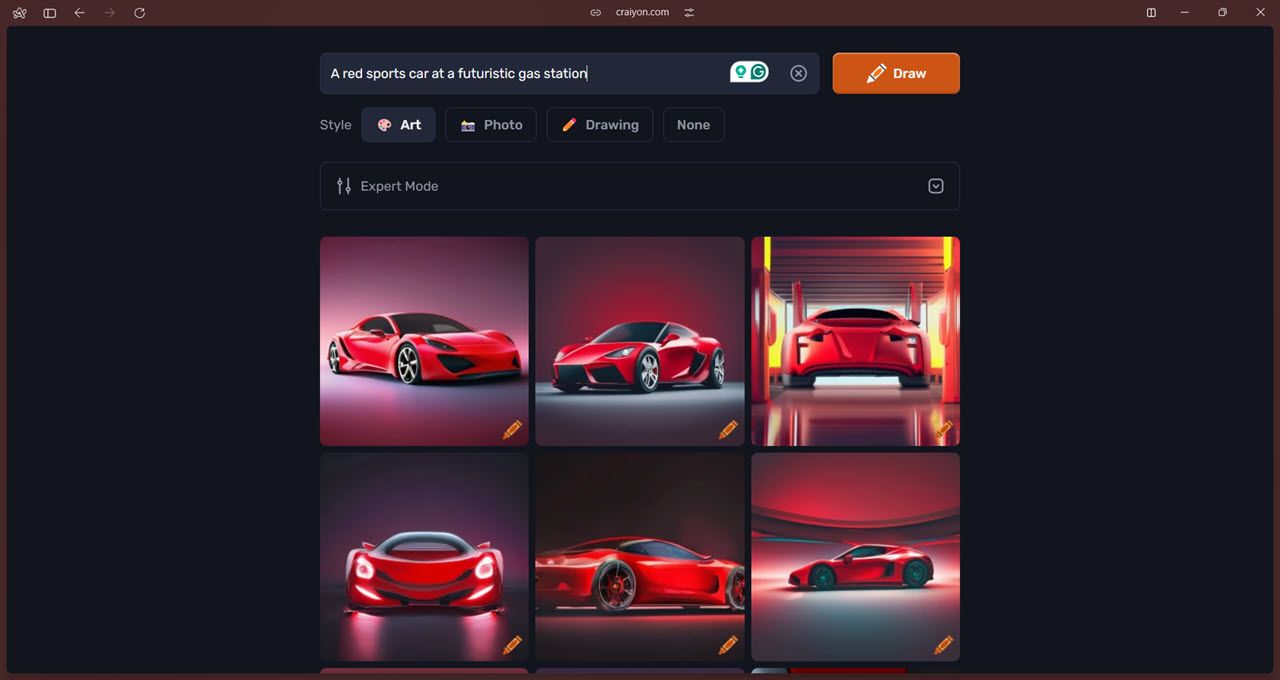

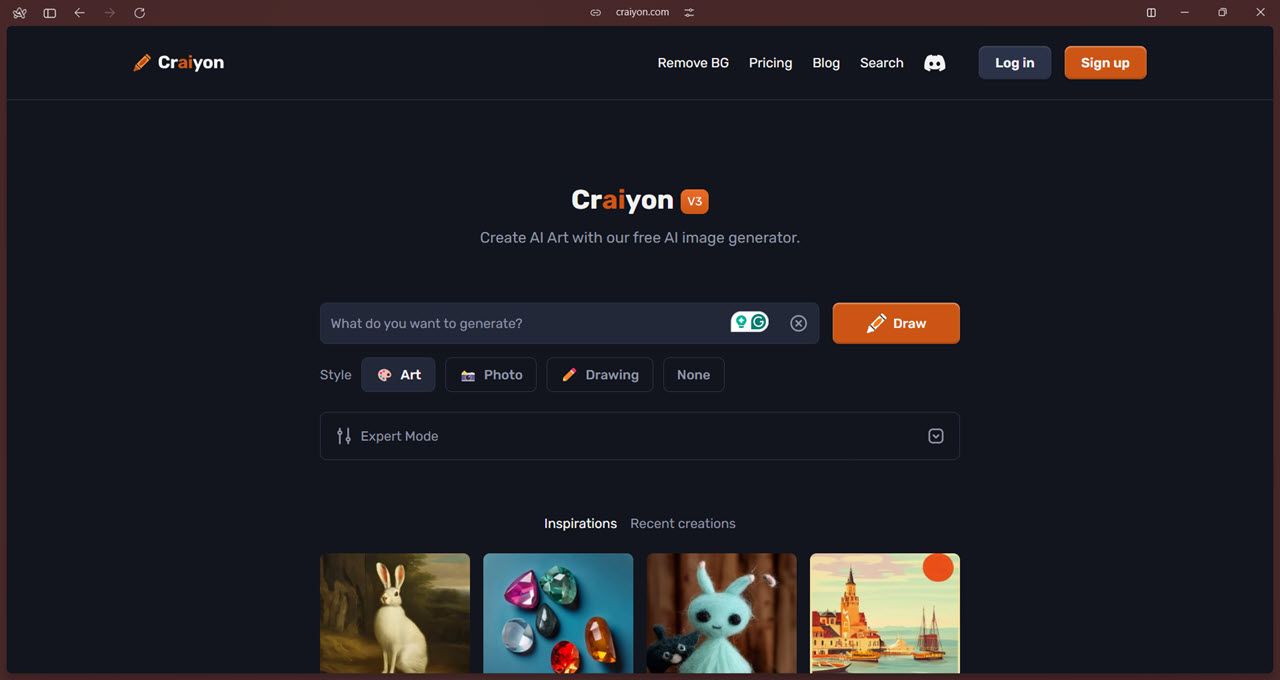

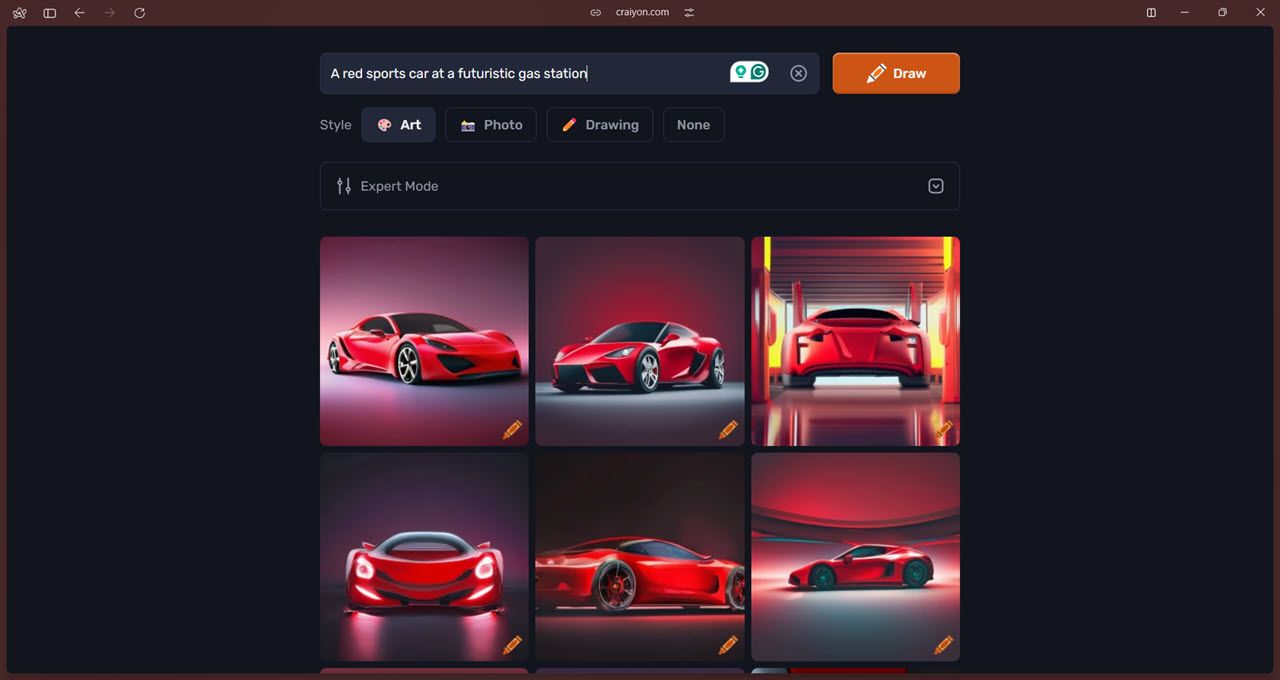

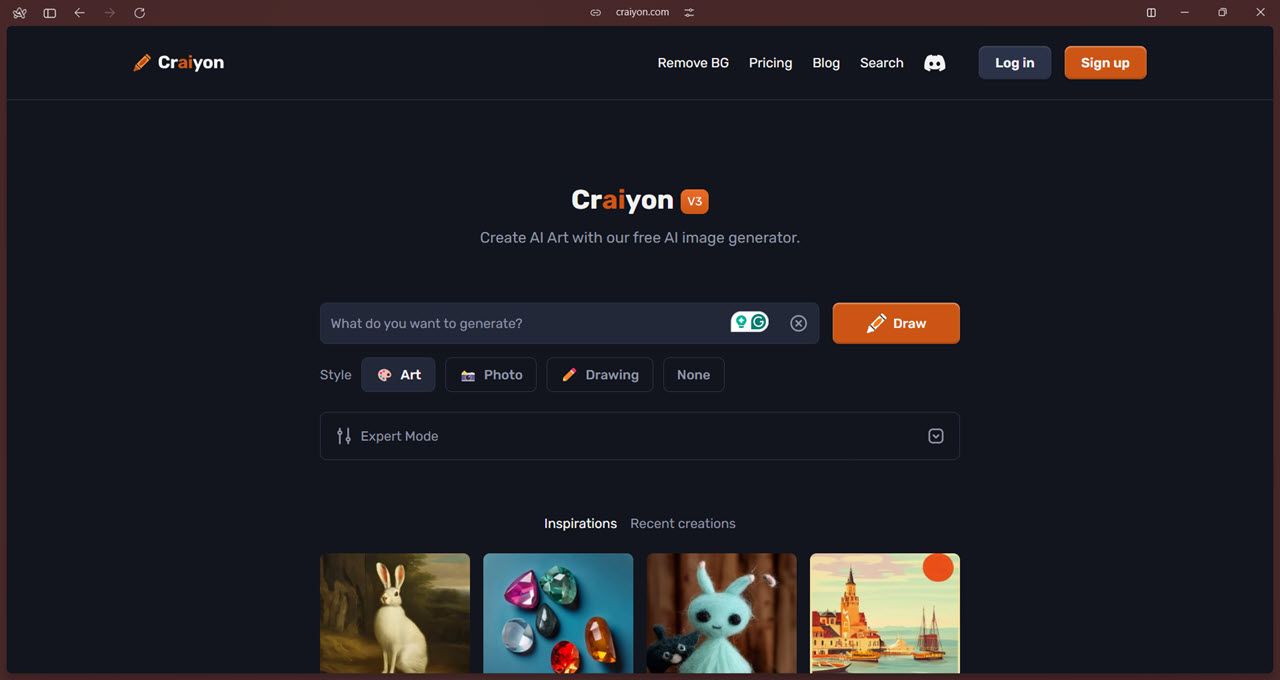

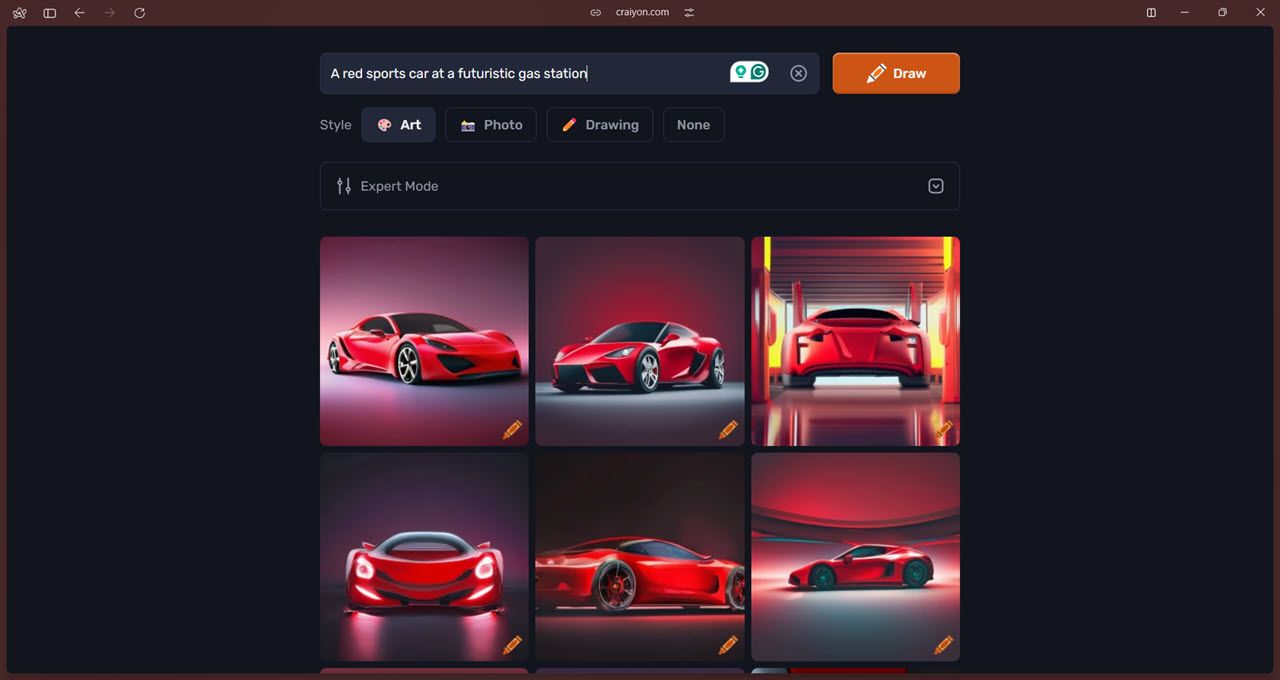

1 Craiyon

Craiyon is one of the most easily accessible open-source AI image generators. It’s based on DALL-E Mini, and while you can clone the GitHub repository and install the model locally on your computer, Craiyon seems to have dropped this approach in favor of its website.

The official GitHub repository hasn’t been updated since June 2022, but the latest model is still available for free on the official Craiyon site. There are no Android or iOS apps either.

In terms of functionality, you’ll see all the usual options that you expect from an AI image generator. Once you enter your prompt and get an image, you can use the upscale feature to get a higher-resolution copy. There are three styles to choose from: Art, Photo, and Drawing. You can also select the “None” option if you want the model to decide.

Additionally, “Expert Mode” lets you include negative words, which tell the model to avoid specific items. There’s also a prompt prediction feature, which uses ChatGPT to help users write the best, most detailed prompts possible. Lastly, the AI-powered background removal feature can save you the time and effort of cropping backgrounds out of images.

And that’s about everything Craiyon does. It’s not the most sophisticated AI image generation model, but it does well as a basic model if you don’t want something detailed or realistic.

The model is free to use, but free users are limited to nine images at a time, generated within a minute. You can subscribe to the Supporter or Professional tiers (priced at $5 and $20 a month, respectively, and billed annually) to get no ads or watermarks, faster generation, and the option to keep your generated images private. A Custom subscription tier also allows custom models, integration, dedicated support, and private servers.

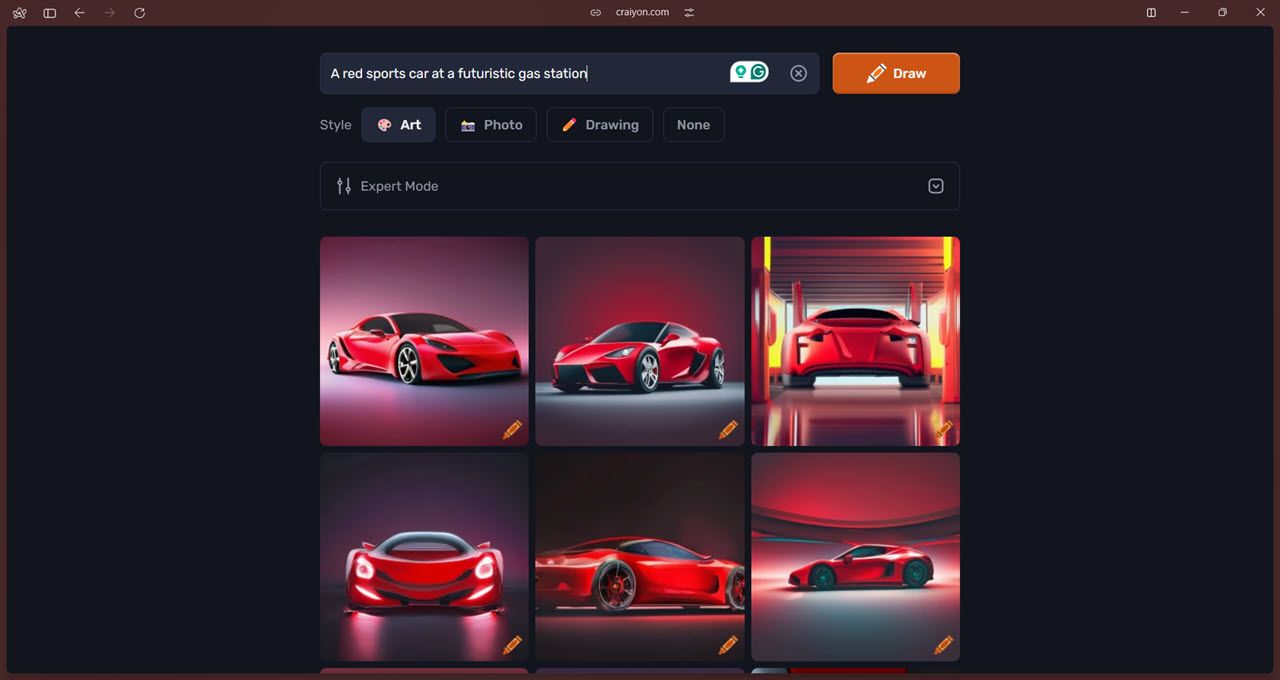

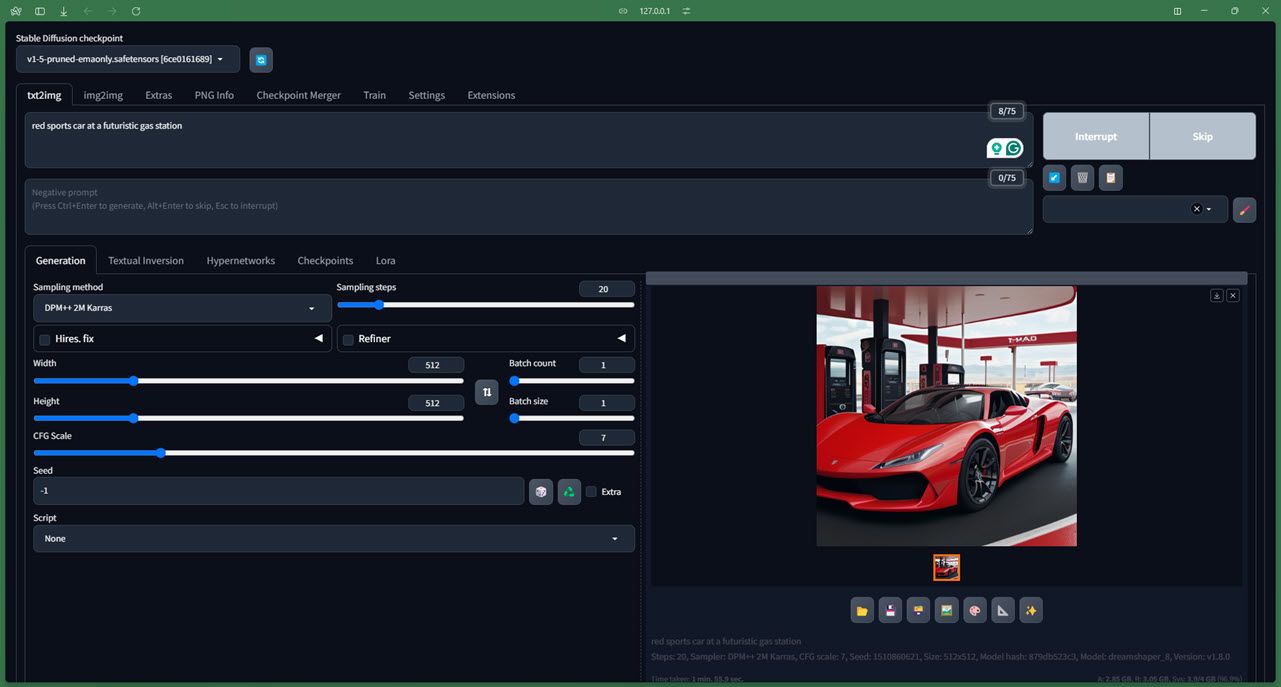

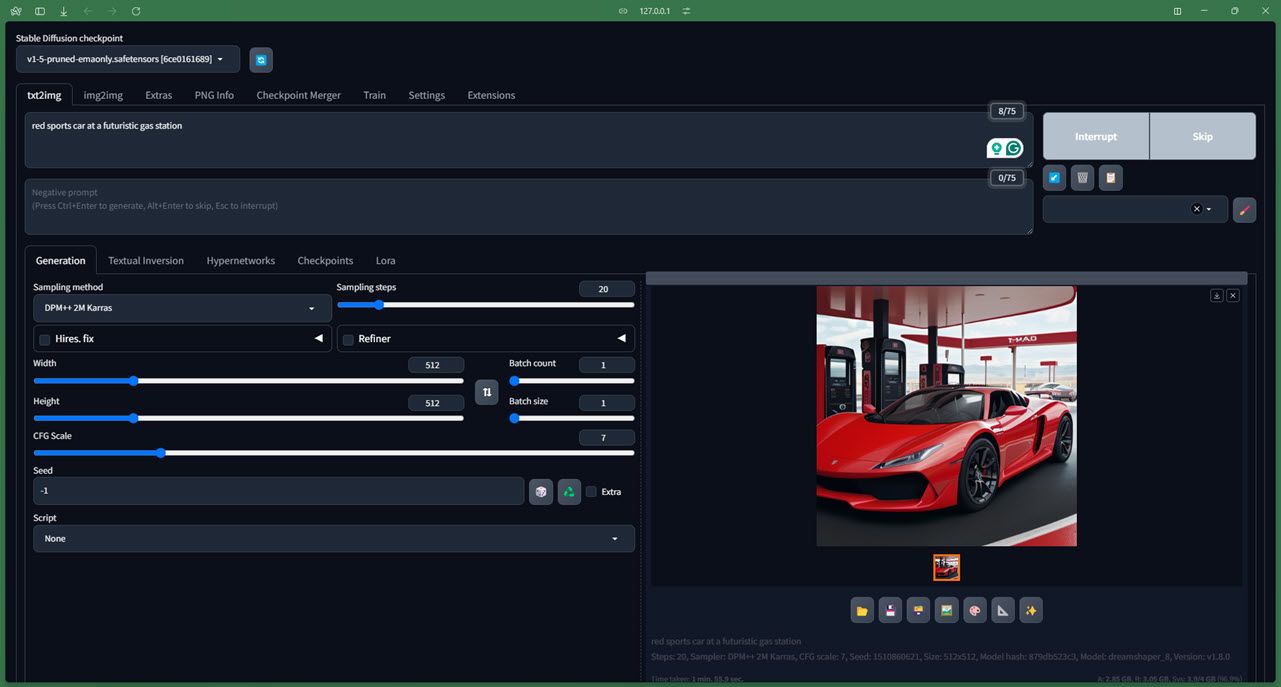

2 Stable Diffusion 1.5

Stable Diffusion is perhaps one of the most popular open-source text-to-image generation models. It also powers other models, including the three image generators mentioned below. It was released in 2022 and has had many implementations since.

I’ll spare you the overly technical details of how the model works (for which you can check out the official GitHub repository), but the model is easy to install even for complete beginners and works well as long as you have a dedicated GPU with at least 4GB of memory. You can also access Stable Diffusion online, and we’ve got you covered if you want to run Stable Diffusion on a Mac.

There are several checkpoints (consider them versions) available to use for Stable Diffusion. While we tested out version 1.5, version 2.1 is also in active development and is more precise.

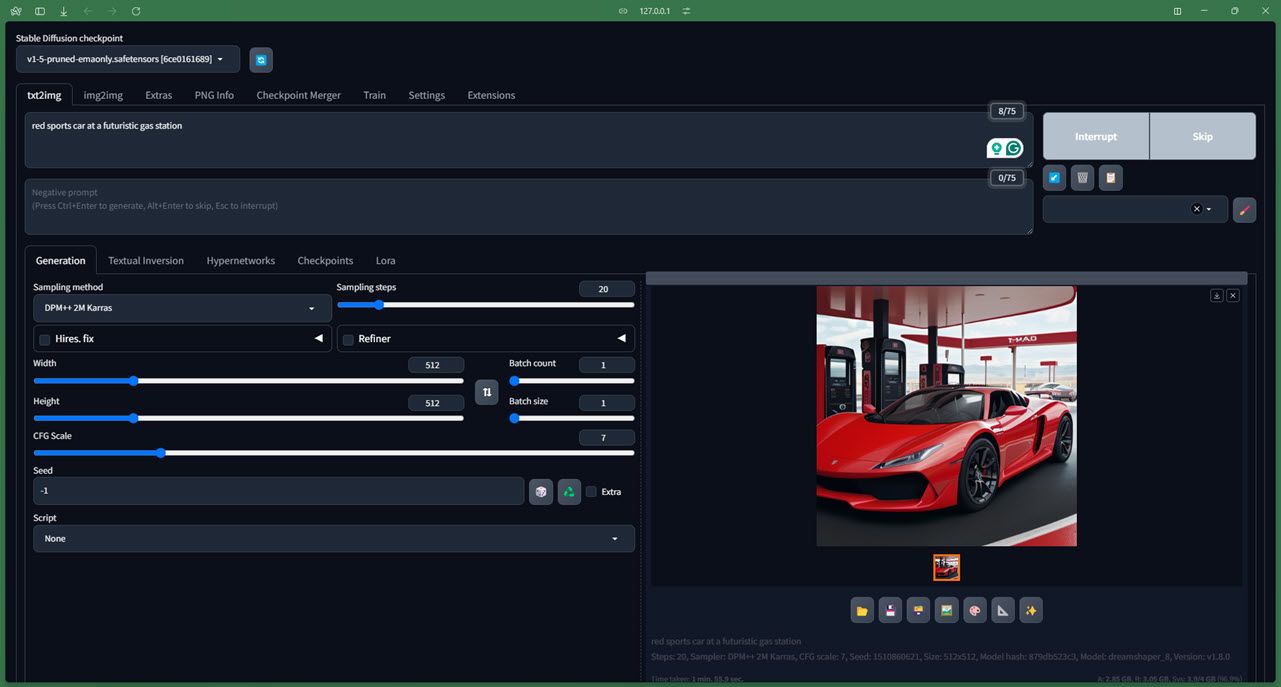

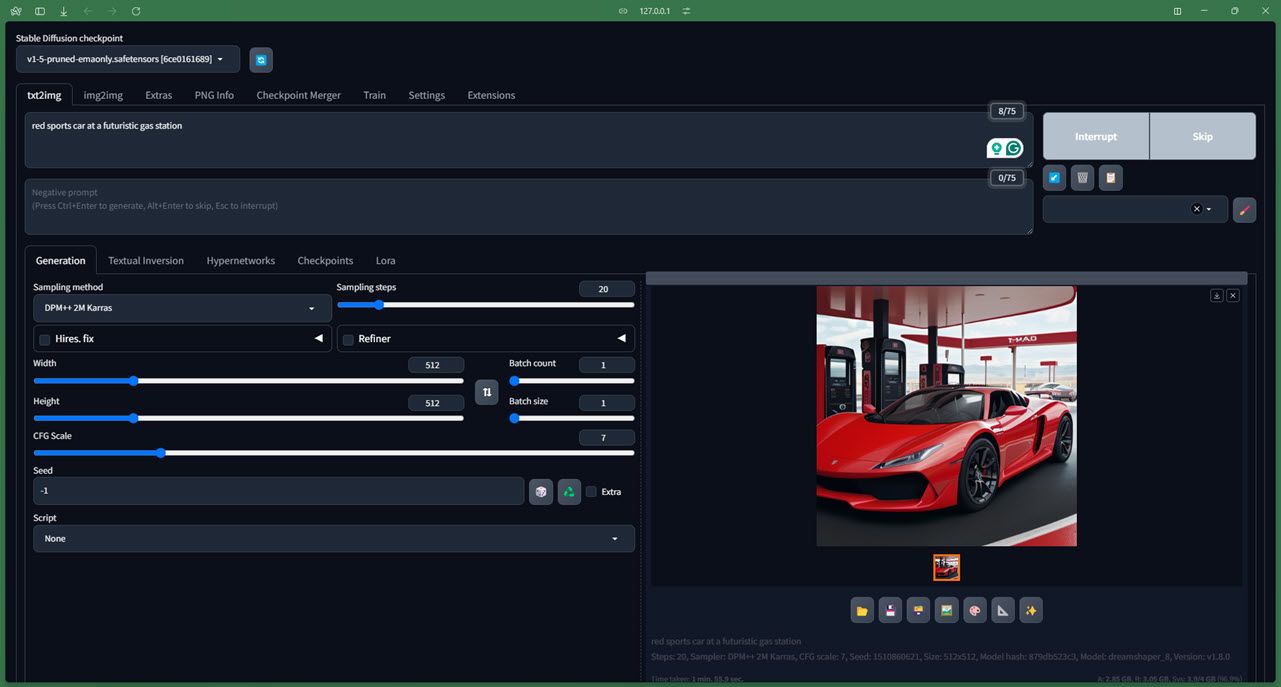

Yadullah Abidi/MakeUseOf/DreamShaper

Running the model is also rather easy. We tested it with the AUTOMATIC1111 Stable Diffusion web user interface, and all controls and parameters work well. It’s also quite NSFW-proof courtesy of the LAION-5B dataset that the model was trained on (although it’s not perfect, mind you). While generation time itself will vary based on your hardware, you can expect your images to be detailed and realistic even with basic prompts.
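If you’d rather script your prompts than click through the interface, the AUTOMATIC1111 web UI exposes an HTTP API when launched with its `--api` flag. Here’s a minimal sketch of what a text-to-image request could look like. The local address, the default values, and the helper names `build_txt2img_payload` and `send_txt2img` are assumptions for illustration, not something we tested for this article.

```python
import json
import urllib.request


def build_txt2img_payload(prompt, negative_prompt="", steps=20,
                          width=512, height=512, seed=-1):
    """Build the JSON body for the web UI's txt2img endpoint.

    The default values here are illustrative assumptions, not tuned settings.
    A seed of -1 asks the web UI to pick a random seed.
    """
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,  # things the model should avoid
        "steps": steps,
        "width": width,
        "height": height,
        "seed": seed,
    }


def send_txt2img(payload, base_url="http://127.0.0.1:7860"):
    """POST the payload to a locally running web UI started with --api.

    base_url is an assumption; adjust it to wherever your instance listens.
    Returns the list of base64-encoded images from the response.
    """
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/txt2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["images"]


if __name__ == "__main__":
    payload = build_txt2img_payload(
        "a lighthouse at dusk, detailed, realistic",
        negative_prompt="blurry, low quality",
    )
    print(json.dumps(payload, indent=2))
```

Running the script only prints the request body. Calling `send_txt2img(payload)` needs the web UI running locally, and each returned string has to be base64-decoded before you can save it as an image file.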

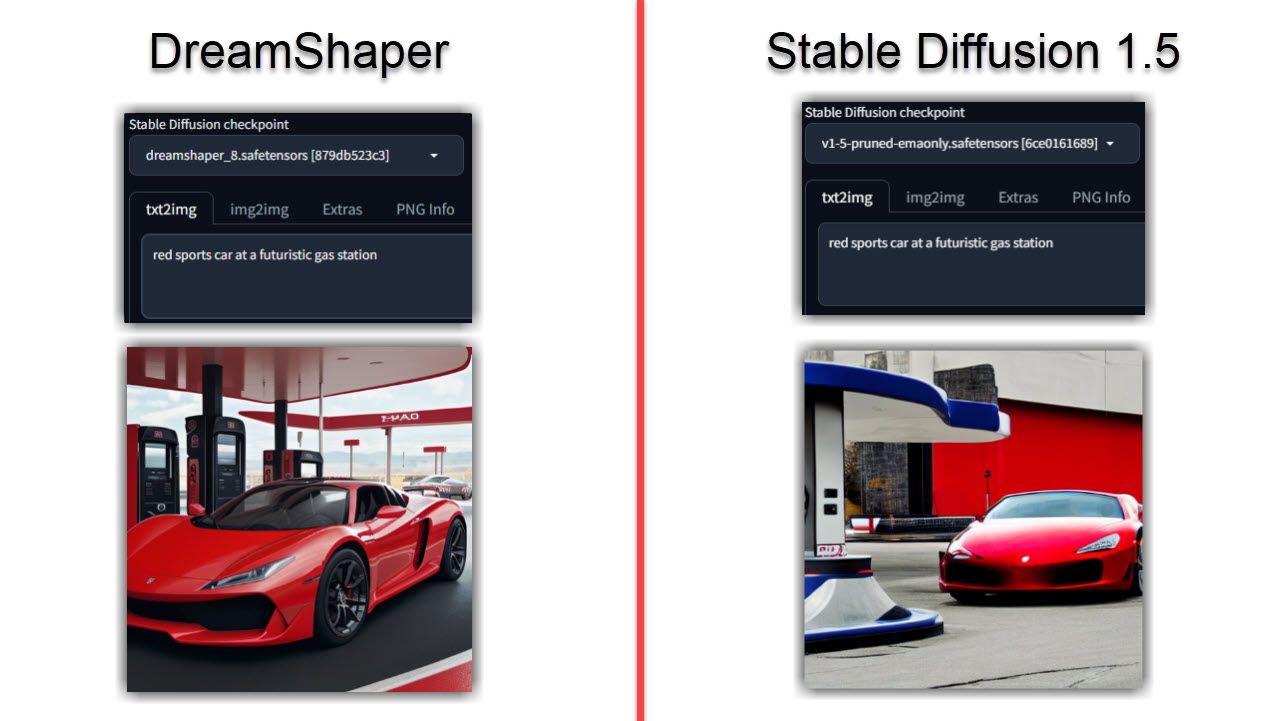

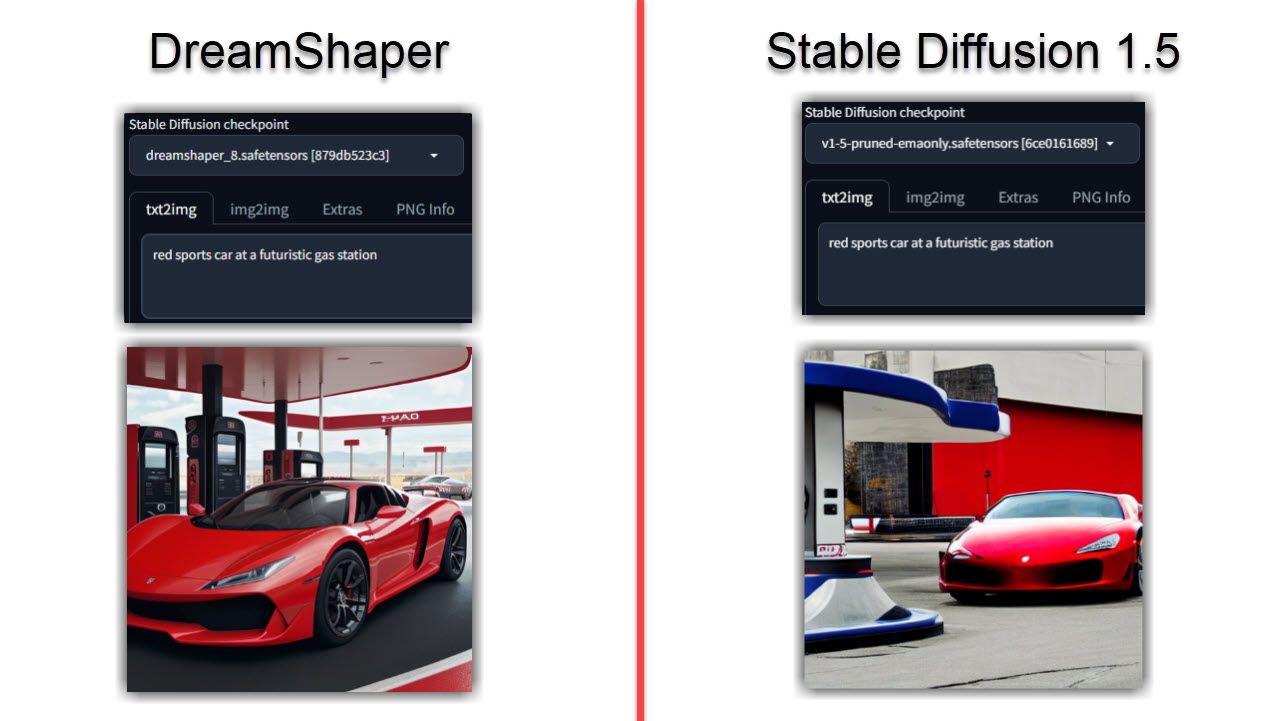

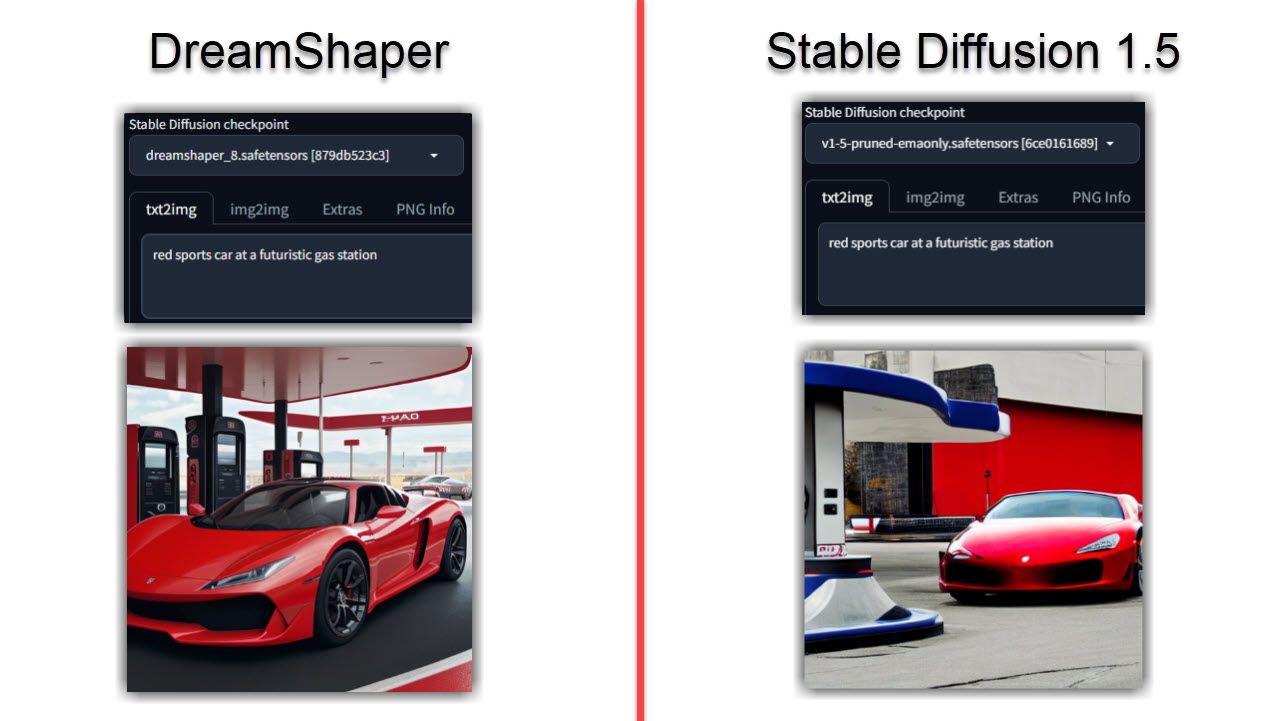

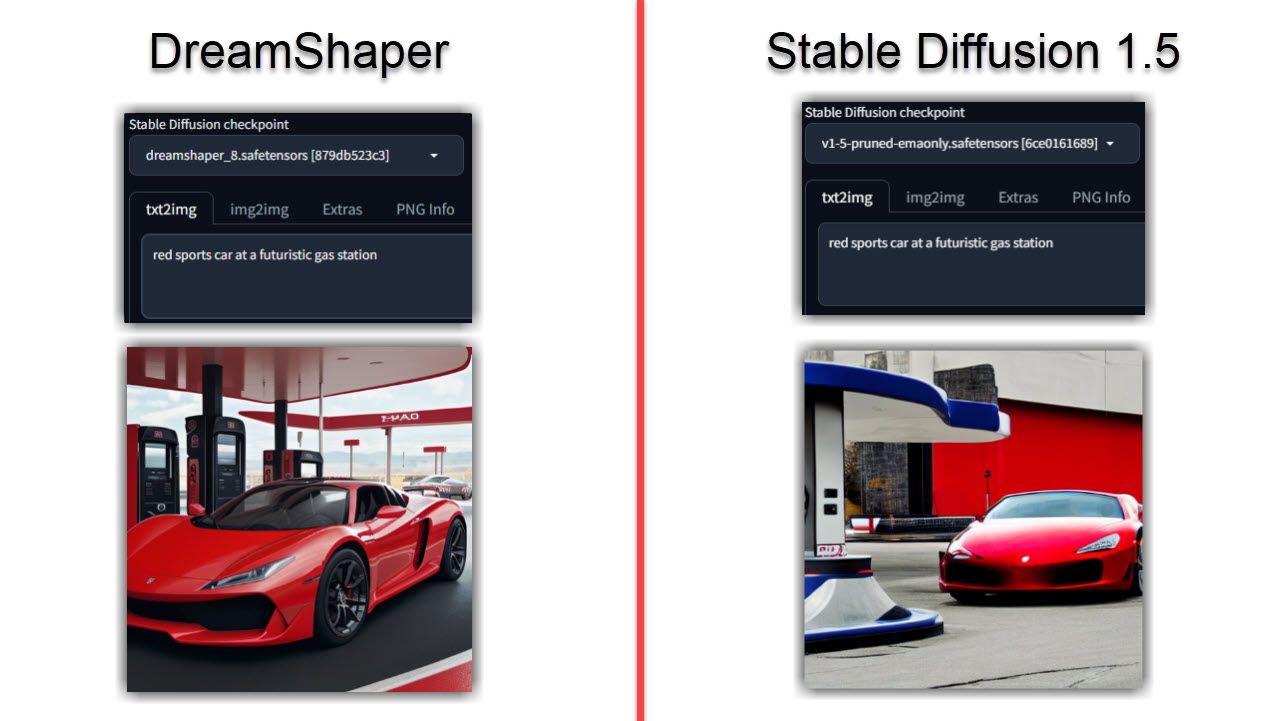

3 DreamShaper

DreamShaper is an image generation model based on Stable Diffusion. It was intended as an open-source alternative to Midjourney and focuses on photorealism in the generated images, although it can handle anime and painting styles just as well with a few tweaks.

The model is more capable than Stable Diffusion, allowing users more freedom over the final output, ranging from lighting improvements to looser NSFW restrictions. Running the model is also easy, with a downloadable, pre-trained version available online for local access and a host of websites, including Sinkin.ai, RandomSeed, and Mage.space (requires a basic subscription), that let you run the model with GPU acceleration.

As you can probably guess by now, images generated by DreamShaper tend to look more realistic than those from Stable Diffusion. Even if you run the same prompt on both models, the DreamShaper output will likely be more realistic, detailed, and better-lit.

This is especially true for portraits or characters, where I found Stable Diffusion lacking when given the same prompt. If your images become too realistic, here are four ways to identify an AI-generated image.

You don’t need a behemoth PC to run the model, either. My GTX 1650 Ti with 4GB VRAM ran the model perfectly. Generation time was a bit longer, but it didn’t seem to affect the actual output. That said, you might require GPUs with more VRAM to run DreamShaper XL, which is based on the Stable Diffusion XL model.

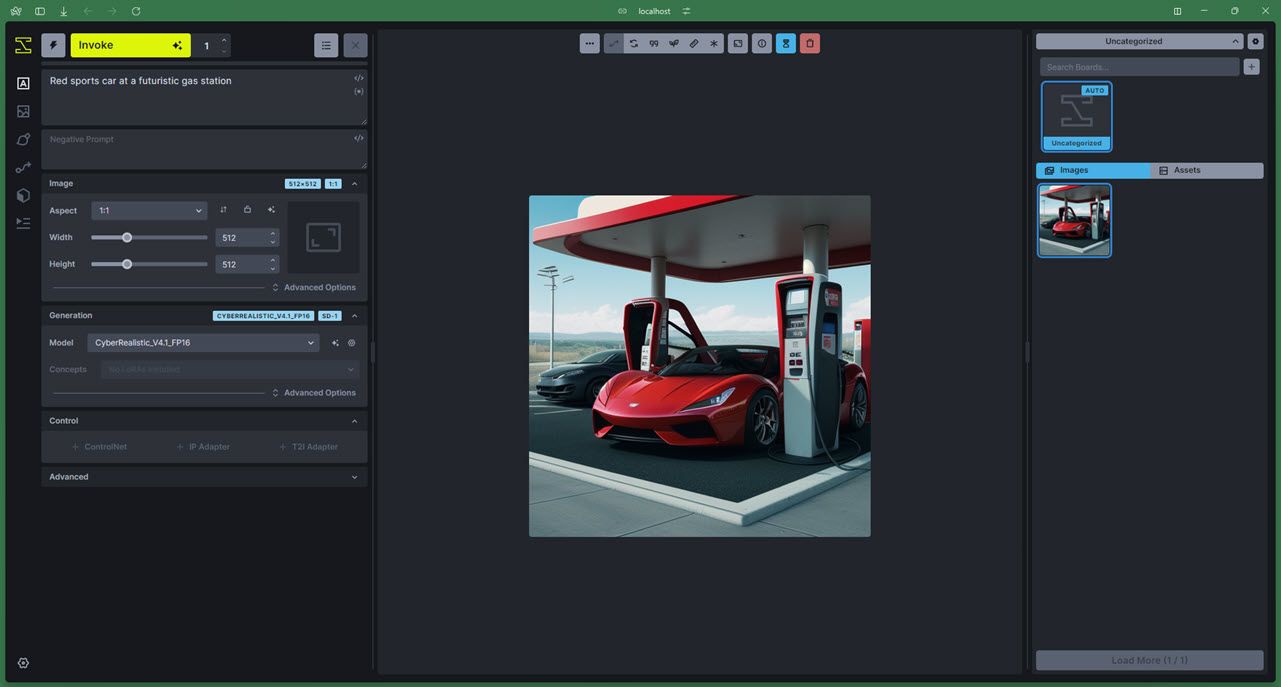

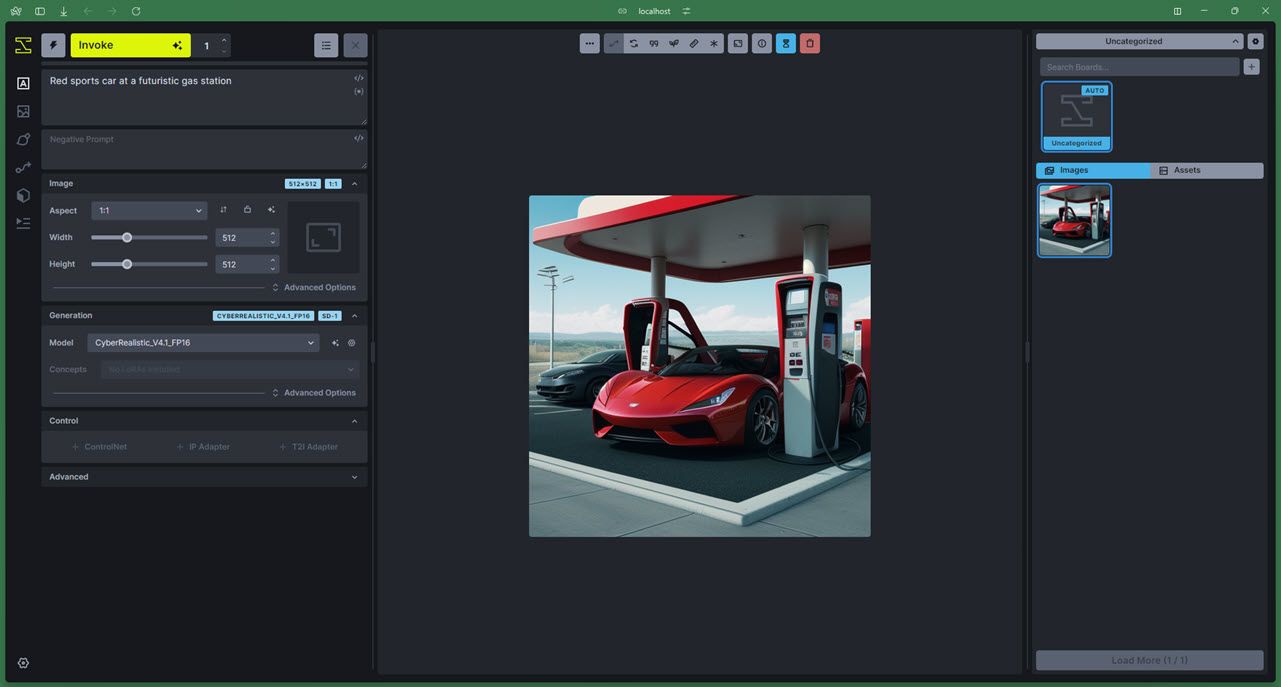

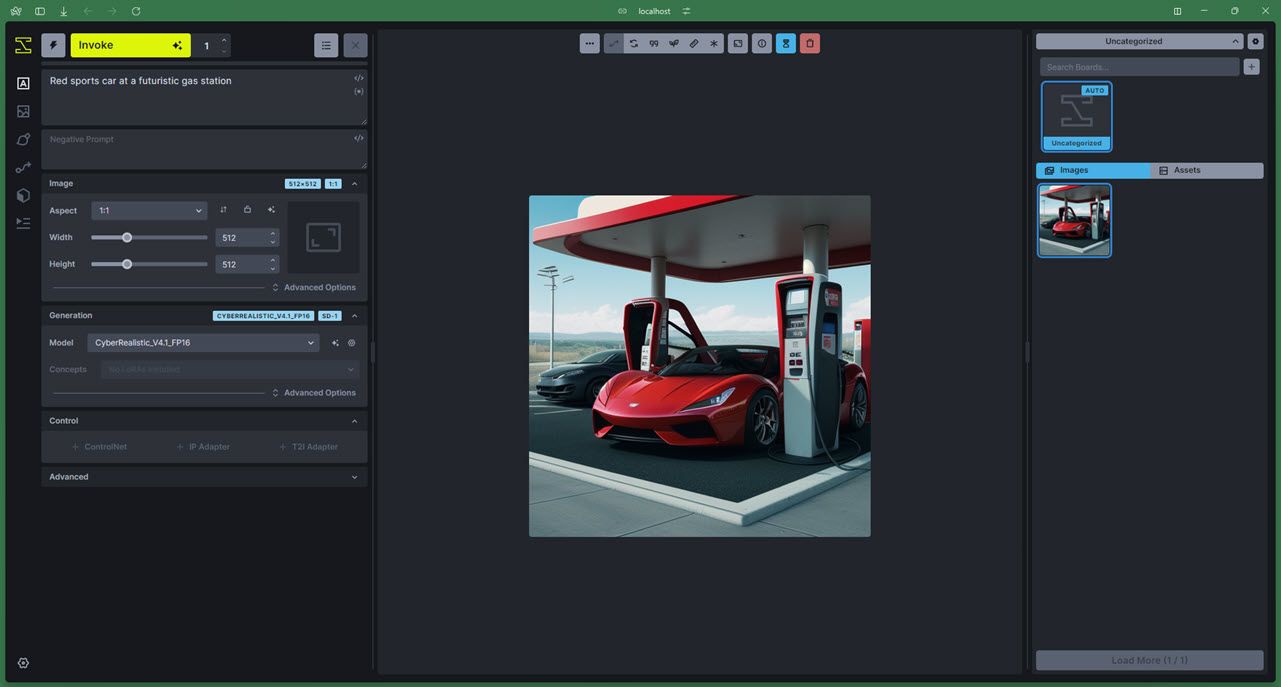

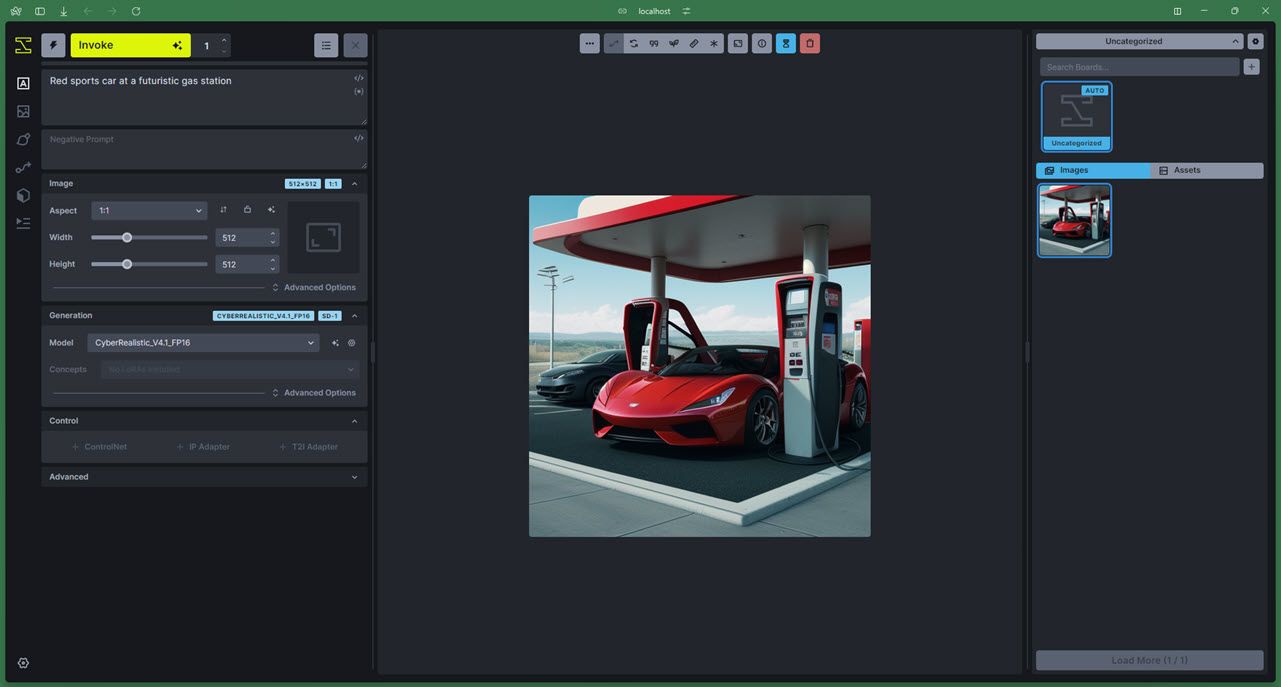

4 InvokeAI

InvokeAI is another AI-based image generation model based on Stable Diffusion, with an XL version based on Stable Diffusion XL. It also has its own web and command line user interface, meaning you won’t have to jump through hoops with things like the Stable Diffusion web UI.

The model focuses on letting users create visuals based on their intellectual property with customized workflows. InvokeAI is one of the best open-source AI image generation models for training custom models and working with intellectual property.

Its official GitHub repository lists two installation methods: installing via InvokeAI’s installer or using PyPI if you’re comfortable with a terminal and Python and need more control over the packages installed with the model.

However, the extra control does bring a few limitations, most notably stricter hardware requirements. InvokeAI recommends a dedicated GPU with at least 4GB of memory, with six to eight GB recommended for running the XL variant. The VRAM requirements apply to both AMD and Nvidia GPUs. You’ll also need at least 12GB of RAM and 12GB of free disk space for the model, its dependencies, and Python.
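To see how those numbers stack up against your own machine, here’s a rough sketch of a requirements check. The thresholds come straight from the figures above (using the lower end of the six to eight GB range for XL); the `Machine` class and both function names are made up for illustration, and you have to enter your specs by hand, since the script doesn’t detect hardware.

```python
from dataclasses import dataclass

# Thresholds taken from the requirements quoted in this article.
MIN_VRAM_GB = 4    # dedicated GPU memory for the base model
XL_VRAM_GB = 6     # lower end of the six to eight GB suggested for XL
MIN_RAM_GB = 12    # system memory
MIN_DISK_GB = 12   # free space for the model, its dependencies, and Python


@dataclass
class Machine:
    """Hand-entered specs; this sketch does not probe the hardware."""
    vram_gb: float
    ram_gb: float
    free_disk_gb: float


def check_invokeai(machine):
    """Return a list of unmet requirements (empty means good to go)."""
    problems = []
    if machine.vram_gb < MIN_VRAM_GB:
        problems.append(f"needs at least {MIN_VRAM_GB}GB of VRAM")
    if machine.ram_gb < MIN_RAM_GB:
        problems.append(f"needs at least {MIN_RAM_GB}GB of RAM")
    if machine.free_disk_gb < MIN_DISK_GB:
        problems.append(f"needs at least {MIN_DISK_GB}GB of free disk space")
    return problems


def can_run_xl(machine):
    """True if the base requirements are met and VRAM reaches the XL range."""
    return not check_invokeai(machine) and machine.vram_gb >= XL_VRAM_GB


if __name__ == "__main__":
    laptop = Machine(vram_gb=4, ram_gb=16, free_disk_gb=50)
    print(check_invokeai(laptop))  # unmet requirements, if any
    print(can_run_xl(laptop))      # whether the XL variant is realistic
```

A 4GB card with 16GB of RAM clears the base requirements but falls short of the XL range, which matches what you’d expect from the figures above.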

Yadullah Abidi/MakeUseOf/InvokeAI

While the documentation doesn’t recommend Nvidia’s GTX 10 Series and 16 Series GPUs for their lack of video memory, the provided installer did run just fine. While your mileage may vary, if you’re on a lower-end GPU, expect to wait longer to see your prompts being turned into images. Finally, if you’re on Windows, you can only use an Nvidia GPU, as there’s no support for AMD GPUs currently.

For the image generation part, the model tends to lean more toward artistic styles than photorealism. Of course, you can train the model on your dataset and have it generate images closer to what you want, even if that involves photorealistic images, especially if you’re working in product design, architecture, or retail spaces. However, one important thing to keep in mind is that InvokeAI is primarily an image generation engine, meaning you’ll likely have to use your own models for the best results (easily found via the model manager provided in the web interface) as the default model is quite similar to Stable Diffusion itself.

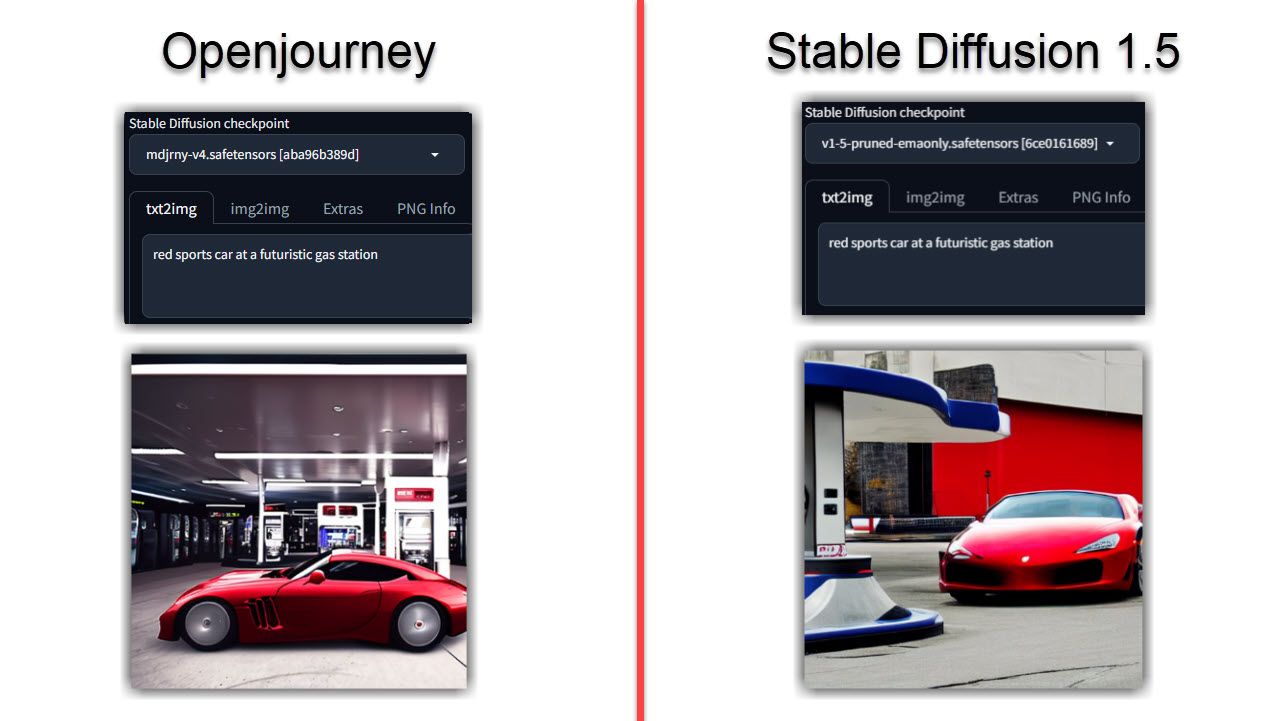

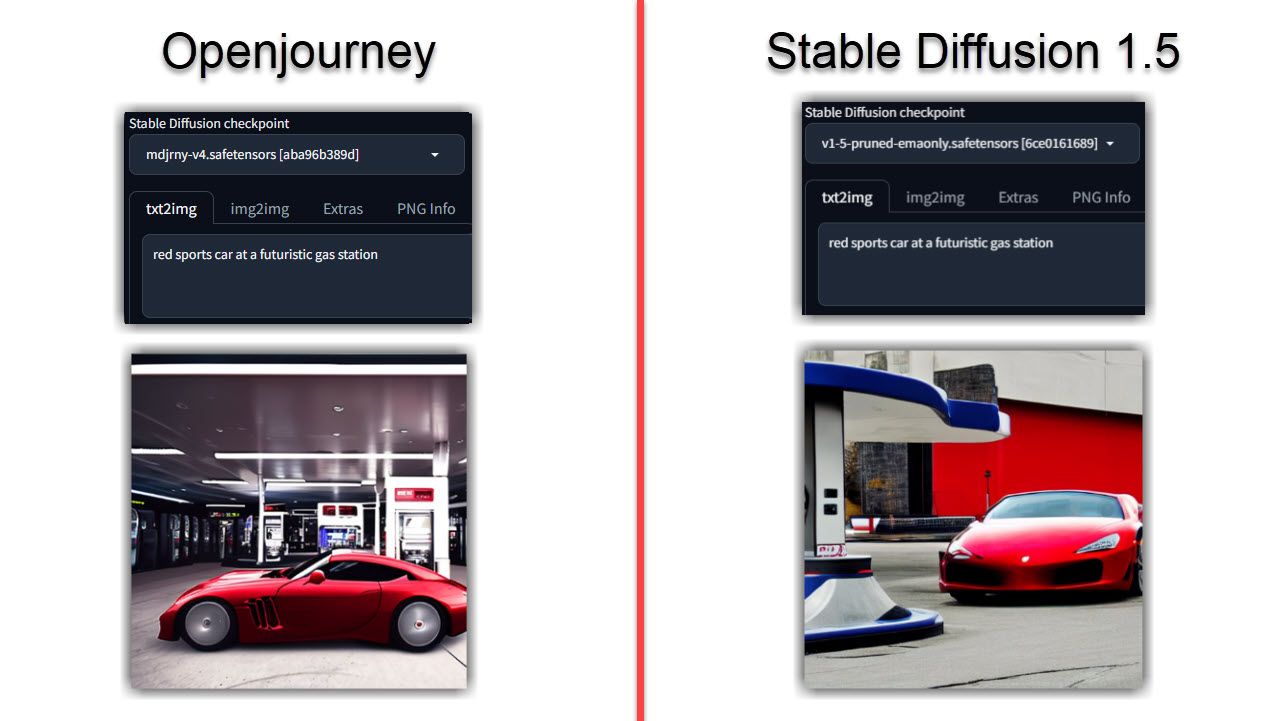

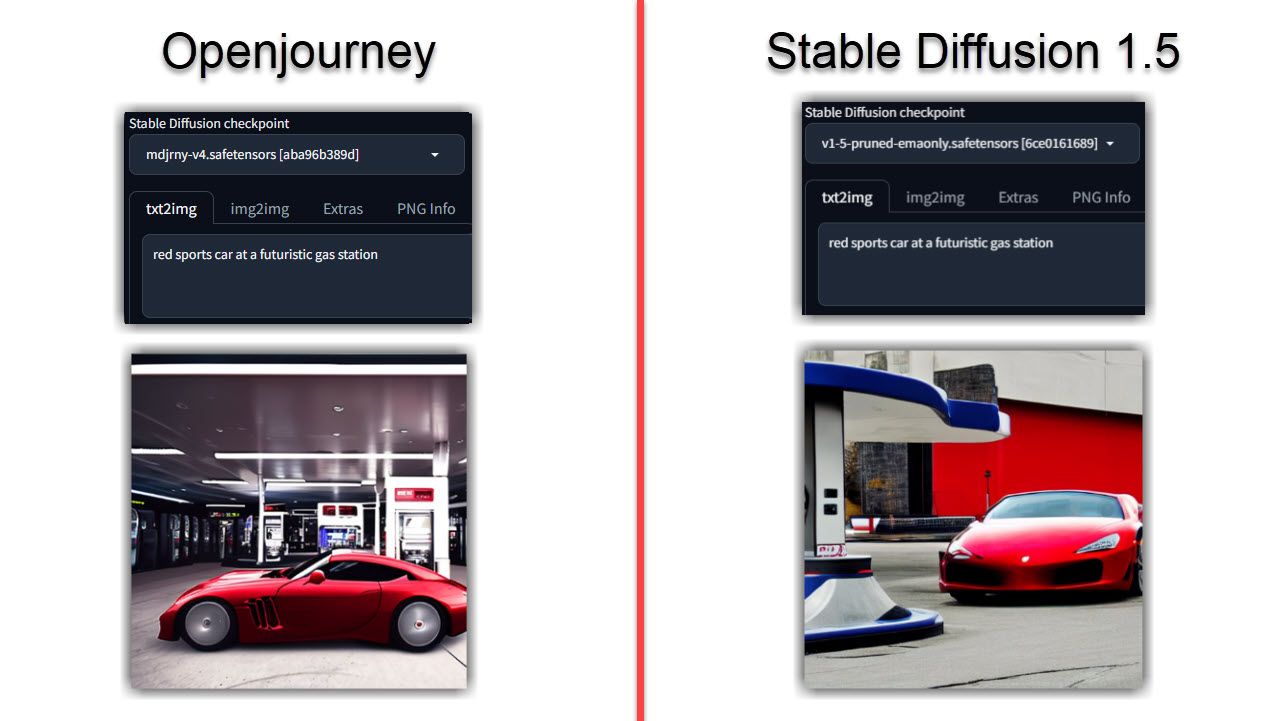

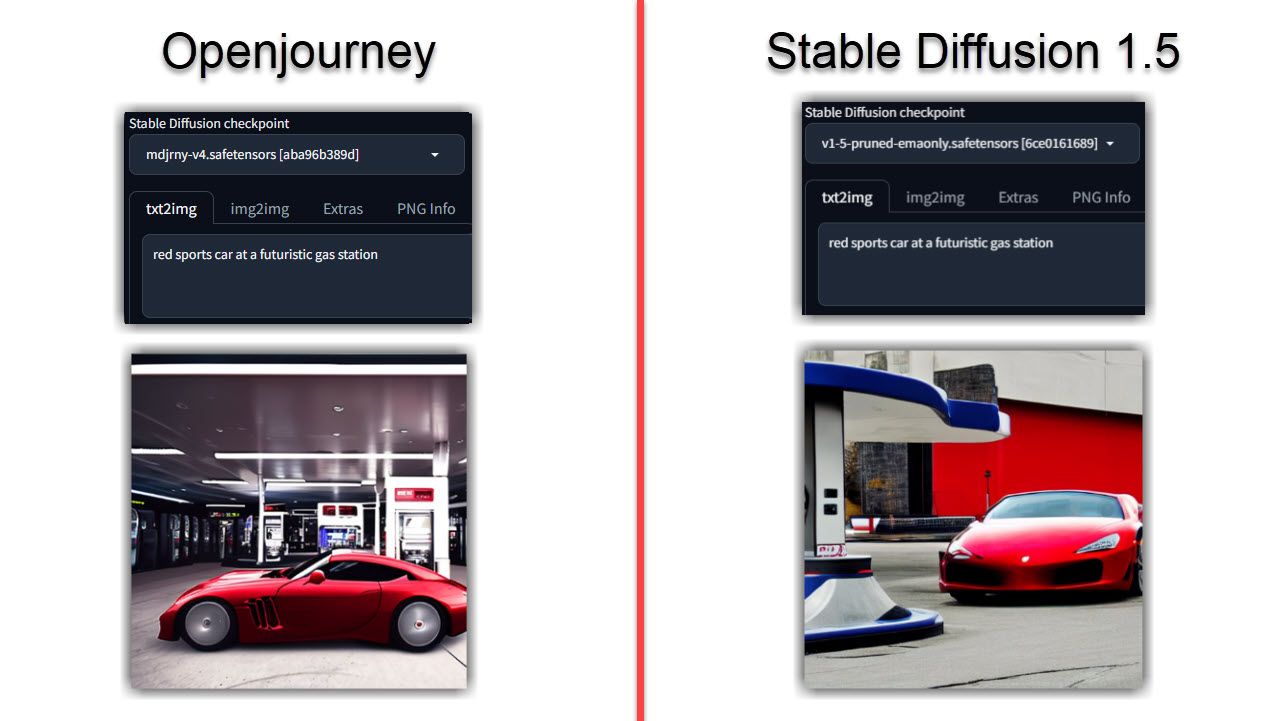

5 Openjourney

Openjourney is a free, open-source AI image generation model based yet again on Stable Diffusion. If you’re wondering why the model is called Openjourney, it’s because it was trained on Midjourney images and can mimic its style in the images it generates.

PromptHero, the company behind Openjourney, lets you test the model alongside other models, including Stable Diffusion (versions 1.5 and 2), DreamShaper, and Realistic Vision. When signing up, you get 25 free credits (one credit for each image generated), after which you have to subscribe to the Pro tier, which costs $9 a month and gives you 300 credits each month along with other exclusive features.

However, if you want to run it locally and for free, you can download the model file from Hugging Face and run it using the Stable Diffusion web UI. Openjourney is also the second most downloaded AI image generation model on Hugging Face, right behind Stable Diffusion.
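If you’re comfortable with Python, another way to run the model locally is the Hugging Face `diffusers` library rather than the web UI. Note that this is a different route from the one described above, and we didn’t test it for this article. The sketch assumes `diffusers` and `torch` are installed, an Nvidia GPU is available, and the model lives under the `prompthero/openjourney` ID. The `mdjrny-v4 style` tag is what I recall the model card recommending, so treat it as an assumption and check the card yourself. The helper names are made up for illustration.

```python
STYLE_TAG = "mdjrny-v4 style"  # assumed from the model card; verify before use
MODEL_ID = "prompthero/openjourney"  # assumed Hugging Face model ID


def build_prompt(description):
    """Append the style tag to a plain description, unless already present."""
    description = description.strip()
    if not description:
        raise ValueError("description must not be empty")
    if STYLE_TAG in description:
        return description
    return f"{description}, {STYLE_TAG}"


def generate(description, output_path="openjourney.png"):
    """Generate one image and save it to output_path.

    The heavy imports live inside the function so the prompt helper above
    can be used without diffusers or torch installed.
    """
    import torch
    from diffusers import StableDiffusionPipeline

    # Half precision keeps memory use down on cards with limited VRAM.
    pipe = StableDiffusionPipeline.from_pretrained(
        MODEL_ID, torch_dtype=torch.float16
    )
    pipe = pipe.to("cuda")
    image = pipe(build_prompt(description)).images[0]
    image.save(output_path)
    return output_path


if __name__ == "__main__":
    print(build_prompt("a castle on a cliff at sunrise"))
```

Running the script only prints the final prompt. Calling `generate(...)` downloads the model weights on first use, so expect a multi-gigabyte download before the first image appears.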

Openjourney doesn’t list any specific hardware requirements for running the model locally on its website, but you can expect similar hardware requirements to Stable Diffusion. This means a dedicated GPU with 4GB VRAM, 16GB RAM, and around 12 to 15GB of free space on your computer to save the model and its dependencies.

Yadullah Abidi/MakeUseOf/OpenJourney

Images generated by Openjourney tend to be balanced between photorealism and art unless otherwise specified. If you’re looking for an all-around model and prefer the Midjourney look and feel without paying for the subscription, Openjourney is one of the best options.

AI-based text-to-image generation models are everywhere and becoming easier to access daily. While it’s easy just to visit a website and generate the image you’re looking for, open-source text-to-image generators are your best bet if you want more control over the generation process.

MAKEUSEOF VIDEO OF THE DAY

SCROLL TO CONTINUE WITH CONTENT

There are dozens of free and open-source AI text-to-image generators available on the internet that specialize in specific kinds of images. So, we’ve sifted through the pile and found the best open-source AI text-to-image generators you can try right now.

1 Craiyon

Craiyon is one of the most easily accessible open-source AI image generators. It’s based on DALL-E Mini, and while you can clone the Github repository and install the model locally on your computer, Craiyon seems to have dropped this approach in favor of its website.

The official Github repository hasn’t been updated since June 2022, but the latest model is still available for free on the official Craiyon site . There are no Android or iOS apps either.

In terms of functionality, you’ll see all the usual options that you expect from an AI image generator. Once you enter your prompt and get an image, you can use the upscale feature to get a higher-resolution copy. There are three styles to choose from: Art, Photo, and Drawing. You can also select the “None” option if you want the model to decide.

Additionally, “Expert Mode” lets you include negative words, which tells the model to avoid specific items. There’s also a prompt prediction feature, which uses ChatGPT to help users write the best, most detailed prompts possible. Lastly, the AI-powered remove background features can help you save time and effort cropping backgrounds out of images.

And that’s about everything Craiyon does. It’s not the most sophisticated AI image generation model, but it does well as a basic model if you don’t want something detailed or realistic.

The model is free to use, but free users are limited to nine free images at a time within a minute. You can subscribe to their Supporter or Professional tiers (priced at $5 and $20 a month, respectively, and billed annually) to get no ads or watermarks, faster generation, and the option to keep your generated images private. A Custom subscription tier also allows custom models, integration, dedicated support, and private servers.

2 Stable Diffusion 1.5

Stable Diffusion is perhaps one of the most popular open-source text-to-image generation models. It also powers other models, including the three image generators mentioned below. It was released in 2022 and has had many implementations since.

I’ll spare you the overly technical details of how the model works (for which you can check out their official Github repository ), but the model is easy to install even for complete beginners and works well as long as you have a dedicated GPU with at least 4GB of memory. You can also access Stable Diffusion online, and we’ve got you covered if you want to run Stable Diffusion on a Mac .

There are several checkpoints (consider them versions) available to use for Stable Diffusion. While we tested out version 1.5, version 2.1 is also in active development and is more precise.

Yadullah Abidi/MakeUseOf/DreamShaper

Running the model is also rather easy. We tested it with the AUTOMATIC1111 Stable Diffusion web user interface , and all controls and parameters work well. It’s also quite NSFW-proof courtesy of the LAION-5B database that the model trained on (although it’s not perfect, mind you). While generation time itself will vary based on your hardware, you can expect your images to be detailed and realistic even with basic prompts.

3 DreamShaper

DreamShaper is an image generation model based on Stable Diffusion. It was intended as an open-source alternative to MidJourney and focuses on photorealism in the generated images, although it can handle anime and painting styles just as well with a few tweaks.

The model is more capable than Stable Diffusion, allowing users more freedom over the final output, ranging from lightning improvements to looser NSFW restrictions. Running the model is also easy, with a downloadable, pre-trained version available online for local access and a host of websites, including Sinkin.ai , RandomSeed , and Mage.space (requires a basic subscription) that let you run the model with GPU acceleration.

As you can probably guess by now, images generated by DreamShaper tend to look more realistic compared to Stable Diffusion. Even if you run the same prompt on both models, the DreamShaper model will likely be more realistic, detailed, and better-lit.

This is especially true for portraits or characters, something I found Stable Diffusion lacking compared to the same prompt. If your images become too realistic, here are four ways to identify an AI-generated image .

You don’t need a behemoth PC to run the model, either. My GTX 1650Ti with 4GB VRAM ran the model perfectly. Generation time was a bit longer, but it didn’t seem to affect the actual output. That said, you might require GPUs with more VRAM to run DreamShaper XL, which is based on the Stable Diffusion XL model.

4 InvokeAI

Invoke AI is another AI-based image generation model based on Stable Diffusion, with an XL version based on Stable Diffusion XL. It also has its own web and command line user interface, meaning you won’t have to jump hoops with things like the Stable Diffusion web UI.

The model focuses on letting users create visuals based on their intellectual property with customized workflows. InvokeAI is one of the best open-source AI image generation models for training custom models and working with intellectual property.

Its official Github repository lists two installation methods: installing via InvokeAI’s installer or using PyPI if you’re comfortable with a terminal and Python and need more control over the packages installed with the model.

However, the extra control does bring a few limitations, most notably stricter hardware requirements. InvokeAI recommends a dedicated GPU with at least 4GB of memory, with six to eight GB recommended for running the XL variant. The VRAM requirements apply to both AMD and Nvidia GPUs. You’ll also need at least 12GB of RAM and 12GB of free disk space for the model, its dependencies, and Python.

Yadullah Abidi/MakeUseOf/InvokeAI

While the documentation doesn’t recommend Nvidia’s GTX 10 Series and 16 Series GPUs for their lack of video memory, the provided installer did run just fine. While your mileage may vary, if you’re on a lower-end GPU, expect to wait longer to see your prompts being turned into images. Finally, if you’re on Windows, you can only use an Nvidia GPU, as there’s no support for AMD GPUs currently.

For the image generation part, the model tends to lean more toward artistic styles than photorealism. Of course, you can train the model on your dataset and have it generate images closer to what you want, even if that involves photorealistic images, especially if you’re working in product design, architecture, or retail spaces. However, one important thing to keep in mind is that InvokeAI is primarily an image generation engine, meaning you’ll likely have to use your own models for the best results (easily found via the model manager provided in the web interface) as the default model is quite similar to Stable Diffusion itself.

5 Openjourney

Openjourney is a free, open-source AI image generation model based yet again on Stable Diffusion. If you’re wondering why the model is called Openjourney, it’s because it was trained on Midjourney images and can mimic its style in the images it generates.

PromptHero , the company behind Openjourney, lets you test the model alongside other models, including Stable Diffusion (versions 1.5 and 2), DreamShaper, and Realistic Vision. When signing up, you get 25 free credits (one credit for each image generated), after which you have to subscribe to their Pro subscription tier, which costs $9 a month and gives you access to 300 credits each month with other exclusive features.

However, if you want to run it locally and for free, you can download the model file from HuggingFace and run it using the Stable Diffusion web UI. Openjourney is also the second most downloaded AI image generation model on HuggingFace, right behind Stable Diffusion.

Openjourney doesn’t list any specific hardware requirements for running the model locally on its website, but you can expect similar hardware requirements to Stable Diffusion. This means a dedicated GPU with 4GB VRAM, 16GB RAM, and around 12 to 15GB of free space on your computer to save the model and its dependencies.

Yadullah Abidi/MakeUseOf/OpenJourney

Images generated by Openjourney tend to be balanced between photorealism and art unless otherwise specified. If you’re looking for an all-around model and prefer the Midjourney look and feel without paying for the subscription, Openjourney is one of the best options.

AI-based text-to-image generation models are everywhere and becoming easier to access daily. While it’s easy just to visit a website and generate the image you’re looking for, open-source text-to-image generators are your best bet if you want more control over the generation process.

MAKEUSEOF VIDEO OF THE DAY

SCROLL TO CONTINUE WITH CONTENT

There are dozens of free and open-source AI text-to-image generators available on the internet that specialize in specific kinds of images. So, we’ve sifted through the pile and found the best open-source AI text-to-image generators you can try right now.

1 Craiyon

Craiyon is one of the most easily accessible open-source AI image generators. It’s based on DALL-E Mini, and while you can clone the Github repository and install the model locally on your computer, Craiyon seems to have dropped this approach in favor of its website.

The official Github repository hasn’t been updated since June 2022, but the latest model is still available for free on the official Craiyon site . There are no Android or iOS apps either.

In terms of functionality, you’ll see all the usual options that you expect from an AI image generator. Once you enter your prompt and get an image, you can use the upscale feature to get a higher-resolution copy. There are three styles to choose from: Art, Photo, and Drawing. You can also select the “None” option if you want the model to decide.

Additionally, “Expert Mode” lets you include negative words, which tells the model to avoid specific items. There’s also a prompt prediction feature, which uses ChatGPT to help users write the best, most detailed prompts possible. Lastly, the AI-powered remove background features can help you save time and effort cropping backgrounds out of images.

And that’s about everything Craiyon does. It’s not the most sophisticated AI image generation model, but it does well as a basic model if you don’t want something detailed or realistic.

The model is free to use, but free users are limited to nine free images at a time within a minute. You can subscribe to their Supporter or Professional tiers (priced at $5 and $20 a month, respectively, and billed annually) to get no ads or watermarks, faster generation, and the option to keep your generated images private. A Custom subscription tier also allows custom models, integration, dedicated support, and private servers.

2 Stable Diffusion 1.5

Stable Diffusion is perhaps one of the most popular open-source text-to-image generation models. It also powers other models, including the three image generators mentioned below. It was released in 2022 and has had many implementations since.

I’ll spare you the overly technical details of how the model works (for which you can check out their official Github repository ), but the model is easy to install even for complete beginners and works well as long as you have a dedicated GPU with at least 4GB of memory. You can also access Stable Diffusion online, and we’ve got you covered if you want to run Stable Diffusion on a Mac .

There are several checkpoints (consider them versions) available to use for Stable Diffusion. While we tested out version 1.5, version 2.1 is also in active development and is more precise.

Yadullah Abidi/MakeUseOf/DreamShaper

Running the model is also rather easy. We tested it with the AUTOMATIC1111 Stable Diffusion web user interface , and all controls and parameters work well. It’s also quite NSFW-proof courtesy of the LAION-5B database that the model trained on (although it’s not perfect, mind you). While generation time itself will vary based on your hardware, you can expect your images to be detailed and realistic even with basic prompts.

3 DreamShaper

DreamShaper is an image generation model based on Stable Diffusion. It was intended as an open-source alternative to MidJourney and focuses on photorealism in the generated images, although it can handle anime and painting styles just as well with a few tweaks.

The model is more capable than Stable Diffusion, allowing users more freedom over the final output, ranging from lightning improvements to looser NSFW restrictions. Running the model is also easy, with a downloadable, pre-trained version available online for local access and a host of websites, including Sinkin.ai , RandomSeed , and Mage.space (requires a basic subscription) that let you run the model with GPU acceleration.

As you can probably guess by now, images generated by DreamShaper tend to look more realistic compared to Stable Diffusion. Even if you run the same prompt on both models, the DreamShaper model will likely be more realistic, detailed, and better-lit.

This is especially true for portraits or characters, something I found Stable Diffusion lacking compared to the same prompt. If your images become too realistic, here are four ways to identify an AI-generated image .

You don’t need a behemoth PC to run the model, either. My GTX 1650Ti with 4GB VRAM ran the model perfectly. Generation time was a bit longer, but it didn’t seem to affect the actual output. That said, you might require GPUs with more VRAM to run DreamShaper XL, which is based on the Stable Diffusion XL model.

4 InvokeAI

Invoke AI is another AI-based image generation model based on Stable Diffusion, with an XL version based on Stable Diffusion XL. It also has its own web and command line user interface, meaning you won’t have to jump hoops with things like the Stable Diffusion web UI.

The model focuses on letting users create visuals based on their intellectual property with customized workflows. InvokeAI is one of the best open-source AI image generation models for training custom models and working with intellectual property.

Its official Github repository lists two installation methods: installing via InvokeAI’s installer or using PyPI if you’re comfortable with a terminal and Python and need more control over the packages installed with the model.

However, the extra control does bring a few limitations, most notably stricter hardware requirements. InvokeAI recommends a dedicated GPU with at least 4GB of memory, with six to eight GB recommended for running the XL variant. The VRAM requirements apply to both AMD and Nvidia GPUs. You’ll also need at least 12GB of RAM and 12GB of free disk space for the model, its dependencies, and Python.

Yadullah Abidi/MakeUseOf/InvokeAI

While the documentation doesn’t recommend Nvidia’s GTX 10 Series and 16 Series GPUs for their lack of video memory, the provided installer did run just fine. While your mileage may vary, if you’re on a lower-end GPU, expect to wait longer to see your prompts being turned into images. Finally, if you’re on Windows, you can only use an Nvidia GPU, as there’s no support for AMD GPUs currently.

For the image generation part, the model tends to lean more toward artistic styles than photorealism. Of course, you can train the model on your dataset and have it generate images closer to what you want, even if that involves photorealistic images, especially if you’re working in product design, architecture, or retail spaces. However, one important thing to keep in mind is that InvokeAI is primarily an image generation engine, meaning you’ll likely have to use your own models for the best results (easily found via the model manager provided in the web interface) as the default model is quite similar to Stable Diffusion itself.

5 Openjourney

Openjourney is a free, open-source AI image generation model based yet again on Stable Diffusion. If you’re wondering why the model is called Openjourney, it’s because it was trained on Midjourney images and can mimic its style in the images it generates.

PromptHero , the company behind Openjourney, lets you test the model alongside other models, including Stable Diffusion (versions 1.5 and 2), DreamShaper, and Realistic Vision. When signing up, you get 25 free credits (one credit for each image generated), after which you have to subscribe to their Pro subscription tier, which costs $9 a month and gives you access to 300 credits each month with other exclusive features.

However, if you want to run it locally and for free, you can download the model file from HuggingFace and run it using the Stable Diffusion web UI. Openjourney is also the second most downloaded AI image generation model on HuggingFace, right behind Stable Diffusion.

Openjourney doesn’t list any specific hardware requirements for running the model locally on its website, but you can expect similar hardware requirements to Stable Diffusion. This means a dedicated GPU with 4GB VRAM, 16GB RAM, and around 12 to 15GB of free space on your computer to save the model and its dependencies.

Yadullah Abidi/MakeUseOf/OpenJourney

Images generated by Openjourney tend to be balanced between photorealism and art unless otherwise specified. If you’re looking for an all-around model and prefer the Midjourney look and feel without paying for the subscription, Openjourney is one of the best options.

AI-based text-to-image generation models are everywhere and becoming easier to access daily. While it’s easy just to visit a website and generate the image you’re looking for, open-source text-to-image generators are your best bet if you want more control over the generation process.

MAKEUSEOF VIDEO OF THE DAY

SCROLL TO CONTINUE WITH CONTENT

There are dozens of free and open-source AI text-to-image generators available on the internet that specialize in specific kinds of images. So, we’ve sifted through the pile and found the best open-source AI text-to-image generators you can try right now.

1 Craiyon

Craiyon is one of the most easily accessible open-source AI image generators. It’s based on DALL-E Mini, and while you can clone the Github repository and install the model locally on your computer, Craiyon seems to have dropped this approach in favor of its website.

The official Github repository hasn’t been updated since June 2022, but the latest model is still available for free on the official Craiyon site . There are no Android or iOS apps either.

In terms of functionality, you’ll see all the usual options that you expect from an AI image generator. Once you enter your prompt and get an image, you can use the upscale feature to get a higher-resolution copy. There are three styles to choose from: Art, Photo, and Drawing. You can also select the “None” option if you want the model to decide.

Additionally, “Expert Mode” lets you include negative words, which tells the model to avoid specific items. There’s also a prompt prediction feature, which uses ChatGPT to help users write the best, most detailed prompts possible. Lastly, the AI-powered remove background features can help you save time and effort cropping backgrounds out of images.

And that’s about everything Craiyon does. It’s not the most sophisticated AI image generation model, but it does well as a basic model if you don’t want something detailed or realistic.

The model is free to use, but free users are limited to nine free images at a time within a minute. You can subscribe to their Supporter or Professional tiers (priced at $5 and $20 a month, respectively, and billed annually) to get no ads or watermarks, faster generation, and the option to keep your generated images private. A Custom subscription tier also allows custom models, integration, dedicated support, and private servers.

2 Stable Diffusion 1.5

Stable Diffusion is perhaps one of the most popular open-source text-to-image generation models. It also powers other models, including the three image generators mentioned below. It was released in 2022 and has had many implementations since.

I’ll spare you the overly technical details of how the model works (for which you can check out their official Github repository ), but the model is easy to install even for complete beginners and works well as long as you have a dedicated GPU with at least 4GB of memory. You can also access Stable Diffusion online, and we’ve got you covered if you want to run Stable Diffusion on a Mac .

There are several checkpoints (consider them versions) available to use for Stable Diffusion. While we tested out version 1.5, version 2.1 is also in active development and is more precise.

Yadullah Abidi/MakeUseOf/DreamShaper

Running the model is also rather easy. We tested it with the AUTOMATIC1111 Stable Diffusion web user interface , and all controls and parameters work well. It’s also quite NSFW-proof courtesy of the LAION-5B database that the model trained on (although it’s not perfect, mind you). While generation time itself will vary based on your hardware, you can expect your images to be detailed and realistic even with basic prompts.

3 DreamShaper

DreamShaper is an image generation model based on Stable Diffusion. It was intended as an open-source alternative to MidJourney and focuses on photorealism in the generated images, although it can handle anime and painting styles just as well with a few tweaks.

The model is more capable than Stable Diffusion, allowing users more freedom over the final output, ranging from lightning improvements to looser NSFW restrictions. Running the model is also easy, with a downloadable, pre-trained version available online for local access and a host of websites, including Sinkin.ai , RandomSeed , and Mage.space (requires a basic subscription) that let you run the model with GPU acceleration.

As you can probably guess by now, images generated by DreamShaper tend to look more realistic than those from Stable Diffusion. Even if you run the same prompt on both models, the DreamShaper output will likely be more realistic, detailed, and better-lit.

This is especially true for portraits and characters, where I found Stable Diffusion lacking when given the same prompt. If your images become too realistic, here are four ways to identify an AI-generated image.

You don’t need a behemoth PC to run the model, either. My GTX 1650 Ti with 4GB VRAM ran the model perfectly. Generation time was a bit longer, but it didn’t seem to affect the actual output. That said, you might need a GPU with more VRAM to run DreamShaper XL, which is based on the Stable Diffusion XL model.

4 InvokeAI

InvokeAI is another AI-based image generation model based on Stable Diffusion, with an XL version based on Stable Diffusion XL. It also has its own web and command-line user interfaces, meaning you won’t have to jump through hoops with things like the Stable Diffusion web UI.

The model focuses on letting users create visuals based on their intellectual property with customized workflows. InvokeAI is one of the best open-source AI image generation models for training custom models and working with intellectual property.

Its official GitHub repository lists two installation methods: installing via InvokeAI’s installer or using PyPI if you’re comfortable with a terminal and Python and need more control over the packages installed with the model.

However, the extra control does bring a few limitations, most notably stricter hardware requirements. InvokeAI recommends a dedicated GPU with at least 4GB of memory, with 6GB to 8GB recommended for running the XL variant. The VRAM requirements apply to both AMD and Nvidia GPUs. You’ll also need at least 12GB of RAM and 12GB of free disk space for the model, its dependencies, and Python.
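To make those numbers easier to check against your own machine, here’s a small sketch that compares a system’s specs with the minimums quoted above. It isn’t part of InvokeAI. The function names are hypothetical, you supply the VRAM and RAM figures yourself, and the XL threshold uses the low end of the 6GB to 8GB range.

```python
import shutil

# Minimums quoted in the paragraph above, in gigabytes.
MIN_VRAM_GB = 4
MIN_VRAM_XL_GB = 6  # low end of the 6GB to 8GB range for the XL variant
MIN_RAM_GB = 12
MIN_FREE_DISK_GB = 12


def check_requirements(vram_gb, ram_gb, free_disk_gb, xl=False):
    """Return a list of shortfalls; an empty list means every check passed."""
    needed_vram = MIN_VRAM_XL_GB if xl else MIN_VRAM_GB
    problems = []
    if vram_gb < needed_vram:
        problems.append(f"VRAM: have {vram_gb}GB, need {needed_vram}GB")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: have {ram_gb}GB, need {MIN_RAM_GB}GB")
    if free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"Disk: have {free_disk_gb}GB free, need {MIN_FREE_DISK_GB}GB")
    return problems


def measure_free_disk_gb(path="."):
    """Free space on the drive holding `path`, in gigabytes (10**9 bytes)."""
    return shutil.disk_usage(path).free / 10**9
```

For example, `check_requirements(vram_gb=4, ram_gb=16, free_disk_gb=measure_free_disk_gb())` returns an empty list on a machine that clears all three bars. Passing `xl=True` with the same 4GB card reports a VRAM shortfall.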

Yadullah Abidi/MakeUseOf/InvokeAI

While the documentation doesn’t recommend Nvidia’s GTX 10 Series and 16 Series GPUs for their lack of video memory, the provided installer did run just fine. While your mileage may vary, if you’re on a lower-end GPU, expect to wait longer to see your prompts being turned into images. Finally, if you’re on Windows, you can only use an Nvidia GPU, as there’s no support for AMD GPUs currently.

For the image generation part, the model tends to lean more toward artistic styles than photorealism. Of course, you can train the model on your dataset and have it generate images closer to what you want, including photorealistic ones, which is especially useful if you’re working in product design, architecture, or retail spaces. However, keep in mind that InvokeAI is primarily an image generation engine. You’ll likely have to use your own models for the best results (easily found via the model manager provided in the web interface), as the default model is quite similar to Stable Diffusion itself.

5 Openjourney

Openjourney is a free, open-source AI image generation model based yet again on Stable Diffusion. If you’re wondering why the model is called Openjourney, it’s because it was trained on Midjourney images and can mimic its style in the images it generates.

PromptHero, the company behind Openjourney, lets you test the model alongside other models, including Stable Diffusion (versions 1.5 and 2), DreamShaper, and Realistic Vision. When signing up, you get 25 free credits (one credit for each image generated), after which you have to subscribe to the Pro tier, which costs $9 a month and gives you 300 credits each month along with other exclusive features.
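To put that pricing in perspective, here’s a quick back-of-the-envelope calculation using only the figures above. It’s a rough sketch: the function names are made up, and it assumes you spend the 25 free credits first and then use every Pro credit as soon as you get it.

```python
import math

# Figures quoted in the paragraph above.
FREE_CREDITS = 25
PRO_PRICE_USD = 9
PRO_CREDITS_PER_MONTH = 300


def pro_cost_per_image():
    """Price of one image on the Pro tier, at one credit per image."""
    return PRO_PRICE_USD / PRO_CREDITS_PER_MONTH


def pro_months_needed(images):
    """Months of Pro needed for `images` images once the free credits are spent."""
    paid_images = max(0, images - FREE_CREDITS)
    return math.ceil(paid_images / PRO_CREDITS_PER_MONTH)


def total_cost_usd(images):
    """Total spend in dollars for generating `images` images."""
    return pro_months_needed(images) * PRO_PRICE_USD
```

That works out to about three cents per image on the Pro tier. Anything up to 25 images costs nothing, and a batch of 325 images fits into a single $9 month.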

However, if you want to run it locally and for free, you can download the model file from Hugging Face and run it using the Stable Diffusion web UI. Openjourney is also the second most downloaded AI image generation model on Hugging Face, right behind Stable Diffusion.

Openjourney doesn’t list any specific hardware requirements for running the model locally on its website, but you can expect similar hardware requirements to Stable Diffusion. This means a dedicated GPU with 4GB VRAM, 16GB RAM, and around 12 to 15GB of free space on your computer to save the model and its dependencies.
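If you’re comfortable with Python, the web UI isn’t the only way to run the model locally. The sketch below uses Hugging Face’s `diffusers` library instead, which is a different route from the one described above. It assumes an Nvidia GPU with CUDA, that `torch` and `diffusers` are installed, and that the model lives under the `prompthero/openjourney` repository ID. The helper names are hypothetical.

```python
def load_openjourney(model_id="prompthero/openjourney", low_vram=True):
    """Download (on first run) and load the model onto the GPU."""
    # Imported here so this file can be read without torch/diffusers installed.
    import torch
    from diffusers import StableDiffusionPipeline

    # Half precision roughly halves VRAM use compared to full precision.
    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")
    if low_vram:
        # Trades some speed for lower peak memory; helpful on 4GB cards.
        pipe.enable_attention_slicing()
    return pipe


def generate_image(pipe, prompt, out_path="openjourney.png", steps=25):
    """Run one prompt through the pipeline and save the resulting image."""
    image = pipe(prompt, num_inference_steps=steps).images[0]
    image.save(out_path)
    return out_path
```

A typical session would be `pipe = load_openjourney()` followed by `generate_image(pipe, "a castle on a cliff at dawn")`. The first call downloads several gigabytes of model weights, so expect it to take a while.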

Yadullah Abidi/MakeUseOf/OpenJourney

Images generated by Openjourney tend to be balanced between photorealism and art unless otherwise specified. If you’re looking for an all-around model and prefer the Midjourney look and feel without paying for the subscription, Openjourney is one of the best options.

- Title: Exploring the Finest Free AI Creation Software

- Author: Brian

- Created at : 2024-10-20 20:27:02

- Updated at : 2024-10-26 16:17:35

- Link: https://tech-savvy.techidaily.com/exploring-the-finest-free-ai-creation-software/

- License: This work is licensed under CC BY-NC-SA 4.0.