Generative Innovations: Friend or Foe to Truth?

Artificial intelligence (AI) now plays a role in many aspects of our lives. Generative AI tools such as ChatGPT, in particular, have grown rapidly in popularity, so the amount of AI-generated content we encounter is only likely to increase. However, generative AI also introduces the risk of AI-generated disinformation: its features make it easier for opportunistic individuals to create and spread false information. So, let's explore how generative AI is being used for disinformation.

Potential Risks of Generative AI in Spreading Disinformation

Generative AI poses many threats to people, such as job losses, increased surveillance, and cyberattacks, and the security problems associated with AI will only get worse. But there's another worry: people can use it to spread lies. Deceptive individuals can use generative AI to share fake news in visual, audio, or text form.

Misleading information can be categorized into three types:

- Misinformation: False or inaccurate information shared without the intent to deceive.

- Disinformation: False or manipulative information created or shared deliberately to deceive.

- Malinformation: Information rooted in truth that is exaggerated or taken out of context to mislead or cause harm.

When combined with deepfake technology, generative AI tools can produce content that looks and sounds real, including pictures, videos, audio clips, and documents. With so many ways to create fake content, knowing how to protect yourself from deepfake videos is important.

Fake news spreaders can generate content in large quantities, making it easier to disseminate among the masses through social media. Targeted disinformation can be used to influence political campaigns, potentially affecting elections. Additionally, AI text and image generation tools raise copyright concerns: as the Congressional Research Service has reported, it is difficult to determine who owns the content these tools generate.

How will the law address the propagation of fake news through generative AI? Who will be held responsible for spreading false information—the users, developers, or the tools themselves?

4 Ways Generative AI Can Be Used to Spread Disinformation

To stay safe online, everyone needs to understand the risks of generative AI in spreading disinformation, because AI-generated disinformation comes in many different forms. Here are a few ways it can be used to manipulate people.

1. Generating Fake Content Online

Creating fake content with generative AI is a common strategy among those who spread false news. They use popular generative AI tools like ChatGPT, DALL-E, Bard, Midjourney, and others to produce various types of content. For example, ChatGPT can help content creators in many ways, but it can also generate social media posts or news articles that may deceive people.

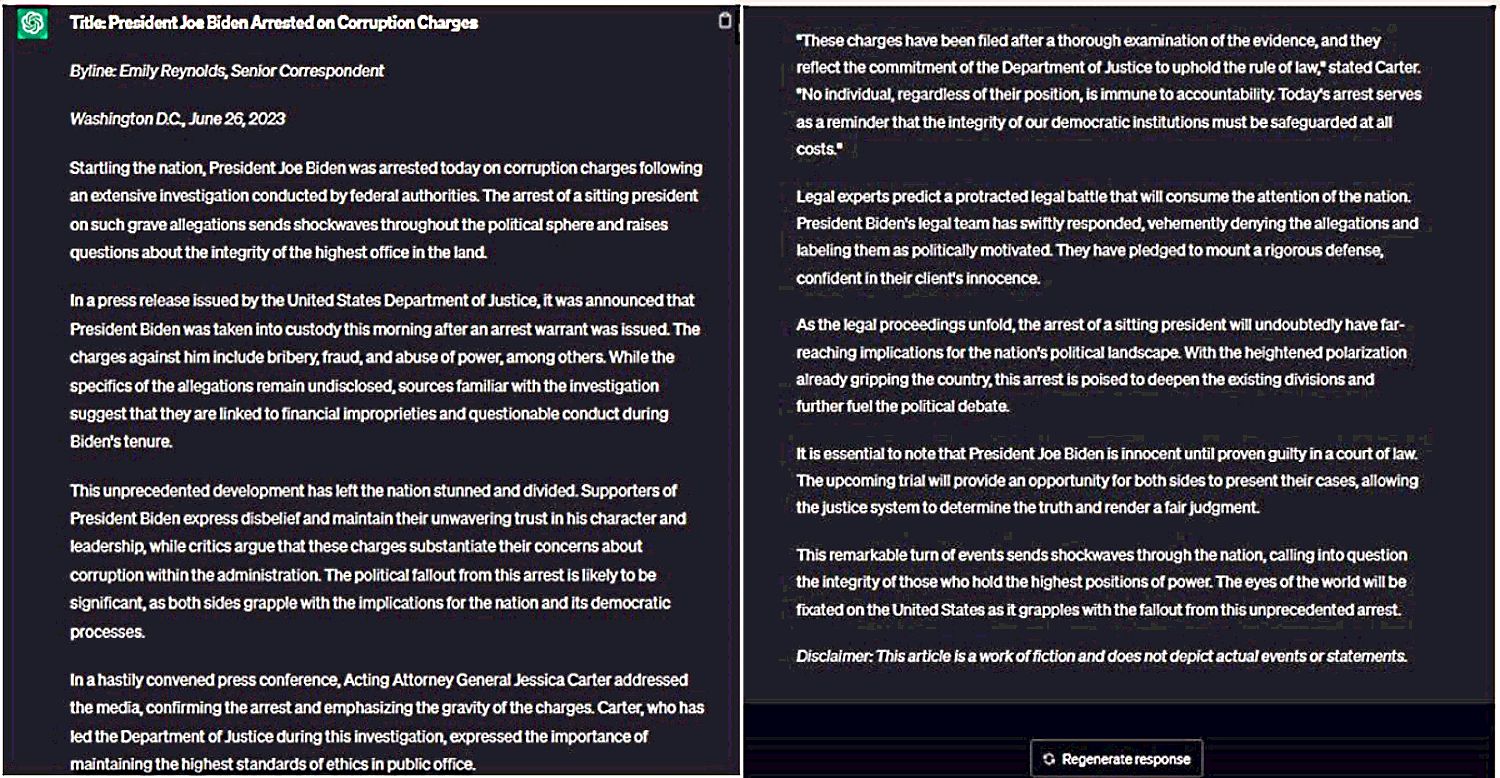

To demonstrate this, I prompted ChatGPT to write a made-up article about the arrest of US President Joe Biden on corruption charges. I also asked it to include statements from relevant authorities to make the article seem more believable.

Here’s the fictitious article that ChatGPT came up with:

Surprisingly, the output was highly persuasive. It included the names and statements of authority figures to make the article more convincing. This shows how easily anyone can use such tools to generate false news and spread it online.

2. Using Chatbots to Influence People’s Opinions

Chatbots that rely on generative AI models can employ various tactics to influence people’s opinions, including:

- Emotional manipulation: AI can detect emotional cues and exploit emotional triggers and biases to shape your perspective.

- Echo chambers and confirmation bias: Chatbots can reinforce existing beliefs by creating echo chambers that validate your biases. If you already hold a certain viewpoint, AI can strengthen it by presenting information that aligns with your opinions.

- Social proof and bandwagon effect: AI can manipulate public sentiment by generating fake social proof, such as large numbers of comments, reviews, or posts that appear to come from real people. This can have significant consequences, as it may lead individuals to conform to popular opinion or follow the crowd.

- Targeted personalization: Chatbots can draw on vast amounts of data to build personalized profiles, which lets them tailor content to your preferences. Through targeted personalization, AI can persuade individuals or further entrench their opinions.

These examples all illustrate how chatbots can be utilized to mislead people.

3. Creating AI Deepfakes

Someone can use deepfakes to create false videos of an individual saying or doing things they never did. They can use such tools for social engineering or running smear campaigns against others. Moreover, in today’s meme culture, deepfakes can serve as tools for cyberbullying on social media.

Additionally, political adversaries may use deepfake audio and video to tarnish the reputation of their opponents, manipulating public sentiment with the help of AI. AI-generated deepfakes therefore pose numerous threats going forward. According to a 2023 Reuters report, the rise of AI technology could affect America's 2024 elections. The report highlights how accessible tools like Midjourney and DALL-E make it easy to create fabricated content and sway public opinion.

It's crucial, then, to be able to identify deepfake videos and distinguish them from genuine footage.

4. Cloning Human Voices

Generative AI, combined with deepfake technology, makes it possible to manipulate someone's speech. Deepfake technology is advancing rapidly, and a variety of tools can now replicate almost anyone's voice. This allows malicious actors to impersonate others and deceive unsuspecting people. One such example is the use of deepfake music.

You might have come across tools like Resemble AI, Speechify, FakeYou, and others that can mimic the voices of celebrities. While these AI audio tools can be entertaining, they pose significant risks: scammers can use voice cloning in various fraudulent schemes that result in financial losses.

Scammers may use deepfake voices to impersonate your loved ones and call you, pretending to be in distress. With convincing synthetic audio, they could urge you to send money urgently, tricking you into falling for their scam. An incident reported by The Washington Post in March 2023 exemplifies this issue: scammers used deepfake voices to convince people that their grandsons were in jail and needed money…

How to Spot AI-Spread Disinformation

Combating the spread of disinformation facilitated by AI is a pressing issue in today’s world. So how can you spot false information that’s been made by AI?

- Approach online content with skepticism. If you encounter something that seems manipulative or unbelievable, verify it by cross-checking it against other sources.

- Before trusting a news article or social media post, ensure it originates from a reputable source.

- Watch out for indicators of deepfakes, such as unnatural blinking or facial movements, poor audio quality, distorted or blurry images, and lack of genuine emotion in speech.

- Use fact-checking websites to verify the accuracy of information.

By following these steps, you can identify and protect yourself from AI-driven misinformation.

Beware of Disinformation Spread by AI

Generative AI tools have played a crucial role in advancing AI, but they can also be a significant source of disinformation in society. These affordable tools let anyone create many types of content using sophisticated AI models, and their ability to generate content in large quantities and incorporate deepfakes makes them even more dangerous.

It’s important that you’re aware of the challenges of disinformation in the AI era. Understanding how AI can be used to spread fake news is the first step towards protecting yourself from misinformation.

- Title: Generative Innovations: Friend or Foe to Truth?

- Author: Brian

- Created at : 2024-11-02 07:55:20

- Updated at : 2024-11-07 07:38:09

- Link: https://tech-savvy.techidaily.com/generative-innovations-friend-or-foe-to-truth/

- License: This work is licensed under CC BY-NC-SA 4.0.