Getting Acquainted with LangChain Technology

With the introduction of large language models (LLMs), natural language processing has become the talk of the internet. New applications appear daily, driven by LLM-based tools like ChatGPT and frameworks like LangChain.

LangChain is an open-source Python framework for building applications powered by large language models. Typical applications include chatbots, summarization, generative question answering, and many more.

This article introduces LangChain. It covers the basic concepts, how LangChain differs from working with language models directly, and how to get started with it.

Disclaimer: This post includes affiliate links

If you click on a link and make a purchase, I may receive a commission at no extra cost to you.

Understanding the LangChain LLM

Before explaining how LangChain works, you first need to understand how large language models work. A large language model is a type of artificial intelligence (AI) model that uses deep learning to learn from very large datasets of text, numbers, and code.

Training on so much data lets the model learn the patterns and relationships between words, figures, and symbols. This allows the model to perform an array of tasks, such as:

- Text generation, language translation, creative, technical, and academic content writing, and accurate and relevant question answering.

- Object detection in images, for multimodal models that also accept images as input.

- Summarization of books, articles, and research papers.

The most significant limitation of LLMs is that they are very general. Although they perform many tasks well, they may give generic answers to questions or prompts that require expertise and deep domain knowledge, instead of specific ones.

Developed by Harrison Chase in late 2022, the LangChain framework offers an innovative approach to working with LLMs. The process starts by preprocessing the source texts, breaking them down into smaller parts (chunks) or summaries. These pieces are then embedded as vectors in a vector space. When the application receives a question, it searches the stored pieces for the most relevant ones and passes them to the LLM along with the question, so the model can give an appropriate, data-grounded response.

This preprocessing step becomes increasingly important as LLMs grow more powerful and the data they work with grows larger. It is mainly used for code search and semantic search, because it lets the application retrieve relevant data at query time and pass it to the LLM.
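The split, embed, and retrieve flow described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not LangChain's API: split_into_chunks, embed, cosine, retrieve, and build_prompt are hypothetical helpers, and embed uses a toy bag-of-words count vector as a stand-in for a real embedding model and vector store.

```python
import math
import re
from collections import Counter


def split_into_chunks(text: str) -> list[str]:
    """Split a document into sentence-sized chunks (real splitters use size limits)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts, standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved chunks and the question into a single LLM prompt."""
    return "Answer using this context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"


document = (
    "LangChain was created by Harrison Chase. "
    "It connects language models to external data. "
    "Vector stores hold embedded chunks for similarity search."
)
chunks = split_into_chunks(document)
question = "Who created LangChain?"
rag_prompt = build_prompt(question, retrieve(question, chunks))
print(rag_prompt)
```

In a real LangChain application, the toy pieces would be replaced by a text splitter, an embedding model, and a vector store; the overall shape of split, embed, retrieve, then prompt stays the same.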

LangChain LLM vs. Other Language Models

The following overview highlights the features and capabilities that set LangChain apart from using a language model on its own:

- Memory: LLMs have a limited context window, so earlier parts of a conversation are lost once the prompts exceed that limit. LangChain provides memory components that store previous prompts and responses and resend the relevant history with each new message, so the LLM can recap the earlier context. Windowed variants keep only the most recent exchanges, which helps the history stay within the limit.

- LLM Switching: Instead of locking your software into a single model's API, LangChain provides an abstraction that simplifies switching between LLMs or integrating several of them into one application. This is useful when, for example, you want to move from OpenAI's GPT-3.5 to a compact model such as Stability AI's StableLM.

- Integration: LangChain is easy to integrate into an application. It provides pipeline workflows through chains and agents. Chains connect several components into a linear pipeline, where each step feeds the next. Agents are more advanced: instead of following a fixed sequence, they decide how the components should interact at run time. For instance, you might use conditional logic to determine the next course of action based on the results of an LLM.

- Data Passing: Because LLMs work with plain text, it is usually tricky to pass your own data to the model. LangChain addresses this with indexes. Indexes let an application load data from various formats and store it so that the relevant pieces can be retrieved and served to an LLM.

- Responses: A plain LLM response is free-form text. LangChain provides output parsers that convert responses into a suitable structured format. When using AI in an application, a structured response is preferable because you can program against it.
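The memory idea can be sketched in a few lines of plain Python. The WindowMemory class below is hypothetical and is not LangChain's implementation; it only mimics the idea behind LangChain's windowed buffer memory (ConversationBufferWindowMemory with k=2): store each exchange and replay only the most recent k of them as history.

```python
from collections import deque


class WindowMemory:
    """Hypothetical helper: keeps only the last k human/AI exchanges."""

    def __init__(self, k: int = 2):
        # A deque with maxlen silently drops the oldest exchange when full.
        self.turns: deque = deque(maxlen=k)

    def save(self, human: str, ai: str) -> None:
        """Record one exchange."""
        self.turns.append((human, ai))

    def as_history(self) -> str:
        """Render the remembered exchanges as text to prepend to the next prompt."""
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)


memory = WindowMemory(k=2)
memory.save("Hi!", "Hello! How can I help?")
memory.save("My name is Ada.", "Nice to meet you, Ada.")
memory.save("What is my name?", "Your name is Ada.")
history = memory.as_history()
print(history)
```

After three exchanges with k=2, the first greeting is gone, so the prompt never grows beyond two exchanges of history. The trade-off is that anything older than the window is forgotten.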
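Chains, agent-style routing, and model switching can be illustrated with plain functions. In this hypothetical sketch, fake_gpt, fake_stablelm, and fake_classifier stand in for real model calls so the code runs offline, and make_chain and route are illustrative names, not LangChain APIs. Real LangChain agents let the LLM choose among tools; the fixed router here only shows the idea of using one model's output to pick the next step.

```python
from typing import Callable, Dict

# An "LLM" here is any function that maps a prompt string to a completion.
LLM = Callable[[str], str]


# Hypothetical stand-ins for real model calls, so the sketch runs offline.
def fake_gpt(prompt: str) -> str:
    return f"[gpt] {prompt}"


def fake_stablelm(prompt: str) -> str:
    return f"[stablelm] {prompt}"


def fake_classifier(prompt: str) -> str:
    # Pretends to be an LLM that labels a question as "math" or "general".
    return "math" if any(ch.isdigit() for ch in prompt) else "general"


def make_chain(llm: LLM, template: str) -> Callable[[str], str]:
    """Linear chain: fill the template, then pass the result to the model."""
    def run(user_input: str) -> str:
        return llm(template.format(input=user_input))
    return run


def route(question: str, classifier: LLM, chains: Dict[str, Callable[[str], str]]) -> str:
    """Agent-style step: one model's output decides which chain runs next."""
    label = classifier(question).strip()
    return chains.get(label, chains["general"])(question)


# Switching models only changes the function passed to make_chain.
general = make_chain(fake_gpt, "Answer briefly: {input}")
general_local = make_chain(fake_stablelm, "Answer briefly: {input}")
math_chain = make_chain(fake_gpt, "Solve step by step: {input}")
chains = {"math": math_chain, "general": general}

print(route("What is 2 + 2?", fake_classifier, chains))
```

Because every model sits behind the same prompt-in, text-out signature, swapping GPT for a local model touches one line, which is the same benefit the LLM-switching abstraction aims for.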
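The output-parser idea can also be shown without LangChain. The sketch assumes the model was instructed to reply in JSON; the Answer dataclass, FORMAT_INSTRUCTIONS string, and parse_answer function are hypothetical names, not LangChain's parser classes.

```python
import json
from dataclasses import dataclass


@dataclass
class Answer:
    answer: str
    confidence: float


# Instructions appended to the prompt so the model replies in JSON.
FORMAT_INSTRUCTIONS = (
    'Reply only with JSON like {"answer": "...", "confidence": 0.0} '
    "where confidence is between 0 and 1."
)


def parse_answer(raw: str) -> Answer:
    """Extract the JSON object from raw model text and validate it."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError(f"No JSON object found in: {raw!r}")
    data = json.loads(raw[start:end + 1])
    confidence = float(data["confidence"])
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return Answer(answer=str(data["answer"]), confidence=confidence)


# What you would send to the model, and a sample reply with chatty extra text.
full_prompt = "What is LangChain?\n\n" + FORMAT_INSTRUCTIONS
raw_reply = 'Sure! {"answer": "A framework for LLM apps", "confidence": 0.9}'
parsed = parse_answer(raw_reply)
print(parsed.answer, parsed.confidence)
```

Because parse_answer raises ValueError on malformed output, the calling code can retry the request or fall back instead of passing unpredictable text further into the program.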

Getting Started With LangChain LLM

Now you will build a simple LangChain application to see how it works in practice. Before starting development, you need to set up the development environment.

Setting Up Your Development Environment

First, create a virtual environment and install the dependencies below:

- openai: The OpenAI Python client, used to call OpenAI's models (such as GPT-3) from your application.

- langchain: The LangChain framework itself.

Using pipenv, run the command below to install the dependencies:

```shell
pipenv install langchain openai
```

The command creates a virtual environment for the project (if one does not exist yet) and installs both packages into it.

Import the Installed Dependencies

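First, import the necessary classes: LLMChain, OpenAI, and PromptTemplate, plus ConversationBufferWindowMemory from langchain.memory.

```python
# Classic (0.0.x-era) LangChain import paths. Newer releases move OpenAI to the
# separate langchain-openai package and deprecate LLMChain and the memory classes.
from langchain.chains import LLMChain
from langchain.llms import OpenAI
from langchain.prompts import PromptTemplate
from langchain.memory import ConversationBufferWindowMemory
```

PromptTemplate defines the prompt, OpenAI wraps the language model, LLMChain connects the two and runs them, and ConversationBufferWindowMemory keeps the most recent exchanges of the conversation.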

Access OpenAI API Key

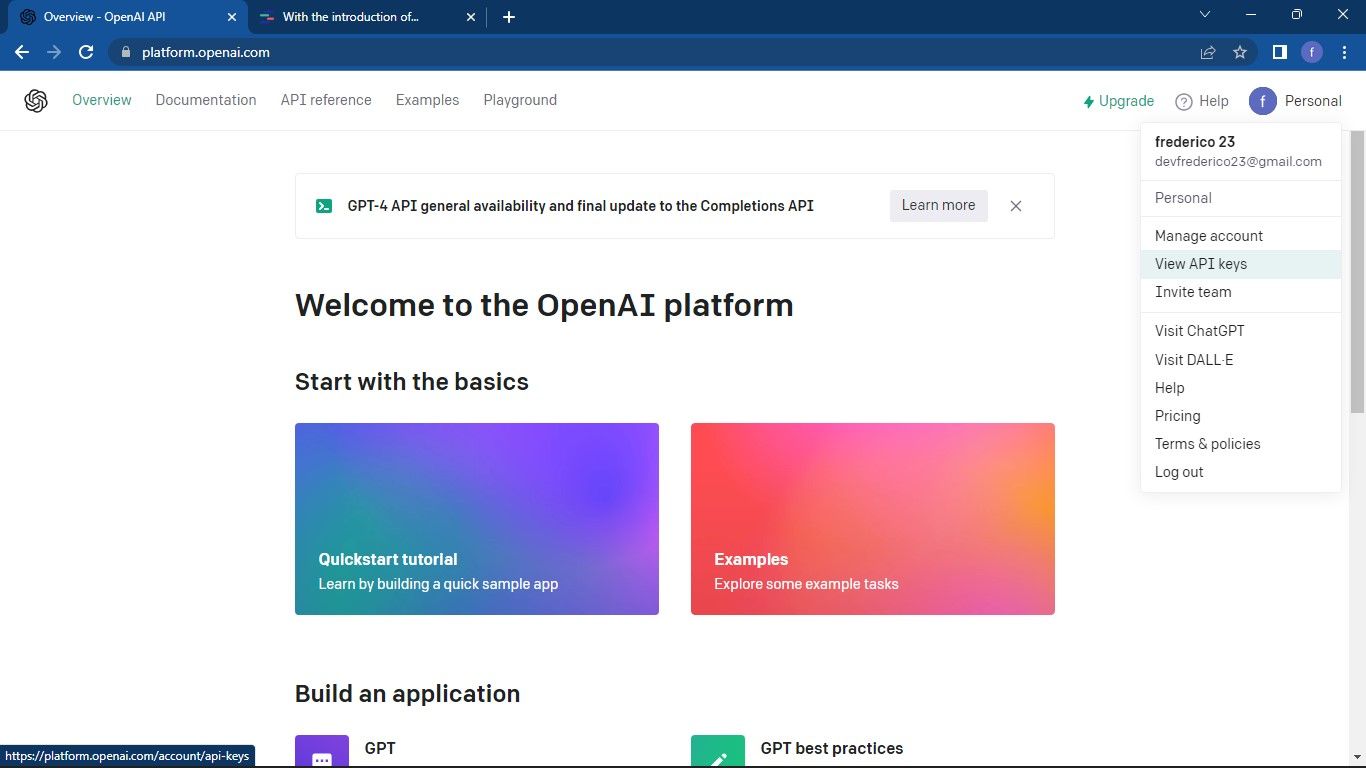

Next, get an OpenAI API key. You need an OpenAI account; once signed in, go to the OpenAI API platform.

On the dashboard, click the Profile icon, then click the View API keys button.

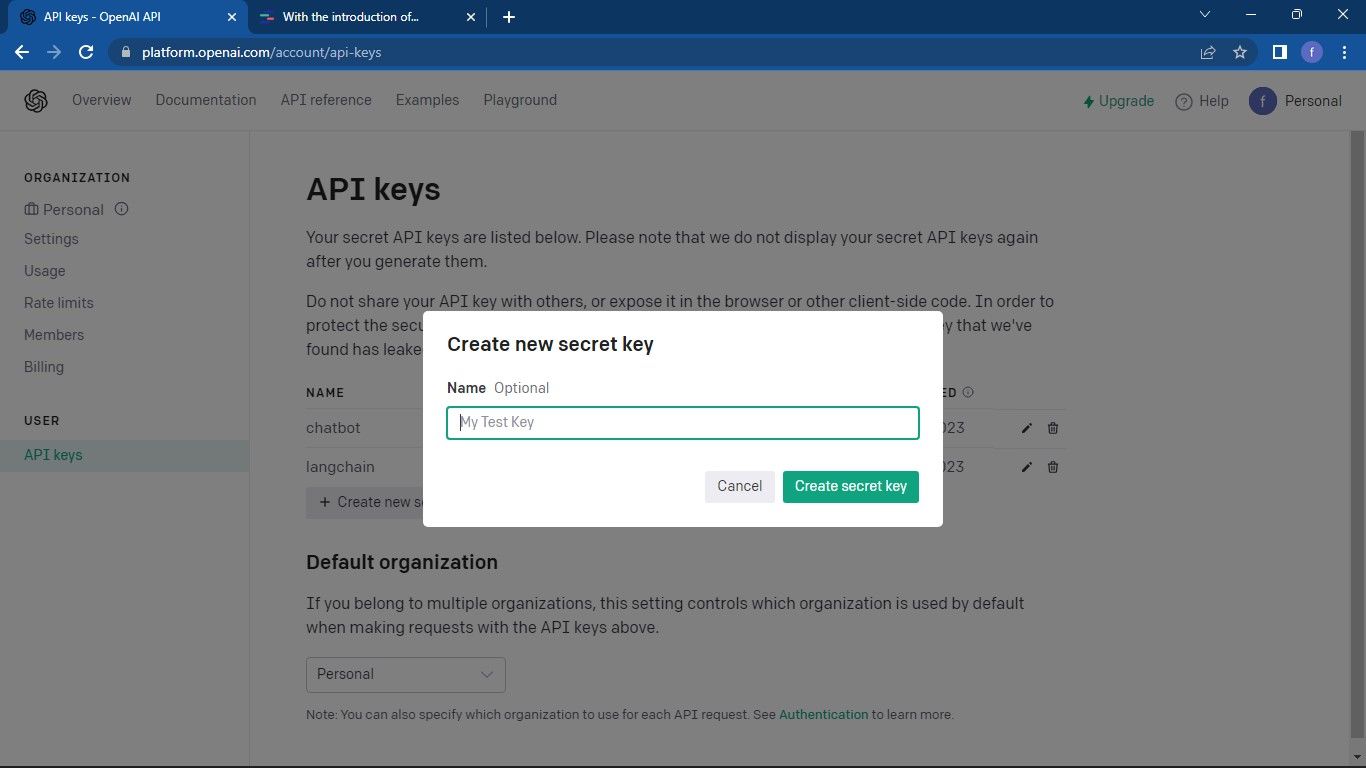

Next, click the Create new secret key button to get a new API key.

Enter the requested name of the API key.

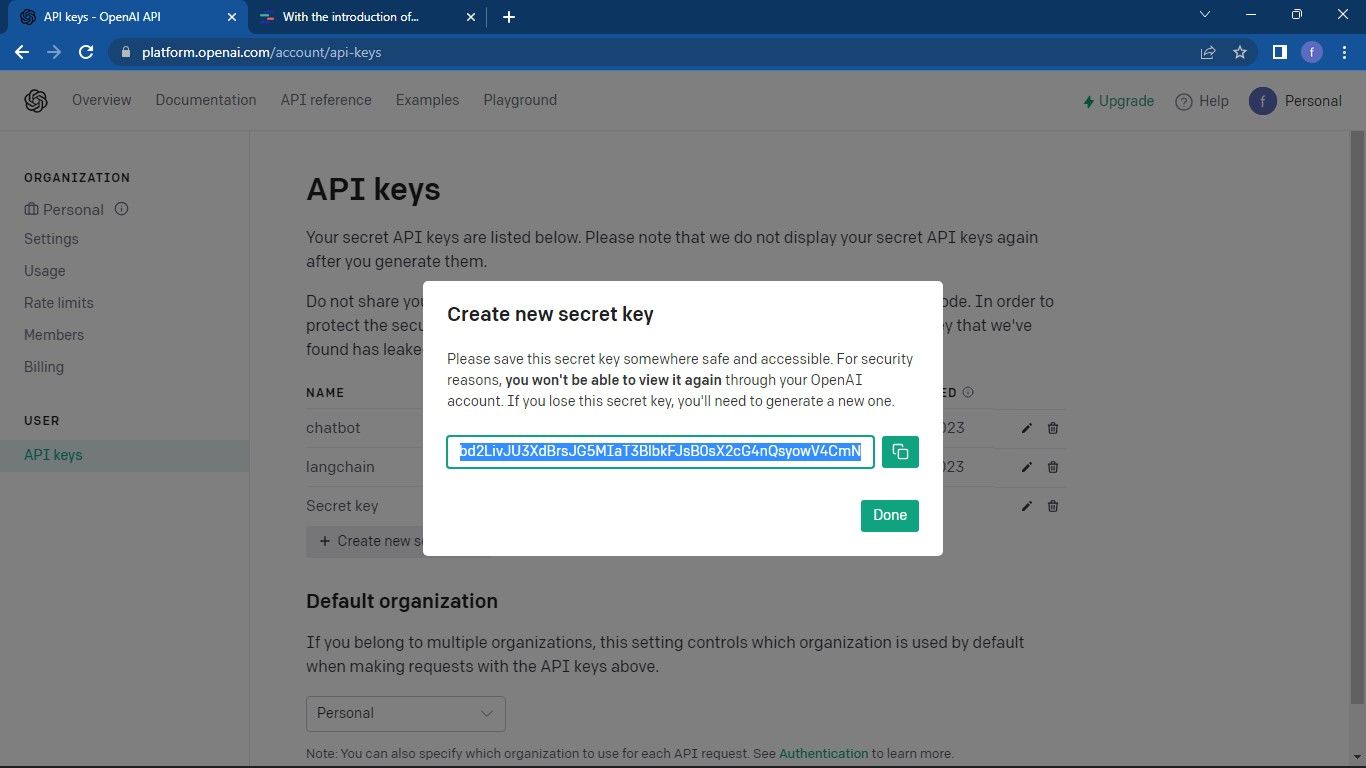

You will receive a secret key prompt.

Copy and store the API key in a safe place for future use.
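Rather than pasting the key into source code, a common practice is to keep it in an environment variable. The helper below is a hypothetical sketch; it assumes the variable is named OPENAI_API_KEY, which is the name the official openai package reads by default. The env parameter exists only so the function can be tested with a plain dictionary.

```python
import os
from collections.abc import Mapping


def get_openai_api_key(env: Mapping = os.environ, var: str = "OPENAI_API_KEY") -> str:
    """Return the API key from the environment, or fail with a helpful message."""
    key = env.get(var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var} environment variable before running the app.")
    return key
```

On macOS or Linux you would set the variable in your shell with `export OPENAI_API_KEY="your-key"` before starting the application, which keeps the key out of version control.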

Developing an Application Using LangChain LLM

Now develop a simple chat application. Start by defining a prompt template that includes the conversation history and the new user input:

```python
# Customize the LLM prompt template.
# {history} receives the remembered conversation; {human_input} is the new message.
template = """Assistant is a large language model trained by OpenAI.

{history}
Human: {human_input}
Assistant:"""

prompt = PromptTemplate(input_variables=["history", "human_input"], template=template)
```
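To see what the model actually receives on each turn, the chat template can be filled in by hand. This sketch assumes PromptTemplate's default f-string-style substitution, which behaves like Python's str.format; the history string is invented for illustration and follows the Human:/AI: style that LangChain's buffer memory uses by default.

```python
# The chat template, filled in manually with str.format.
template = """Assistant is a large language model trained by OpenAI.

{history}
Human: {human_input}
Assistant:"""

# Hypothetical earlier exchange, formatted the way the memory would supply it.
history = "Human: Hi!\nAI: Hello! How can I help?"

filled = template.format(history=history, human_input="What is MakeUseOf?")
print(filled)
```

The prompt ends with "Assistant:", which nudges a completion model to continue by writing the assistant's reply.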

Next, you will load the ChatGPT chain using the API key you stored earlier.

`chatgpt_chain = LLMChain(

llm=OpenAI(openai_api_key=”OPENAI_API_KEY”,temperature=0),

prompt=prompt,

verbose=True,

memory=ConversationBufferWindowMemory(k=2),

)

Predict a sentence using the chatgpt chain

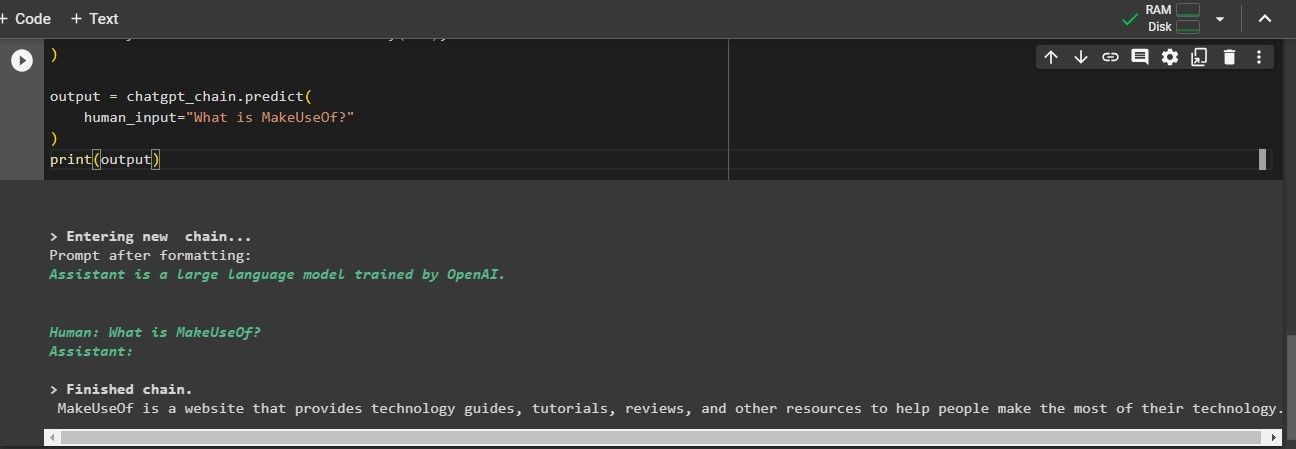

output = chatgpt_chain.predict(

human_input=”What is MakeUseOf?”

)

Display the model’s response

print(output)`

This code loads the LLM chain with the OpenAI API key and the prompt template. User input is then provided, and its output is displayed.

Above is the expected output.

The Rising Influence of LLMs

LLM adoption is growing rapidly and changing how humans interact with machines and access knowledge. Frameworks like LangChain are at the forefront of giving developers a smooth and simple way to bring LLMs into their applications. Generative AI products like ChatGPT and Bard, along with platforms such as Hugging Face, are also advancing LLM applications.

- Title: Getting Acquainted with LangChain Technology

- Author: Brian

- Created at : 2024-11-10 17:37:06

- Updated at : 2024-11-17 16:08:40

- Link: https://tech-savvy.techidaily.com/getting-acquainted-with-langchain-technology/

- License: This work is licensed under CC BY-NC-SA 4.0.