In-Depth Steps to Running Llama 2 Locally on Your Device

Meta released Llama 2 in the summer of 2023. The new version was trained on 40% more tokens than the original Llama model, has double the context length, and significantly outperforms other available open-source models. The fastest and easiest way to access Llama 2 is through an API on an online platform. However, if you want the best experience, install and load Llama 2 directly on your computer.

With that in mind, we’ve created a step-by-step guide on how to use Text-Generation-WebUI to load a quantized Llama 2 LLM locally on your computer.

Why Install Llama 2 Locally

There are many reasons why people choose to run Llama 2 directly. Some do it for privacy concerns, some for customization, and others for offline capabilities. If you’re researching, fine-tuning, or integrating Llama 2 for your projects, then accessing Llama 2 via API might not be for you. The point of running an LLM locally on your PC is to reduce reliance on third-party AI tools and use AI anytime, anywhere, without worrying about leaking potentially sensitive data to companies and other organizations.

With that said, let’s begin with the step-by-step guide to installing Llama 2 locally.

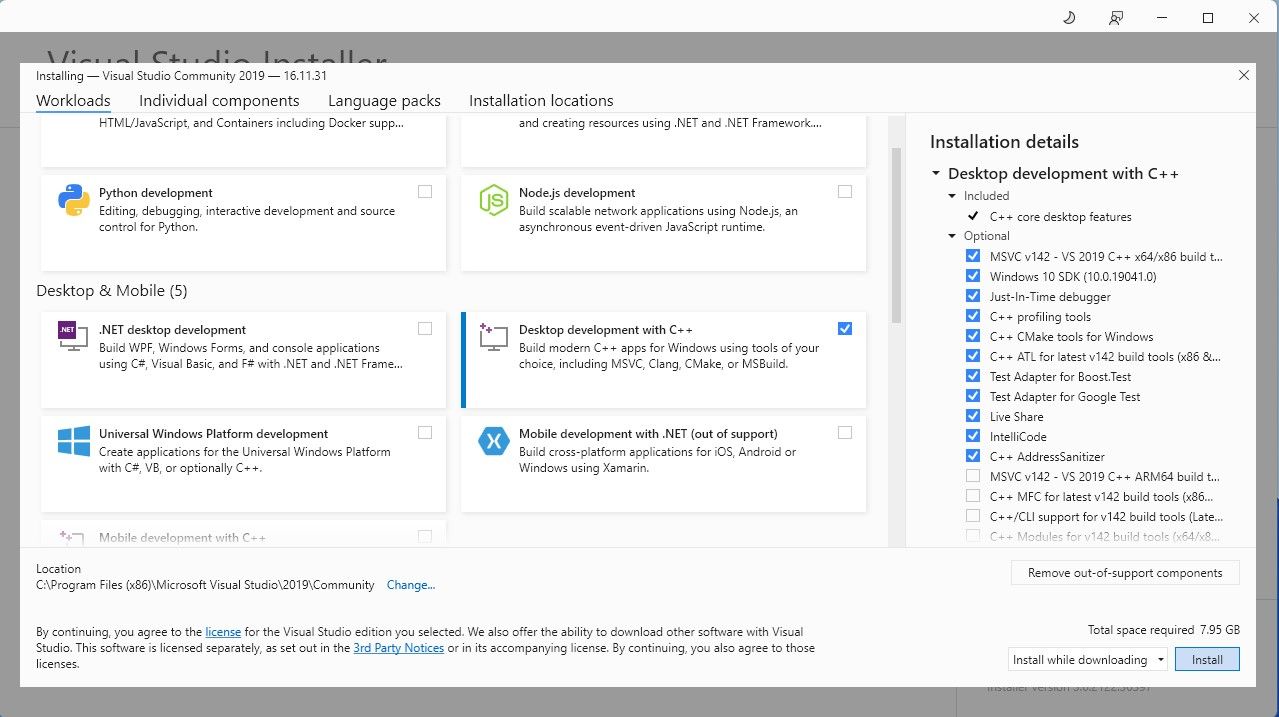

Step 1: Install Visual Studio 2019 Build Tools

To simplify things, we will use a one-click installer for Text-Generation-WebUI (the program used to load Llama 2 with a GUI). However, for this installer to work, you need to download the Visual Studio 2019 Build Tools and install the necessary resources.

Download: Visual Studio 2019 (Free)

- Download the Community edition of the software.

- Install Visual Studio 2019, then open the software. Once it opens, tick the Desktop development with C++ box and click Install.

Now that you have Desktop development with C++ installed, it’s time to download the Text-Generation-WebUI one-click installer.

Step 2: Install Text-Generation-WebUI

The Text-Generation-WebUI one-click installer is a script that automatically creates the required folders and sets up the Conda environment and all necessary requirements to run an AI model.

To install the script, download the one-click installer by clicking on Code > Download ZIP.

Download: Text-Generation-WebUI Installer (Free)

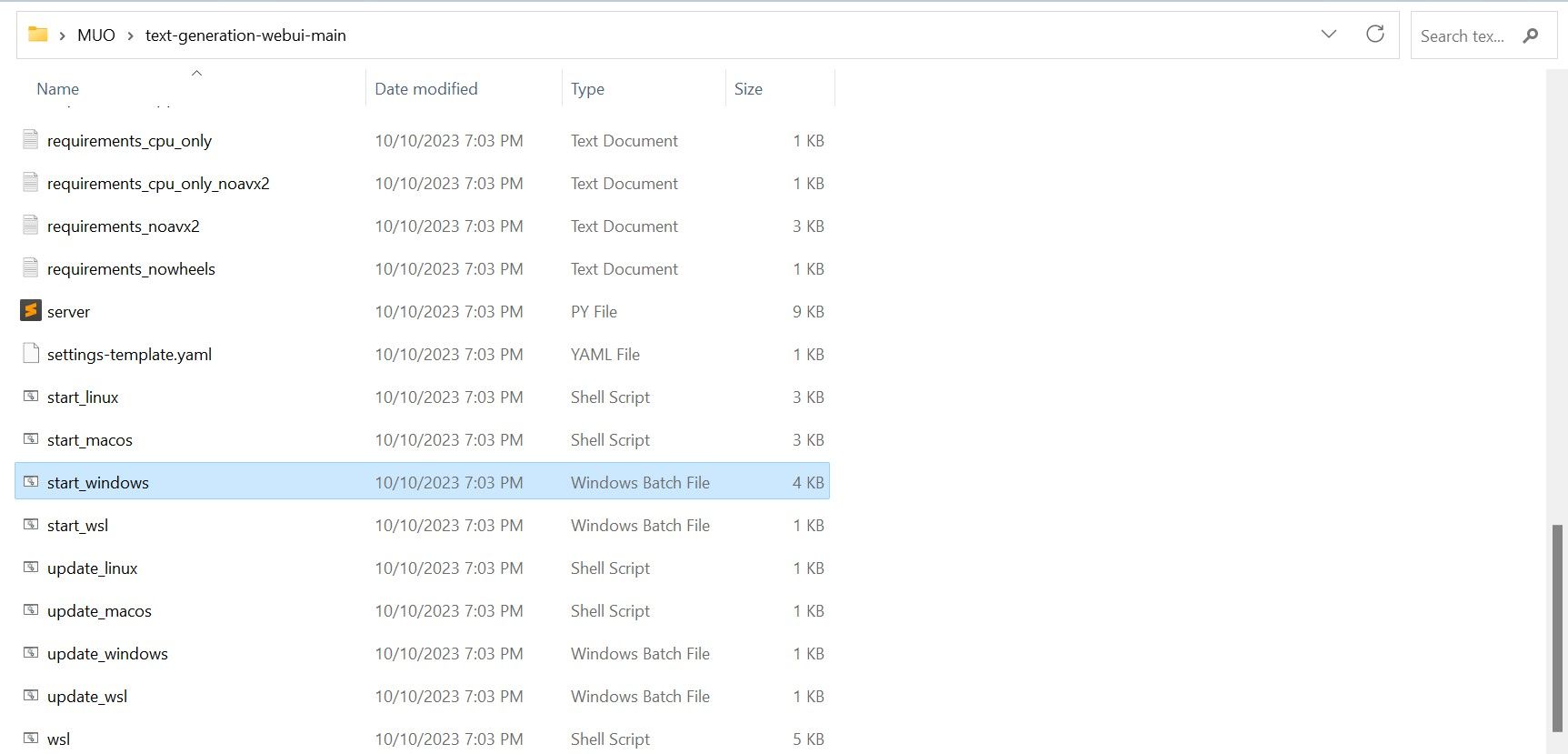

- Once downloaded, extract the ZIP file to your preferred location, then open the extracted folder.

- Within the folder, look for the start script that matches your operating system, then run it by double-clicking it.

- On Windows, select the start_windows batch file.

- On macOS, select the start_macos shell script.

- On Linux, select the start_linux shell script.

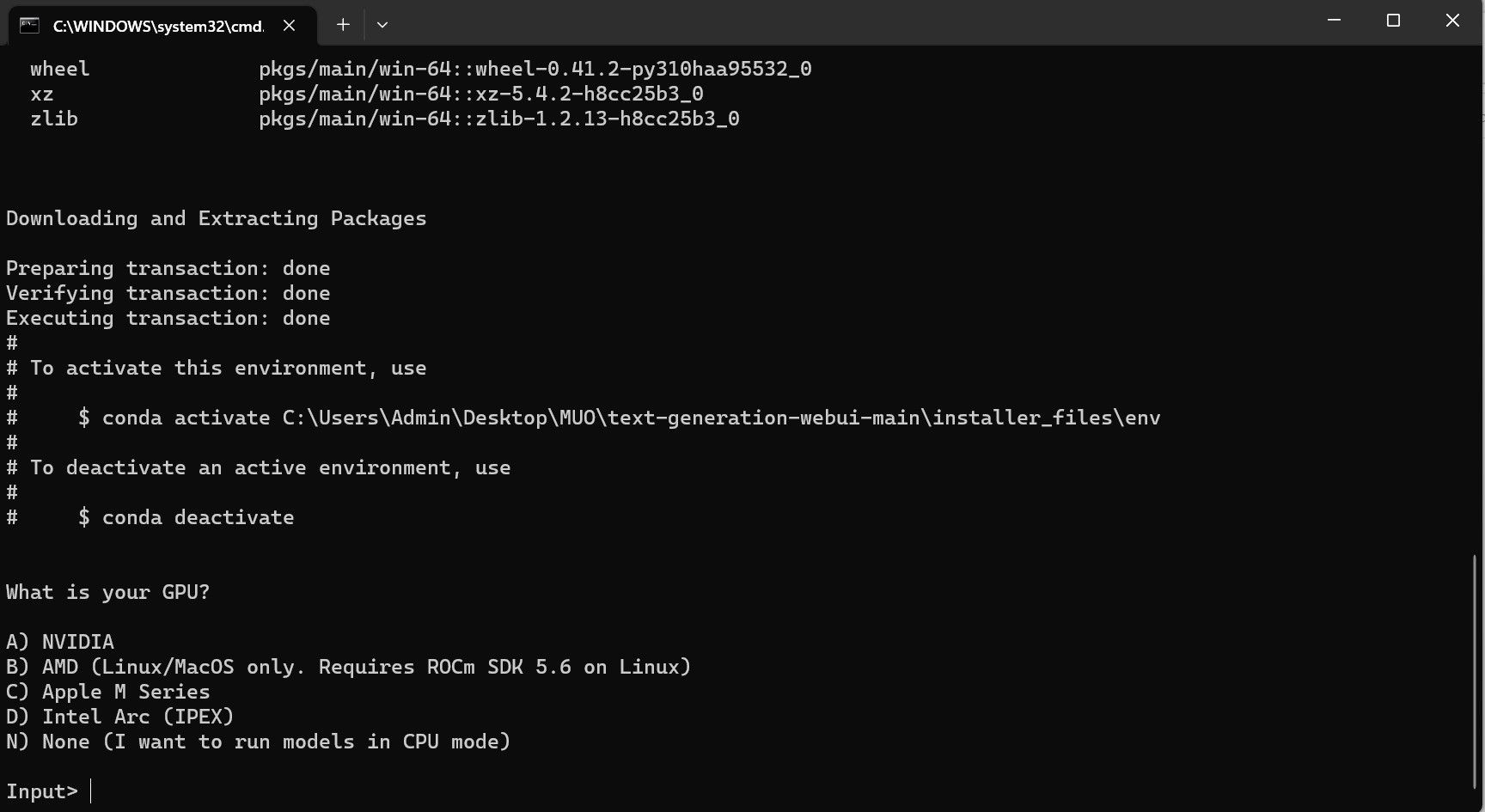

- Your antivirus might raise an alert; this is fine. The prompt is a false positive triggered by running a batch file or script. Click on Run anyway.

- A terminal will open and start the setup. Early on, the setup will pause and ask which GPU you are using. Select the type of GPU installed on your computer and press Enter. If you don't have a dedicated graphics card, select None (I want to run models in CPU mode). Keep in mind that running in CPU mode is much slower than running the model on a dedicated GPU.

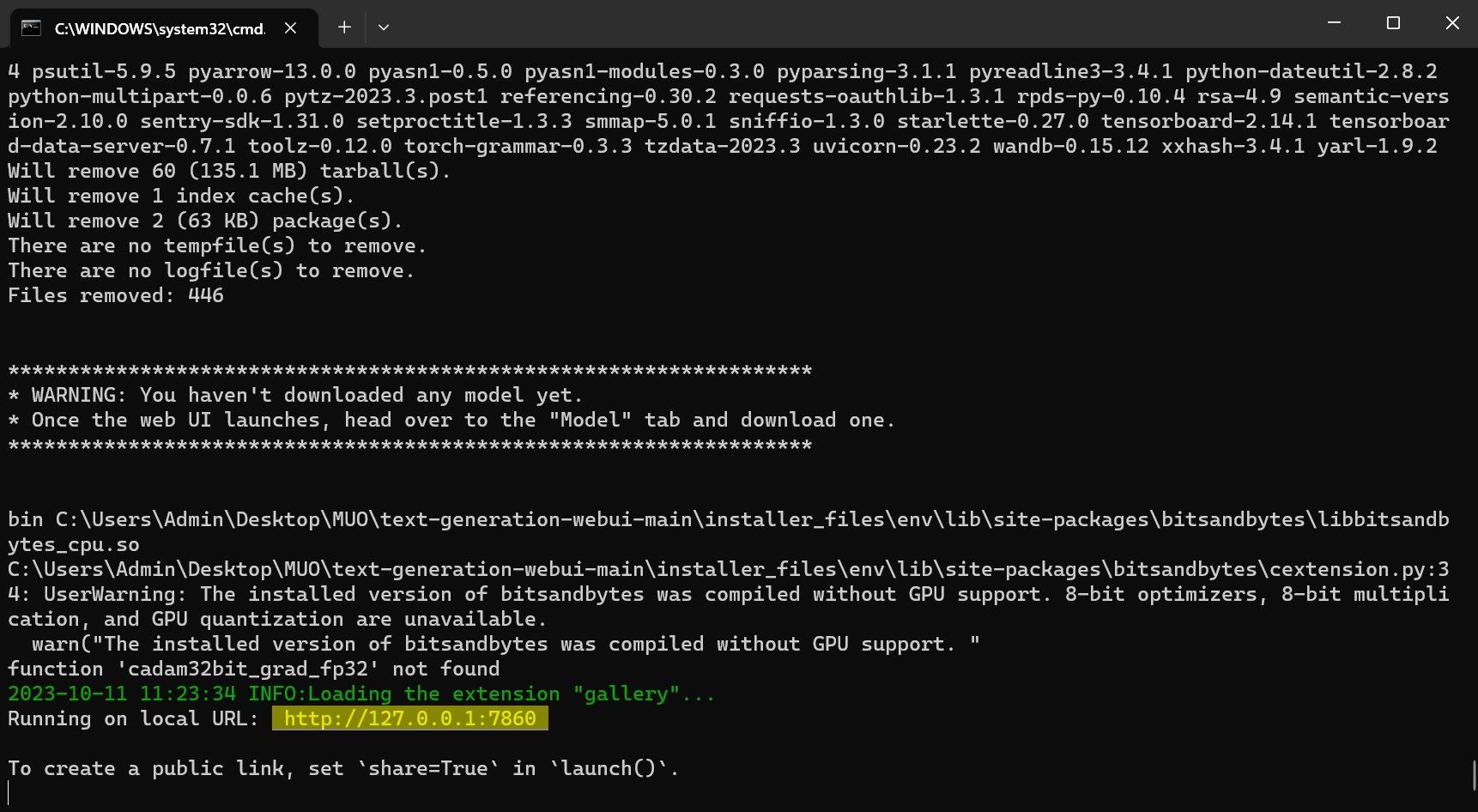

- Once the setup is complete, you can launch Text-Generation-WebUI locally. Open your preferred web browser and enter the IP address shown in the terminal into the address bar.

- The WebUI is now ready for use.
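If you would rather launch the installer from a script than hunt for the right file by hand, the choice of start script described above can be sketched in a few lines of Python. This is only an illustration: the `start_<os>` names come from the steps above, while the `.bat` and `.sh` extensions and the helper name `start_script_for` are assumptions.

```python
import platform

# Script names follow the start_<os> pattern described in the steps above.
# The file extensions (.bat / .sh) are an assumption, not taken from the guide.
START_SCRIPTS = {
    "Windows": "start_windows.bat",
    "Darwin": "start_macos.sh",  # platform.system() reports macOS as "Darwin"
    "Linux": "start_linux.sh",
}


def start_script_for(system_name: str) -> str:
    """Return the start script for a value returned by platform.system()."""
    try:
        return START_SCRIPTS[system_name]
    except KeyError:
        raise ValueError(f"No start script known for platform: {system_name!r}")


if __name__ == "__main__":
    # Print the script that matches the machine this runs on.
    print(start_script_for(platform.system()))
```

Run it from inside the extracted folder to see which file to double-click on your machine.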

However, the program is only a model loader. Let’s download Llama 2 for the model loader to launch.

Step 3: Download the Llama 2 Model

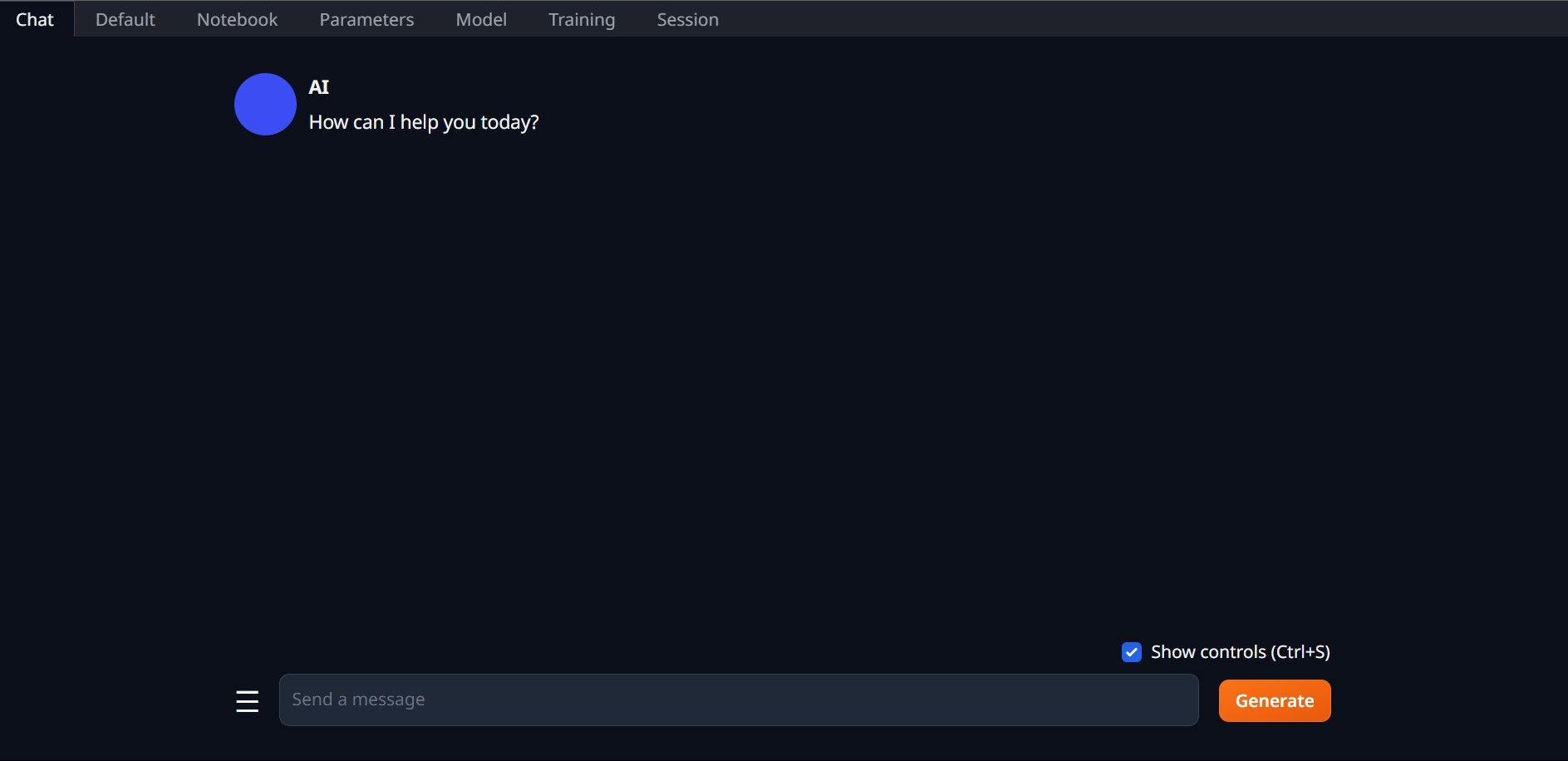

There are quite a few things to consider when deciding which iteration of Llama 2 you need. These include parameters, quantization, hardware optimization, size, and usage. All of this information is denoted in the model’s name.

- Parameters: The number of parameters in the model. Models with more parameters are more capable but run slower and need more memory.

- Usage: Can either be standard or chat. A chat model is optimized to be used as a chatbot like ChatGPT, while the standard is the default model.

- Hardware Optimization: Refers to what hardware best runs the model. GPTQ means the model is optimized to run on a dedicated GPU, while GGML is optimized to run on a CPU.

- Quantization: Denotes the precision of the model’s weights and activations. For inference, q4 (4-bit) precision is a good default.

- Size: Refers to the size of the specific model.

Note that some models may be arranged differently and may not even have the same types of information displayed. However, this type of naming convention is fairly common in the HuggingFace Model library, so it’s still worth understanding.

In this example, the model can be identified as a medium-sized Llama 2 model with 13 billion parameters, optimized for chat inference on a CPU.
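To make the naming convention concrete, here is a minimal Python sketch that pulls those fields out of a file name. It assumes names shaped like the one used later in this guide (llama-2-7b-chat-ggmlv3.q4_K_S.bin); the `ModelInfo` type and `parse_model_name` function are hypothetical helpers, and models that follow a different convention will not parse cleanly.

```python
import re
from typing import NamedTuple, Optional


class ModelInfo(NamedTuple):
    parameters: Optional[str]    # e.g. "7b"
    usage: str                   # "chat" or "standard"
    optimization: Optional[str]  # "gptq" (GPU) or "ggml" (CPU)
    quantization: Optional[str]  # e.g. "q4_K_S"


def parse_model_name(name: str) -> ModelInfo:
    """Best-effort parse of a model file name; unknown fields become None."""
    lower = name.lower()
    # Parameter count: digits followed by "b", such as "7b" or "13b".
    params = re.search(r"\d+b(?![a-z0-9])", lower)
    # Hardware optimization: whichever format tag appears in the name.
    optimization = next((fmt for fmt in ("gptq", "ggml") if fmt in lower), None)
    # Quantization: "q" plus a digit and optional suffixes, such as "q4_K_S".
    quant = re.search(r"(?<![a-z])q\d(?:_[a-z0-9]+)*", name, re.IGNORECASE)
    return ModelInfo(
        parameters=params.group(0) if params else None,
        usage="chat" if "chat" in lower else "standard",
        optimization=optimization,
        quantization=quant.group(0) if quant else None,
    )


print(parse_model_name("llama-2-7b-chat-ggmlv3.q4_K_S.bin"))
```

For that file name, the sketch reports a 7b chat model in GGML format with q4_K_S quantization.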

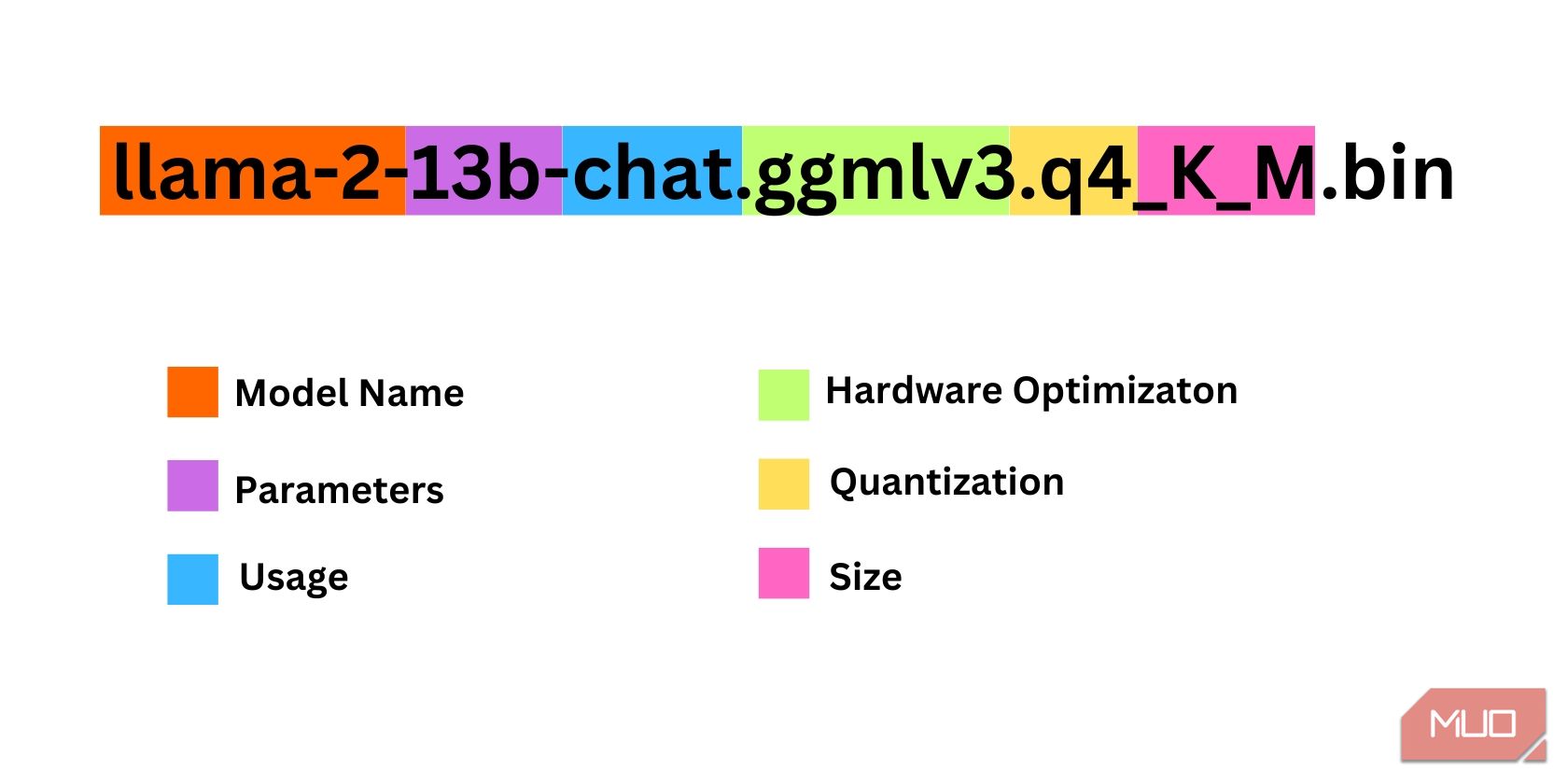

If you’re running on a dedicated GPU, choose a GPTQ model; if you’re using a CPU, choose GGML. If you want to chat with the model like you would with ChatGPT, choose chat, but if you want to experiment with the model’s full capabilities, use the standard model. As for parameters, bigger models provide better results at the expense of speed. I would personally recommend starting with a 7B model. As for quantization, use q4, since you’ll only be running inference.

Download: GGML (Free)

Download: GPTQ (Free)
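A rough back-of-the-envelope calculation shows why parameter count and quantization matter so much on a regular PC. The sketch below estimates the size of the weights alone as parameters × bits per weight ÷ 8. It is an approximation, not a figure from the model pages: real files are somewhat larger because quantized formats store extra metadata, and running the model needs additional memory on top of the weights. The function name is hypothetical.

```python
def approx_weight_size_gb(parameters_billion: float, bits_per_weight: float) -> float:
    """Rough size of the weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = parameters_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Compare a few combinations of model size and precision.
for params, bits in [(7, 4), (13, 4), (7, 16)]:
    size = approx_weight_size_gb(params, bits)
    print(f"{params}B at {bits}-bit: about {size:.1f} GB")
```

By this estimate, a 7B model at 4-bit precision needs roughly a quarter of the space of the same model at 16-bit precision, which is why q4 models are practical on laptops.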

Now that you know what iteration of Llama 2 you need, go ahead and download the model you want.

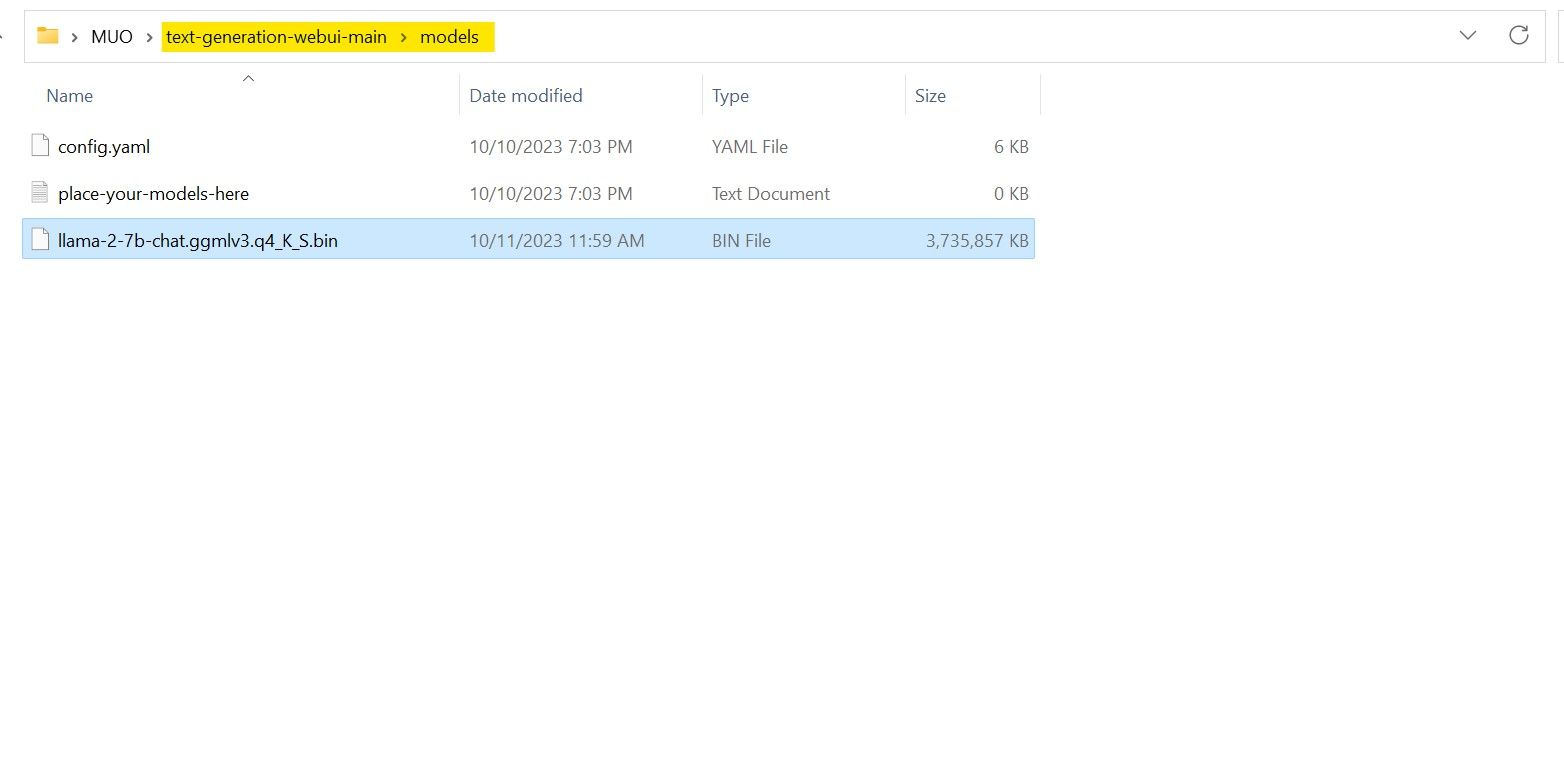

In my case, since I’m running this on an ultrabook, I’ll be using a GGML model fine-tuned for chat, llama-2-7b-chat-ggmlv3.q4_K_S.bin.

After the download is finished, place the model in text-generation-webui-main > models.
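If you prefer to script this step, the move can be sketched with Python’s standard library. The folder name text-generation-webui-main and its models subfolder come from the step above; the `place_model` helper and the example paths in the comment are hypothetical, so adjust them to wherever you extracted the installer and saved the download.

```python
import shutil
from pathlib import Path


def place_model(downloaded_file: Path, webui_dir: Path) -> Path:
    """Move a downloaded model file into <webui_dir>/models and return its new path."""
    models_dir = webui_dir / "models"
    if not models_dir.is_dir():
        raise FileNotFoundError(f"models folder not found: {models_dir}")
    destination = models_dir / downloaded_file.name
    shutil.move(str(downloaded_file), str(destination))
    return destination


# Example call (hypothetical paths):
# place_model(
#     Path.home() / "Downloads" / "llama-2-7b-chat-ggmlv3.q4_K_S.bin",
#     Path.home() / "text-generation-webui-main",
# )
```

The check for the models folder is there to catch a wrong path early, before a multi-gigabyte file gets moved somewhere unexpected.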

Now that you have your model downloaded and placed in the model folder, it’s time to configure the model loader.

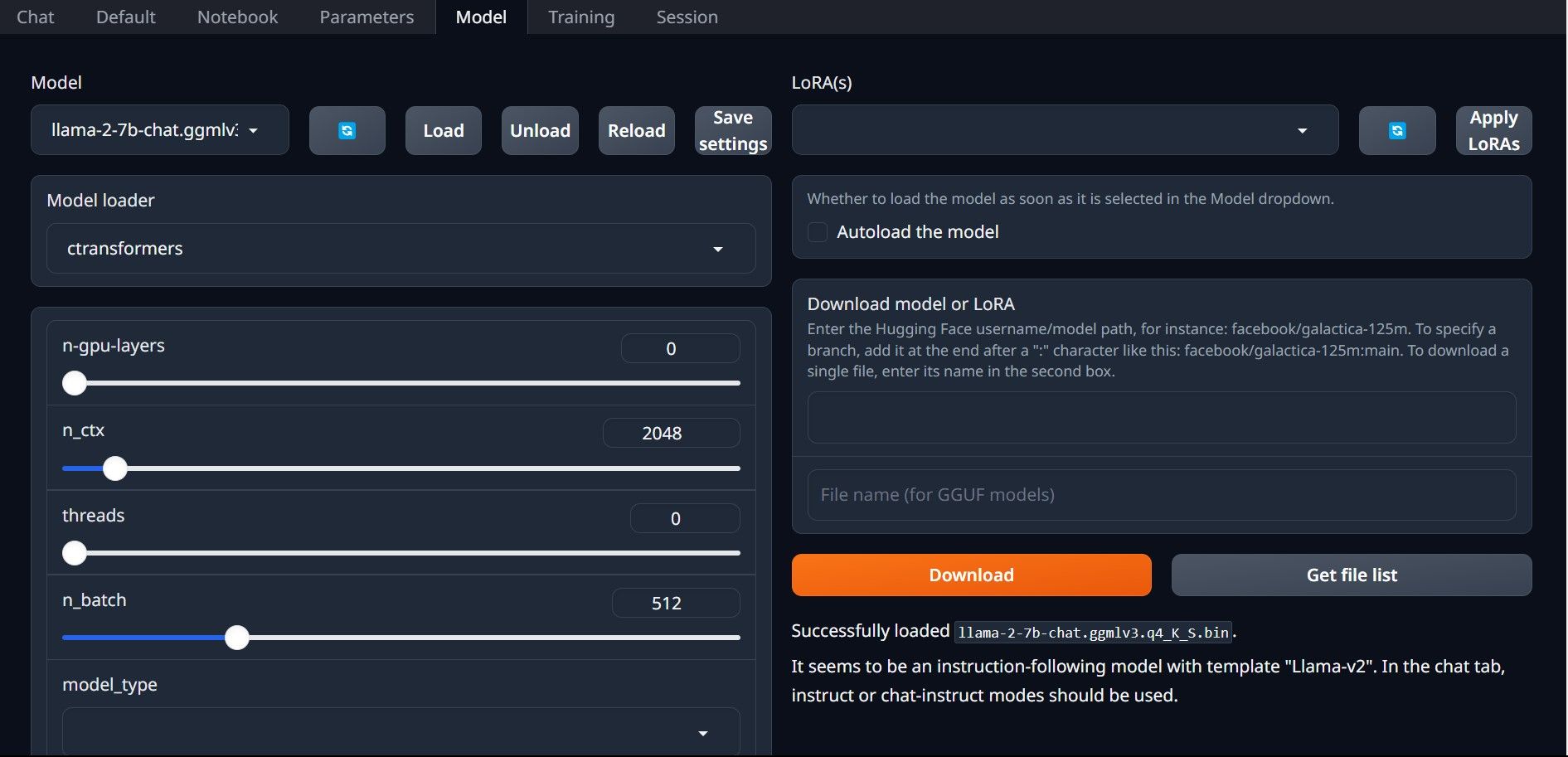

Step 4: Configure Text-Generation-WebUI

Now, let’s begin the configuration phase.

- Once again, open Text-Generation-WebUI by running the start_(your OS) file (see the previous steps above).

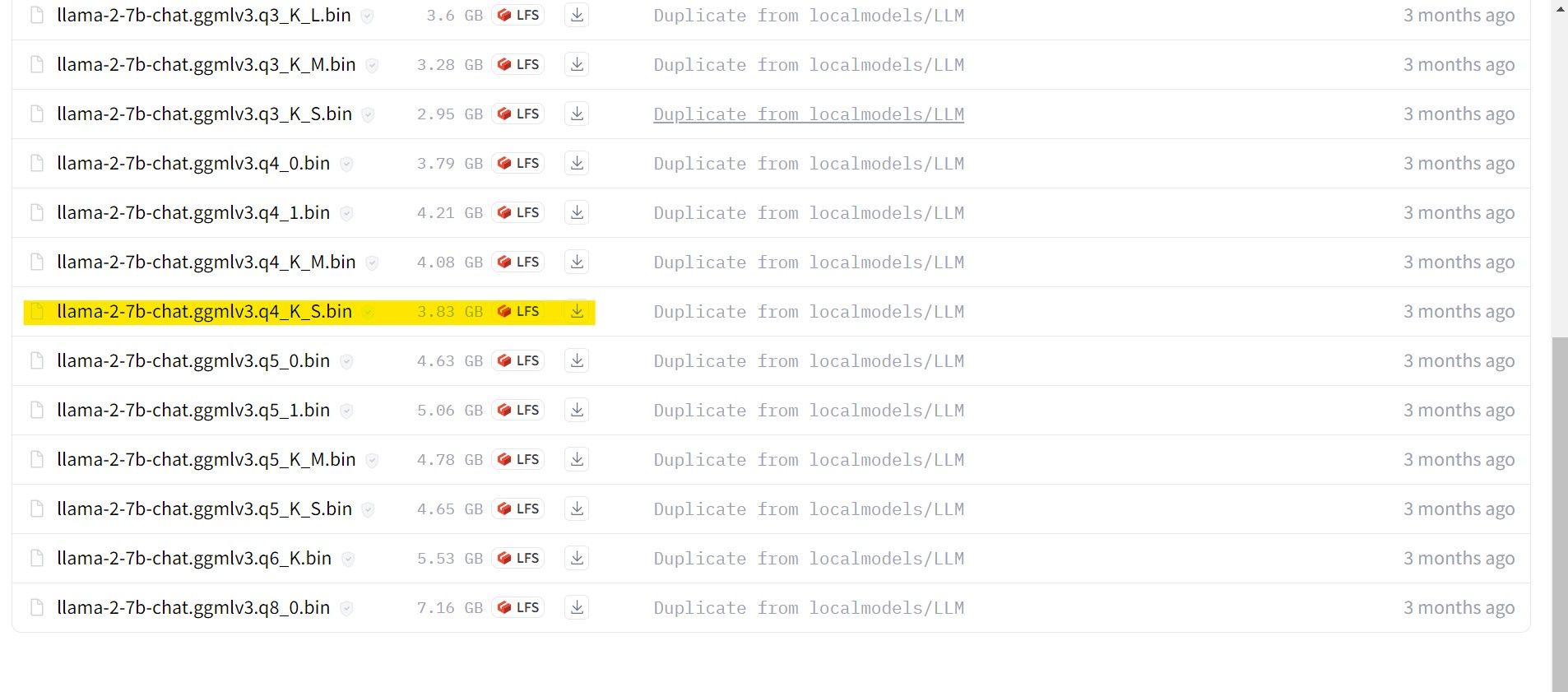

- On the tabs located above the GUI, click Model. Click the refresh button at the model dropdown menu and select your model.

- Now open the Model loader dropdown menu and select AutoGPTQ if you’re using a GPTQ model or ctransformers if you’re using a GGML model. Finally, click on Load to load your model.

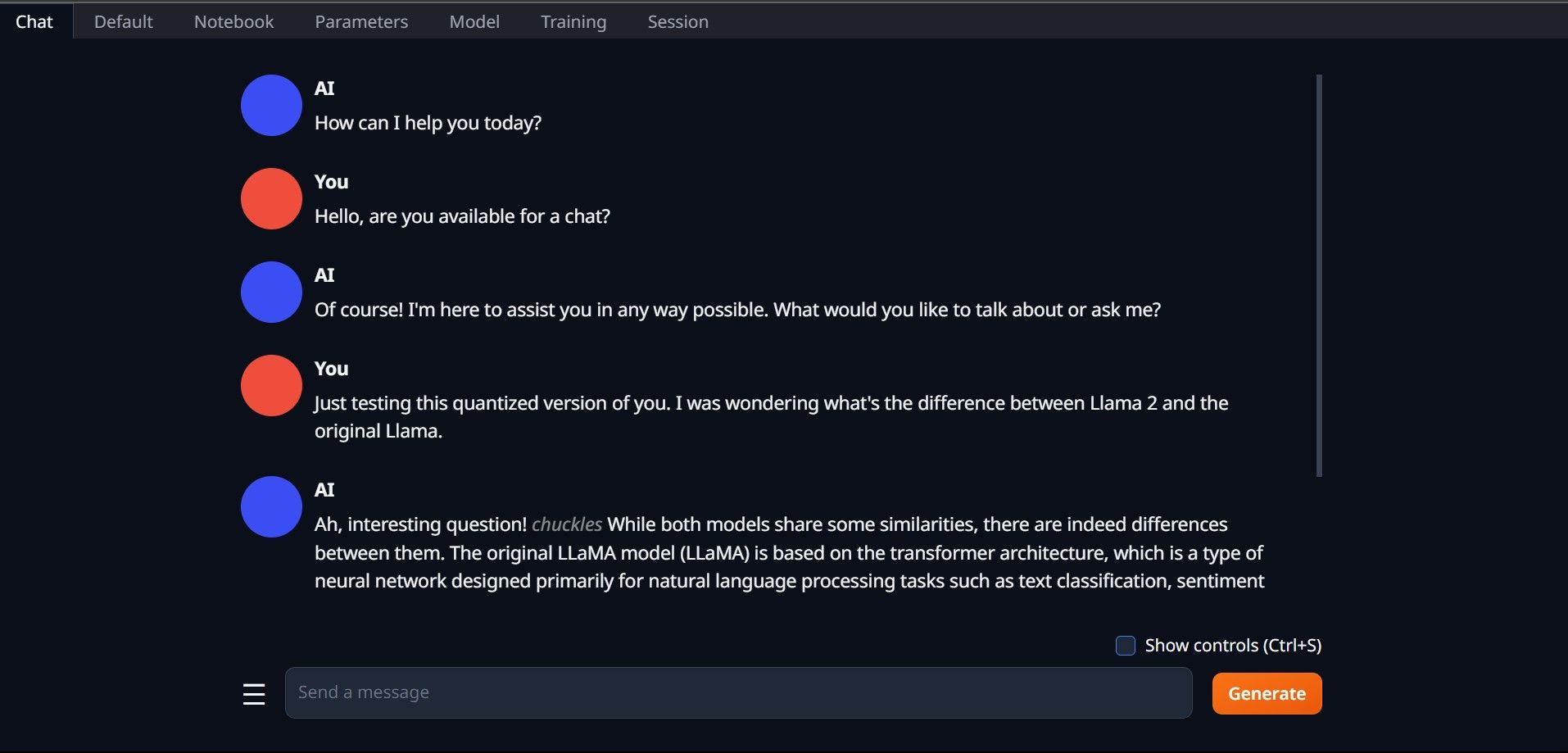

- To use the model, open the Chat tab and start testing the model.
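The loader choice in the steps above boils down to a simple lookup on the model format. As a minimal sketch, assuming the format tag (GPTQ or GGML) appears somewhere in the model’s file name, it could be written like this; `loader_for` is a hypothetical helper, not part of Text-Generation-WebUI.

```python
# Mapping taken from the configuration steps above: format tag -> model loader.
LOADERS = {
    "gptq": "AutoGPTQ",
    "ggml": "ctransformers",
}


def loader_for(model_name: str) -> str:
    """Return the loader to pick in the Model loader dropdown for this model."""
    lower = model_name.lower()
    for format_tag, loader in LOADERS.items():
        if format_tag in lower:
            return loader
    raise ValueError(f"Cannot tell the model format from name: {model_name!r}")


print(loader_for("llama-2-7b-chat-ggmlv3.q4_K_S.bin"))
```

If the name contains neither tag, the sketch raises an error rather than guessing, since loading a model with the wrong loader will fail anyway.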

Congratulations, you’ve successfully loaded Llama 2 on your local computer!

Try Out Other LLMs

Now that you know how to run Llama 2 directly on your computer using Text-Generation-WebUI, you should also be able to run other LLMs besides Llama. Just remember the naming conventions of models and that only quantized versions of models (usually q4 precision) can be loaded on regular PCs. Many quantized LLMs are available on HuggingFace. If you want to explore other models, search for TheBloke in HuggingFace’s model library, and you should find many models available.

- Title: In-Depth Steps to Running Llama 2 Locally on Your Device

- Author: Brian

- Created at : 2024-12-01 16:27:57

- Updated at : 2024-12-06 18:22:51

- Link: https://tech-savvy.techidaily.com/in-depth-steps-to-running-llama-2-locally-on-your-device/

- License: This work is licensed under CC BY-NC-SA 4.0.