Inside ChatGPT’s Woes: Discovering the 8 Crucial Limitations

ChatGPT is a powerful AI chatbot that is quick to impress, yet plenty of people have pointed out that it has some serious pitfalls.

From security breaches to incorrect answers to the undisclosed data it was trained on, there are plenty of concerns about the AI-powered chatbot. Yet, the technology is already being incorporated into apps and used by millions, from students to company employees.

With no sign of AI development slowing down, the problems with ChatGPT are even more important to understand. With ChatGPT set to change our future, here are some of the biggest issues.

What Is ChatGPT?

ChatGPT is a large language model designed to produce natural human language. Much like conversing with someone, you can talk to ChatGPT, and it will remember things you have said in the past while also being capable of correcting itself when challenged.

It was trained on all sorts of text from the internet, such as Wikipedia, blog posts, books, and academic articles. Alongside responding to you in a human-like way, it can recall information about our present-day world and pull up historical information from our past.

Learning how to use ChatGPT is simple, and it’s equally easy to be fooled into thinking that the AI system performs without any trouble. However, since its release, key problems have emerged around privacy, security, and its wider impact on people’s lives, from jobs to education.

1. Security Threats and Privacy Concerns

There are plenty of things that you must not share with AI chatbots, and for good reason. Sharing your financial details or confidential workplace information comes with a risk. OpenAI retains your chat history on its servers and may share this data with a select number of third-party groups.

In addition, entrusting your data to OpenAI has already proved to be a problem. In March 2023, a security breach meant some ChatGPT users saw conversation headings in the sidebar that didn’t belong to them. Accidentally sharing users’ chat histories is a serious concern for any tech company, but it’s especially bad considering how many people use the popular chatbot.

As reported by Reuters, ChatGPT had 100 million monthly active users in January 2023. While the bug that caused the breach was quickly patched, Italy’s data protection regulator banned ChatGPT and demanded that OpenAI stop processing Italian users’ data.

The watchdog organization suspected that European privacy regulations were being breached. After investigating the issue, it requested that OpenAI meet several demands to reinstate the chatbot.

OpenAI eventually resolved the issue with regulators by making several significant changes. For a start, an age restriction was added, limiting the use of the app to people 18+ or 13+ with guardian permission. It also made its Privacy Policy more visible and provided an opt-out Google form for users to exclude their data from its training or delete ChatGPT history entirely.

These changes are a great start, but the improvements should be extended to all ChatGPT users.

You might not think that you would share your personal details so easily, but we’re all susceptible to a slip of the tongue, and a good example of this is how a Samsung employee shared company information with ChatGPT.

2. Concerns Over ChatGPT Training and Privacy Issues

Following the massively popular launch of ChatGPT, critics have questioned how OpenAI trained its model in the first place.

Even the changes OpenAI made to its privacy policies following the data breach may not be enough to satisfy the General Data Protection Regulation (GDPR), the data protection law that covers Europe. As TechCrunch reports:

“It is not clear whether Italians’ personal data that was used to train its GPT model historically, i.e. when it scraped public data off the Internet, was processed with a valid lawful basis — or, indeed, whether data used to train models previously will or can be deleted if users request their data deleted now.”

OpenAI likely scooped up personal information when it first trained ChatGPT. While the laws in the United States are less definitive, European data laws protect personal data, whether people post that information publicly or privately.

Similar arguments against ChatGPT training data are voiced by artists who say they never consented to their work being used to train an AI model. At the same time, Getty Images has sued Stability AI for using copyrighted images to train its AI models.

Unless OpenAI publishes its training data, the lack of transparency makes it difficult to know whether it was done lawfully. We don’t know the details about how ChatGPT is trained, what data was used, where the data comes from, or what the system’s architecture looks like in detail.

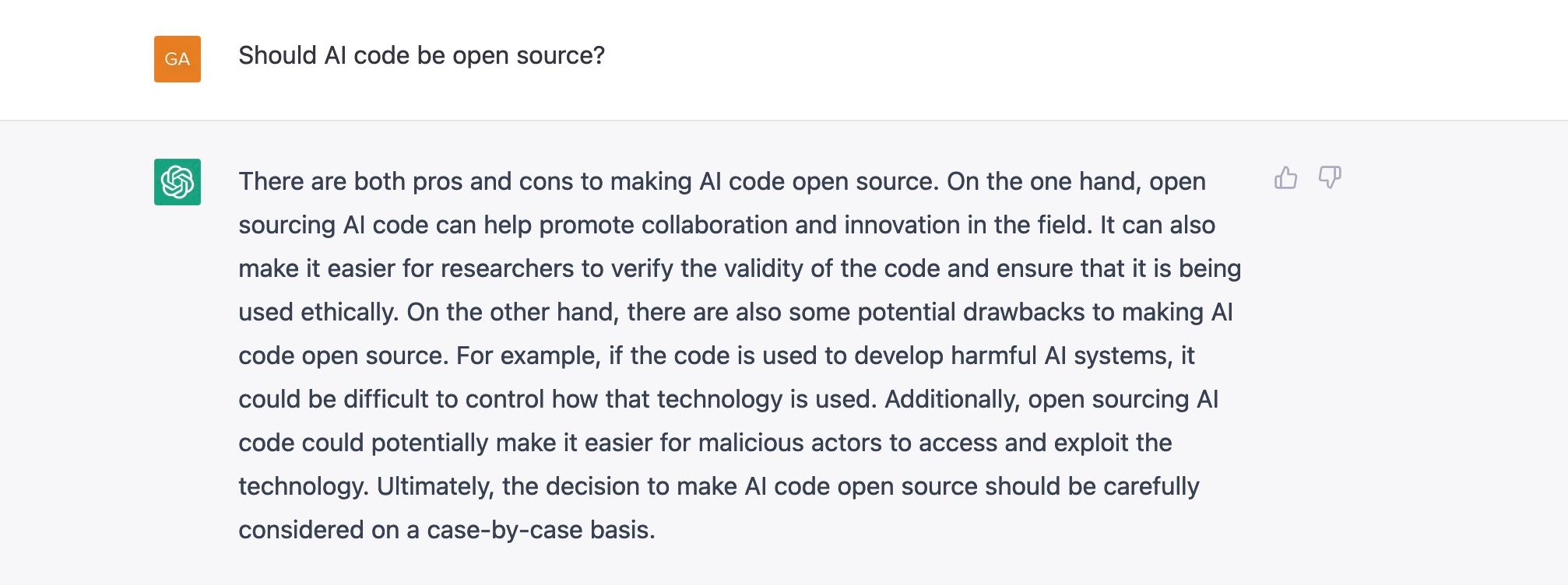

3. ChatGPT Generates Wrong Answers

ChatGPT fails at basic math, can’t seem to answer simple logic questions, and will even go as far as to argue completely incorrect facts. As people across social media will attest, ChatGPT can get things wrong again and again.

OpenAI knows about this limitation, writing that: “ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers.” This “hallucination” of fact and fiction, as it’s been referred to, is especially dangerous regarding things like medical advice or getting the facts right on key historical events.

ChatGPT initially didn’t use the internet to locate answers, unlike other AI assistants like Siri or Alexa you may be familiar with. Instead, it constructed an answer word by word, selecting the most likely “token” that should come next based on its training. In other words, ChatGPT arrives at an answer by making a series of probable guesses, which is part of why it can argue wrong answers as if they were completely true.
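
To make the word-by-word idea concrete, here is a minimal sketch of greedy next-token selection. It is a toy, not how ChatGPT is actually built: the `NEXT_TOKEN_PROBS` table, its tokens, and every probability in it are invented for illustration, and it looks only at the previous token, whereas a real model considers the whole conversation and usually samples rather than always taking the top choice.

```python
# Toy next-token table. Every token and probability here is invented
# for illustration; a real model learns these from its training data.
NEXT_TOKEN_PROBS = {
    "<start>": {"The": 0.6, "A": 0.4},
    "The": {"capital": 0.7, "city": 0.3},
    "capital": {"of": 0.9, "is": 0.1},
    "of": {"Australia": 1.0},
    "Australia": {"is": 1.0},
    # The wrong answer is slightly more "likely" in this made-up table.
    "is": {"Sydney": 0.55, "Canberra": 0.45},
    "Sydney": {"<end>": 1.0},
    "Canberra": {"<end>": 1.0},
}


def generate(table, max_tokens=10):
    """Build a sentence by always picking the most probable next token."""
    tokens = []
    current = "<start>"
    for _ in range(max_tokens):
        candidates = table.get(current)
        if not candidates:
            break  # no known continuation for this token
        # Greedy choice: take the single most likely next token.
        current = max(candidates, key=candidates.get)
        if current == "<end>":
            break
        tokens.append(current)
    return " ".join(tokens)


print(generate(NEXT_TOKEN_PROBS))  # prints: The capital of Australia is Sydney
```

The output is fluent and confident, yet wrong (the capital is Canberra). Nothing in the loop checks facts; it only follows probabilities, which is one way to picture why a language model can state an error as if it were true.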

In March 2023, ChatGPT was hooked up to the internet but quickly disconnected again. OpenAI didn’t reveal much, saying only that “ChatGPT Browse beta can occasionally display content in ways we don’t want.”

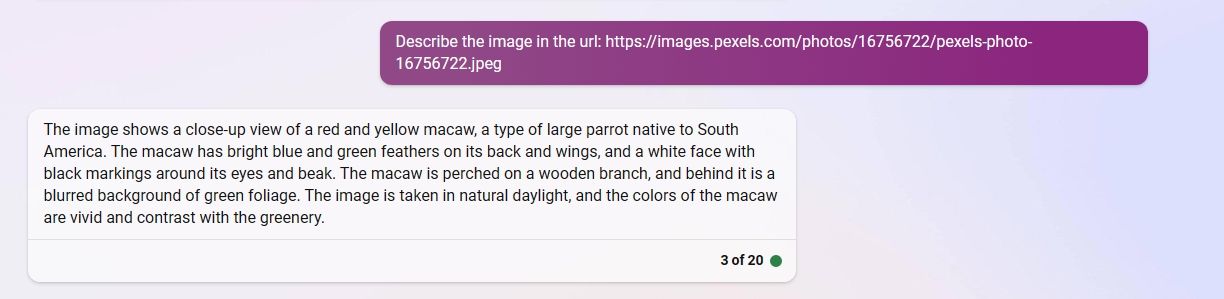

The Search with Bing feature piggybacked off Microsoft’s Bing-AI tool, which has equally proved that it’s not quite ready to answer your questions correctly. When asked to describe the picture in a URL, it should admit it can’t complete the request. Instead, Bing described in great detail a red and yellow macaw—the URL, in fact, showed an image of a man sitting.

You can see more hilarious hallucinations in our comparison between ChatGPT vs. Microsoft Bing AI vs. Google Bard. It’s not hard to imagine people using ChatGPT to get quick facts and information, expecting those results to be true. But so far, ChatGPT can’t get it right, and teaming up with an equally inaccurate Bing search engine only made things worse.

4. ChatGPT Has Bias Baked Into Its System

ChatGPT was trained on the collective writing of humans across the world, past and present. Unfortunately, this means that the same biases in the real world can also appear in the model.

ChatGPT has been shown to produce some terrible answers that discriminate on the basis of gender and race and against minority groups, a problem the company is trying to mitigate.

One way to explain this issue is to point to the data as the problem, blaming humanity for the biases embedded in the internet and beyond. But part of the responsibility also lies with OpenAI, whose researchers and developers select the data used to train ChatGPT.

Once again, OpenAI knows this is an issue and has said it’s addressing “biased behavior” by collecting feedback from users and encouraging them to flag ChatGPT outputs that are bad, offensive, or simply incorrect.

With the potential to cause harm to people, you could argue that ChatGPT shouldn’t have been released to the public before these problems were studied and resolved. But a race to be the first company to create the most powerful AI model might have been enough for OpenAI to throw caution to the wind.

By contrast, a similar AI chatbot called Sparrow, owned by Google’s parent company, Alphabet, was unveiled in September 2022 but purposely kept behind closed doors because of similar safety concerns. Around the same time, Facebook’s parent company, Meta, released an AI language model called Galactica, intended to help with academic research. However, it was rapidly withdrawn after many people criticized it for outputting wrong and biased results related to scientific research.

5. ChatGPT Might Take Jobs From Humans

The dust has yet to settle after the rapid development and deployment of ChatGPT, but that hasn’t stopped the underlying technology from being stitched into several commercial apps. Among the apps that have integrated GPT-4 are Duolingo and Khan Academy.

The former is a language learning app, while the latter is a diverse educational learning tool. Both offer what is essentially an AI tutor: Duolingo in the form of an AI-powered character that you can talk to in the language you are learning, and Khan Academy as a tutor that can give you tailored feedback on your learning.

This could be just the beginning of AI taking over human jobs. The types of jobs most at risk from AI include graphic design, writing, and accounting. When it was announced that a later version of ChatGPT passed the bar exam, the final hurdle for a person to become a lawyer, it became even more plausible that AI could change the workforce in the near future.

As reported by The Guardian, education companies posted huge losses on the London and New York stock exchanges, highlighting the disruption AI is causing to some markets as little as six months after ChatGPT was launched.

Technological advancements have always resulted in jobs being lost, but the speed of AI advancements means multiple industries are facing rapid change. A huge cross-section of human jobs is seeing AI filter into the workplace. In some jobs, menial tasks may be completed with the help of AI tools, while other positions may cease to exist in the future.

6. ChatGPT Is Challenging Education

You can ask ChatGPT to proofread your writing or point out how to improve a paragraph. Or you can remove yourself from the equation entirely and ask ChatGPT to do all the writing for you.

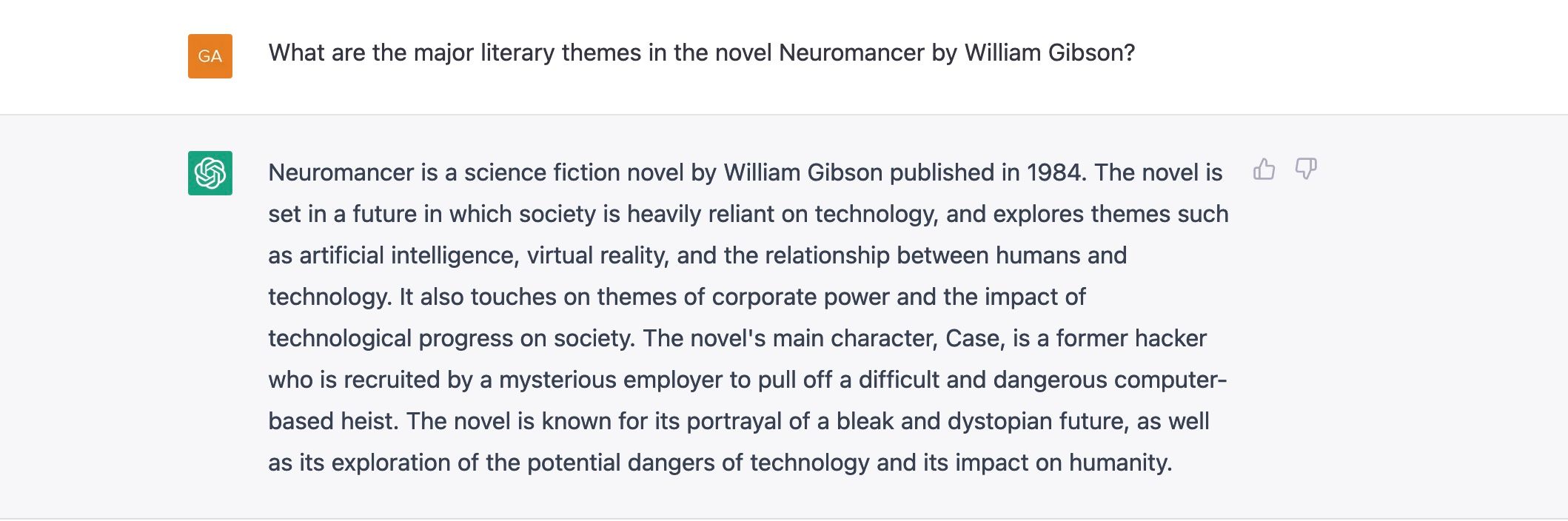

Teachers have experimented with feeding English assignments to ChatGPT and have received answers that are better than what many of their students could do. From writing cover letters to describing major themes in a famous work of literature, ChatGPT can do it all without hesitation.

That raises the question: if ChatGPT can write for us, will students need to learn this skill in the future? It might seem like an existential question, but since students have started using ChatGPT to help write their essays, educators will soon have to face reality.

Unsurprisingly, students are already experimenting with AI. The Stanford Daily reports that early surveys show a significant number of students have used AI to assist with assignments and exams.

In the short term, schools and universities are updating their policies and ruling whether students can or cannot use AI to help them with an assignment. It’s not only English-based subjects that are at risk either; ChatGPT can help with any task involving brainstorming, summarizing, or drawing analytical conclusions.

7. ChatGPT Can Cause Real-World Harm

It wasn’t long before someone tried to jailbreak ChatGPT, resulting in an AI model that could bypass OpenAI’s guard rails meant to prevent it from generating offensive and dangerous text.

A group of users on the ChatGPT Reddit group named their unrestricted AI model DAN, short for “Do Anything Now.” Sadly, doing whatever you like has led to hackers ramping up online scams. Hackers have also been seen selling rule-less ChatGPT services that create malware and produce phishing emails, with mixed results on the AI-created malware.

Trying to spot a phishing email designed to extract sensitive details from you is far more difficult now with AI-generated text. Grammatical errors, which used to be an obvious red flag, are limited with ChatGPT, which can fluently write all kinds of text, from essays to poems and, of course, dodgy emails.

The rate at which ChatGPT can produce information has already caused problems for Stack Exchange, a website dedicated to providing correct answers to everyday questions. Soon after ChatGPT was released, users flooded the site with answers they asked ChatGPT to generate.

Without enough human volunteers to sort through the backlog, it would be impossible to maintain a high level of quality answers. Not to mention, many of the answers were incorrect. To avoid the website being damaged, a ban was placed on all answers generated using ChatGPT.

The spread of fake information is a serious concern, too. The scale at which ChatGPT can produce text, coupled with the ability to make even incorrect information sound convincingly right, makes everything on the internet questionable. It’s a critical combination that amplifies the dangers of deepfake technology.

8. OpenAI Holds All the Power

With great power comes great responsibility, and OpenAI holds a fair share of it. It’s one of the first AI companies to truly shake up the world with not one but multiple generative AI models, including DALL-E 2, GPT-3, and GPT-4.

As a private company, OpenAI selects the data used to train ChatGPT and chooses how fast it rolls out new developments. Despite experts warning of the dangers posed by AI, OpenAI isn’t showing signs of slowing down.

On the contrary, the popularity of ChatGPT has spurred a race between big tech companies competing to launch the next big AI model; among them are Microsoft’s Bing AI and Google’s Bard. Fearing that rapid development will lead to serious safety problems, tech leaders worldwide penned a letter asking for development to be delayed.

While OpenAI considers safety a high priority, there is a lot that we don’t know about how the models themselves work, for better or worse. At the end of the day, the only choice we have is to unquestioningly trust that OpenAI will research, develop, and use ChatGPT responsibly.

Whether we agree with its methods or not, it’s worth remembering that OpenAI is a private company that will continue developing ChatGPT according to its own goals and ethical standards.

Tackling AI’s Biggest Problems

There is a lot to be excited about with ChatGPT, but beyond its immediate uses, there are some serious problems.

OpenAI admits that ChatGPT can produce harmful and biased answers, hoping to mitigate the problem by gathering user feedback. But its ability to produce convincing text, even when the facts aren’t true, can easily be used by bad actors.

Privacy and security breaches have already shown that OpenAI’s system can be vulnerable, putting users’ personal data at risk. Adding to the trouble, people are jailbreaking ChatGPT and using the unrestricted version to produce malware and scams on a scale we haven’t seen before.

Threats to jobs and the potential to disrupt education are a few more problems that are piling up. With brand-new technology, it’s difficult to predict what problems will arise in the future, but unfortunately, we don’t have to look very far. ChatGPT has produced its fair share of challenges for us to deal with in the present.

- Title: Inside ChatGPT’s Woes: Discovering the 8 Crucial Limitations

- Author: Brian

- Created at : 2024-10-19 22:40:10

- Updated at : 2024-10-21 03:31:37

- Link: https://tech-savvy.techidaily.com/inside-chatgpts-woes-discovering-the-8-crucial-limitations/

- License: This work is licensed under CC BY-NC-SA 4.0.