Inside Look: GPT4All's Intricate Process

OpenAI’s GPT models have revolutionized natural language processing (NLP), but unless you pay for premium access to OpenAI’s services, you won’t be able to fine-tune and integrate their GPT models into your applications. Furthermore, OpenAI will have access to all your conversations, which may be a security issue if you use ChatGPT for business and other more sensitive areas of your life. If you’re not keen on this, you may want to try out GPT4All.

So what exactly is GPT4All? How does it work, and why use it over ChatGPT?

What Is GPT4All?

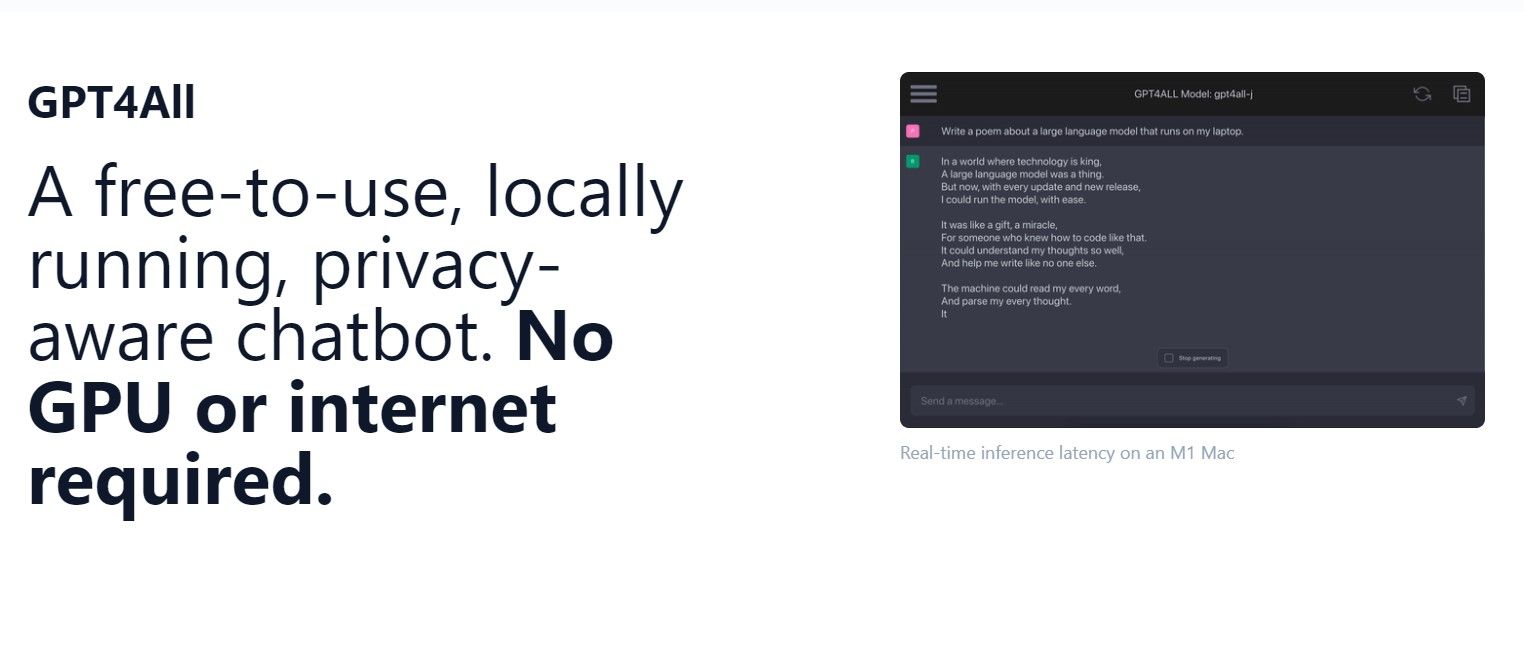

GPT4All is an open-source ecosystem used for integrating LLMs into applications without paying for a platform or hardware subscription. It was created by Nomic AI, an information cartography company that aims to improve access to AI resources.

GPT4All is designed to run on reasonably modern PCs without needing an internet connection or even a GPU! This is possible because most of the models provided by GPT4All have been quantized down to just a few gigabytes, requiring only 4–16GB of RAM to run.

This allows smaller businesses, organizations, and independent researchers to use and integrate an LLM for specific applications. And with GPT4All easily installable through a one-click installer, people can now use GPT4All and many of its LLMs for content creation, writing code, understanding documents, and information gathering.

Why Use GPT4All Over ChatGPT?

There are several reasons why you might want to use GPT4All over ChatGPT.

- Portability: Models provided by GPT4All only require four to eight gigabytes of storage, do not require a GPU to run, and can easily be saved on a USB flash drive along with the GPT4All one-click installer. This makes GPT4All and its models truly portable and usable on just about any modern computer.

- Privacy and Security: As explained earlier, unless you have access to ChatGPT Plus, all your ChatGPT conversations are accessible by OpenAI. GPT4All is focused on data transparency and privacy; your data will only be saved on your local hardware unless you intentionally share it with GPT4All to help grow their models.

- Offline Mode: OpenAI's GPT models are proprietary, so querying them requires API access and a constant internet connection. If you lose your internet connection or OpenAI has a server problem, you won’t have access to ChatGPT. This is not the case with GPT4All. Since all the data is already stored in a four- to eight-gigabyte package, and inference is done locally, you do not need an internet connection to access any models in GPT4All. You can continue chatting and fine-tuning your model even without an internet connection.

- Free and Open Source: Several LLMs provided by GPT4All are licensed under GPL-2. This allows anyone to fine-tune and integrate their own models for commercial use without needing to pay for licensing.

How GPT4All Works

As discussed earlier, GPT4All is an ecosystem used to train and deploy LLMs locally on your computer, which is an incredible feat! Typically, loading a standard 25–30GB LLM would take 32GB of RAM and an enterprise-grade GPU.

By comparison, the LLMs you can use with GPT4All only require 3–8GB of storage and can run on 4–16GB of RAM. This makes it possible to run an entire LLM on an edge device without needing a GPU or external cloud assistance.
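As a rough back-of-the-envelope check on these figures, the sketch below estimates how much space a model's weights take from its parameter count and the number of bits stored per weight. The function name and the bit widths are illustrative assumptions: 16 bits stands in for an unquantized model, and 4.5 bits approximates a 4-bit block format that also stores a small per-block scale. Real model files carry extra metadata, so actual sizes will differ somewhat.

```python
def estimate_weight_size_gb(num_parameters: float, bits_per_weight: float) -> float:
    """Rough size of the weights alone, in gigabytes (10**9 bytes)."""
    total_bytes = num_parameters * bits_per_weight / 8
    return total_bytes / 1e9


# Compare an unquantized (16-bit) model with a roughly 4.5-bit quantized one.
for label, params in [("7 billion parameters", 7e9), ("13 billion parameters", 13e9)]:
    full = estimate_weight_size_gb(params, 16)
    quantized = estimate_weight_size_gb(params, 4.5)
    print(f"{label}: ~{full:.1f} GB at 16-bit, ~{quantized:.1f} GB quantized")
```

Under these assumptions, a 13-billion-parameter model drops from the mid-20GB range to well under 10GB, which is in line with the sizes quoted for GPT4All's models.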

The hardware requirements to run LLMs on GPT4All have been significantly reduced thanks to neural network quantization. By reducing the precision of the weights and activations in a neural network, many of the models provided by GPT4All can be run on most relatively modern computers.
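To make the idea of quantization more concrete, here is a minimal sketch in plain Python. It is not the exact scheme GPT4All's model files use; it simply assumes a block of weights shares one scale factor and each weight is rounded to a signed 4-bit integer. The function names and sample weights are made up for illustration.

```python
from typing import List, Tuple


def quantize_block(weights: List[float], bits: int = 4) -> Tuple[float, List[int]]:
    """Map floats to small signed integers that share one scale factor."""
    max_level = 2 ** (bits - 1) - 1  # 7 for signed 4-bit values
    largest = max(abs(w) for w in weights)
    scale = largest / max_level if largest > 0 else 1.0
    # Round each weight to the nearest level and clamp to the allowed range.
    codes = [max(-max_level, min(max_level, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_block(scale: float, codes: List[int]) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [scale * c for c in codes]


weights = [0.12, -0.53, 0.99, -0.07, 0.31, -0.88, 0.44, 0.02]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("integer codes:", codes)
print(f"largest rounding error: {max_error:.3f}")
```

Each weight now needs only a few bits plus a share of the block's scale, at the cost of a small rounding error. That trade-off is what lets a large model fit in ordinary RAM.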

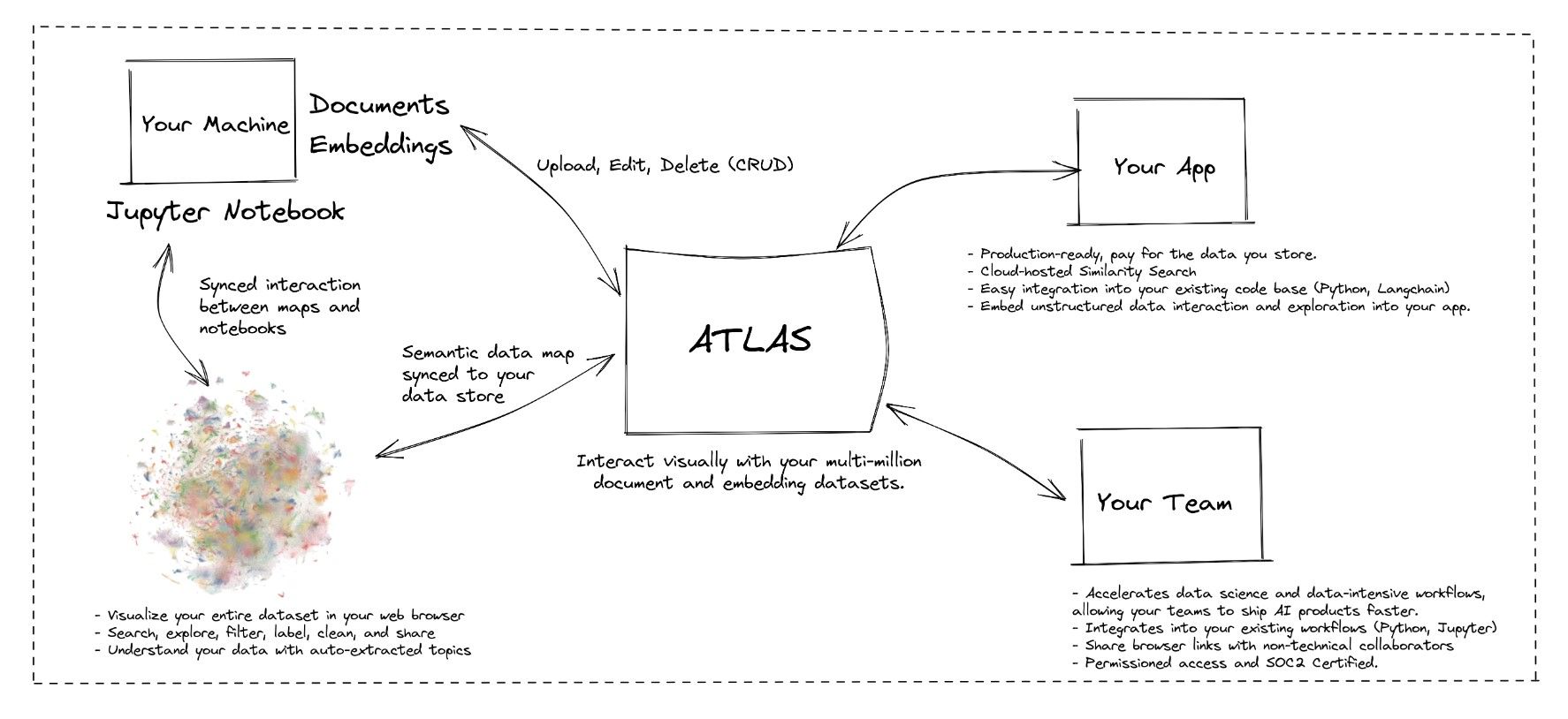

The training data used in some of the available models was collected through “The Pile,” which is data scraped from publicly released content on the internet. The data is then sent to Nomic AI’s Atlas AI database, where it can be explored as an easy-to-read 2D vector map that groups related data together (also known as an AI vector database).

Because Nomic AI trained the Groovy model itself using publicly available data, it was able to release the model under an open GPL license, which allows anyone to use it, even for commercial applications.

How to Install GPT4All

Installing GPT4All is simple, and now that GPT4All version 2 has been released, it is even easier! The best way to install GPT4All 2 is to download the one-click installer:

Download: GPT4All for Windows, macOS, or Linux (Free)

The following instructions are for Windows, but you can install GPT4All on each major operating system.

Once downloaded, double-click on the installer and select Install. Windows Defender may flag the installation as malicious because Microsoft's process for issuing valid signatures to third-party applications can take a long time. However, this should be fixed soon. As of writing, as long as you downloaded the GPT4All application from the official website, you should be safe. Click on Install Anyway to install GPT4All.

Once you open the application, you will need to select a model to use. GPT4All provides you with several models, each with its own strengths and weaknesses. To help you decide which model to download, here is a table summarizing them.

| Model | Size | Note | Parameters | Type | Quantization |
|---|---|---|---|---|---|
| Hermes | 7.58 GB | Instruction based; gives long responses; curated with 300,000 uncensored instructions; cannot be used commercially | 13 Billion | LLaMA | q4_0 |
| GPT4All Falcon | 3.78 GB | Fast responses; instruction based; licensed for commercial use | 7 Billion | Falcon | q4_0 |
| Groovy | 8 GB | Fast responses; creative responses; instruction based; licensed for commercial use | 7 Billion | GPT-J | q4_0 |
| ChatGPT-3.5 Turbo | Minimal | Requires personal API key; will send your chats to OpenAI; GPT4All is only used to communicate with OpenAI | ? | GPT | NA |
| ChatGPT-4 | Minimal | Requires personal API key; will send your chats to OpenAI; GPT4All is only used to communicate with OpenAI | ? | GPT | NA |
| Snoozy | 7.58 GB | Instruction based; slower than Groovy but with higher quality responses; cannot be used commercially | 13 Billion | LLaMA | q4_0 |
| MPT Chat | 4.52 GB | Fast responses; chat based; cannot be used commercially | 7 Billion | MPT | q4_0 |
| Orca | 3.53 GB | Instruction based; explain-tuned datasets; Orca Research Paper dataset construction approaches; licensed for commercial use | 7 Billion | OpenLLaMA | q4_0 |
| Vicuna | 3.92 GB | Instruction based; cannot be used commercially | 7 Billion | LLaMA | q4_2 |
| Wizard | 3.92 GB | Instruction based; cannot be used commercially | 7 Billion | LLaMA | q4_2 |
| Wizard Uncensored | 7.58 GB | Instruction based; cannot be used commercially | 13 Billion | LLaMA | q4_0 |

Keep in mind that the models provided have different levels of restrictions. Not all models can be used commercially for free; some will need more hardware resources, while others will need an API key. The least restrictive models available in GPT4All are Groovy, GPT4All Falcon, and Orca.
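If you want to narrow the list down programmatically, the following sketch filters the locally run models by download size and commercial licensing. The sizes and license flags are copied from the table above; the `ModelInfo` class and the `shortlist` function are hypothetical helpers written for this example and are not part of GPT4All.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelInfo:
    name: str
    size_gb: float
    commercial_use: bool


# Local models only; values taken from the comparison table.
MODELS = [
    ModelInfo("Hermes", 7.58, False),
    ModelInfo("GPT4All Falcon", 3.78, True),
    ModelInfo("Groovy", 8.0, True),
    ModelInfo("Snoozy", 7.58, False),
    ModelInfo("MPT Chat", 4.52, False),
    ModelInfo("Orca", 3.53, True),
    ModelInfo("Vicuna", 3.92, False),
    ModelInfo("Wizard", 3.92, False),
    ModelInfo("Wizard Uncensored", 7.58, False),
]


def shortlist(models: List[ModelInfo], commercial_only: bool, max_size_gb: float) -> List[str]:
    """Return the names of models that fit the size and licensing constraints."""
    return [
        m.name
        for m in models
        if m.size_gb <= max_size_gb and (m.commercial_use or not commercial_only)
    ]


# Commercially usable models that fit in a 4 GB download.
print(shortlist(MODELS, commercial_only=True, max_size_gb=4.0))
# prints ['GPT4All Falcon', 'Orca']
```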

Can You Train GPT4All Models?

Yes, but not the quantized versions. To effectively fine-tune GPT4All models, you need to download the raw models and use enterprise-grade GPUs such as AMD’s Instinct Accelerators or NVIDIA’s Ampere or Hopper GPUs. Additionally, you will need to train the model with a deep learning framework such as PyTorch, which will require some technical knowledge.

Fine-tuning a GPT4All model requires both money and technical know-how. If you only want to feed a GPT4All model custom data, however, you can extend what it knows through retrieval-augmented generation (which helps a language model access and understand information outside its base training to complete tasks). You can do so by giving the GPT4All model your custom data in the prompt before asking a question. The custom data should be saved locally, and when prompted, the model should be able to return the information you provided.
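The retrieval step can be sketched in a few lines of plain Python. This is a simplified illustration, not GPT4All's own implementation: it assumes your custom data is a short list of text snippets, ranks them by simple word overlap with the question (real systems typically use embeddings), and builds a prompt that you would then pass to a local model. All function names and the sample notes are hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Return the documents that share the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: List[str], top_k: int = 1) -> str:
    """Place the retrieved snippets ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Use only the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


notes = [
    "The office Wi-Fi password is rotated on the first Monday of each month.",
    "Expense reports must be submitted by the 25th.",
    "The staging server is rebuilt every night at 02:00.",
]

prompt = build_prompt("When are expense reports due?", notes)
print(prompt)
```

The model never gets retrained here. It only sees the relevant snippet inside the prompt, which is why this approach works on modest hardware.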

Should You Use GPT4All?

The idea for GPT4All is to provide a free-to-use and open-source platform where people can run large language models on their computers. Currently, GPT4All and its quantized models are great for experimenting, learning, and trying out different LLMs in a secure environment. For professional workloads, we would still recommend using ChatGPT as the model is significantly more capable.

Overall, there isn’t any reason to limit yourself to one. Since their use cases do not overlap, you should try using both.

- Title: Inside Look: GPT4All's Intricate Process

- Author: Brian

- Created at : 2025-01-05 16:49:05

- Updated at : 2025-01-13 01:03:01

- Link: https://tech-savvy.techidaily.com/inside-look-gpt4alls-intricate-process/

- License: This work is licensed under CC BY-NC-SA 4.0.