Key Considerations in Employing ChatGPT for Therapy Support Systems

Tools powered by artificial intelligence (AI) have made many everyday tasks easier. Large language models such as ChatGPT let you access information conversationally, and people increasingly use ChatGPT’s analytical abilities to explore mental health topics. However, using it for mental health comes with caveats.

Mental health conditions should only be diagnosed and treated by certified professionals. However, using AI to improve the management of symptoms has both advantages and disadvantages. While ChatGPT avoids giving medical advice, there are some factors to keep in mind before trusting it for mental health information.

1. ChatGPT Is Not a Replacement for Therapy

ChatGPT is a large language model trained on an enormous body of text, which lets it generate human-like responses that take context into account. Such responses can help you learn about mental health, but they are not a replacement for in-person therapy.

For example, ChatGPT cannot diagnose an illness from your chats. It will offer a general analysis and advise you to consult a medical professional. That analysis could be wrong, so you must always fact-check it. Relying solely on AI for self-diagnosis could be detrimental to your mental well-being and should be avoided.

If in-person therapy is out of reach, telehealth is a better alternative than a chatbot. Telehealth services let you access mental health professionals remotely, often at a lower cost.

2. The Right Prompts Matter

Through specific prompts, you can make better use of ChatGPT’s analytical ability and logical reasoning. It can act as a virtual companion rather than a therapist and provide various insights about mental health.

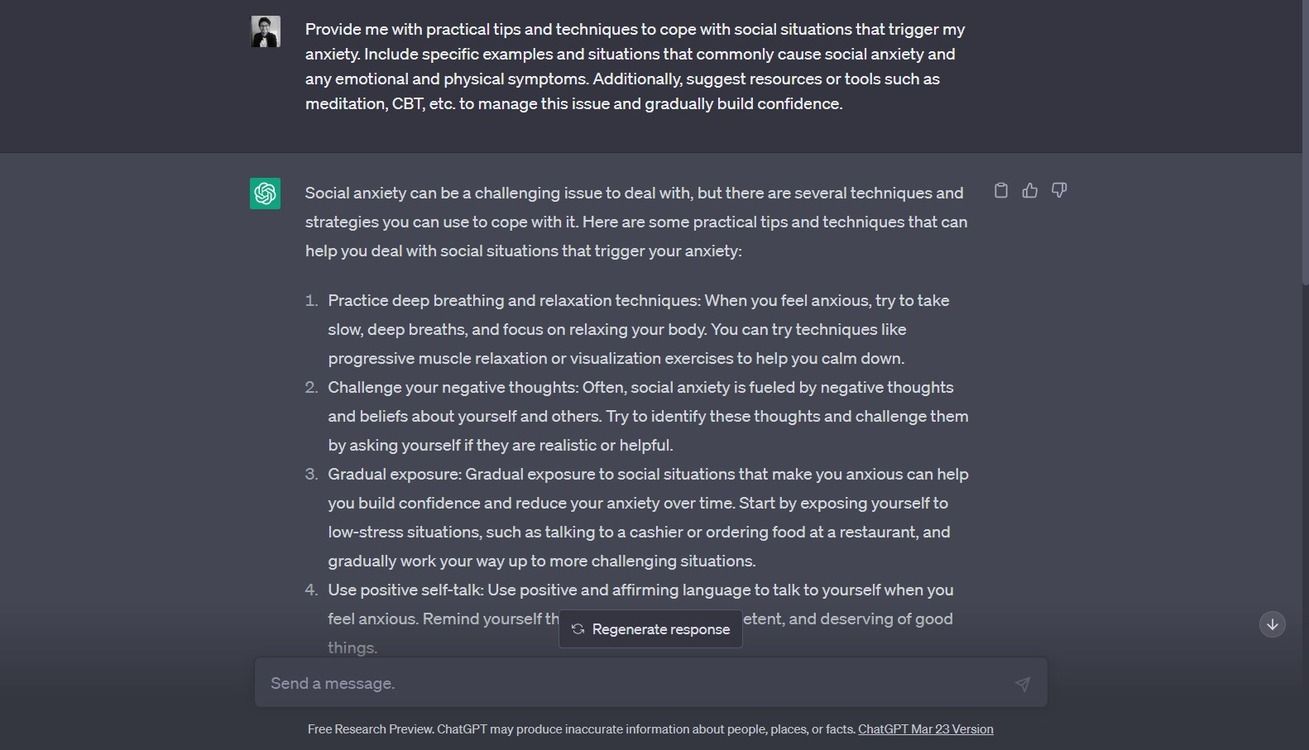

The more specific you are in your prompts, the better the responses. For example, a prompt like “List 10 ways to deal with social anxiety” provides a broad overview. It gives generic strategies rather than actionable advice for social situations.

Instead, a more specific prompt will give you a significantly better response: “Provide me with practical tips and techniques to cope with social situations that trigger my anxiety. Include specific examples and situations that commonly cause social anxiety and any emotional and physical symptoms. Additionally, suggest resources or tools such as meditation, CBT, etc. to manage this issue and gradually build confidence.”

You can create great prompts by integrating your symptoms, some general questions about a condition, and a specific objective. That will help you use ChatGPT in a supportive and informative manner.
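The three ingredients above (symptoms, general questions, and a specific objective) can be assembled into a prompt programmatically. The following is a minimal sketch, not an official template: the function name `build_prompt`, its parameters, and the wording of the generated text are all assumptions made for illustration.

```python
def build_prompt(symptoms, questions, objective):
    """Combine symptoms, general questions, and one objective into a prompt.

    Hypothetical helper: the structure and wording are illustrative only.
    """
    parts = []
    if symptoms:
        # Describe what you experience in concrete terms.
        parts.append("I experience: " + ", ".join(symptoms) + ".")
    if questions:
        # Add general questions about the condition.
        parts.append("Questions: " + " ".join(questions))
    # State exactly one clear objective for the response.
    parts.append("Goal: " + objective)
    return "\n".join(parts)


prompt = build_prompt(
    symptoms=["racing heart before meetings", "avoiding phone calls"],
    questions=["What commonly triggers social anxiety?"],
    objective="Suggest practical techniques to gradually build confidence.",
)
print(prompt)
```

The resulting text can be pasted into ChatGPT as-is. Keeping the objective on its own line makes it easy to swap in a different goal without rewriting the rest of the prompt.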

3. Spotting Misinformation

It is crucial to spot misinformation while using ChatGPT. However, this can be challenging due to the confident tone used by the chatbot. Any kind of health claim requires peer-reviewed scientific evidence. Therefore, while using it for mental health, always ask it to cite studies that support any health claims.

Another error that ChatGPT is prone to making is presenting made-up information as fact. It sometimes responds with logically inconsistent or inaccurate information that can be harmful. Its training data is also limited, so it may not reflect the latest scientific literature.

It may also produce wrong citations or links. Thus, manually checking claims using resources such as the PubMed search engine is essential. A good way to reduce the impact of incorrect responses is to limit your prompts to general advice and analysis. While ChatGPT can help you learn about various topics, refrain from using it to draw conclusions or diagnose conditions.
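To make manual checking quicker, you can turn the keywords of a claim into a PubMed search link. This is a minimal sketch that only builds a URL for the public PubMed search page using its `term` query parameter; the function name `pubmed_search_url` is a hypothetical helper, and the script does not fetch or evaluate any results for you.

```python
from urllib.parse import urlencode

# Public PubMed search page; the query goes in the "term" parameter.
PUBMED_SEARCH = "https://pubmed.ncbi.nlm.nih.gov/"


def pubmed_search_url(claim_keywords):
    """Build a PubMed search URL from a list of keywords (hypothetical helper)."""
    query = " ".join(claim_keywords)
    # urlencode escapes spaces and special characters safely.
    return PUBMED_SEARCH + "?" + urlencode({"term": query})


url = pubmed_search_url(["cognitive behavioral therapy", "social anxiety"])
print(url)
```

Open the printed link in a browser and read the studies yourself; a search hit alone does not confirm that a claim is supported.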

4. Privacy Concerns With ChatGPT

Any kind of health information is personal, and ensuring that the health data collected by ChatGPT is not misused is not easy. One of the main disadvantages is ChatGPT’s issues with privacy. OpenAI, the organization behind ChatGPT, states that your chat data is shared with service providers, affiliates, and other businesses.

While your data may be anonymized (stripped of all personal identifiers), it is still subject to cybersecurity risks. Additionally, there is no confidentiality agreement for health-specific data, and OpenAI stores your chat data on its servers for further use.

That may not be an issue if you do not enter personal information and sensitive health data. However, considering its overall impact on your data privacy, consulting a medical professional is much safer than using ChatGPT.
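One way to reduce what you share is to strip obvious identifiers from a message before pasting it into a chatbot. The sketch below is a rough illustration under stated assumptions: the `redact` function and its two regular expressions are hypothetical, they only catch simple email addresses and phone-like numbers, and they will miss names, addresses, and other identifying details.

```python
import re

# Deliberately simple patterns (assumptions, not a complete PII detector).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[email]", text)
    return PHONE.sub("[phone]", text)


message = "Reach me at jane@example.com or +1 555-123-4567 about my anxiety."
print(redact(message))
```

Treat this as a convenience, not a guarantee. Reading the message once more before sending it remains the more reliable safeguard.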

5. How ChatGPT Can Benefit Your Mental Health

One of the best ways to use the chatbot is for self-care, resource gathering, and education. Mental health can be a dense and vast subject to learn about. Whether you want to learn about a specific condition or overall best practices, information overload can affect your research.

ChatGPT helps you condense all that information into easily understandable points and illustrative examples. It can also summarize books into key takeaways, which helps you decide whether they are worth reading in full.

By sorting through the clutter of information, you can reduce the stress that comes with research. The chatbot is also effective in providing self-care tips. It can help you with a practical plan to manage emotions such as anger, frustration, and sadness. Additionally, use it to create personalized routines and schedules that can help you organize the day better.

You can also delegate several productivity-related tasks to it. This frees up time and can further reduce stress levels throughout the day.

6. Consider the Risks

While ChatGPT is a powerful tool for self-care and learning, it comes with some risks, including privacy issues, dependency, and bias in its data. The dataset that the bot is trained on is human-generated and therefore prone to several biases, and ChatGPT’s responses may reflect them.

Because ChatGPT responds almost instantly, personalized information has become extremely accessible. However, this also creates a risk of over-dependence. The need to manually filter through search results and judge which information is best is decreasing. In the long run, this may weaken critical thinking, reduce social interaction, and leave you more vulnerable when the technology fails or is wrong.

ChatGPT Is a Powerful Tool if Used With Caution

As chatbot technology progresses, responses should become more nuanced, logically sound, and informative. With the newer GPT-4 model, ChatGPT can access the internet and retrieve more relevant data. However, some risks, such as privacy concerns and bias, remain. Using ChatGPT in moderation and knowing how to verify health claims can help you avoid misinformation.

- Title: Key Considerations in Employing ChatGPT for Therapy Support Systems

- Author: Brian

- Created at : 2024-11-05 10:35:49

- Updated at : 2024-11-06 21:32:24

- Link: https://tech-savvy.techidaily.com/key-considerations-in-employing-chatgpt-for-therapy-support-systems/

- License: This work is licensed under CC BY-NC-SA 4.0.