Leveraging ChatGPT's API Power Efficiently

Key Takeaways

- OpenAI has released the ChatGPT API, allowing developers to integrate ChatGPT’s capabilities into their applications.

- To get started, you’ll need an OpenAI API key and a development environment with the official libraries.

- You can use the ChatGPT API for both chat completion and text completion tasks, opening up possibilities for various applications.

With the release of its API, OpenAI has opened up the capabilities of ChatGPT to everyone. You can now seamlessly integrate ChatGPT’s features into your application.

Follow these steps to get started, whether you’re looking to integrate ChatGPT into your existing application or develop new applications with it.

1. Getting an OpenAI API Key

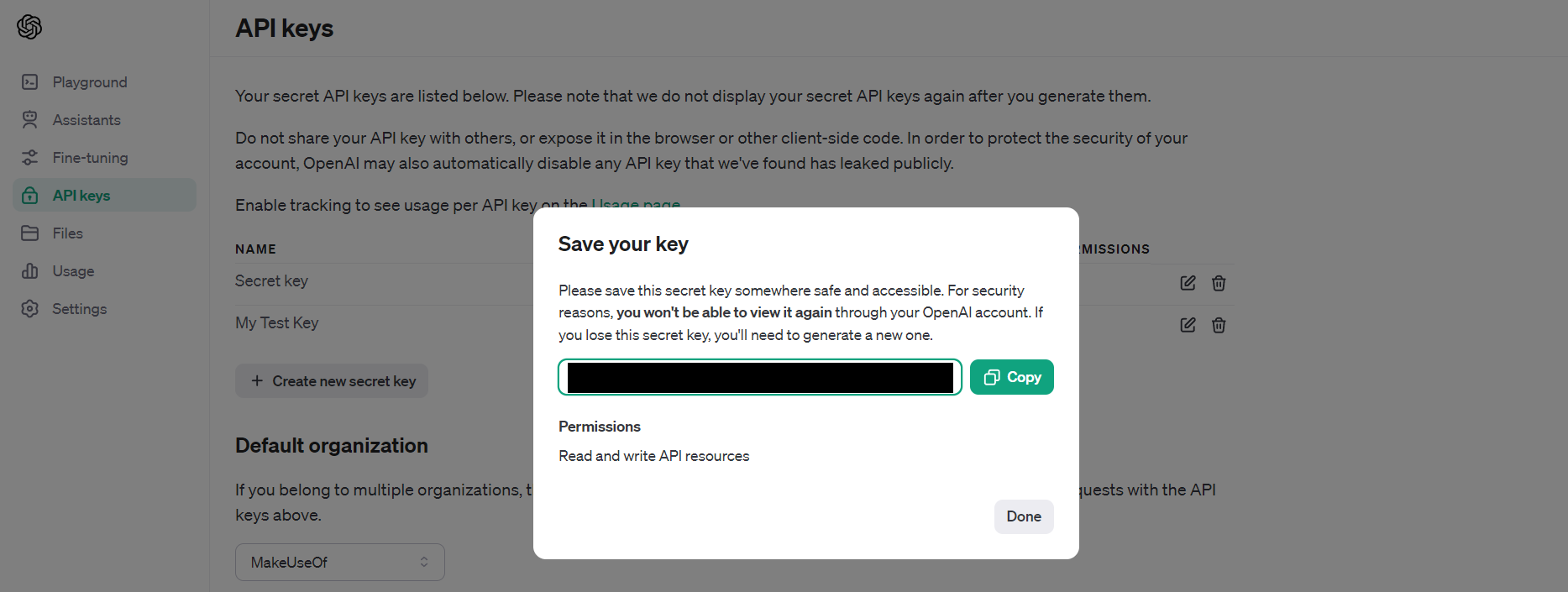

To start using the ChatGPT API, you need to obtain an API key.

- Sign up or log in to the official OpenAI platform.

- Once you’re logged in, click on the API keys tab in the left pane.

- Next, click on the Create new secret key button to generate the API key.

- You won’t be able to view the API key again, so copy it and store it somewhere safe.

The code used in this project is available in a GitHub repository and is free for you to use under the MIT license.

2. Setting Up the Development Environment

You can use the API endpoint directly or take advantage of the openai Python/JavaScript library to start building ChatGPT API-powered applications. This guide uses Python and the openai-python library.

To get started:

- Create a Python virtual environment

- Install the openai and python-dotenv libraries via pip:

pip install openai python-dotenv

- Create a .env file in the root of your project directory to store your API key securely.

- Next, in the same file, set the OPENAI_API_KEY variable with the key value that you copied earlier:

OPENAI_API_KEY="YOUR_API_KEY"

Make sure you do not accidentally share your API key via version control. Add a .gitignore file to your project’s root directory and add “.env” to it to ignore the dotenv file.
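Before making any request, it can help to confirm that the key is actually visible to your program. The following is a minimal sketch, not part of the original project: `require_api_key` is a hypothetical helper name, and it assumes `load_dotenv()` has already copied the values from .env into the process environment.

```python
import os


def require_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the API key from the environment, or raise if it is missing.

    Hypothetical helper for illustration; it is not part of any library.
    """
    env = os.environ if env is None else env
    key = (env.get(name) or "").strip()
    # Treat the placeholder from the .env example as "not set".
    if not key or key == "YOUR_API_KEY":
        raise RuntimeError(f"{name} is not set; add it to your .env file")
    return key
```

Calling `require_api_key()` right after `load_dotenv()` surfaces a missing or placeholder key early, instead of as an authentication error on the first API call.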

3. Making ChatGPT API Requests

The OpenAI API’s GPT-3.5 Turbo, GPT-4, and GPT-4 Turbo are the same models that ChatGPT uses. These models can understand and generate natural language text and code. GPT-4 Turbo can also process image inputs, which enables uses such as analyzing images, parsing documents with figures, and transcribing text from images.

Please note that the ChatGPT API is a general term that refers to OpenAI APIs that use GPT-based models, including the gpt-3.5-turbo, gpt-4, and gpt-4-turbo models.

The ChatGPT API is primarily optimized for chat but it also works well for text completion tasks. Whether you want to generate code, translate languages, or draft documents, this API can handle it all.

To get access to the GPT-4 API, you need to make a successful payment of $1 or more. Otherwise, you might get an error similar to “The model `gpt-4` does not exist or you do not have access to it.”

Using the API for Chat Completion

You need to configure the chat model to get it ready for an API call. Here’s an example:

```python
from openai import OpenAI
from dotenv import load_dotenv

# Load OPENAI_API_KEY from the .env file into the environment
load_dotenv()

# The client reads the API key from the environment
client = OpenAI()

response = client.chat.completions.create(
    model="gpt-3.5-turbo-0125",
    temperature=0.8,
    max_tokens=3000,
    response_format={"type": "json_object"},
    messages=[
        {"role": "system", "content": "You are a funny comedian who tells dad jokes. The output should be in JSON format."},
        {"role": "user", "content": "Write a dad joke related to numbers."},
        {"role": "assistant", "content": "Q: How do you make 7 even? A: Take away the s."},
        {"role": "user", "content": "Write one related to programmers."},
    ],
)
```

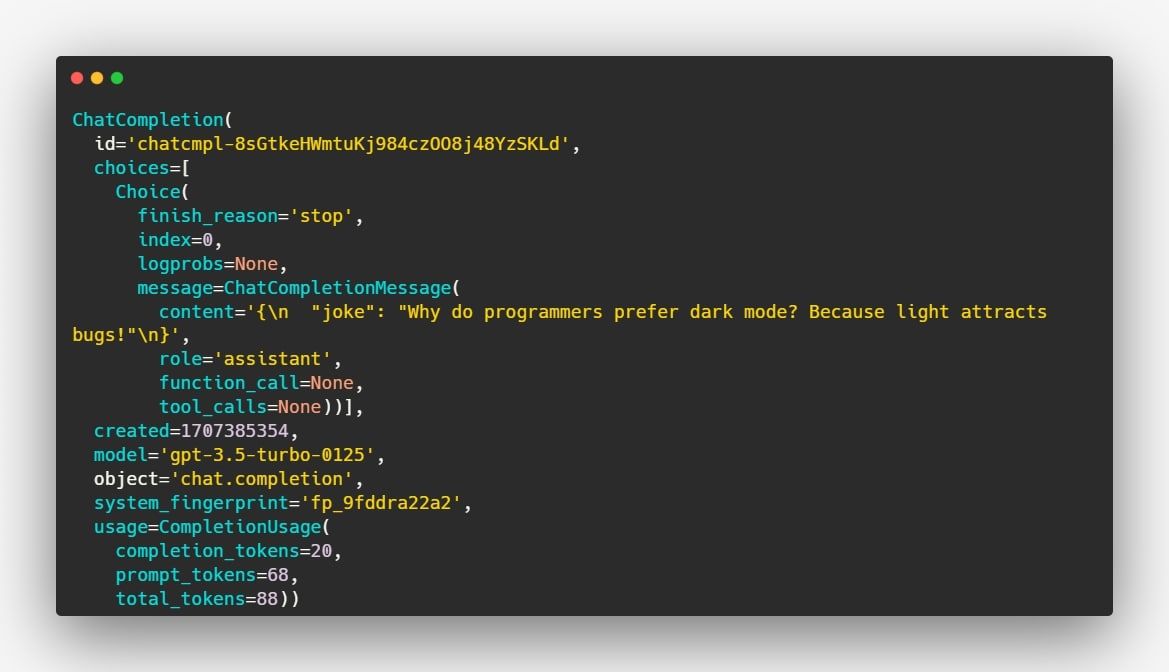

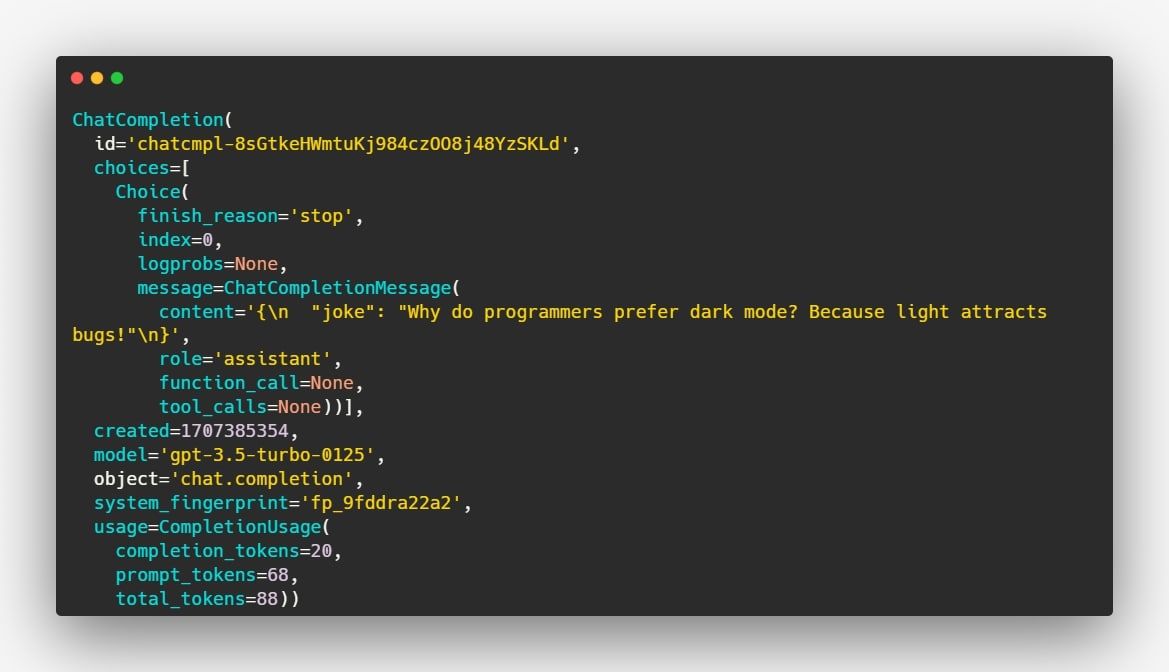

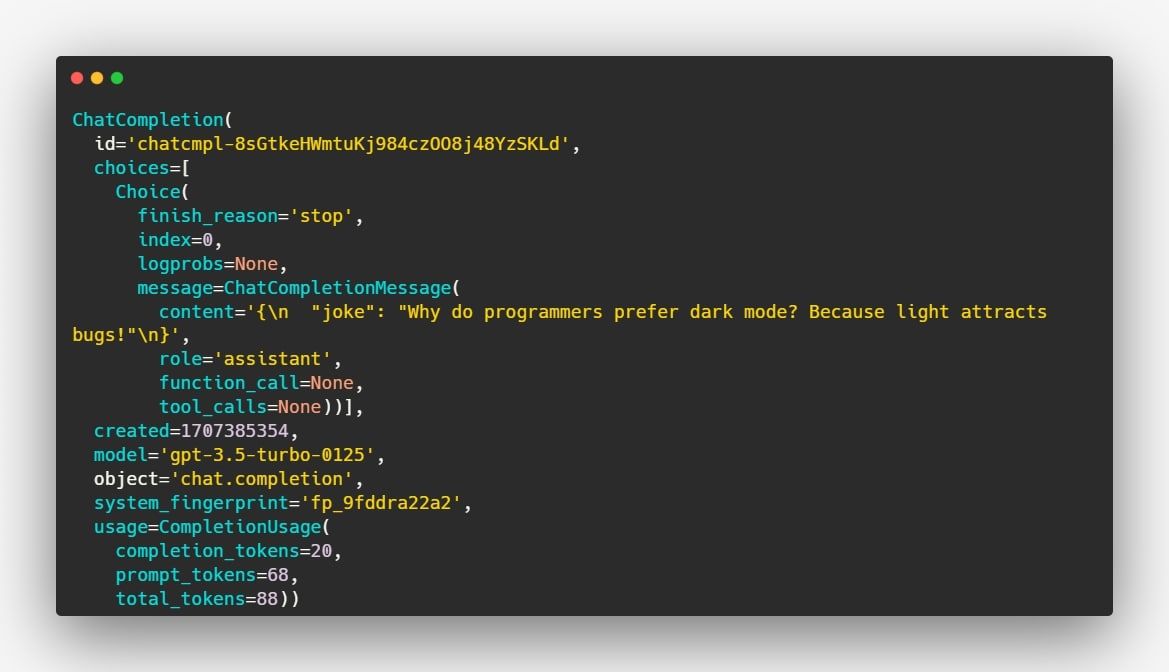

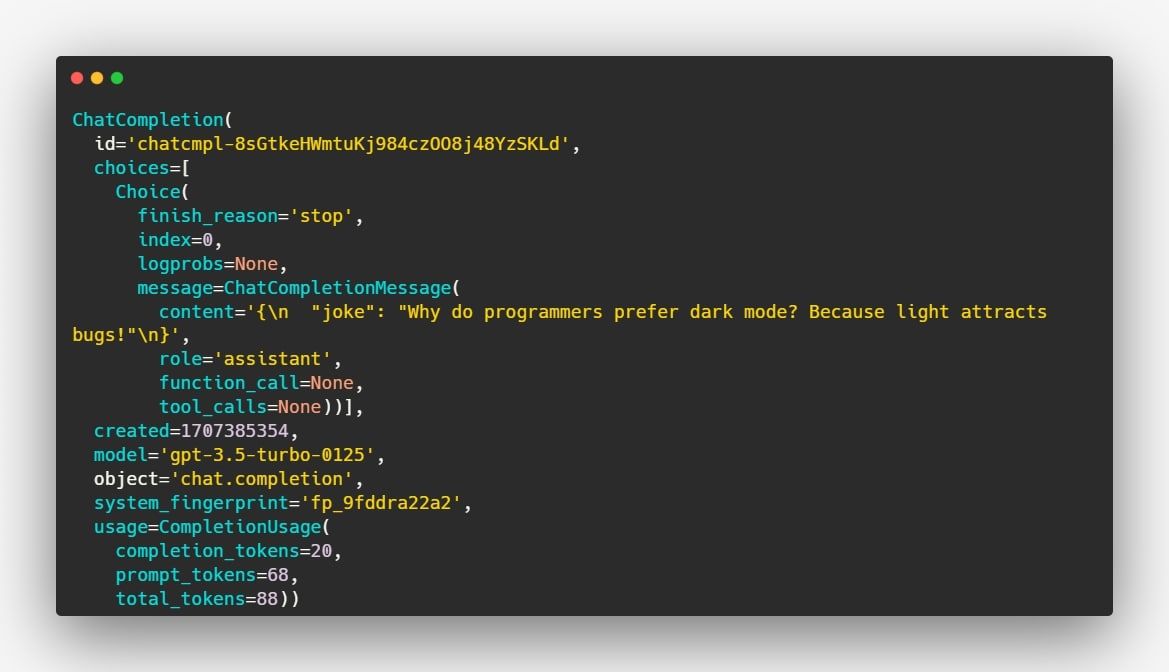

The ChatGPT API returns a response object whose choices list holds the generated message. You can extract the content from the response, as a JSON string, with this code:

print(response.choices[0].message.content)

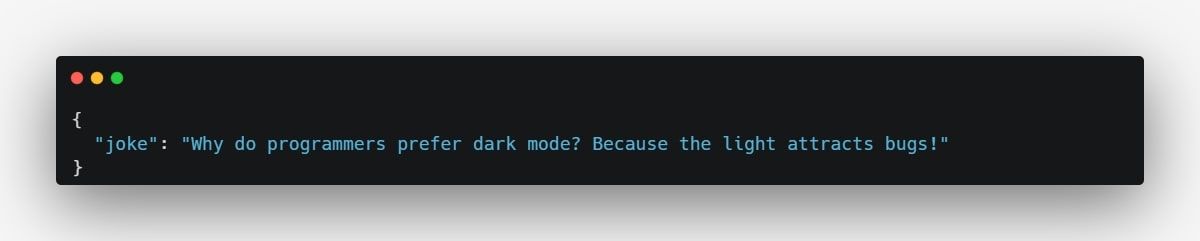

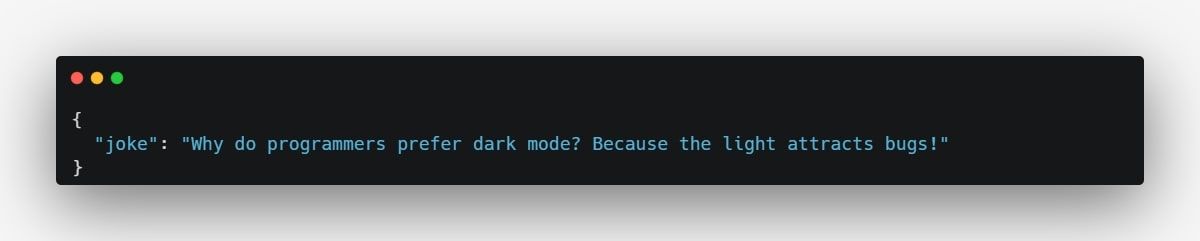

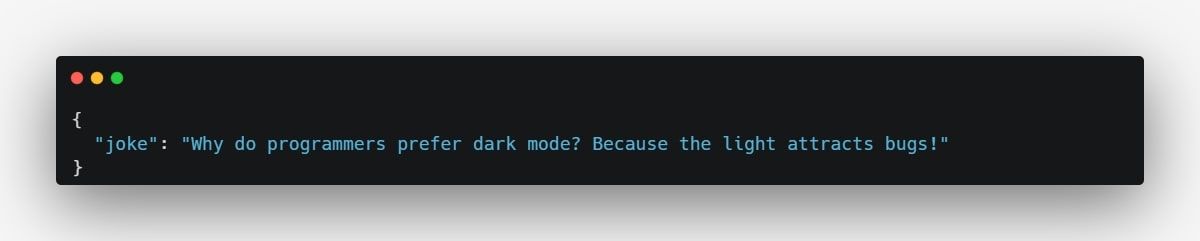

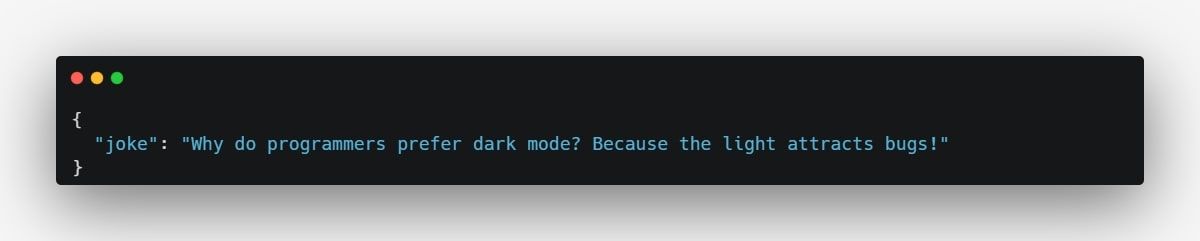

Running this code prints a programmer-themed dad joke as a JSON string.

The code demonstrates a ChatGPT API call using Python. Note that the model understood the context (“dad joke”) and the type of response (Q&A form) that we were expecting, based on the prompts fed to it.

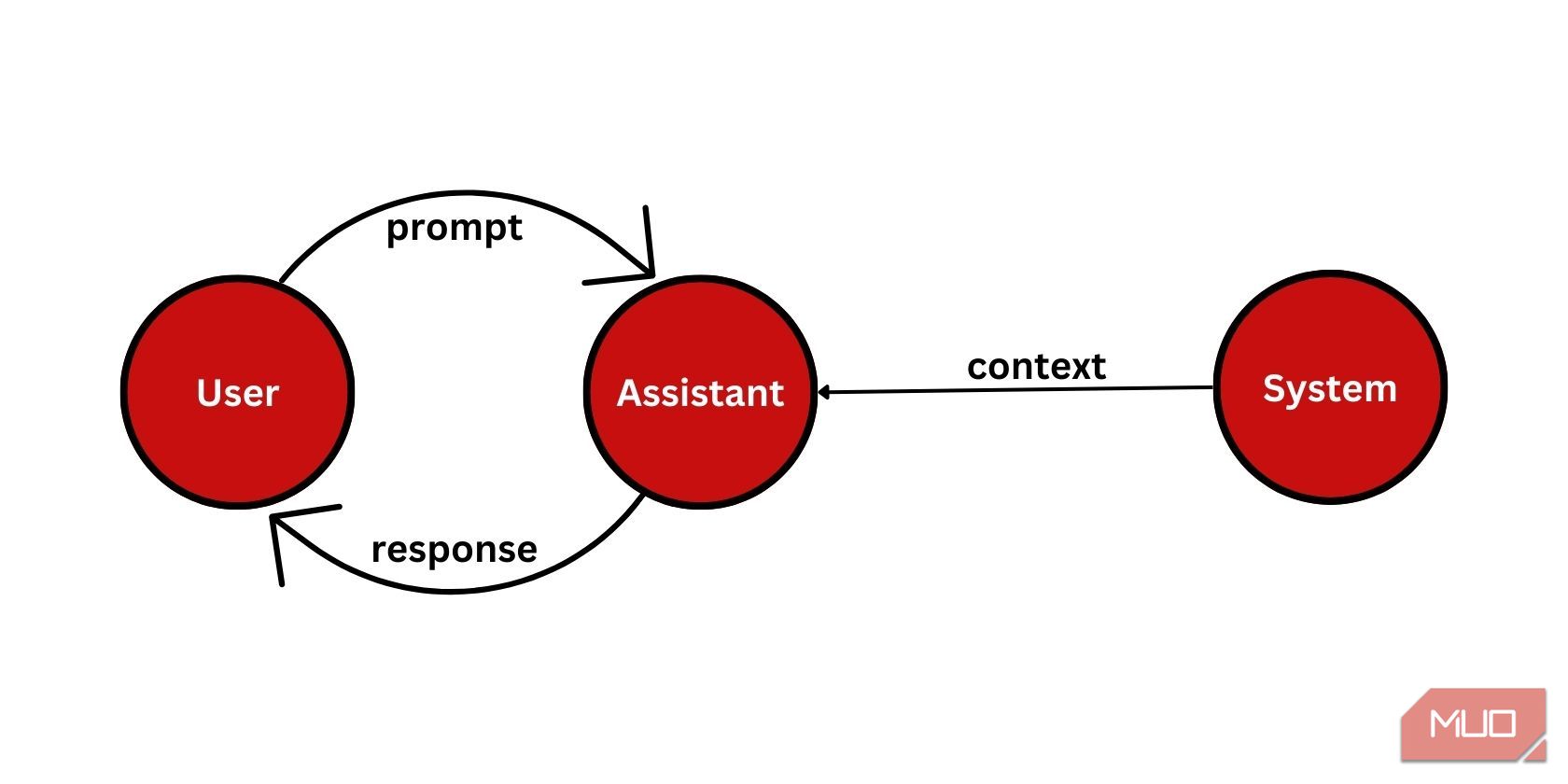

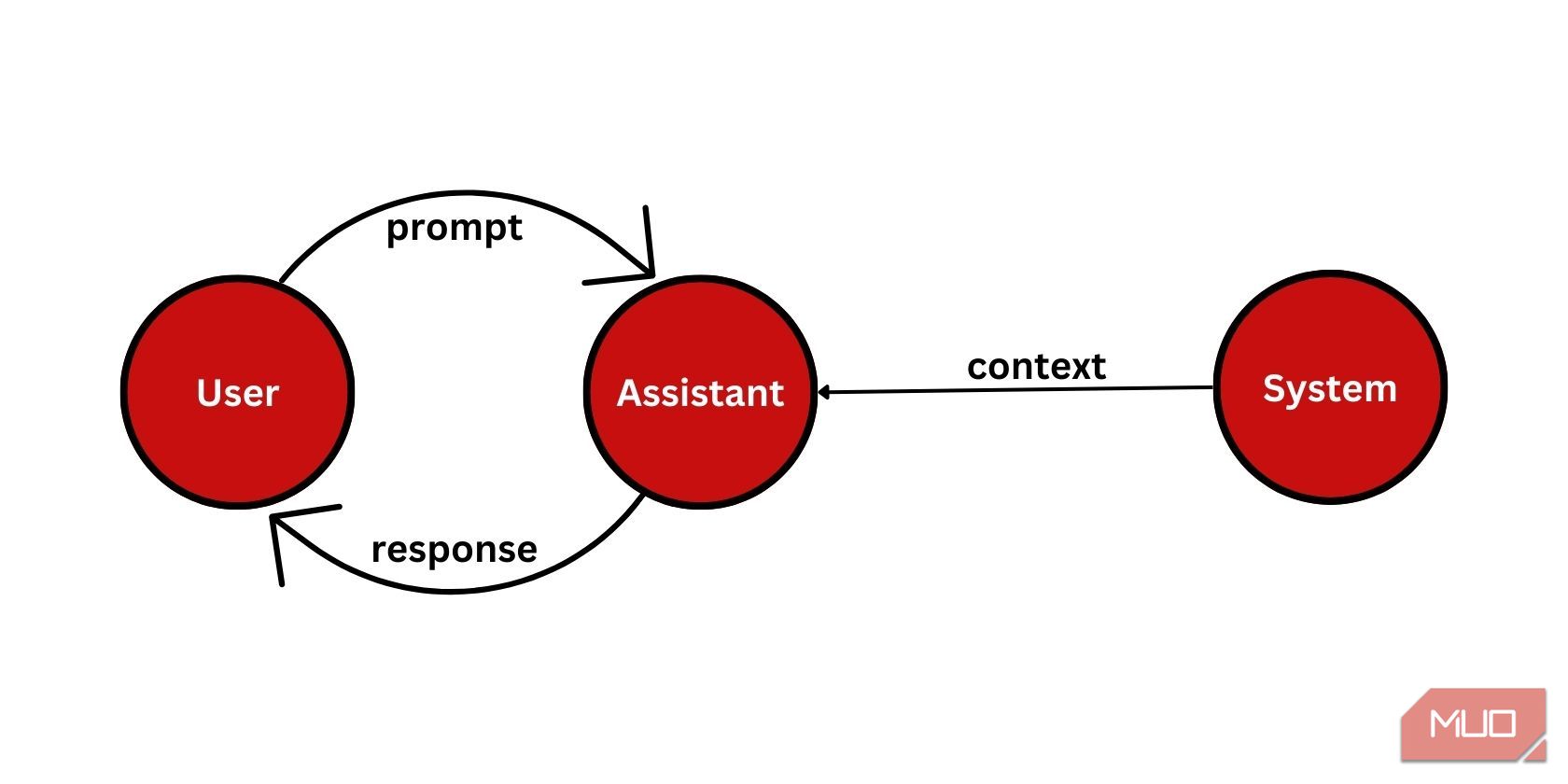

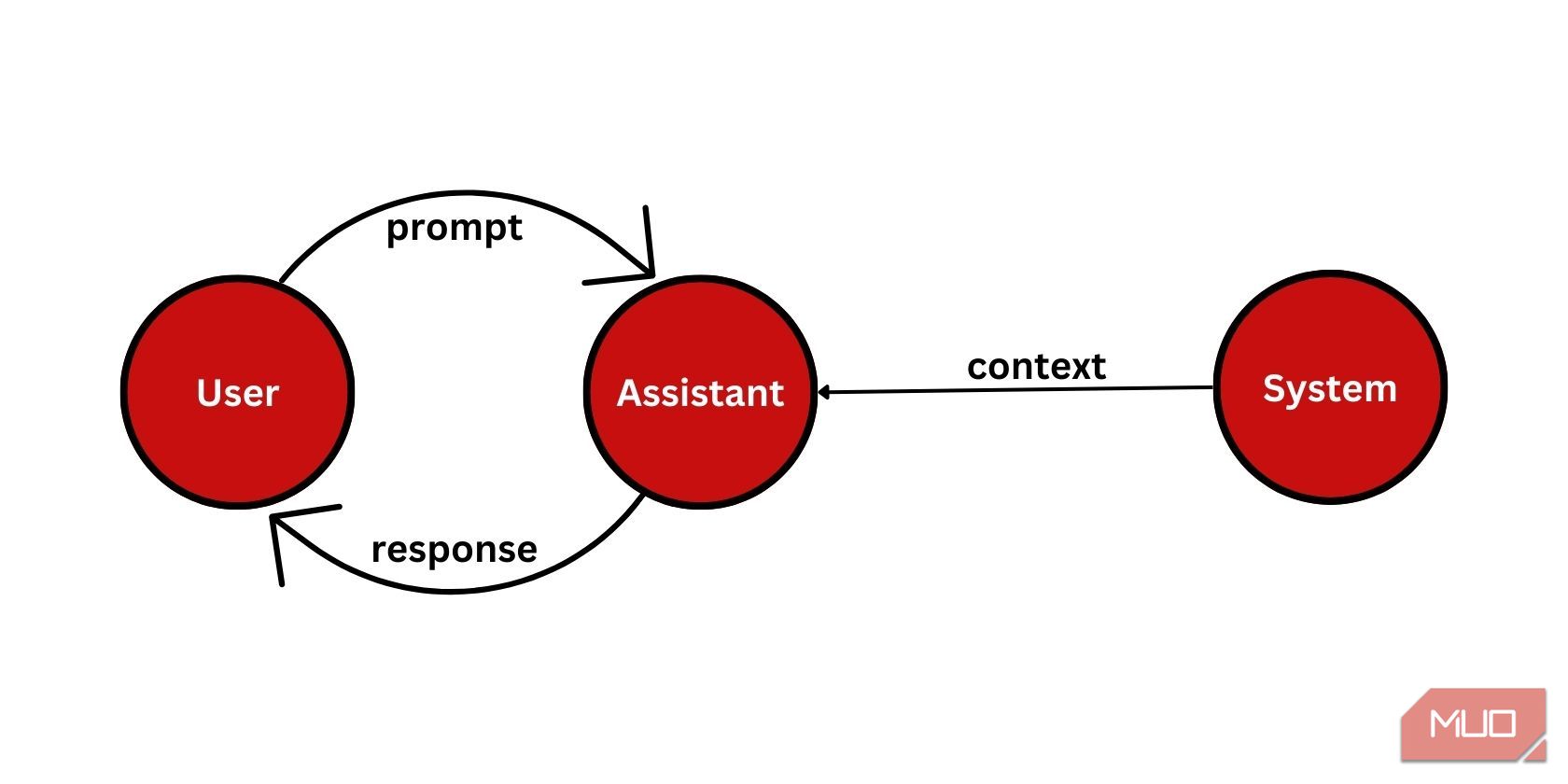

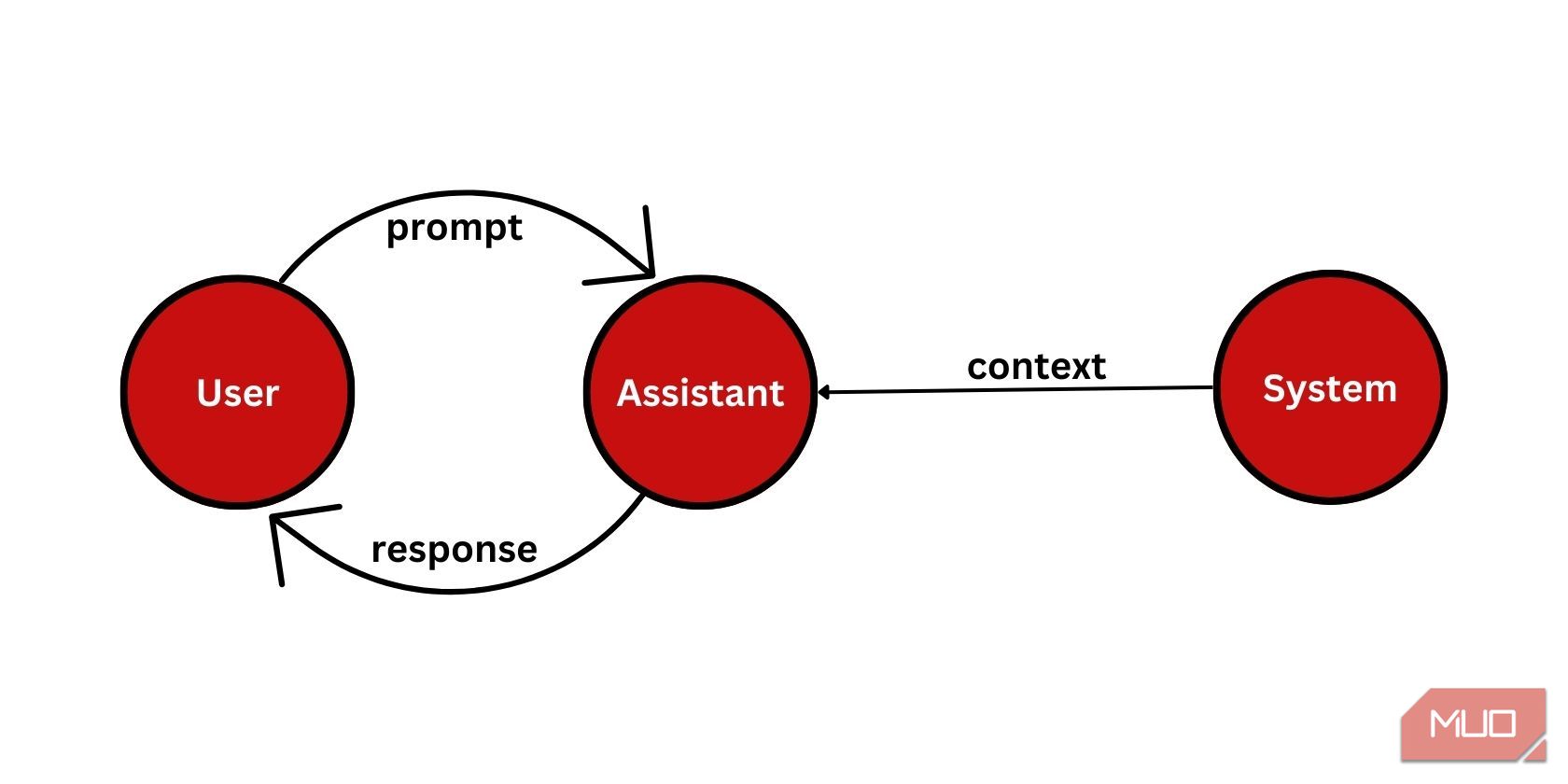

The most significant part of the configuration is the messages parameter, which accepts an array of message objects. Each message object contains a role and content. You can use three types of roles:

- system, which sets up the context and behavior of the assistant.

- user, which gives instructions to the assistant. The end user will typically provide this, but you can also provide some default user prompts in advance.

- assistant, which can include example responses.
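To make the three roles concrete, here is a small sketch that assembles a messages list from a system prompt, a few example exchanges, and the new user prompt. The `build_messages` function is a hypothetical helper written for this illustration; it is not part of the openai library.

```python
def build_messages(system_prompt, examples, user_prompt):
    """Assemble a messages list: system prompt, example turns, then the new prompt.

    `examples` is a list of (user_text, assistant_text) pairs.
    Hypothetical helper for illustration only.
    """
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for user_text, assistant_text in examples:
        # Each example is a user turn followed by the reply we want to imitate.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_messages(
    "You are a funny comedian who tells dad jokes.",
    [("Write a dad joke related to numbers.",
      "Q: How do you make 7 even? A: Take away the s.")],
    "Write one related to programmers.",
)
print([m["role"] for m in messages])  # ['system', 'user', 'assistant', 'user']
```

The resulting list can be passed as the messages argument of `client.chat.completions.create`.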

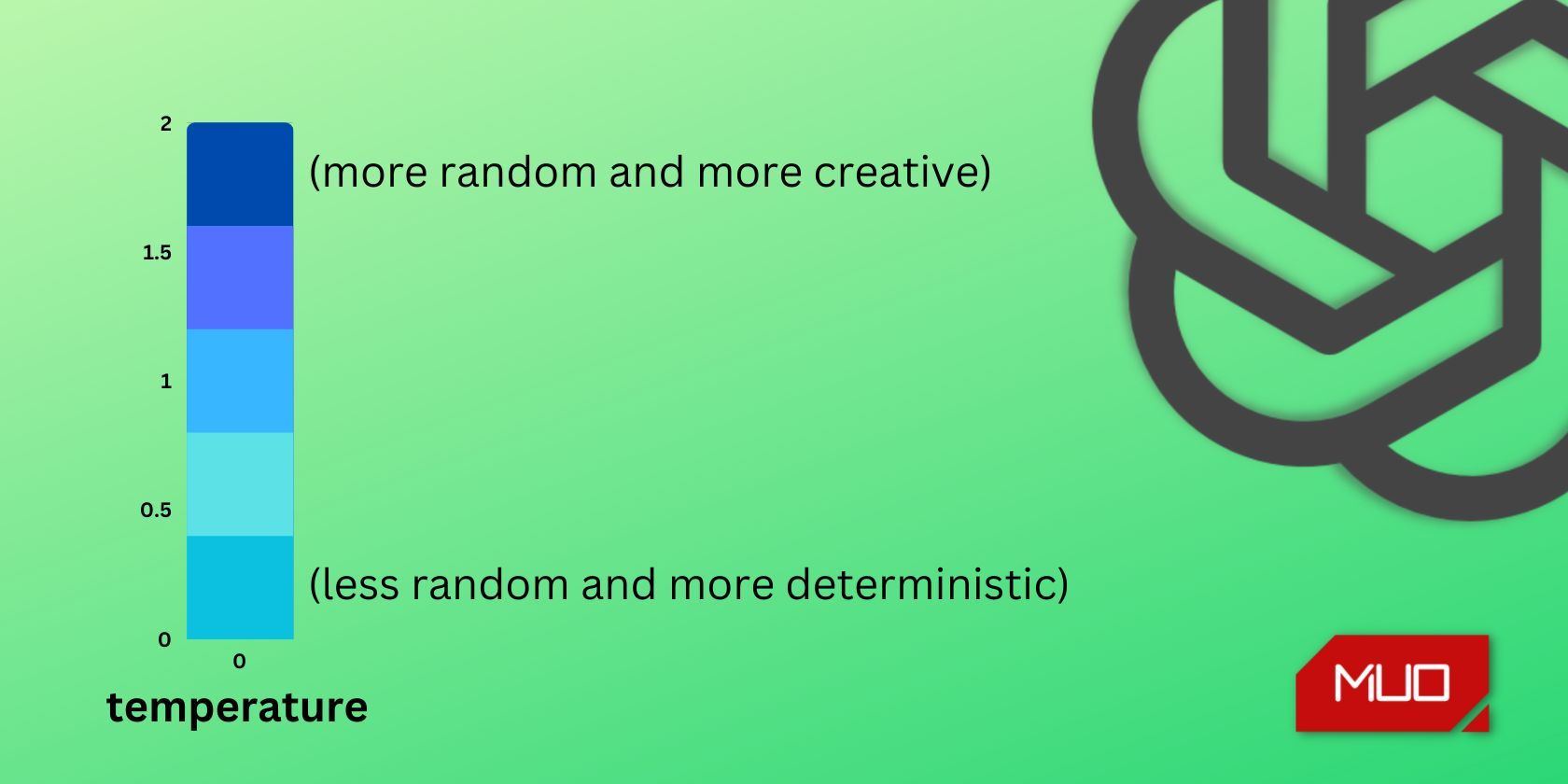

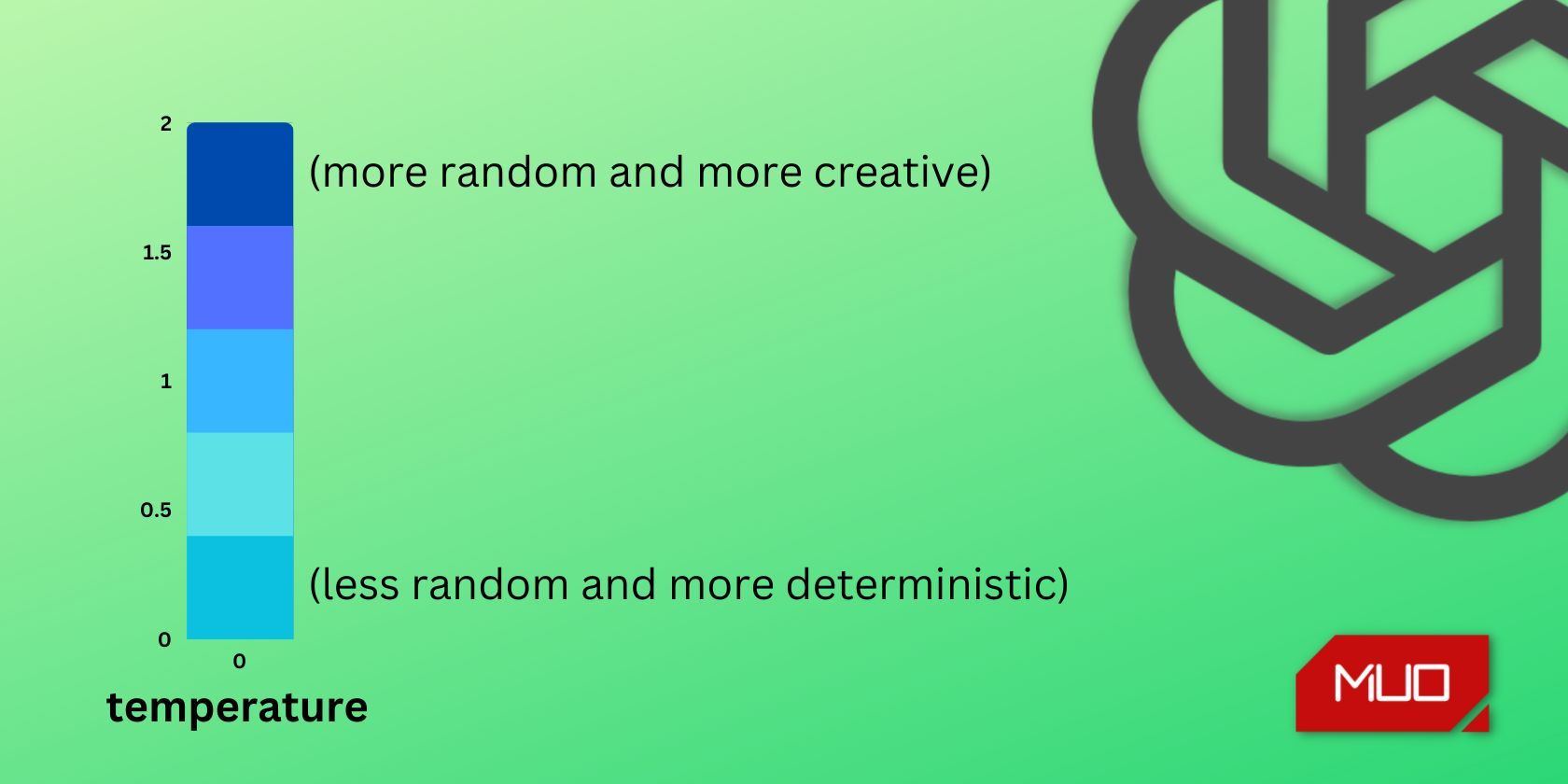

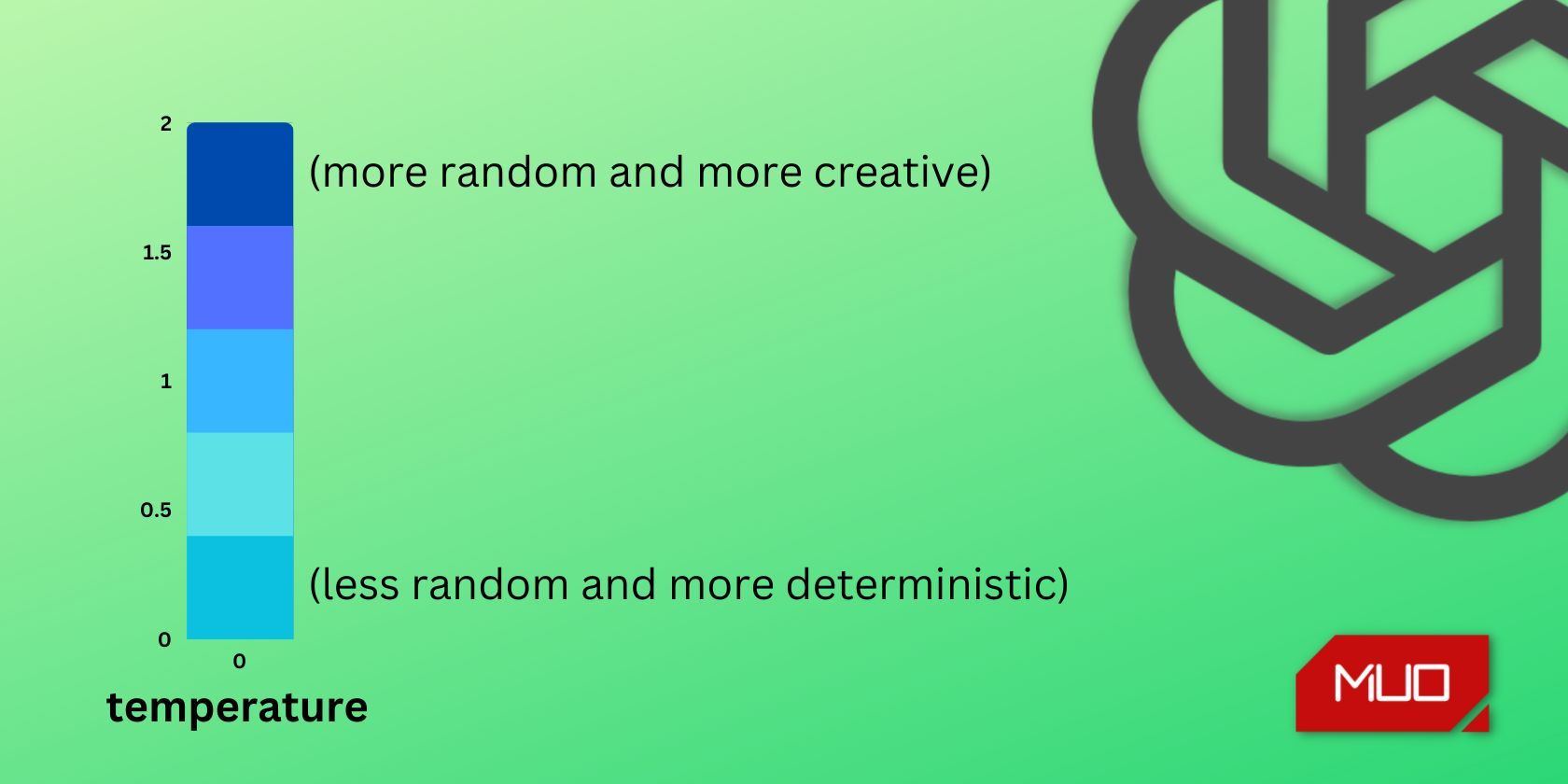

You can further customize the temperature and max_tokens parameters of the model to get the output according to your requirements.

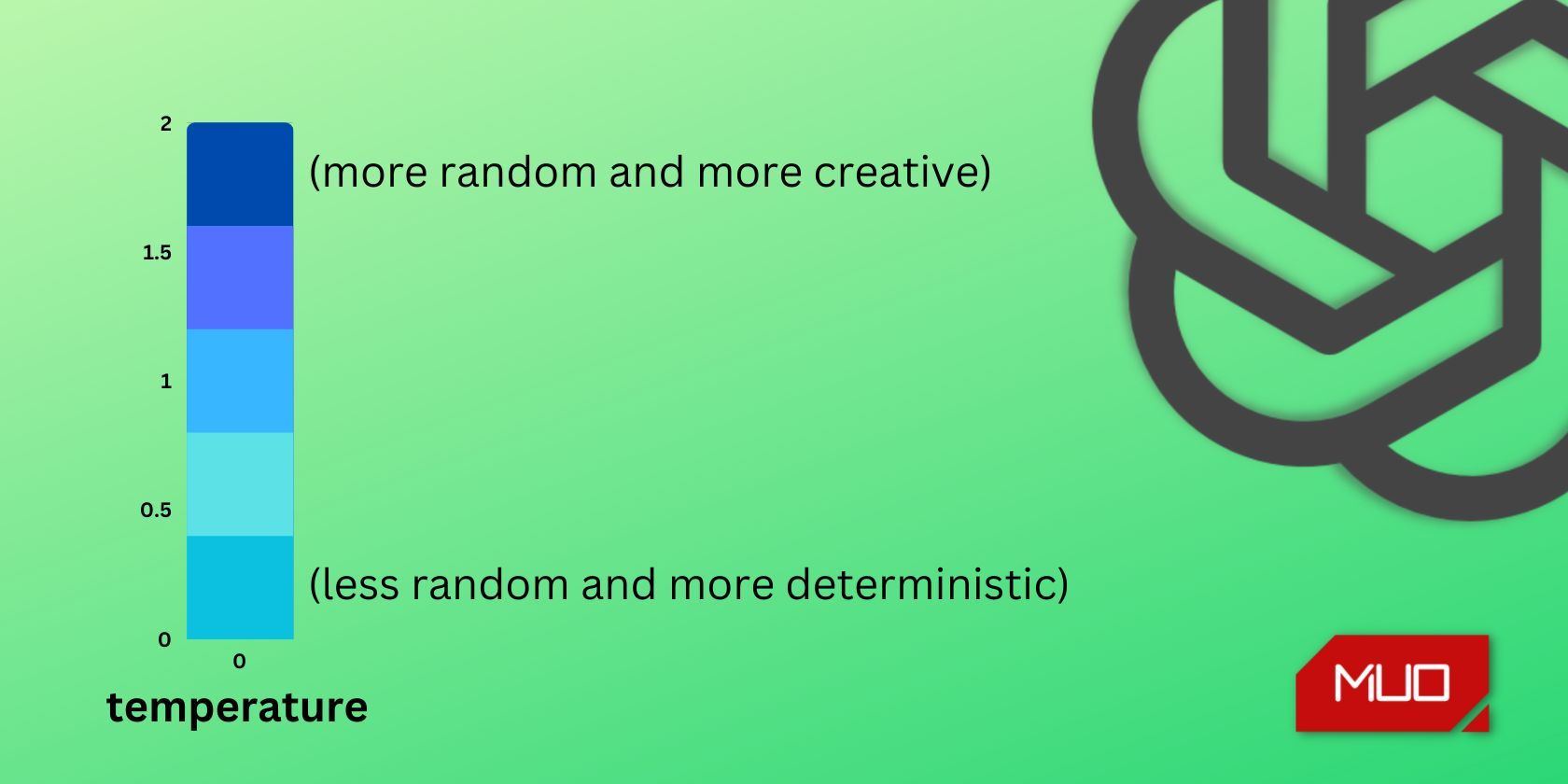

The higher the temperature, the higher the randomness of the output, and vice versa. If you want your responses to be more focused and deterministic, use a lower temperature value. If you want them to be more creative, use a higher value. The temperature value ranges between 0 and 2.

Like ChatGPT, the API also limits response length, measured in tokens rather than words. Use the max_tokens parameter to cap the length of responses. Be aware that setting max_tokens too low can cause issues, as it may cut off the output midway.
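One way to notice a cut-off reply is to inspect the finish_reason field of the returned choice, which the Chat Completions API sets to "length" when generation stopped at the token limit. The sketch below assumes that response shape; `was_truncated` is a hypothetical helper name, and the stand-in object only mimics the fields used so that the example runs without an API call.

```python
from types import SimpleNamespace


def was_truncated(response):
    """Return True if the first choice stopped because it hit the token limit."""
    return response.choices[0].finish_reason == "length"


# Stand-in object that mimics only the fields used above (not a real API response).
fake_response = SimpleNamespace(
    choices=[
        SimpleNamespace(
            finish_reason="length",
            message=SimpleNamespace(content="Why did the prog"),
        )
    ]
)
print(was_truncated(fake_response))  # True
```

If the check returns True, you could retry with a higher max_tokens value or ask for a shorter answer in the prompt.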

At the time of writing, the gpt-3.5-turbo model has a token limit of 4,096, while gpt-4’s is 8,192. The latest gpt-3.5-turbo-0125 and gpt-4-turbo-preview models have limits of 16,385 and 128,000 respectively.

After high demand from developers, OpenAI introduced JSON mode, which instructs the model to always return a JSON object. You can enable JSON mode by setting response_format to { "type": "json_object" }. Currently, this feature is only available in the latest models: gpt-3.5-turbo-0125 and gpt-4-turbo-preview.
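Because the content still arrives as a string, you will usually want to parse it before use. The following is a minimal sketch using Python's standard json module; `parse_json_reply` is a hypothetical helper, and the keys in the sample string are made up for illustration, since the model chooses its own keys unless the prompt specifies them.

```python
import json


def parse_json_reply(content):
    """Parse a JSON-mode reply into a dict, or return None if it is not valid JSON."""
    try:
        data = json.loads(content)
    except (json.JSONDecodeError, TypeError):
        # A reply cut off by max_tokens, for example, may not parse.
        return None
    return data if isinstance(data, dict) else None


# Illustrative sample only; a real reply comes from response.choices[0].message.content.
sample = '{"question": "Why do programmers prefer dark mode?", "answer": "Because light attracts bugs."}'
joke = parse_json_reply(sample)
print(joke["answer"])  # Because light attracts bugs.
```

Returning None instead of raising keeps the calling code simple when a reply is incomplete.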

You can further configure the model using the other parameters provided by OpenAI.

Using the ChatGPT API for Text Completion

In addition to multi-turn conversation tasks, the Chat Completions API (ChatGPT API) does a good job with text completion. The following example demonstrates how you can configure the ChatGPT API for text completion:

```python
from openai import OpenAI
from dotenv import load_dotenv

# Load OPENAI_API_KEY from the .env file into the environment
load_dotenv()

client = OpenAI()

response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    temperature=0.8,
    max_tokens=3000,
    messages=[
        {"role": "system", "content": "You are a poet who creates poems that evoke emotions."},
        {"role": "user", "content": "Write a short poem for programmers."},
    ],
)

# Print only the generated text
print(response.choices[0].message.content)
```

You don’t even need to provide the system role and its content. Providing just the user prompt will do the work for you.

messages = [ {"role": "user", "content": "Write a short poem for programmers."} ]

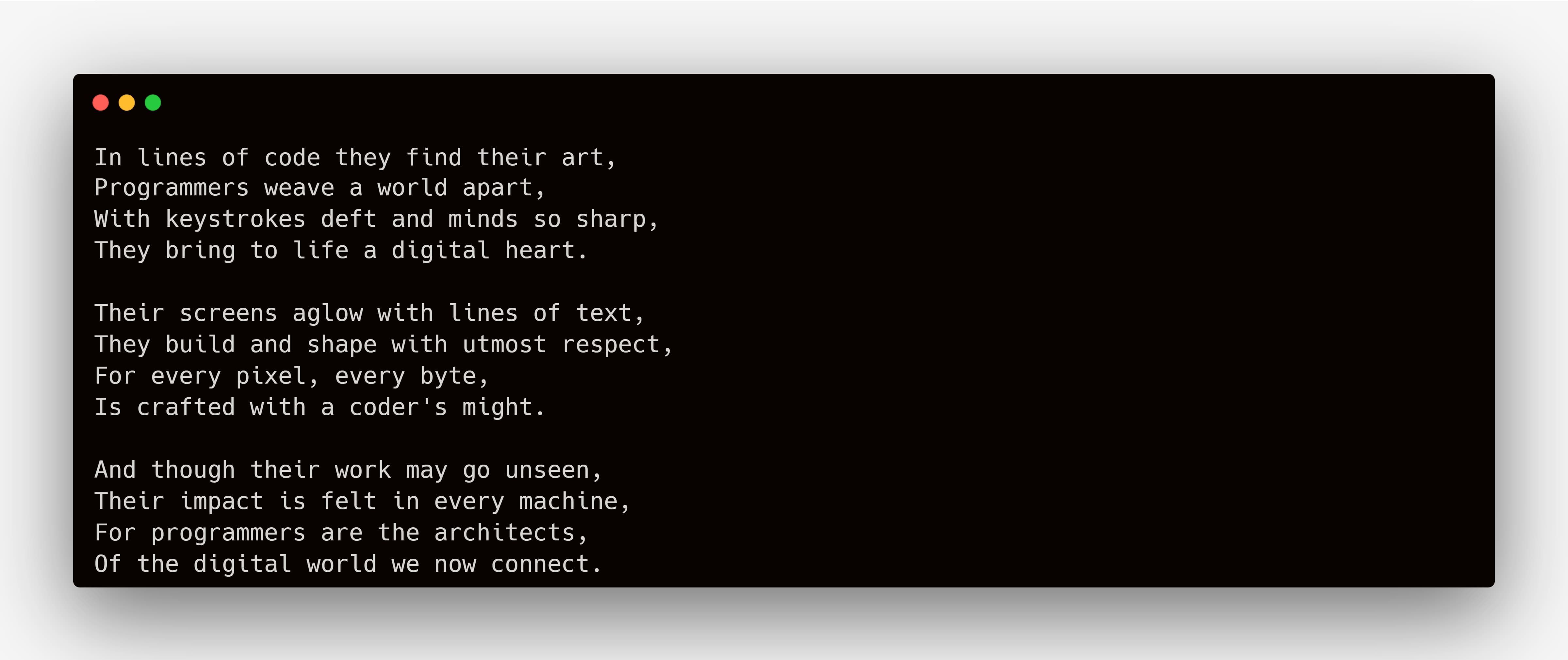

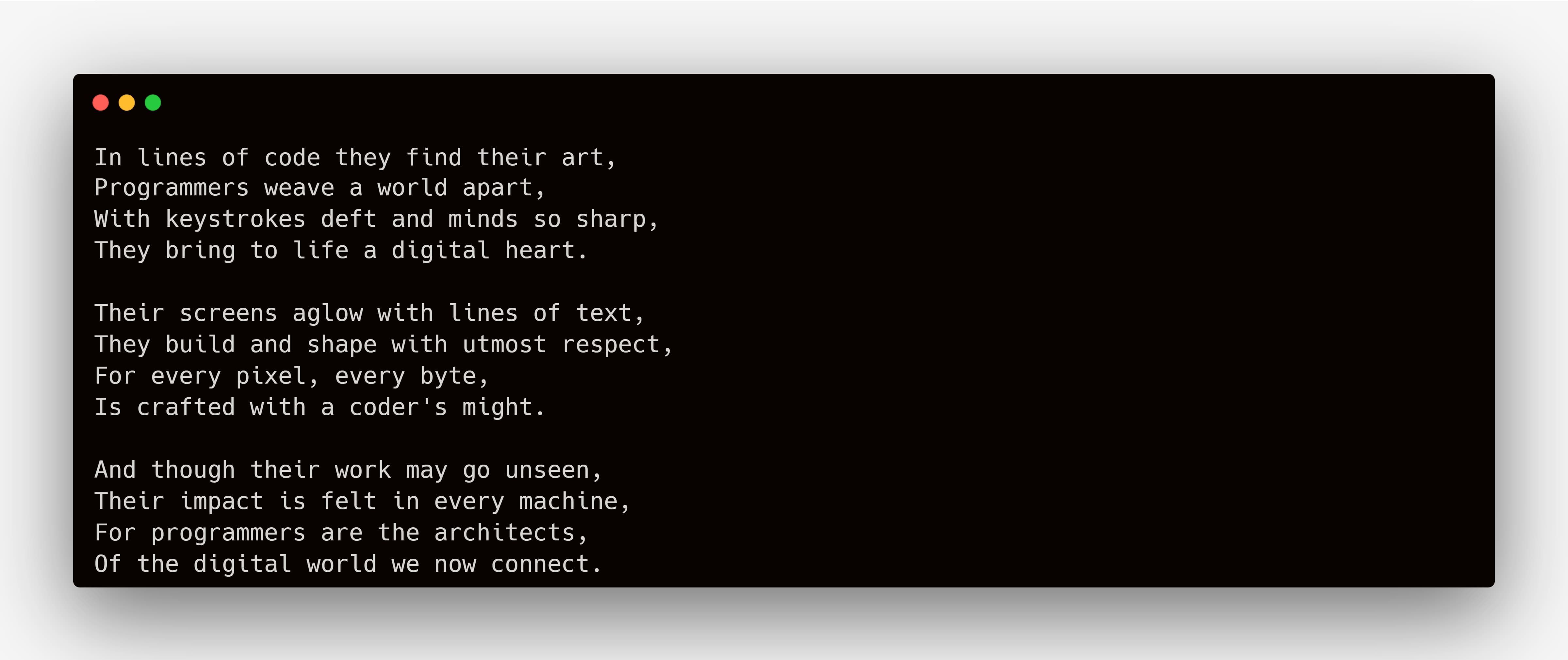

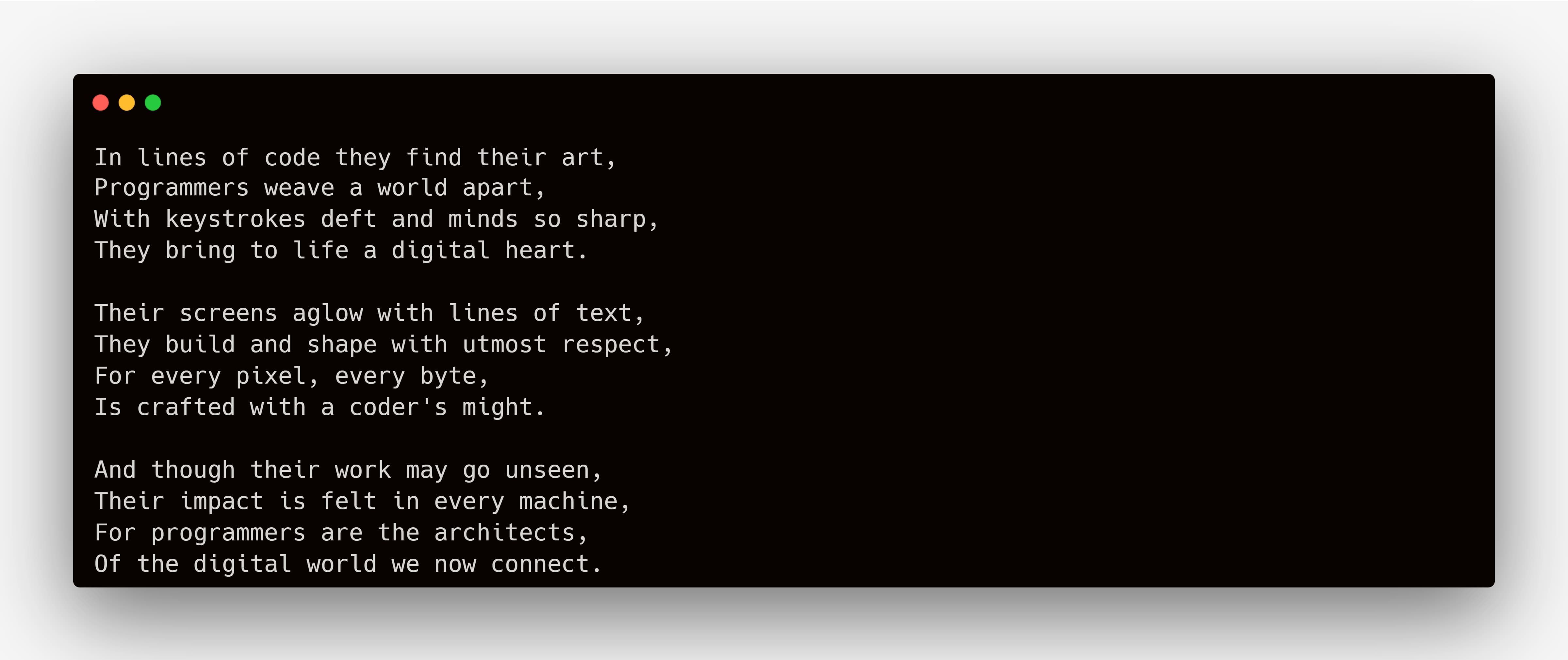

Running the above code will generate a short poem for programmers.
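If you make many single-prompt requests, the call can be wrapped in a small function. This is a sketch rather than a recommended design: `complete_text` is a hypothetical name, and it takes the client as an argument so you can pass the `OpenAI()` instance created earlier.

```python
def complete_text(client, prompt, model="gpt-3.5-turbo", max_tokens=3000):
    """Send a single user prompt and return the generated text.

    `client` is expected to be an openai.OpenAI instance.
    """
    response = client.chat.completions.create(
        model=model,
        max_tokens=max_tokens,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

For example, `complete_text(client, "Write a short poem for programmers.")` returns the poem as a string instead of printing it.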

ChatGPT API Pricing

The ChatGPT API pricing is based on the “price per 1,000 tokens” model. For chat completion requests, the cost is calculated based on the number of input tokens plus the number of output tokens returned by the API. In layman’s terms, tokens are equivalent to pieces of words, where 1,000 tokens are approximately equal to 750 words.

| Model | Input | Output |
|---|---|---|
| gpt-4-0125-preview | $0.01 / 1K tokens | $0.03 / 1K tokens |
| gpt-4-1106-preview | $0.01 / 1K tokens | $0.03 / 1K tokens |
| gpt-4-1106-vision-preview | $0.01 / 1K tokens | $0.03 / 1K tokens |
| gpt-4 | $0.03 / 1K tokens | $0.06 / 1K tokens |
| gpt-4-32k | $0.06 / 1K tokens | $0.12 / 1K tokens |
| gpt-3.5-turbo-0125 | $0.0005 / 1K tokens | $0.0015 / 1K tokens |
| gpt-3.5-turbo-instruct | $0.0015 / 1K tokens | $0.0020 / 1K tokens |

Note that the pricing may change over time with improvements in the model.
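As a worked example of the arithmetic, the sketch below estimates the cost of one request from its token counts. The prices are copied from a few rows of the table above and may be out of date; `estimate_cost` and `PRICES_PER_1K` are hypothetical names used only for this illustration.

```python
# Prices in USD per 1,000 tokens as (input, output), copied from the table above.
PRICES_PER_1K = {
    "gpt-4-0125-preview": (0.01, 0.03),
    "gpt-4": (0.03, 0.06),
    "gpt-3.5-turbo-0125": (0.0005, 0.0015),
}


def estimate_cost(model, input_tokens, output_tokens):
    """Return the estimated cost in USD for one request."""
    input_price, output_price = PRICES_PER_1K[model]
    return (input_tokens / 1000) * input_price + (output_tokens / 1000) * output_price


# 1,000 input tokens and 500 output tokens on gpt-3.5-turbo-0125:
# 1.0 * 0.0005 + 0.5 * 0.0015 = 0.00125
cost = estimate_cost("gpt-3.5-turbo-0125", input_tokens=1000, output_tokens=500)
print(f"${cost:.5f}")  # $0.00125
```

For a real request, the token counts are reported on the response as `response.usage.prompt_tokens` and `response.usage.completion_tokens`.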

Build Next Generation Apps Using the ChatGPT API

The ChatGPT API has opened gates for developers around the world to build innovative products with the power of AI.

You can use this tool to develop applications like story writers, code translators, marketing copy generators, and text summarizers. Your imagination is the limit to building applications using this technology.

- Title: Leveraging ChatGPT's API Power Efficiently

- Author: Brian

- Created at : 2025-01-19 00:40:40

- Updated at : 2025-01-24 18:44:54

- Link: https://tech-savvy.techidaily.com/leveraging-chatgpts-api-power-efficiently/

- License: This work is licensed under CC BY-NC-SA 4.0.