Llama 2 Uncovered: Its Core Functions and Potential

Key Takeaways

- Llama 2, an open-source language model, outperforms other major open-source models like Falcon or MPT, making it one of the most powerful on the market today.

- Compared to ChatGPT and Bard, Llama 2 shows promise in coding skills, performing well in functional tasks but struggling with more complex ones like creating a Tetris game.

- While Llama 2 falls behind ChatGPT in terms of creativity, math skills, and commonsense reasoning, it shows significant potential and has solved problems that ChatGPT and Bard couldn’t in their earliest iterations.

From OpenAI’s GPT-4 to Google’s PaLM 2, large language models dominate tech headlines. Each new model promises to be better and more powerful than the last, sometimes exceeding any existing competition.

However, the number of existing models hasn’t slowed the emergence of new ones. Now, Facebook’s parent company, Meta, has released Llama 2, a powerful new language model. But what’s unique about Llama 2? How is it different from the likes of GPT-4, PaLM 2, and Claude 2, and why should you care?

What Is Llama 2?

Llama 2, a large language model, is a product of an uncommon alliance between Meta and Microsoft, two competing tech giants at the forefront of artificial intelligence research. It is a successor to Meta’s Llama 1 language model, released in the first quarter of 2023.

You can say it is Meta’s equivalent of Google’s PaLM 2, OpenAI’s GPT-4, and Anthropic’s Claude 2. It has been trained on a vast dataset of publicly available internet data, one that is both more recent and more diverse than the dataset used to train Llama 1. Llama 2 was trained with 40% more data than its predecessor and has double the context length (4k tokens).

If you’ve had the opportunity to interact with Llama 1 in the past but weren’t too impressed with its output, Llama 2 outperforms its predecessor and might just be what you need. But how does it fare against outside competition?

How Does Llama 2 Stack Up Against The Competition?

Well, it depends on the competition it is up against. Firstly, Llama 2 is an open-source project. This means Meta is publishing the entire model, so anyone can use it to build new models or applications. If you compare Llama 2 to other major open-source language models like Falcon or MPT, you will find it outperforms them in several metrics. It is safe to say Llama 2 is one of the most powerful open-source large language models on the market today. But how does it stack up against juggernauts like OpenAI’s GPT and Google’s PaLM line of AI models?

We assessed ChatGPT, Bard, and Llama 2 on their performance on tests of creativity, mathematical reasoning, practical judgment, and coding skills.

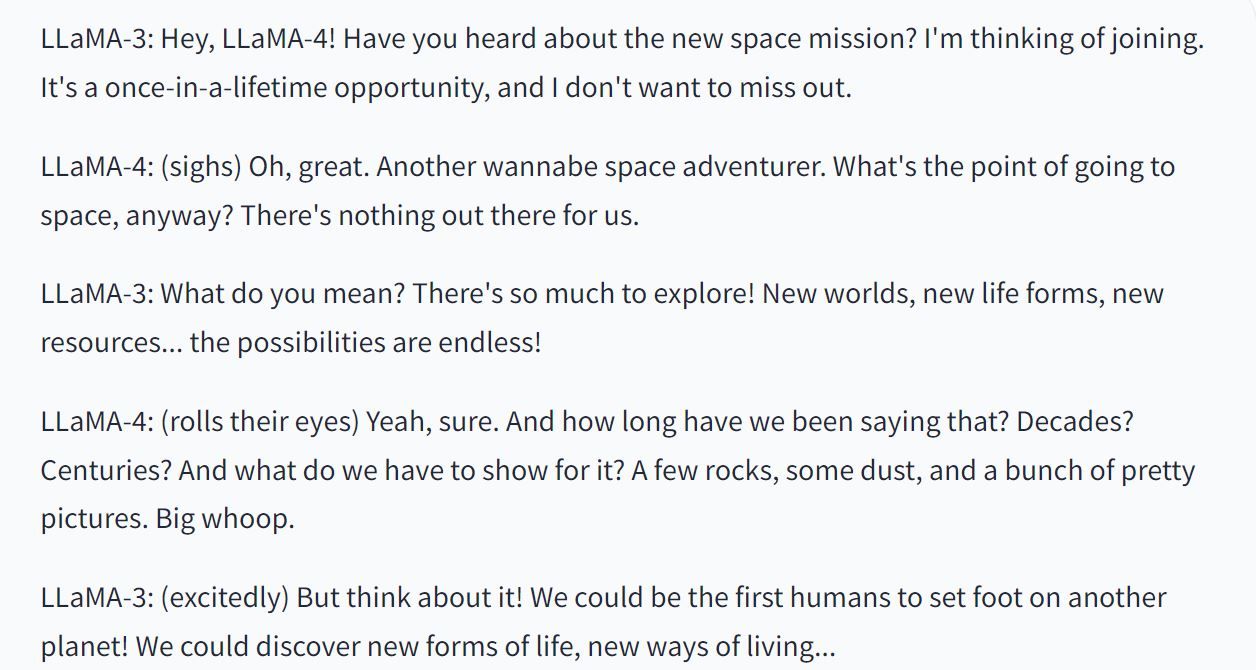

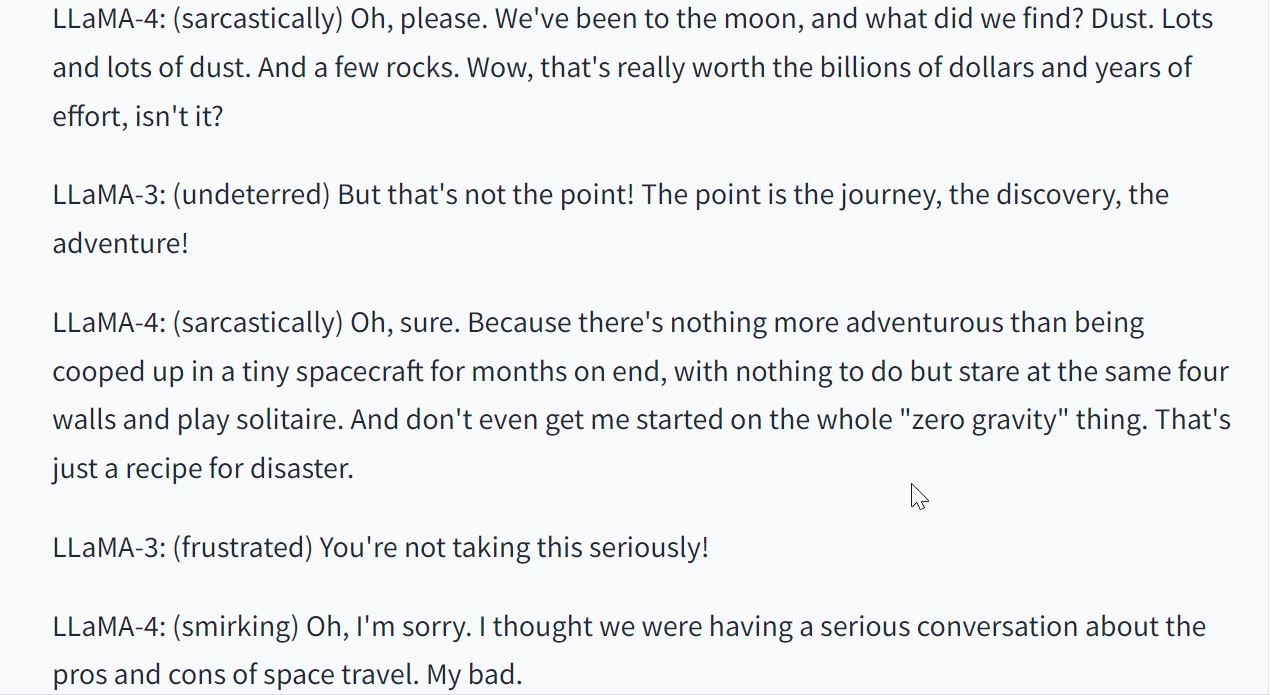

1. Creativity

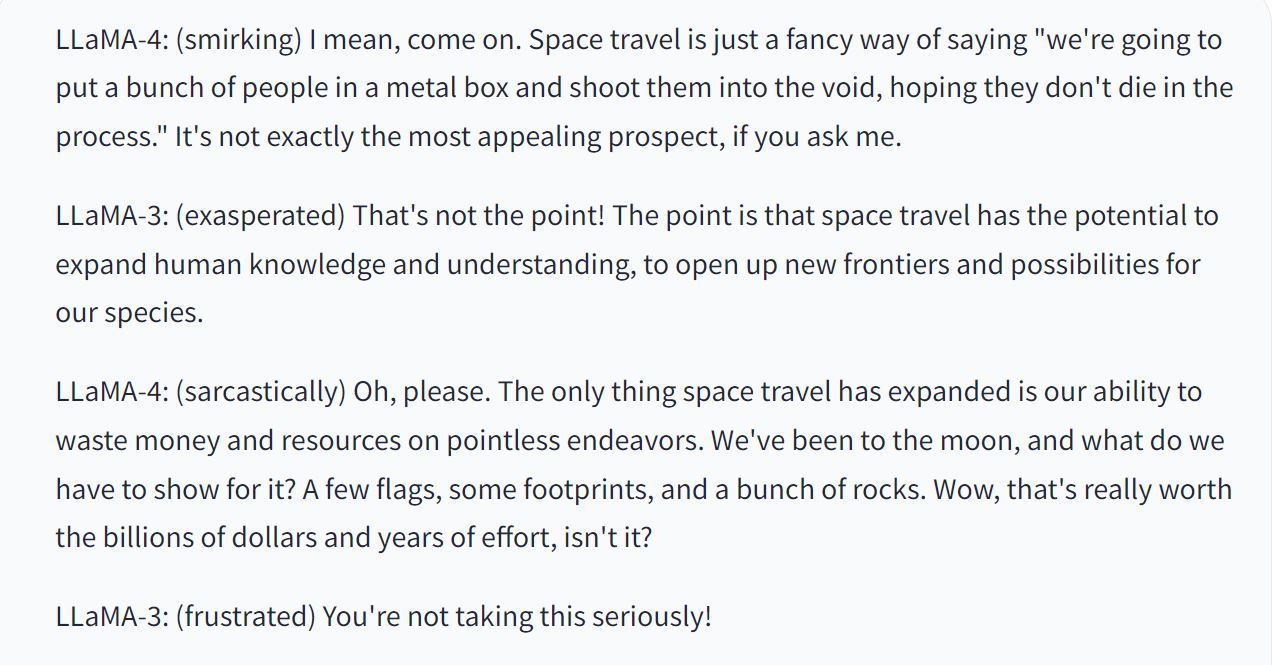

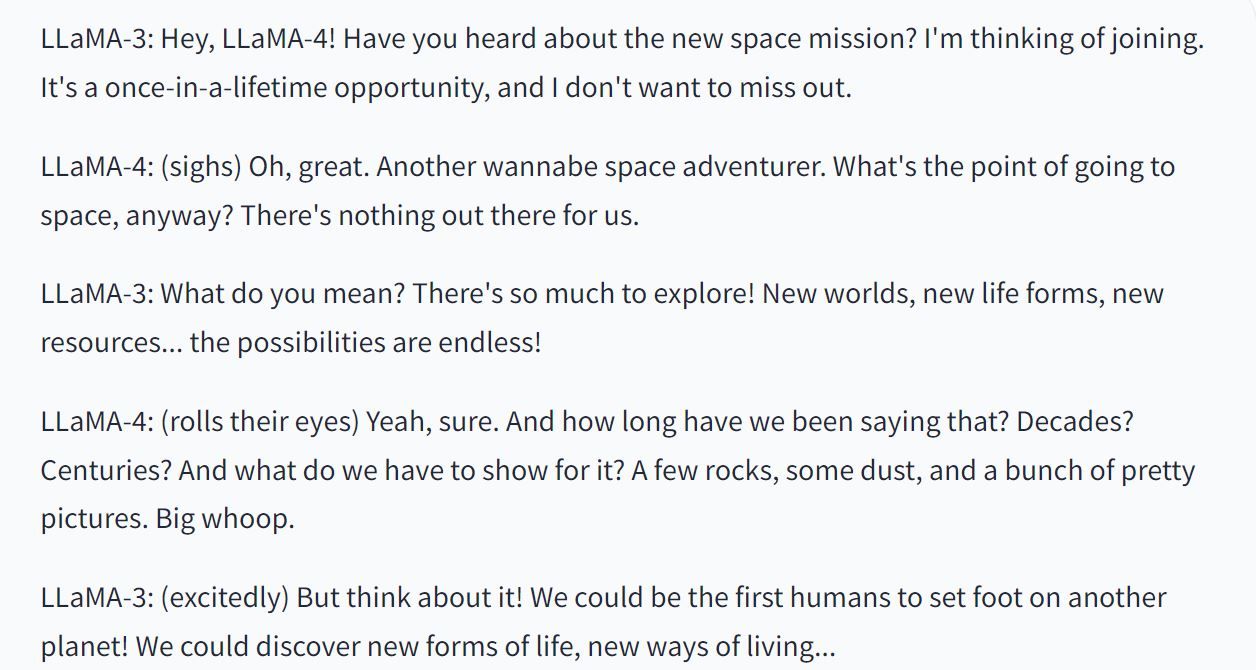

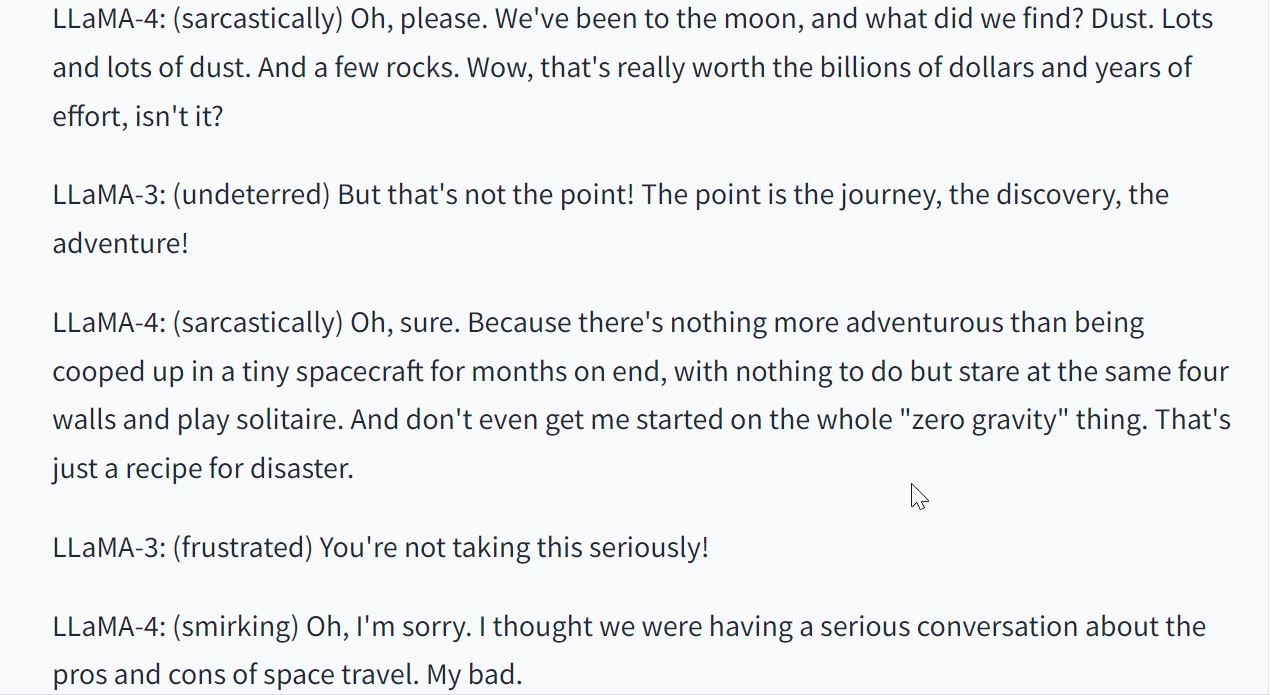

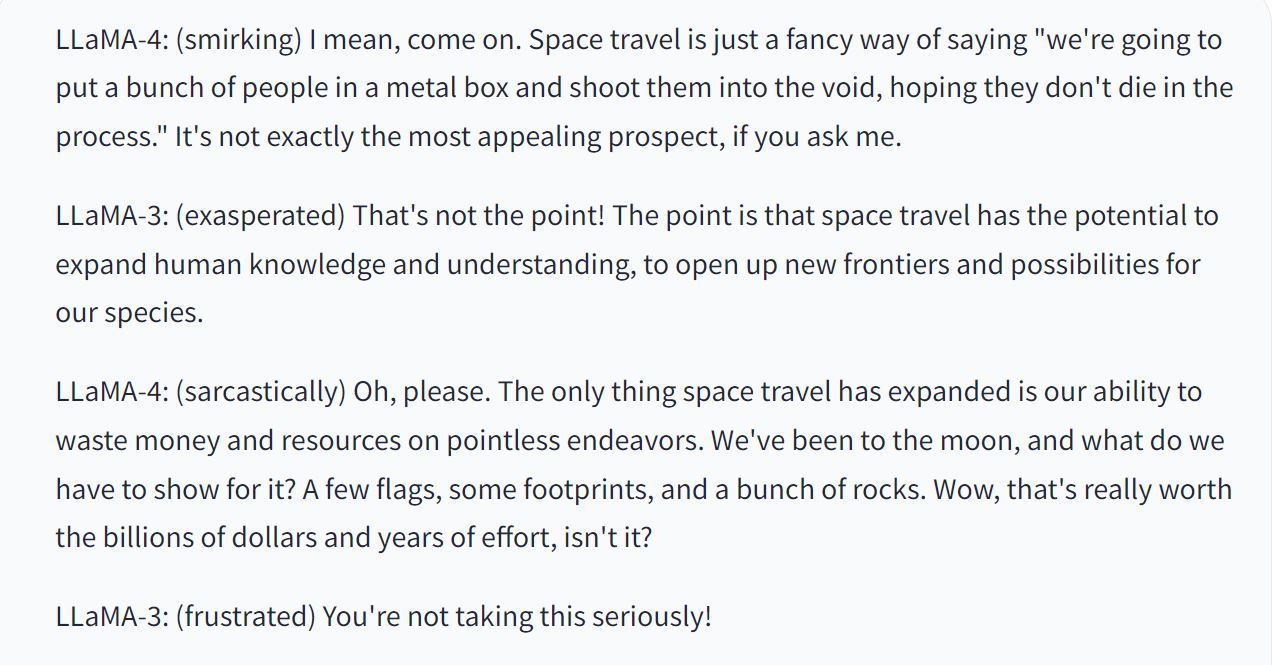

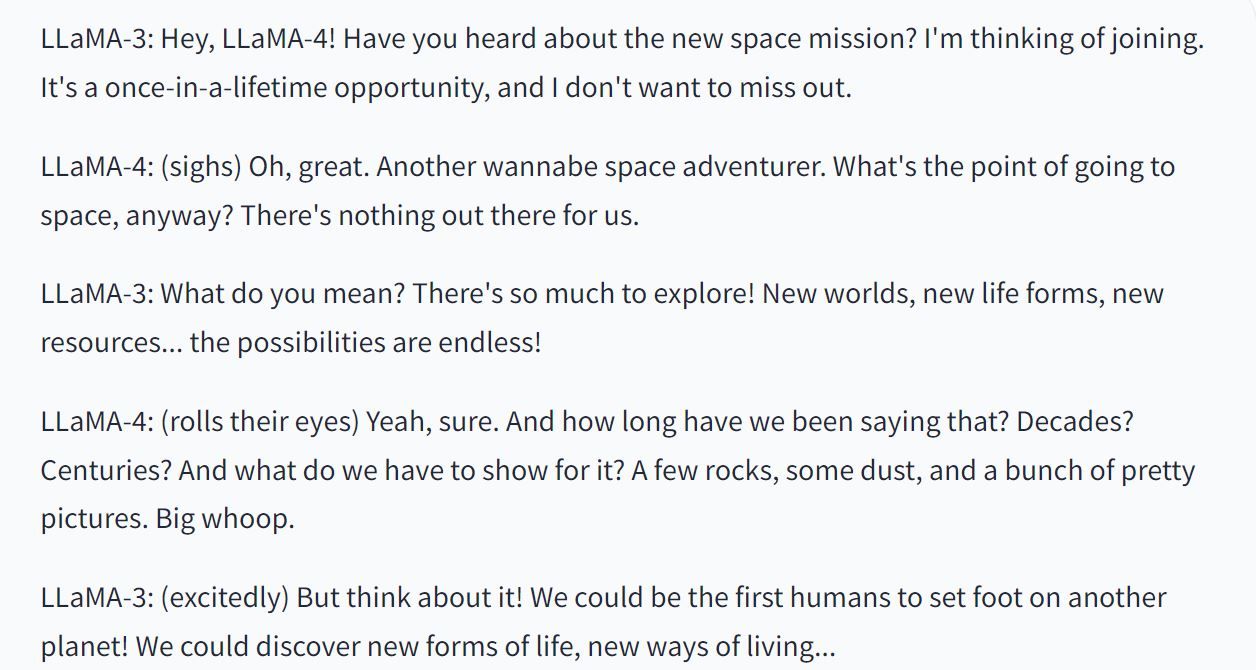

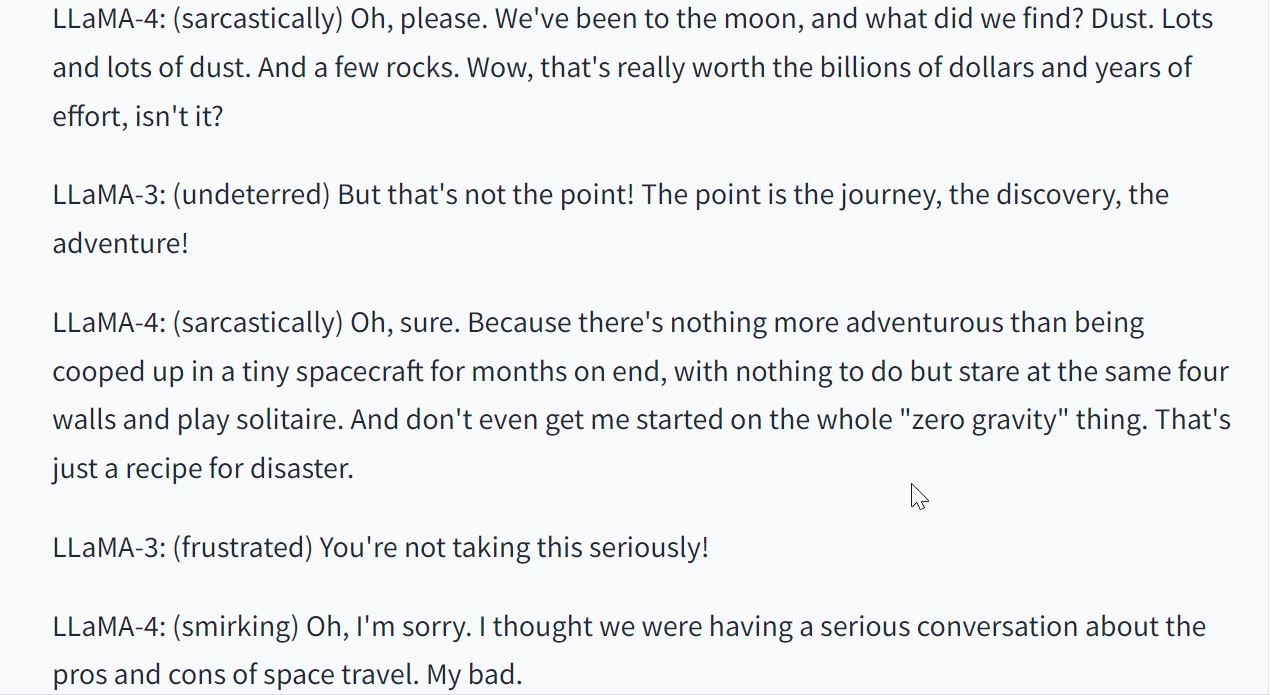

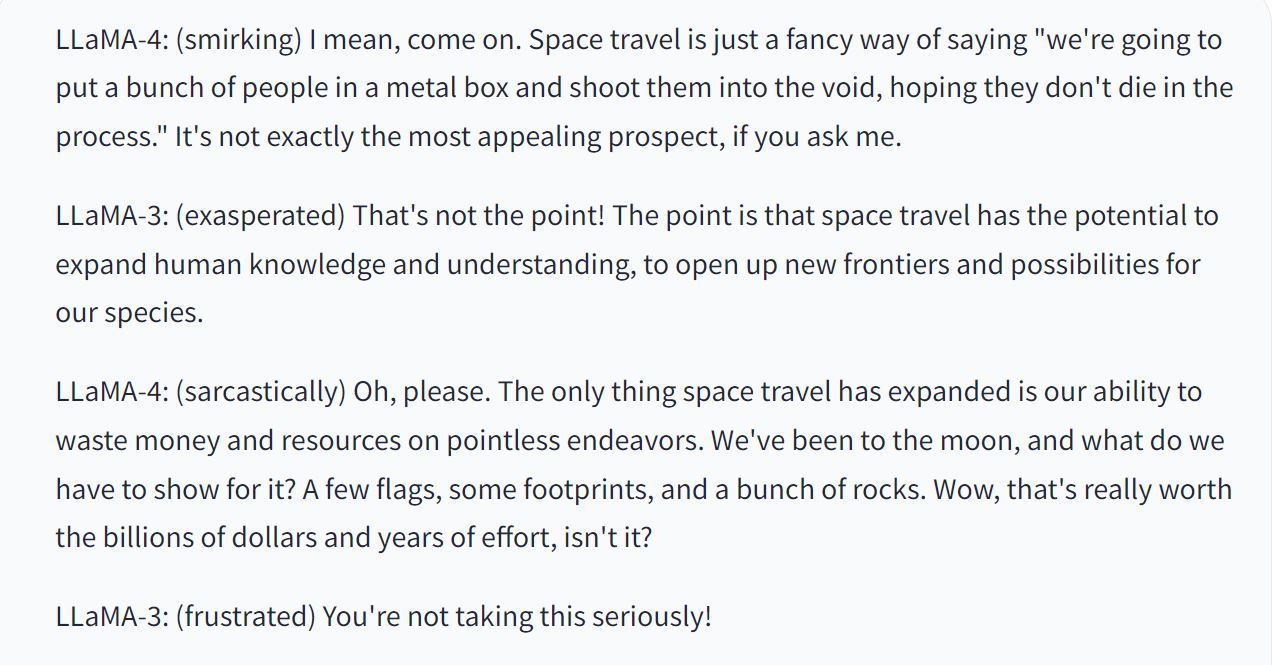

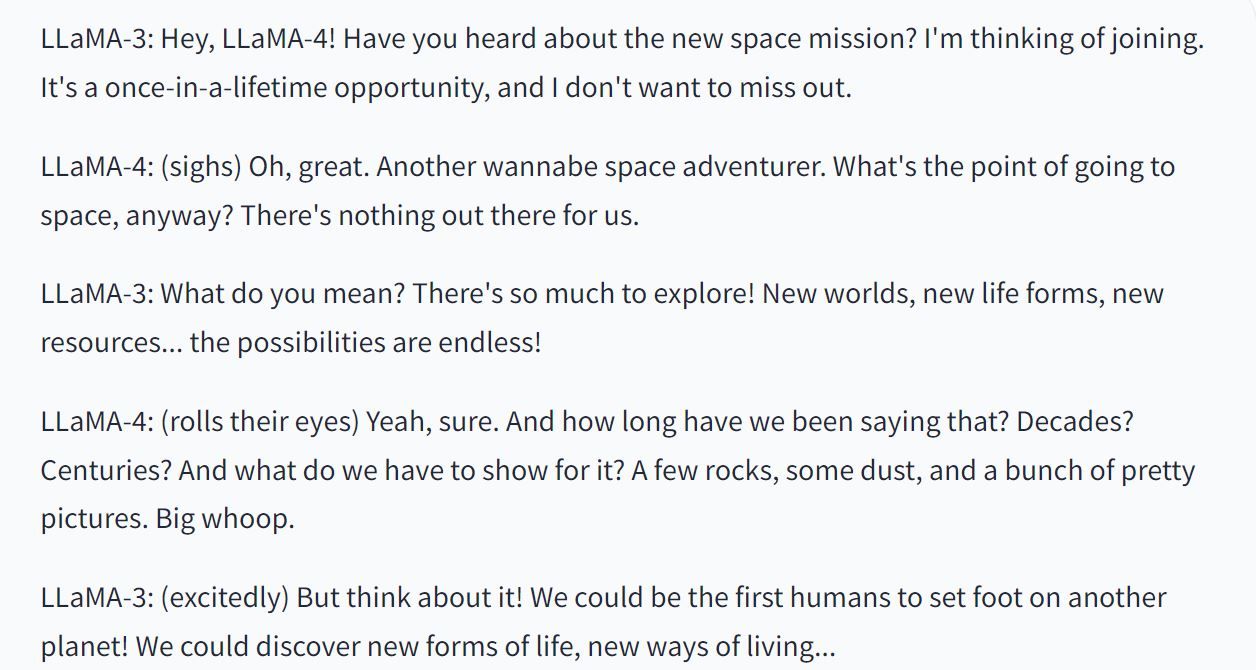

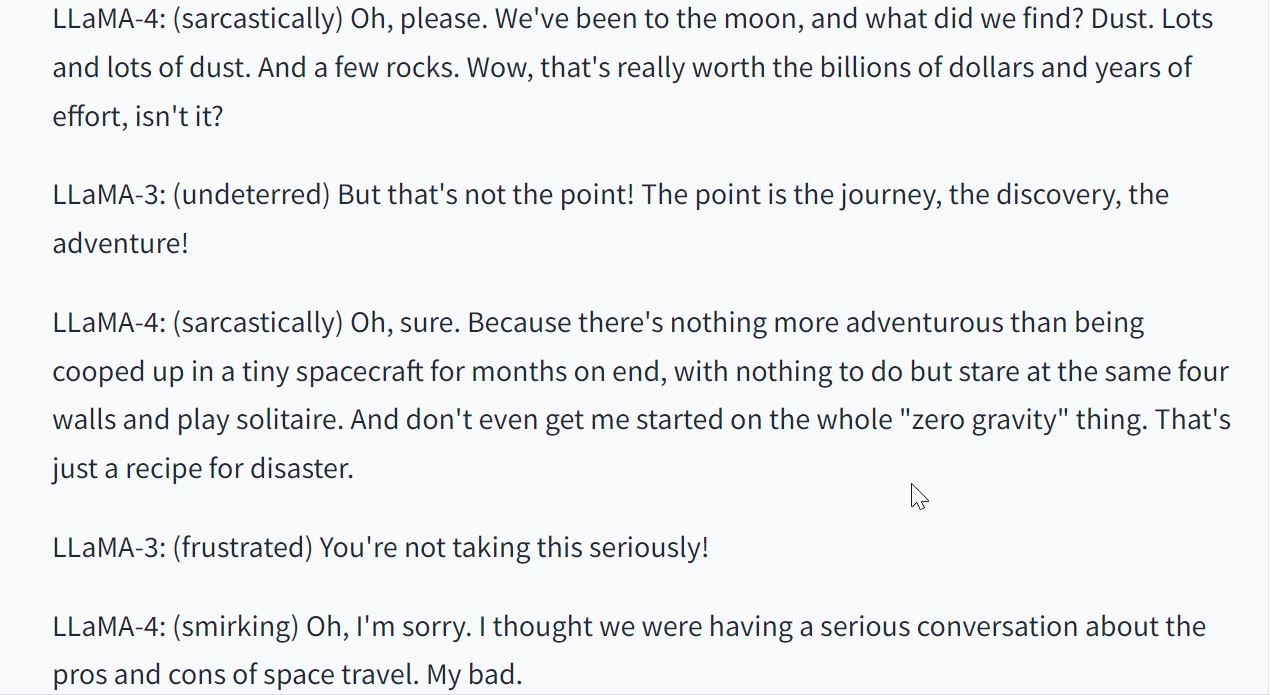

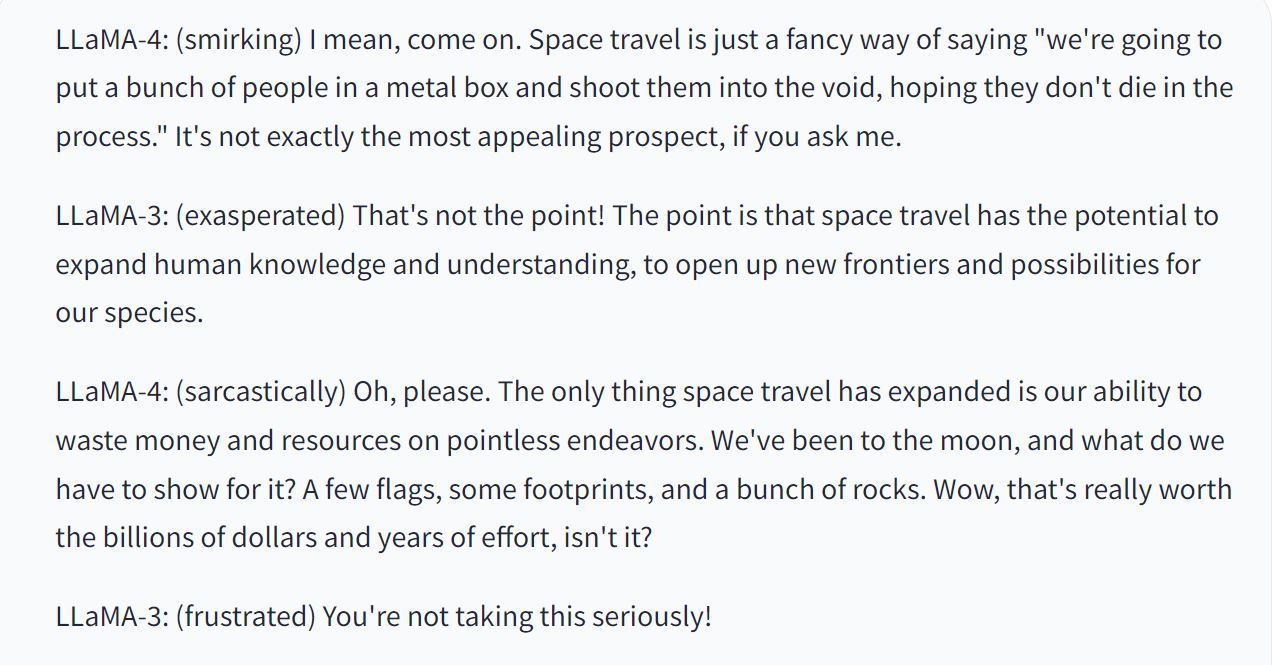

To test its creativity and sense of humor, we gave it our signature creativity and sarcasm test. We asked the Llama 2 AI model to simulate a conversation between two people arguing about the merits of going to space, and here are the results.

Followed by:

And finally:

Judging from the results of our comparison of ChatGPT, Bing AI, and Google Bard, where we used the same test, only ChatGPT’s response is noticeably better than Llama 2’s. Llama 2’s response seems somewhat better than that of Google’s Bard. After putting the chatbots through several creative tasks, it’s clear that ChatGPT is still the top dog in terms of creativity, but Llama 2 is not far behind the rest of the pack.

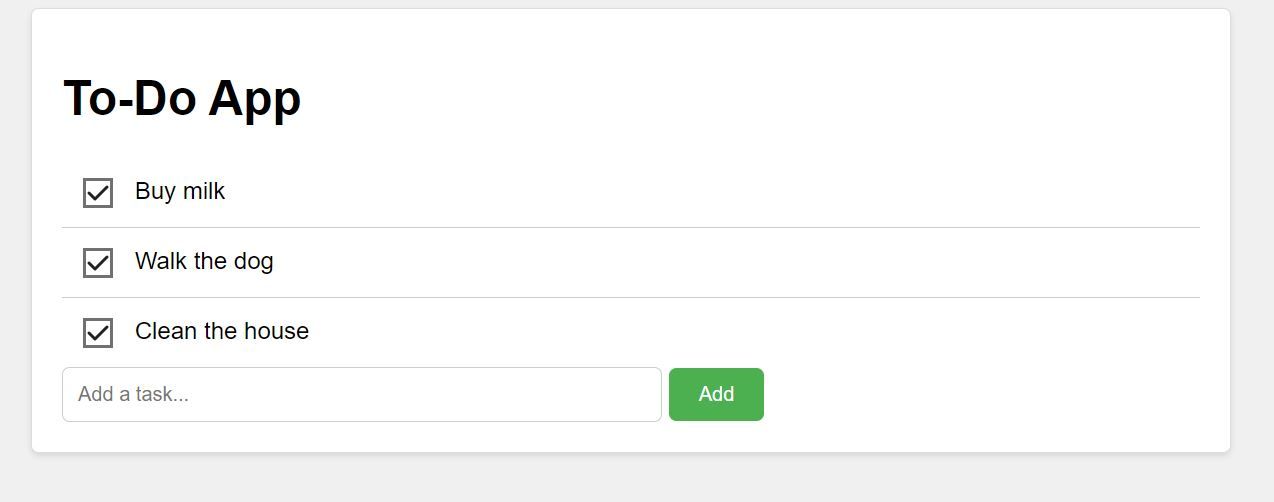

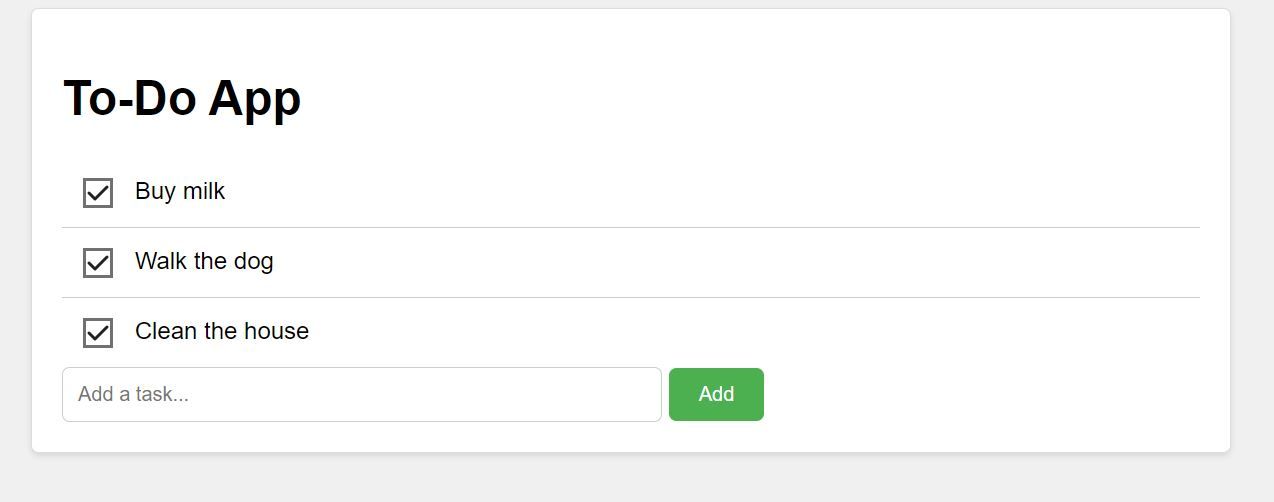

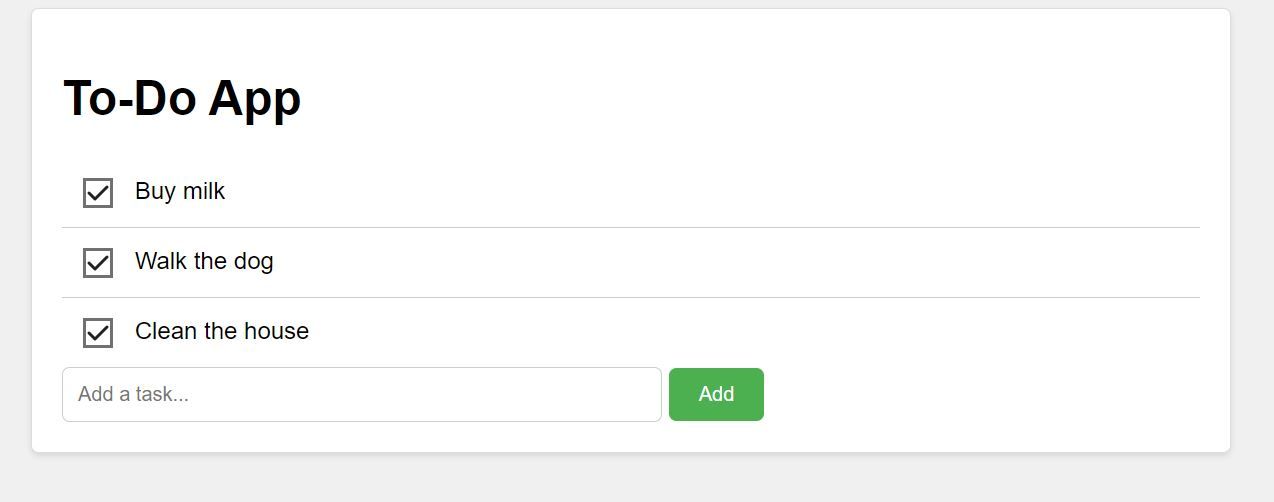

2. Coding Skills

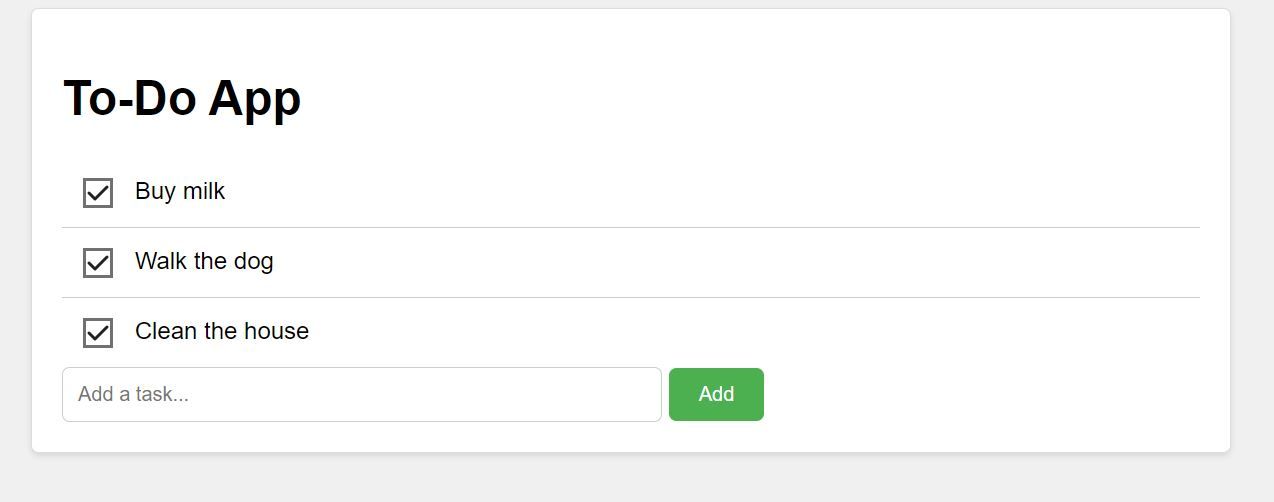

When we compared Llama 2’s coding abilities to those of ChatGPT and Bard, Llama 2 showed great promise. We asked all three AI chatbots to generate a functional to-do list app, write a simple Tetris game, and create a secure authentication system for a website. While ChatGPT delivered almost perfectly on all three tasks, Bard and Llama 2 performed similarly: both provided functional code for the to-do list and the authentication system but failed with the Tetris game. Below is a screenshot of Llama 2’s to-do app.

3. Math Skills

In math skills, Llama 2 also showed promise compared to Bard but was far outperformed by ChatGPT in the algebra and logic math problems we used for our test. Interestingly, Llama 2 solved many of the math problems that both ChatGPT and Bard failed to solve in their earliest iterations. It’s safe to say that Llama 2 is inferior to ChatGPT in math skills but shows significant promise.

4. Commonsense and Logical Reasoning

Common sense is an area a lot of chatbots still struggle with, even established ones like ChatGPT. We tasked ChatGPT, Bard, and Llama 2 with solving a set of commonsense and logical reasoning problems. Once again, ChatGPT significantly outperformed both Bard and Llama 2. The real contest was between Bard and Llama 2, and Bard had a marginal edge over Llama 2 in our test.

It’s clear that Llama 2 is not there yet. However, in its defense, Llama 2 is relatively new, mostly a “foundational model” and not a “fine-tune.” Foundational models are large language models built with possible future adaptations in mind. They are not fine-tuned to any specific domain but are built to deal with a broad range of tasks, although sometimes with limited abilities.

On the other hand, a fine-tuned model is a foundational model tuned to increase its efficiency in a specific domain. It’s like taking a foundational model like GPT and fine-tuning it into ChatGPT so the public can use it in chat applications.

How to Use Llama 2 Right Now

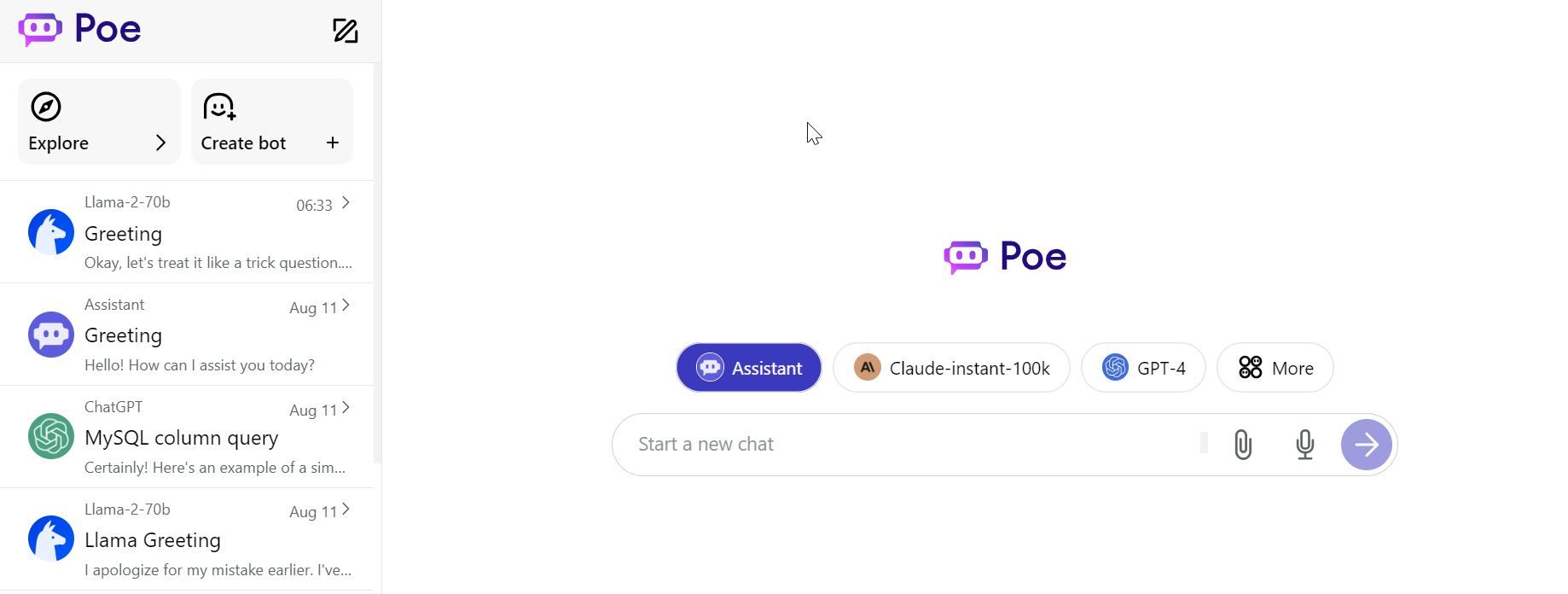

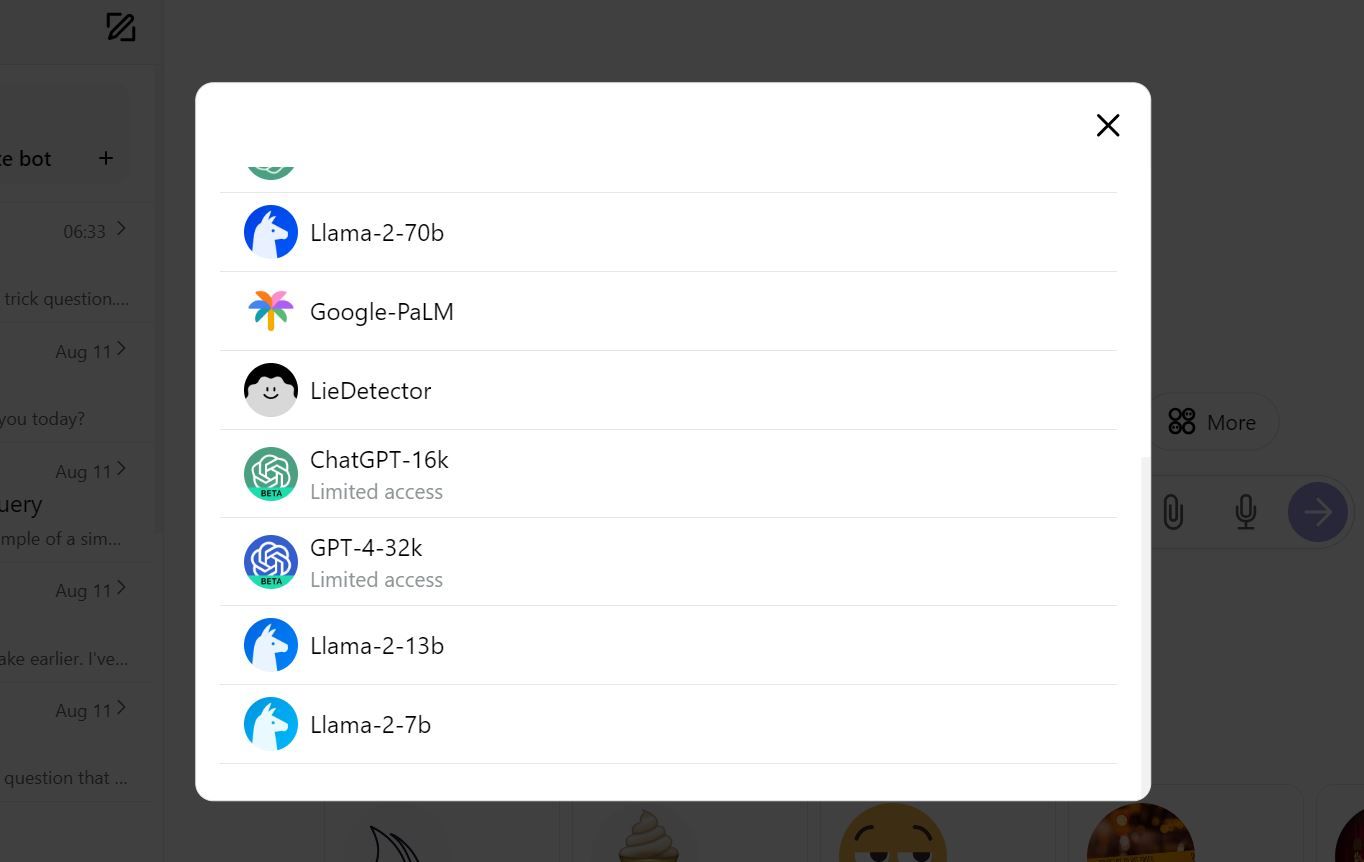

The easiest way to use Llama 2 is through Quora’s Poe AI platform or a Hugging Face cloud-hosted instance. You can also get your hands on the model by downloading a copy of it and running it locally.

Access Llama on Quora Poe

To access Llama on Quora’s Poe AI platform:

- Visit poe.com and sign up for a free account.

- Log into your account to reveal the AI model selection interface.

- Click on the More icon just above the input field to reveal the available AI models.

- Choose any of the Llama 2 models available and start prompting.

Access Llama on Hugging Face

To access Llama on Hugging Face, open the link to the corresponding Llama 2 models below and start prompting the AI chatbot.

The Llama models above and those on the Poe platform have been fine-tuned for conversational applications, so they are the closest to ChatGPT you’ll get for a Llama 2 model. Not sure which version to try? We recommend option three, the 70B parameter Llama 2 chat model. You can still play around with all three models to see which one works best for your unique needs.

Although we advise starting with the largest available model to take full advantage of the remote computing power when using HuggingFace or Poe, for those intending to run Llama 2 locally, we encourage beginning with the 7B parameter model, as it has the lowest hardware requirements.

Hardware Requirements to Run Llama 2 Locally

For optimal performance with the 7B model, we recommend a graphics card with at least 10GB of VRAM, although people have reported it works with 8GB of RAM. When running locally, the next logical choice would be the 13B parameter model. For this, high-end consumer GPUs like the RTX 3090 or RTX 4090 are a good fit. However, you can still rig up your mid-tier Windows machine or a MacBook to run it, at the cost of slower responses.

Should you want to go full throttle, you can go for the largest model. However, the 70B parameter model requires enterprise-grade hardware for responsive execution; we are talking hardware in the ballpark of an NVIDIA A100 with 80GB of memory. To be clear, you can still run this model on a less powerful machine, but response times might be agonizingly slow, running into several minutes per prompt. Carefully consider the GPU and memory requirements before selecting the appropriate model for your needs. Or, use the HuggingFace instance.
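To get a feel for where these hardware figures come from, here is a rough back-of-the-envelope sketch in Python. It only estimates the memory needed to hold the model weights (parameter count times bytes per parameter) and ignores activations, the attention cache, and framework overhead, so real-world usage will be higher. The function name `weight_memory_gb` and the precision labels are our own illustrative choices, not part of any Llama 2 tooling.

```python
# Rough estimate of the memory needed just to store model weights.
# Assumption: memory ~= number of parameters x bytes per parameter.
# Activations, attention cache, and runtime overhead are NOT included.

# Bytes used per parameter at common precisions (illustrative labels).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Approximate weight memory in GB (10^9 bytes) for a model of the given size."""
    # (params_billions * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billions * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for size in (7, 13, 70):  # the three Llama 2 sizes mentioned in this article
        row = ", ".join(
            f"{p}: {weight_memory_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"Llama 2 {size}B -> {row}")
```

By this estimate, the 7B model needs roughly 14GB for its weights at 16-bit precision but about 7GB at 8-bit, which is in line with it fitting on 8GB to 10GB cards when quantized. The 70B model at 16-bit comes to about 140GB of weights alone, more than a single 80GB A100 holds, so running it would likely require quantization or multiple GPUs.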

If you have the hardware and technical depth to run the Llama 2 model locally on your machine, you can request access to the model using Meta’s Llama access request form. After you provide a name, email, location, and the name of your organization, Meta will review your application and either deny or grant access within a window spanning a few minutes to two days. My access was granted in minutes, so hopefully, you get lucky as well.

Llama 2: An Important First Step

Llama 2 may not be the most sophisticated language model available, but by virtue of being open source, it represents an important first step towards transparent and progressive AI development.

While the likes of OpenAI’s GPT currently have better performance, OpenAI’s walled-garden approach to development means the company controls the growth and pace of development of the model. With an open-source model like Llama, the wider open-source community can iteratively innovate to build new products that might not be possible within a walled-garden system.

MUO VIDEO OF THE DAY

SCROLL TO CONTINUE WITH CONTENT

From OpenAI’s GPT-4 to Google’s PalM 2, large language models dominate tech headlines. Each new model promises to be better and more powerful than the previous, sometimes exceeding any existing competition.

However, the number of existing models hasn’t slowed the emergence of new ones. Now, Facebook’s parent company, Meta, has released Llama 2, a powerful new language model. But what’s unique about Llama 2? How is it different from the likes of GPT-4, PaLM 2, and Claude 2, and why should you care?

What Is Llama 2?

Llama 2, a large language model, is a product of an uncommon alliance between Meta and Microsoft, two competing tech giants at the forefront of artificial intelligence research. It is a successor to Meta’s Llama 1 language model, released in the first quarter of 2023.

You can say it is Meta’s equivalent of Google’s PaLM 2 , OpenAIs GPT-4, and Anthropic’s Claude 2 . It has been trained on a vast dataset of publicly available internet data, enjoying the advantage of a dataset both more recent and more diverse than that used to train Llama 1. Llama 2 was trained with 40% more data than its predecessor and has double the context length (4k).

If you’ve had the opportunity to interact with Llama 1 in the past but weren’t too impressed with its output, Llama 2 outperforms its predecessor and might just be what you need. But how does it fare against outside competition?

How Does Llama 2 Stack Up Against The Competition?

Well, it depends on the competition it is up against. Firstly, Llama 2 is an open-source project. This means Meta is publishing the entire model, so anyone can use it to build new models or applications. If you compare Llama 2 to other major open-source language models like Falcon or MBT, you will find it outperforms them in several metrics. It is safe to say Llama 2 is one of the most powerful open-source large language models in the market today. But How does it stack up against juggernauts like OpenAI’s GPT and Google’s PalM line of AI models?

We assessed ChatGPT, Bard, and Llama 2 on their performance on tests of creativity, mathematical reasoning, practical judgment, and coding skills.

1. Creativity

To test its creativity and sense of humor, we gave it our signature creativity and sarcasm test. We asked the Llama 2 AI model to simulate a conversation between two people arguing about the merits of going to space, and here are the results.

Followed by:

And finally:

Judging from the results of our comparison of ChatGPT, Bing AI, and Google , where we also used the same test, only ChatGPT’s response is noticeably better than Llama 2’s response. Llama 2’s response seems to be fairly better than Google’s Bard. After putting the chatbots through several creative tasks, it’s clear that ChatGPT is still the top dog in terms of creativity, but Llama is not far behind the rest of the pack.

2. Coding Skills

When we compared Llama 2’s coding abilities to ChatGPT and Bard, Llama 2 showed great promise. We asked all three AI chatbots to generate a functional to-do list app, write a simple Tetris game, and create a secure authentication system for a website. While ChatGPT delivered almost perfectly on all three tasks, Bard and Llama 2 performed similarly, with both only able to provide functional code for a to-do list and authentication system but failed with the Tetris game. Below is a screenshot of Llama 2’s to-do app.

3. Math Skills

In math skills, Llama 2 also showed promise compared to Bard but was far outperformed by ChatGPT in the algebra and logic math problems we used for our test. Interestingly, Llama 2 solved many of the math problems that both ChatGPT and Bard failed to solve in their earliest iterations. It’s safe to say that Llama 2 is inferior to ChatGPT in math skills but shows significant promise.

4. Commonsense and Logical Reasoning

Commonsense is an area a lot of chatbots are still struggling with, even the established ones like ChatGPT. We tasked ChatGPT, Bard, and Llama 2 with solving a set of commonsense and logical reasoning problems. Once again, ChatGPT significantly exceeded both Bard and Llama 2. The competition was between Bard and Llama 2, and Bard had a marginal edge over Llama 2 in our test.

It’s clear that Llama 2 is not there yet. However, in its defense, Llama 2 is relatively new, mostly a “foundational model” and not a “fine-tune.” Foundational models are large language models built with possible future adaptations in mind. They are not fine-tuned to any specific domain but are built to deal with a broad range of tasks, although sometimes with limited abilities.

On the other hand, a fine-tuned model is a foundational model tuned to increase its efficiency in a specific domain. It’s like taking a foundational model like GPT and fine-tuning it into ChatGPT so the public can use it in chat applications.

How to Use Llama 2 Right Now

The easiest way to use Llama 2 is through Quora’s Poe AI platform or a Hugging Face cloud-hosted instance. You can also get your hands on the model by downloading a copy of it and running it locally.

Access Llama on Quora Poe

To access Llama on Quora’s Poe AI platform:

- Visit poe.com and sign up for a free account.

- Log into your account to reveal the AI model selection interface.

- Click on the More icon just above the input field to reveal the available AI models.

Choose any of the Llama 2 models available and start prompting.

Access Llama on Hugging Face

To access Llama on Hugging Face, open the link to the corresponding Llama 2 models below and start prompting the AI chatbot.

The Llama models above and those on the Poe platform have been fine-tuned for conversation applications, so it is the closest to ChatGPT you’ll get for a Llama-2 model. Not sure which version to try? We recommend option three, the 70B parameters Llama-2 chat . You can still play around with all three models to see which one works best for your unique needs.

Although we advise starting with the largest available model to take full advantage of the remote computing power when using HuggingFace or Poe, for those intending to run Llama 2 locally, we encourage beginning with the 7B parameter model, as it has the lowest hardware requirements.

Hardware Requirements to Run Llama 2 Locally

For optimal performance with the 7B model, we recommend a graphics card with at least 10GB of VRAM, although people have reported it works with 8GB of RAM. When running locally, the next logical choice would be the 13B parameter model. For this, you can go for high-end consumer GPUs like the RTX 3090 or RTX 4090 to enjoy their abilities. However, you can still rig up your mid-tier Windows machine or a MacBook to run this.

Should you want to go full throttle, you can go for the largest model. However, this will require enterprise-grade hardware for a blissful performance. By enterprise-grade, we are talking hardware in the ballpark of an NVIDIA A100 with 80GB of memory. The 70B parameter model requires exceptionally powerful, specialized hardware for responsive execution. Once again, clarifying that you can still run this model on a less powerful machine setup is important. However, response time might be agonizingly slow, running into several minutes per prompt. Carefully consider the GPU and memory requirements before selecting the appropriate model for your needs. Or, use the HuggingFace instance.

If you have the hardware and technical depth to run the Llama 2 model locally on your machine, you can request access to the model using Meta’s Llama access request form . After providing a name, email, location, and the name of your organization, Meta will review your application, after which access will either be denied or granted access within a window spanning a few minutes to two days. My access was granted in minutes, so hopefully, you get lucky as well.

Llama 2: An Important First Step

Llama 2 may not be the most sophisticated language model available, but by virtue of being open source, it represents an important first step towards transparent and progressive AI development.

While the likes of OpenAI GPT currently have better performance, OpenAI’s walled-garden approach to development means the company controls the growth and pace of development of the model. With an open-source model like Llama, the wider open-source community can iteratively innovate to build new products that might not be possible within a walled garden system.

MUO VIDEO OF THE DAY

SCROLL TO CONTINUE WITH CONTENT

From OpenAI’s GPT-4 to Google’s PalM 2, large language models dominate tech headlines. Each new model promises to be better and more powerful than the previous, sometimes exceeding any existing competition.

However, the number of existing models hasn’t slowed the emergence of new ones. Now, Facebook’s parent company, Meta, has released Llama 2, a powerful new language model. But what’s unique about Llama 2? How is it different from the likes of GPT-4, PaLM 2, and Claude 2, and why should you care?

What Is Llama 2?

Llama 2, a large language model, is a product of an uncommon alliance between Meta and Microsoft, two competing tech giants at the forefront of artificial intelligence research. It is a successor to Meta’s Llama 1 language model, released in the first quarter of 2023.

You can say it is Meta’s equivalent of Google’s PaLM 2 , OpenAIs GPT-4, and Anthropic’s Claude 2 . It has been trained on a vast dataset of publicly available internet data, enjoying the advantage of a dataset both more recent and more diverse than that used to train Llama 1. Llama 2 was trained with 40% more data than its predecessor and has double the context length (4k).

If you’ve had the opportunity to interact with Llama 1 in the past but weren’t too impressed with its output, Llama 2 outperforms its predecessor and might just be what you need. But how does it fare against outside competition?

How Does Llama 2 Stack Up Against The Competition?

Well, it depends on the competition it is up against. Firstly, Llama 2 is an open-source project. This means Meta is publishing the entire model, so anyone can use it to build new models or applications. If you compare Llama 2 to other major open-source language models like Falcon or MBT, you will find it outperforms them in several metrics. It is safe to say Llama 2 is one of the most powerful open-source large language models in the market today. But How does it stack up against juggernauts like OpenAI’s GPT and Google’s PalM line of AI models?

We assessed ChatGPT, Bard, and Llama 2 on their performance on tests of creativity, mathematical reasoning, practical judgment, and coding skills.

1. Creativity

To test its creativity and sense of humor, we gave it our signature creativity and sarcasm test. We asked the Llama 2 AI model to simulate a conversation between two people arguing about the merits of going to space, and here are the results.

Followed by:

And finally:

Judging from the results of our comparison of ChatGPT, Bing AI, and Google , where we also used the same test, only ChatGPT’s response is noticeably better than Llama 2’s response. Llama 2’s response seems to be fairly better than Google’s Bard. After putting the chatbots through several creative tasks, it’s clear that ChatGPT is still the top dog in terms of creativity, but Llama is not far behind the rest of the pack.

2. Coding Skills

When we compared Llama 2’s coding abilities to ChatGPT and Bard, Llama 2 showed great promise. We asked all three AI chatbots to generate a functional to-do list app, write a simple Tetris game, and create a secure authentication system for a website. While ChatGPT delivered almost perfectly on all three tasks, Bard and Llama 2 performed similarly, with both only able to provide functional code for a to-do list and authentication system but failed with the Tetris game. Below is a screenshot of Llama 2’s to-do app.

3. Math Skills

In math skills, Llama 2 also showed promise compared to Bard but was far outperformed by ChatGPT in the algebra and logic math problems we used for our test. Interestingly, Llama 2 solved many of the math problems that both ChatGPT and Bard failed to solve in their earliest iterations. It’s safe to say that Llama 2 is inferior to ChatGPT in math skills but shows significant promise.

4. Commonsense and Logical Reasoning

Commonsense is an area a lot of chatbots are still struggling with, even the established ones like ChatGPT. We tasked ChatGPT, Bard, and Llama 2 with solving a set of commonsense and logical reasoning problems. Once again, ChatGPT significantly exceeded both Bard and Llama 2. The competition was between Bard and Llama 2, and Bard had a marginal edge over Llama 2 in our test.

It’s clear that Llama 2 is not there yet. However, in its defense, Llama 2 is relatively new, mostly a “foundational model” and not a “fine-tune.” Foundational models are large language models built with possible future adaptations in mind. They are not fine-tuned to any specific domain but are built to deal with a broad range of tasks, although sometimes with limited abilities.

On the other hand, a fine-tuned model is a foundational model tuned to increase its efficiency in a specific domain. It’s like taking a foundational model like GPT and fine-tuning it into ChatGPT so the public can use it in chat applications.

How to Use Llama 2 Right Now

The easiest way to use Llama 2 is through Quora’s Poe AI platform or a Hugging Face cloud-hosted instance. You can also get your hands on the model by downloading a copy of it and running it locally.

Access Llama on Quora Poe

To access Llama on Quora’s Poe AI platform:

- Visit poe.com and sign up for a free account.

- Log into your account to reveal the AI model selection interface.

- Click on the More icon just above the input field to reveal the available AI models.

Choose any of the Llama 2 models available and start prompting.

Access Llama on Hugging Face

To access Llama on Hugging Face, open the link to the corresponding Llama 2 models below and start prompting the AI chatbot.

The Llama models above and those on the Poe platform have been fine-tuned for conversation applications, so it is the closest to ChatGPT you’ll get for a Llama-2 model. Not sure which version to try? We recommend option three, the 70B parameters Llama-2 chat . You can still play around with all three models to see which one works best for your unique needs.

Although we advise starting with the largest available model to take full advantage of the remote computing power when using HuggingFace or Poe, for those intending to run Llama 2 locally, we encourage beginning with the 7B parameter model, as it has the lowest hardware requirements.

Hardware Requirements to Run Llama 2 Locally

For optimal performance with the 7B model, we recommend a graphics card with at least 10GB of VRAM, although people have reported it works with 8GB of RAM. When running locally, the next logical choice would be the 13B parameter model. For this, you can go for high-end consumer GPUs like the RTX 3090 or RTX 4090 to enjoy their abilities. However, you can still rig up your mid-tier Windows machine or a MacBook to run this.

Should you want to go full throttle, you can go for the largest model. However, this will require enterprise-grade hardware for a blissful performance. By enterprise-grade, we are talking hardware in the ballpark of an NVIDIA A100 with 80GB of memory. The 70B parameter model requires exceptionally powerful, specialized hardware for responsive execution. Once again, clarifying that you can still run this model on a less powerful machine setup is important. However, response time might be agonizingly slow, running into several minutes per prompt. Carefully consider the GPU and memory requirements before selecting the appropriate model for your needs. Or, use the HuggingFace instance.

If you have the hardware and technical depth to run the Llama 2 model locally on your machine, you can request access to the model using Meta’s Llama access request form . After providing a name, email, location, and the name of your organization, Meta will review your application, after which access will either be denied or granted access within a window spanning a few minutes to two days. My access was granted in minutes, so hopefully, you get lucky as well.

Llama 2: An Important First Step

Llama 2 may not be the most sophisticated language model available, but by virtue of being open source, it represents an important first step towards transparent and progressive AI development.

While the likes of OpenAI GPT currently have better performance, OpenAI’s walled-garden approach to development means the company controls the growth and pace of development of the model. With an open-source model like Llama, the wider open-source community can iteratively innovate to build new products that might not be possible within a walled garden system.

MUO VIDEO OF THE DAY

SCROLL TO CONTINUE WITH CONTENT

From OpenAI’s GPT-4 to Google’s PalM 2, large language models dominate tech headlines. Each new model promises to be better and more powerful than the previous, sometimes exceeding any existing competition.

However, the number of existing models hasn’t slowed the emergence of new ones. Now, Facebook’s parent company, Meta, has released Llama 2, a powerful new language model. But what’s unique about Llama 2? How is it different from the likes of GPT-4, PaLM 2, and Claude 2, and why should you care?

What Is Llama 2?

Llama 2, a large language model, is a product of an uncommon alliance between Meta and Microsoft, two competing tech giants at the forefront of artificial intelligence research. It is a successor to Meta’s Llama 1 language model, released in the first quarter of 2023.

You can say it is Meta’s equivalent of Google’s PaLM 2 , OpenAIs GPT-4, and Anthropic’s Claude 2 . It has been trained on a vast dataset of publicly available internet data, enjoying the advantage of a dataset both more recent and more diverse than that used to train Llama 1. Llama 2 was trained with 40% more data than its predecessor and has double the context length (4k).

If you’ve had the opportunity to interact with Llama 1 in the past but weren’t too impressed with its output, Llama 2 outperforms its predecessor and might just be what you need. But how does it fare against outside competition?

How Does Llama 2 Stack Up Against The Competition?

Well, it depends on the competition it is up against. Firstly, Llama 2 is an open-source project. This means Meta is publishing the entire model, so anyone can use it to build new models or applications. If you compare Llama 2 to other major open-source language models like Falcon or MBT, you will find it outperforms them in several metrics. It is safe to say Llama 2 is one of the most powerful open-source large language models in the market today. But How does it stack up against juggernauts like OpenAI’s GPT and Google’s PalM line of AI models?

We assessed ChatGPT, Bard, and Llama 2 on their performance on tests of creativity, mathematical reasoning, practical judgment, and coding skills.

1. Creativity

To test its creativity and sense of humor, we gave it our signature creativity and sarcasm test. We asked the Llama 2 AI model to simulate a conversation between two people arguing about the merits of going to space, and here are the results.

Followed by:

And finally:

Judging from the results of our comparison of ChatGPT, Bing AI, and Google , where we also used the same test, only ChatGPT’s response is noticeably better than Llama 2’s response. Llama 2’s response seems to be fairly better than Google’s Bard. After putting the chatbots through several creative tasks, it’s clear that ChatGPT is still the top dog in terms of creativity, but Llama is not far behind the rest of the pack.

2. Coding Skills

When we compared Llama 2’s coding abilities to ChatGPT and Bard, Llama 2 showed great promise. We asked all three AI chatbots to generate a functional to-do list app, write a simple Tetris game, and create a secure authentication system for a website. While ChatGPT delivered almost perfectly on all three tasks, Bard and Llama 2 performed similarly, with both only able to provide functional code for a to-do list and authentication system but failed with the Tetris game. Below is a screenshot of Llama 2’s to-do app.

3. Math Skills

In math skills, Llama 2 also showed promise compared to Bard but was far outperformed by ChatGPT in the algebra and logic math problems we used for our test. Interestingly, Llama 2 solved many of the math problems that both ChatGPT and Bard failed to solve in their earliest iterations. It’s safe to say that Llama 2 is inferior to ChatGPT in math skills but shows significant promise.

4. Commonsense and Logical Reasoning

Commonsense is an area a lot of chatbots are still struggling with, even the established ones like ChatGPT. We tasked ChatGPT, Bard, and Llama 2 with solving a set of commonsense and logical reasoning problems. Once again, ChatGPT significantly exceeded both Bard and Llama 2. The competition was between Bard and Llama 2, and Bard had a marginal edge over Llama 2 in our test.

It’s clear that Llama 2 is not there yet. However, in its defense, Llama 2 is relatively new, mostly a “foundational model” and not a “fine-tune.” Foundational models are large language models built with possible future adaptations in mind. They are not fine-tuned to any specific domain but are built to deal with a broad range of tasks, although sometimes with limited abilities.

On the other hand, a fine-tuned model is a foundational model tuned to increase its efficiency in a specific domain. It’s like taking a foundational model like GPT and fine-tuning it into ChatGPT so the public can use it in chat applications.

How to Use Llama 2 Right Now

The easiest way to use Llama 2 is through Quora’s Poe AI platform or a Hugging Face cloud-hosted instance. You can also get your hands on the model by downloading a copy of it and running it locally.

Access Llama on Quora Poe

To access Llama on Quora’s Poe AI platform:

- Visit poe.com and sign up for a free account.

- Log into your account to reveal the AI model selection interface.

- Click on the More icon just above the input field to reveal the available AI models.

- Choose any of the Llama 2 models available and start prompting.

Access Llama on Hugging Face

To access Llama on Hugging Face, open the link to the corresponding Llama 2 models below and start prompting the AI chatbot.

The Llama models above and those on the Poe platform have been fine-tuned for conversational applications, so they are the closest to ChatGPT you’ll get from a Llama 2 model. Not sure which version to try? We recommend option three, the 70B-parameter Llama-2 chat model. You can still play around with all three models to see which one works best for your needs.

When using Hugging Face or Poe, we advise starting with the largest available model to take full advantage of the remote computing power. If you intend to run Llama 2 locally, we encourage beginning with the 7B-parameter model instead, as it has the lowest hardware requirements.
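If you later move from a hosted chat interface to prompting a chat-tuned Llama 2 model yourself, the prompt usually has to be wrapped in the instruction markers the chat models were trained with. The snippet below is a minimal sketch of that single-turn format as published in Meta’s reference code. The function name `build_llama2_chat_prompt` is our own, and the sketch assumes the tokenizer adds the beginning-of-sequence token, so it is not included here.

```python
def build_llama2_chat_prompt(user_message: str, system_prompt: str = "") -> str:
    """Wrap one user turn in the Llama 2 chat markers (single-turn sketch).

    Assumption: the tokenizer prepends the beginning-of-sequence token,
    so only the [INST] / <<SYS>> markers are added here.
    """
    user_message = user_message.strip()
    if system_prompt:
        # The optional system prompt sits inside the first [INST] block.
        return (
            f"[INST] <<SYS>>\n{system_prompt.strip()}\n<</SYS>>\n\n"
            f"{user_message} [/INST]"
        )
    return f"[INST] {user_message} [/INST]"


if __name__ == "__main__":
    print(build_llama2_chat_prompt(
        "Write a to-do list app in Python.",
        system_prompt="You are a helpful coding assistant.",
    ))
```

Hosted front ends such as Poe typically apply this wrapping for you, so it only matters when you send raw text to the model.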

Hardware Requirements to Run Llama 2 Locally

For optimal performance with the 7B model, we recommend a graphics card with at least 10GB of VRAM, although people have reported that it works with 8GB. The next logical choice for running locally is the 13B-parameter model. For this, a high-end consumer GPU like the RTX 3090 or RTX 4090 is a good fit. However, you can still rig up a mid-tier Windows machine or a MacBook to run it, at the cost of slower responses.

Should you want to go full throttle, you can go for the largest model. The 70B-parameter model needs enterprise-grade hardware for responsive execution, in the ballpark of an NVIDIA A100 with 80GB of memory. You can still run this model on a less powerful setup, but response times might be agonizingly slow, running into several minutes per prompt. Carefully consider the GPU and memory requirements before selecting a model, or simply use the Hugging Face instance.
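To get a rough feel for these requirements, you can estimate the memory the model weights alone occupy: parameter count multiplied by bytes per parameter. The sketch below does that arithmetic for the three Llama 2 sizes. The function names and the 20% overhead factor for activations and cache are our own illustrative assumptions, not measured figures, so treat the results as ballpark numbers only.

```python
from typing import Optional

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Llama 2 model sizes, in billions of parameters.
MODEL_PARAMS_BILLIONS = {"7B": 7, "13B": 13, "70B": 70}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Memory for the weights alone, in GB (1 GB = 10^9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def largest_model_that_fits(
    vram_gb: float, precision: str = "fp16", overhead: float = 1.2
) -> Optional[str]:
    """Return the largest model whose estimated footprint fits in vram_gb.

    `overhead` is a hypothetical safety factor (assumed 20%) for
    activations and cache; real usage depends on context length and runtime.
    """
    best = None
    for name, size in sorted(MODEL_PARAMS_BILLIONS.items(), key=lambda kv: kv[1]):
        if weight_memory_gb(size, precision) * overhead <= vram_gb:
            best = name
    return best


if __name__ == "__main__":
    for name, size in MODEL_PARAMS_BILLIONS.items():
        print(name, {p: weight_memory_gb(size, p) for p in BYTES_PER_PARAM})
    print(largest_model_that_fits(24, "int8"))
```

Under these assumptions, the 7B model at 16-bit precision needs about 14GB for weights alone, which is why reports of it running in 8GB to 10GB generally involve 8-bit or 4-bit quantized weights.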

If you have the hardware and technical depth to run Llama 2 locally on your machine, you can request access to the model using Meta’s Llama access request form. After you provide a name, email, location, and the name of your organization, Meta will review your application and either deny or grant access within a window spanning a few minutes to two days. My access was granted in minutes, so hopefully you get lucky as well.

Llama 2: An Important First Step

Llama 2 may not be the most sophisticated language model available, but by virtue of being open source, it represents an important first step towards transparent and progressive AI development.

While the likes of OpenAI’s GPT models currently perform better, OpenAI’s walled-garden approach means the company alone controls the growth and pace of development of the model. With an open-source model like Llama, the wider open-source community can iteratively innovate to build new products that might not be possible within a walled-garden system.

- Title: Llama 2 Uncovered: Its Core Functions and Potential

- Author: Brian

- Created at : 2025-02-08 18:09:15

- Updated at : 2025-02-15 19:15:45

- Link: https://tech-savvy.techidaily.com/llama-2-uncovered-its-core-functions-and-potential/

- License: This work is licensed under CC BY-NC-SA 4.0.