Mandates in Machine Learning Regulations

Key Takeaways

- AI needs stricter monitoring, as cybersecurity vulnerabilities and privacy concerns continue to emerge.

- The government, tech companies, and end users all have a role to play in regulating AI, but each approach has its limitations.

- Media outlets, non-profit organizations, tech industry associations, academic institutions, and law enforcement agencies also contribute to the regulation of AI.

The general public has differing views on AI: some believe machines will replace human workers altogether, while others claim AI is a fad. One thing most people agree on, however, is that AI needs stricter monitoring.

Despite the importance of AI regulation, it has taken a back seat to training. Developers are so focused on building the next big AI model that they’re trading cybersecurity for rapid advancement. The question isn’t whether AI needs regulation; it’s which governing body with adequate funding, human resources, and technological capacity will take the initiative.

So, who should regulate AI?

Government Bodies

Various people, from consumers to tech leaders, hope the government will regulate AI. Publicly funded institutions have the resources to do so. Even Elon Musk and Sam Altman, two of the main drivers of the AI race, believe that some privacy concerns surrounding AI are too dangerous for governing bodies to overlook.

The government should focus on protecting its constituents’ privacy and civil liberties if it takes over AI regulation. Cybercriminals keep finding ways to exploit AI systems in their schemes. Individuals not well-versed in AI might easily get fooled by synthesized voices, deepfake videos, and bot-operated online profiles.

However, one major issue with the government regulating AI is that it might inadvertently stifle innovation. AI is a complex, evolving technology. Unless the officials overseeing deployment, development, and training guidelines understand how AI works, they might make premature, inefficient judgments.

AI Developers, Tech Companies, and Laboratories

Considering the potential roadblocks that might arise from the government monitoring AI, many would rather have tech companies spearhead regulation. They believe developers should be responsible for the tech they release. Self-regulation enables them to drive innovation and focus on advancing these systems efficiently.

Moreover, their in-depth understanding of AI will help them make fair, informed guidelines prioritizing user safety without compromising functionality. As with any technology, industry expertise streamlines monitoring. Assigning untrained officials to regulate technologies they barely understand might present more problems than benefits.

Take the 2018 U.S. Senate hearing about Facebook’s data privacy practices as an example. A report by The Washington Post shows that many lawmakers were confused by Facebook’s basic functions. So unless the U.S. Senate creates a dedicated department of tech specialists, it is likely not qualified to regulate a system as advanced and fast-changing as AI.

However, the main issue with tech companies regulating themselves is that shady corporations might abuse their power. With no intervening third party, they’re basically free to do whatever they want.

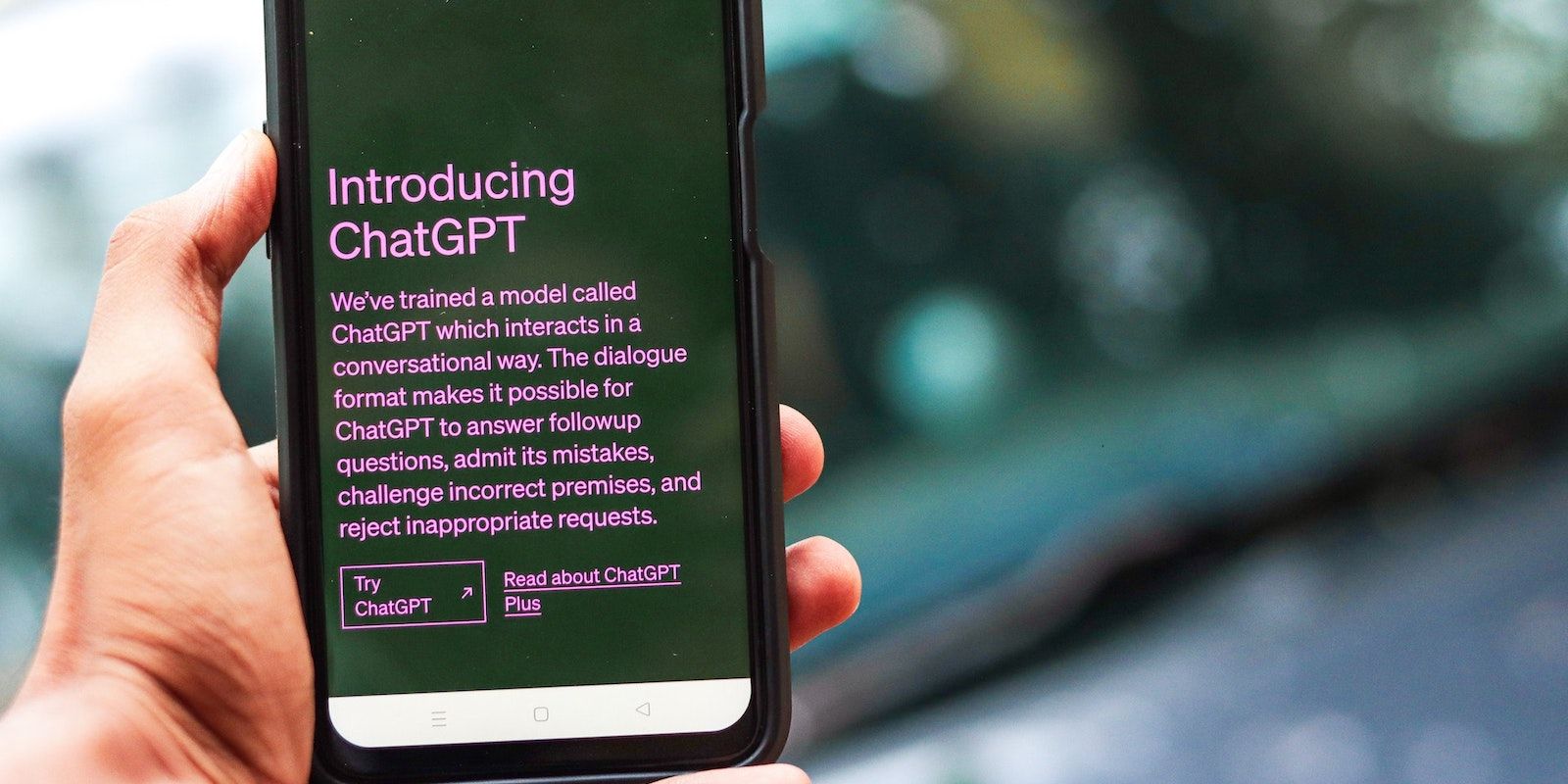

End Users

Some fear that government and private entities will abuse AI systems. They’re unsure about granting a handful of governing bodies total control over such powerful technologies, especially since AI is still evolving. They might eventually fight over authority rather than work toward efficient regulation.

To mitigate these risks, skeptics believe that end users deserve free rein to use AI models how they want. They say government bodies should only interfere when AI users break the law. It’s an ambitious goal, but it could technically be achieved if open-source AI developers dominated the market.

That said, this setup puts non-tech-savvy individuals at a disadvantage. Users are responsible for setting the restrictions within their systems—unfortunately, not everyone has the resources to do so.

It’s also short-sighted to remove proprietary models from the market. The proliferation of open-source AI models has several positive and negative impacts; for some, the cons outweigh the pros.

Other Entities That Play a Role in the Regulation of AI

Although major entities will spearhead the regulation of AI, other bodies also play significant roles:

1. Media Outlets

Media outlets play a critical role in shaping the public’s perception of AI. They report industry developments, share new tools, bring awareness to the harmful uses of AI, and interview experts about relevant concerns.

Most of what end users know about AI comes from media outlets. Publishing false data, whether on purpose or not, can cause lasting damage. Don’t underestimate how fast misinformation spreads.

2. Non-Governmental Organizations

Several non-profit organizations (NPOs) are centered around protecting AI users’ privacy and civil liberties. They educate the public through free resources, advocate for new policies, cooperate with government officials, and voice overlooked concerns.

The only issue with NPOs is that they’re usually short on resources. Since they aren’t connected to the government, they rely on private solicitations and donations for day-to-day operations. Sadly, only a few organizations get adequate funding.

3. Tech Industry Associations

AI-focused tech industry associations can represent the public’s rights and interests. Like NPOs, they work with lawmakers, represent concerned individuals, advocate for fair policies, and bring awareness to specific issues.

The difference, however, is that they often have ties to private companies. Their members still do solicitations, but they’ll usually get enough funding from their parent organizations as long as they deliver results.

4. Academic Institutions

Although AI comes with several risks, it is inherently neutral. All biases, privacy issues, security errors, and potential cybercrime activities stem from humans, so AI by itself isn’t something to fear.

But very few people understand how modern AI models work. Misconceptions skew people’s perception of AI, perpetuating baseless fears like AI taking over humanity or stealing jobs.

Academic institutions could fill these educational gaps through accessible resources. There aren’t many scholarly works on modern LLMs and NLP systems yet. The public can use AI more responsibly and combat cybercrime if it fully understands how AI works.

5. Law Enforcement Agencies

Law enforcement agencies should expect to encounter more AI-enabled cyberattacks. With the proliferation of generative models, crooks can quickly synthesize voices, generate deepfake images, scrape personally identifiable information (PII), and even create entirely new personas.

Most agencies aren’t equipped to handle these crimes. They should invest in new systems and train their officers on modern cybercrimes; otherwise, they’ll have trouble catching these crooks.

The Future of AI Regulation

Considering AI’s fast-paced nature, it’s unlikely that a single governing body will control it. Yes, tech leaders will hold more power than consumers, but various entities must cooperate to manage AI risks without impeding advancements. It’s best to set control measures now while artificial general intelligence (AGI) is still a distant goal.

That said, AI regulation is just as distant as AGI. In the meantime, users must observe safety practices to combat AI-driven threats. Good habits like limiting the people you connect with online and securing your digital PII already go a long way.

- Title: Mandates in Machine Learning Regulations

- Author: Brian

- Created at : 2024-11-01 03:33:35

- Updated at : 2024-11-07 10:45:03

- Link: https://tech-savvy.techidaily.com/mandates-in-machine-learning-regulations/

- License: This work is licensed under CC BY-NC-SA 4.0.