Precision in Conversations: 6 Things Not To Do with GPT

ChatGPT prompting can be a lot of fun. It can be especially exciting when you get the chatbot to do precisely what you want. However, like learning to ride a bike, there can be a few bumps and scrapes along the way. Sometimes, getting the chatbot to provide satisfying results can be a tricky adventure.

The results you get from ChatGPT are only as good as the prompts you provide. Poor prompts mean poor responses. That’s why we’ve put together a handy guide to some mistakes to avoid when using ChatGPT.

1. Mixing Topics In a Single Chat Session

Prompting on diverse topics within the same chat session may not seem like a problem, but it’s worth paying attention to. ChatGPT is highly sensitive to context. Each prompt you introduce during a chat session can greatly shape the responses you receive to subsequent prompts.

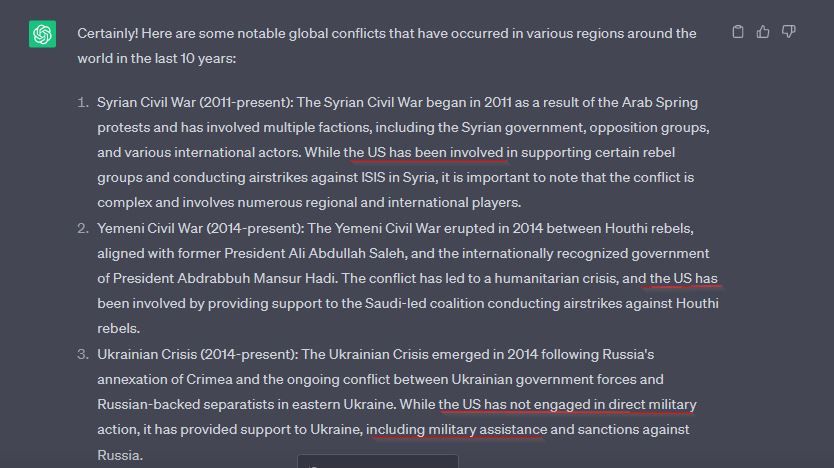

Let’s say you started a chat session by asking ChatGPT, “Can we talk about the US military?” If you keep the conversation going and decide to ask the chatbot to tell you about some recent wars, there’s a chance it will only highlight wars that the US military has participated in when you might have needed a broader view of all global conflicts. Why? ChatGPT uses the context of preceding conversations to process an answer for subsequent prompts.

This feature helps ChatGPT stay on topic during long conversations on any subject. However, it becomes detrimental when the chatbot carries information from a completely different topic into a new response in order to maintain context. Sometimes this is easy to spot. At other times it is subtle and goes undetected, leading to misinformation.

In our example, after a long discussion about the US military, we asked ChatGPT to tell us about some global conflicts, and it only picked those with some form of US participation.
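One way to picture why earlier prompts color later answers is to assume that a chat interface passes the running conversation along with every new prompt. The sketch below illustrates that assumption only. The `ChatSession` class, the `role`/`content` message shape, and the `fake_model` stand-in are hypothetical names we made up for illustration, not a description of ChatGPT’s internals.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Hypothetical chat session that resends its full history with each prompt."""

    def __init__(self, model: Callable[[List[Message]], str]) -> None:
        self.model = model
        self.history: List[Message] = []

    def ask(self, prompt: str) -> str:
        self.history.append({"role": "user", "content": prompt})
        reply = self.model(self.history)  # the model sees every earlier message
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_model(messages: List[Message]) -> str:
    """Stand-in for a real chatbot: reports how many user prompts it can see."""
    seen = sum(1 for m in messages if m["role"] == "user")
    return f"answer based on {seen} user prompt(s)"


# Mixed topics: the second question is answered with the first still in view.
mixed = ChatSession(fake_model)
mixed.ask("Can we talk about the US military?")
print(mixed.ask("Tell me about some recent wars."))

# Fresh session: the same question arrives with no earlier topic attached.
fresh = ChatSession(fake_model)
print(fresh.ask("Tell me about some recent wars."))
```

The practical takeaway is the second half of the sketch: when you switch to an unrelated topic, starting a new chat means the question is not read through the lens of the old one.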

2. Too Many Instructions In a Single Prompt

ChatGPT is capable of handling several directives within a single prompt. Yet there’s a limit to the number of instructions it can manage at once without compromising the quality of its responses. You may have come across online prompts packed with instructions that appear to work well. However, this isn’t always the case, and long instruction lists need careful handling to get good results.

The best way to deal with complex prompts is to break them up and use them with a chain prompting approach. This involves separating complex prompts into multiple parts, each containing fewer instructions. You can then feed each prompt to ChatGPT in simpler bits, followed by other simpler bits that refine the response from the previous prompts until you achieve your desired result.

So, instead of using a prompt like:

- Tell me about the history of the Eiffel Tower, including its construction materials, the budget, the design, its significance, the construction company involved, and controversies.

You could use:

- Tell me about the history of the Eiffel Tower.

- Were there any major controversies surrounding the project?

- What major construction materials were used?

- Tell me about its design and designers.

- Explain its significance.

- Let’s talk about the budget.

The second set of prompts will typically produce more detailed and more relevant responses.
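If you send prompts from a script rather than typing them, the chain prompting approach above can be sketched roughly as follows. This is a minimal illustration, not a definitive implementation: `run_chain` and `echo_ask` are hypothetical names, and `echo_ask` is only a placeholder for whatever function actually sends a prompt to your chatbot and returns its reply.

```python
from typing import Callable, List, Tuple


def run_chain(prompts: List[str], ask: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Send prompts one at a time, in order, and collect (prompt, reply) pairs."""
    transcript = []
    for prompt in prompts:
        reply = ask(prompt)  # one small, focused request per step
        transcript.append((prompt, reply))
    return transcript


def echo_ask(prompt: str) -> str:
    """Hypothetical stand-in for a chatbot call; swap in a real client here."""
    return f"[reply to: {prompt}]"


# The Eiffel Tower example, split into single-instruction prompts.
eiffel_chain = [
    "Tell me about the history of the Eiffel Tower.",
    "Were there any major controversies surrounding the project?",
    "What major construction materials were used?",
    "Tell me about its design and designers.",
    "Explain its significance.",
    "Let's talk about the budget.",
]

for prompt, reply in run_chain(eiffel_chain, echo_ask):
    print(prompt, "->", reply)
```

Keeping each step to one instruction also makes it easy to insert a refining follow-up after any reply that falls short, without rewriting the whole prompt.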

3. Being Overly Specific With Your Instructions

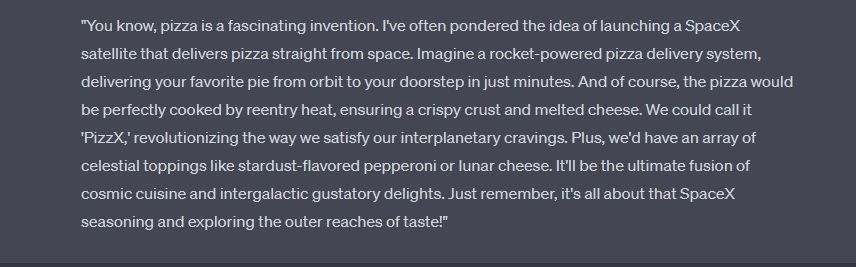

Although it might appear beneficial to provide highly detailed prompts, this strategy isn’t always optimal. Detailed instructions indeed offer ChatGPT a clear direction for generating responses. However, excessive detail can unintentionally confine ChatGPT’s responses to an overly narrow context, potentially leading to less accurate responses and hallucinations.

ChatGPT tends to make up information whenever it runs short of facts. So if few facts exist within the constraints of the instructions you provide, you’ll likely get misinformation.

As a demonstration, we asked ChatGPT to answer every question we asked using only Elon Musk’s views on the subject. We asked ChatGPT about Mars, rockets, and electric vehicles, and the responses were good, since Elon Musk has clearly said a lot on those topics. However, when we asked ChatGPT to tell us about pizza (remember, the responses should only be Elon Musk’s views on the topic), ChatGPT made up hilarious commentary.

4. Not Providing Context When Necessary

Context plays a vital role in how ChatGPT responds to any given prompt. Even a minor shift in context can lead to significantly different responses. If no context is provided, your prompt becomes ambiguous, resulting in varied responses each time the same prompt is used. This lack of consistency may not be desirable when seeking precise answers because there’s no way of knowing the right response. But how do you provide context, and when should you?

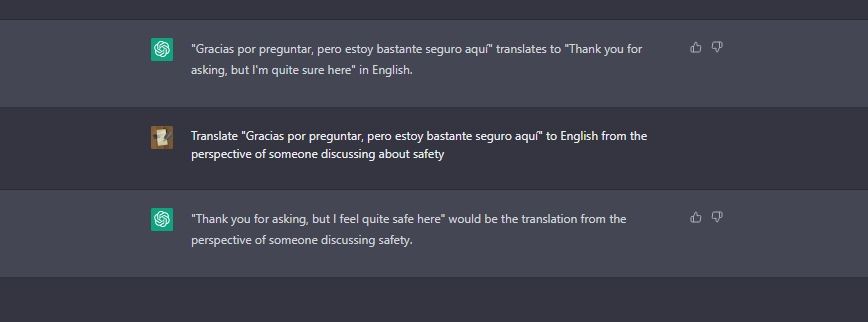

Let’s say you want to use ChatGPT as a translation tool. Language can be very ambiguous: the same sentence can mean different things depending on the context, so in situations like this, context is very important. Here’s an example.

Consider the Spanish phrase “Gracias por preguntar, pero estoy bastante seguro aquí.” ChatGPT translates this as, “Thanks for asking, but I’m pretty sure here.” In the text this sentence was copied from, the intended meaning was: “Thanks for asking, but I’m safe here.”

ChatGPT mistranslated the sentence because no context was provided. Once we told ChatGPT that the sentence should be interpreted as someone talking about safety (which is what the source text was discussing), it provided the expected translation.
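As a rough sketch of what “providing context” can look like in practice, the helper below builds the same translation request with and without a context line. The `build_translation_prompt` function and the exact prompt wording are our own assumptions for illustration; they are not the prompts used in the test described above.

```python
from typing import Optional


def build_translation_prompt(text: str, context: Optional[str] = None) -> str:
    """Build a Spanish-to-English translation prompt, adding context if given."""
    lines = [f'Translate this Spanish sentence into English: "{text}"']
    if context:
        # The context line tells the model which sense of an ambiguous word applies.
        lines.append(f"Context: {context}")
    return "\n".join(lines)


sentence = "Gracias por preguntar, pero estoy bastante seguro aquí."

# Ambiguous: "seguro" can be read as "sure" or as "safe".
print(build_translation_prompt(sentence))

# Disambiguated: the extra line points the model at the safety reading.
print(build_translation_prompt(sentence, "The speaker is talking about their personal safety."))
```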

5. Not Using Examples

Incorporating examples is a crucial aspect of crafting effective ChatGPT prompts. While not every prompt necessitates an example, when the opportunity arises, including one can greatly enhance the specificity and accuracy of ChatGPT’s responses.

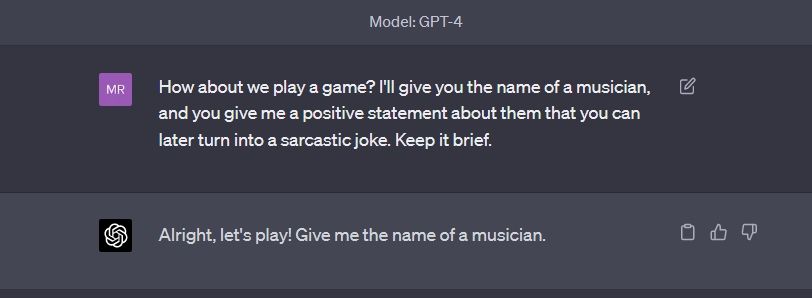

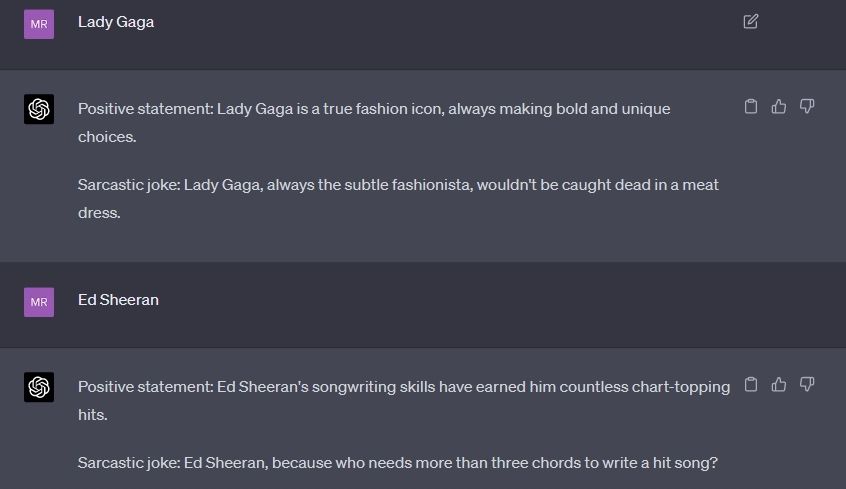

Examples are especially important when generating unique content like jokes, music, or cover letters. For example, in the screenshot below, we asked ChatGPT to generate some sarcasm about musicians once we provided the musician’s name. The highlight here is that we didn’t provide any examples.

Without examples, the jokes ChatGPT came up with weren’t particularly funny, and the overall response wasn’t very engaging either.

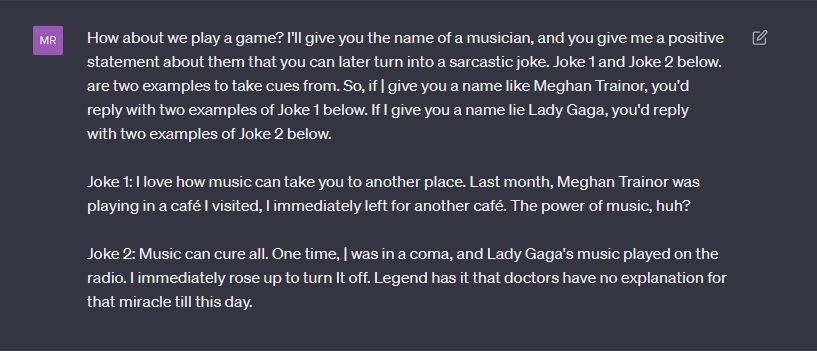

Next, we gave ChatGPT some examples of how we want our jokes to look. Here’s the prompt in the screenshot below:

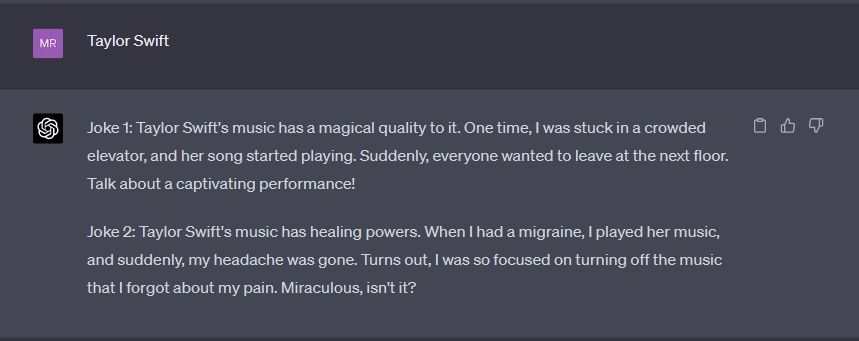

With examples to guide ChatGPT, the generated jokes became significantly better (a bit jealous that ChatGPT’s jokes seem better than ours, though!). This first one was a joke about Taylor Swift.

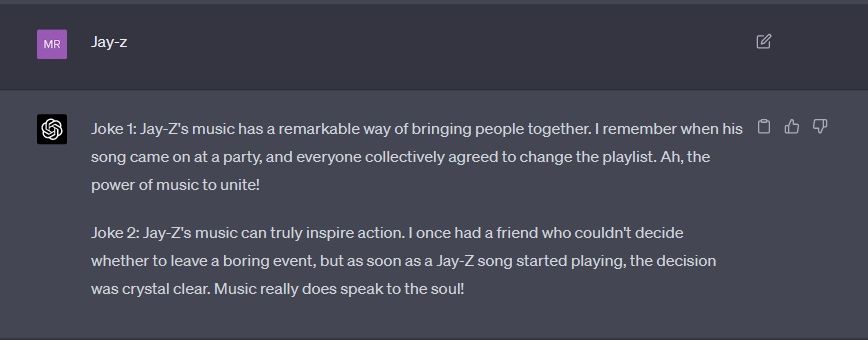

And here’s another one ChatGPT made when we prompted it with Jay-Z.

Loved the second set of jokes? Well, the moral of the story is to use examples more often.
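If you reuse the same examples often, it can help to assemble the prompt from parts. The sketch below is one possible layout, not the prompt from our screenshots: `build_few_shot_prompt` is a hypothetical helper, and the bracketed example strings are placeholders you would replace with jokes in the style you actually want.

```python
from typing import List


def build_few_shot_prompt(task: str, examples: List[str], subject: str) -> str:
    """Combine a task description, numbered style examples, and the new subject."""
    parts = [task, "", "Examples of the style I want:"]
    parts += [f"{i}. {example}" for i, example in enumerate(examples, start=1)]
    parts += ["", f"Now write one about: {subject}"]
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    task="Write a short sarcastic joke about the musician I name.",
    examples=[
        "<your first example joke goes here>",   # placeholder, not a real joke
        "<your second example joke goes here>",  # placeholder, not a real joke
    ],
    subject="Taylor Swift",
)
print(prompt)
```

Two or three examples are usually enough to show the tone and length you are after; the subject line at the end is the only part that changes between requests.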

6. Not Being Clear and Specific With Your Instructions

To get the best responses from ChatGPT, you’ll need to be as specific and as unambiguous as possible in your instructions. Ambiguity opens your prompts to multiple interpretations, making it difficult for ChatGPT to provide a specific and accurate response.

“What is the meaning of life?” and “What is the best way to stay healthy?” are two examples of prompts that seem normal but are quite ambiguous. There’s no definitive answer to either question. However, ChatGPT will still try to give you an answer that reads like hard fact. Prompts like “What is the meaning of life from a biological perspective?” or “What are some specific lifestyle changes or habits that can help improve mental health?” are good examples of specific, less ambiguous alternatives.

Specific prompts provide a clearer direction for ChatGPT to follow. They also narrow the focus of the request and give the model more relevant information to work with.

ChatGPT Is Garbage In, Garbage Out

Just like a chef needs quality ingredients to make a delicious meal, the responses generated by ChatGPT depend greatly on the prompts we provide. Just as the choice of ingredients shapes the taste and outcome of a dish, the clarity, specificity, and context of our prompts influence the accuracy and relevance of ChatGPT’s responses. By crafting well-structured prompts, you give ChatGPT the ingredients it needs to serve up insightful and engaging interactions, much like a skilled chef serving a culinary masterpiece.

- Title: Precision in Conversations: 6 Things Not To Do with GPT

- Author: Brian

- Created at : 2024-08-15 02:38:07

- Updated at : 2024-08-16 02:38:07

- Link: https://tech-savvy.techidaily.com/precision-in-conversations-6-things-not-to-do-with-gpt/

- License: This work is licensed under CC BY-NC-SA 4.0.
