Shielding Against Unauthorized AI Interaction Leaks


ChatGPT has proven to be an invaluable tool for work. Yet, online privacy concerns highlight the need for vigilance in protecting sensitive data. Recent incidents of ChatGPT data breaches serve as a stark reminder that technology is susceptible to privacy threats. Here are some tips for using ChatGPT responsibly to protect the confidentiality of your work data.


1. Don’t Save Your Chat History

One of the simplest and most effective steps to protect your privacy is to avoid saving your chat history. By default, ChatGPT stores all interactions between users and the chatbot. These conversations may be used to train OpenAI's systems and are subject to inspection by moderators.

While account moderation ensures that you're complying with OpenAI's terms of service, it also opens up security risks for the user. In fact, The Verge reports that companies like Apple, J.P. Morgan, Verizon, and Amazon have banned or restricted their employees' use of AI tools over fears that confidential information entered into these systems could be leaked or collected.

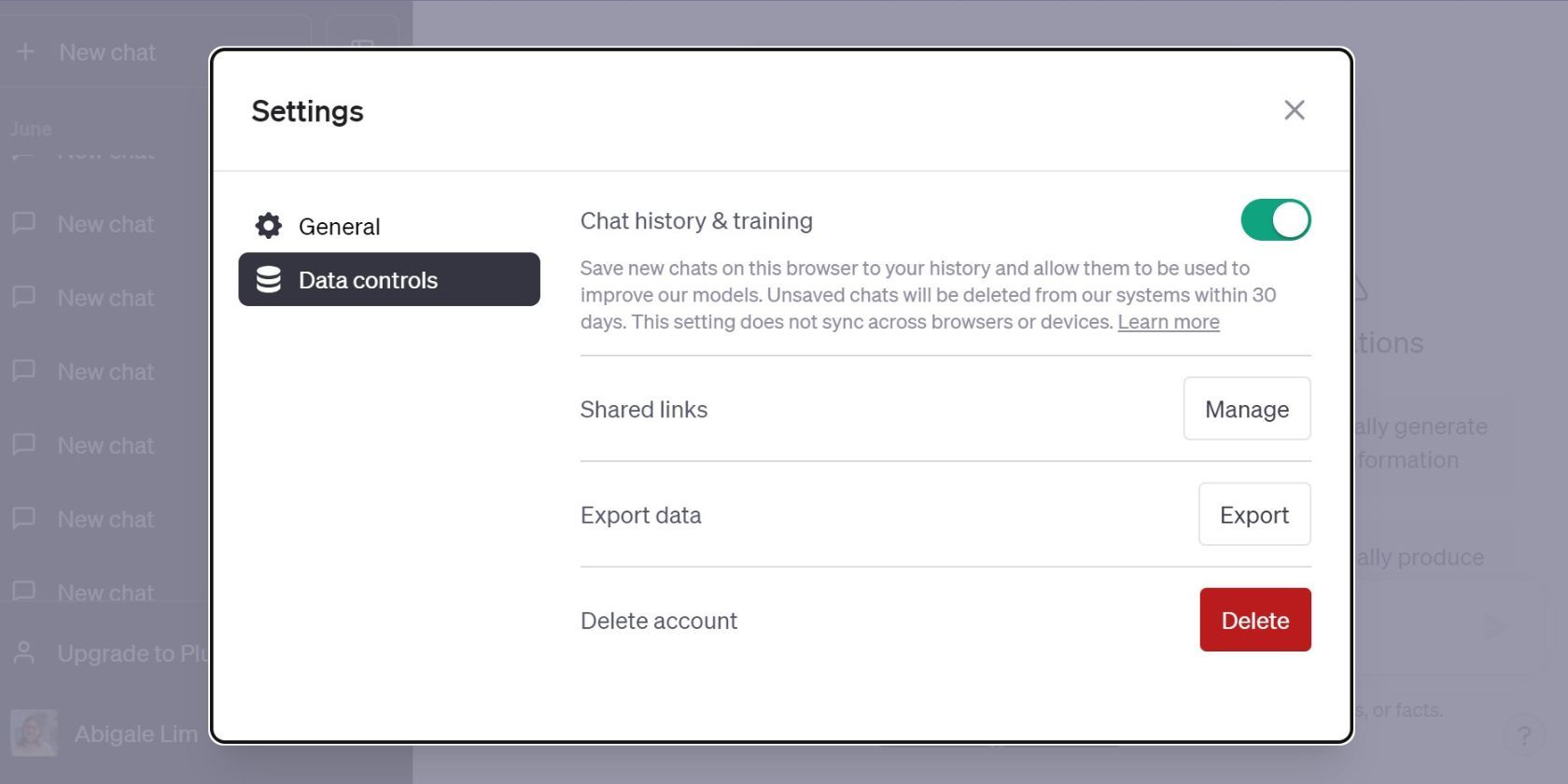

Follow these steps to disable chat history:

- Click the ellipsis or three dots beside your ChatGPT account name.

- Click on Settings.

- Click on Data controls.

- Toggle off Chat history and training.

Note that OpenAI says that even with chat history turned off, new conversations are retained for 30 days, during which moderators can review them for abuse before they are permanently deleted. Still, disabling chat history is one of the best things you can do if you want to continue using ChatGPT.

Tip: If you need access to your ChatGPT data, export it first. You can also save it by taking screenshots, writing notes manually, copy-pasting it into a separate application, or using secure cloud storage.

2. Delete Conversations

One of the big problems with OpenAI's ChatGPT is the potential for data breaches. The ChatGPT outage that drew an investigation by the Federal Trade Commission shows just how risky it can be to use the app.

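According to OpenAI's update on the March 20, 2023 outage, a bug in an open-source library caused the incident. The bug allowed some users to see the titles of other users' chat histories. It also exposed payment-related information of 1.2% of ChatGPT Plus subscribers who were active during a specific window, including names, email addresses, payment addresses, and the last four digits and expiration dates of their credit cards. OpenAI stated that full credit card numbers were not exposed.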

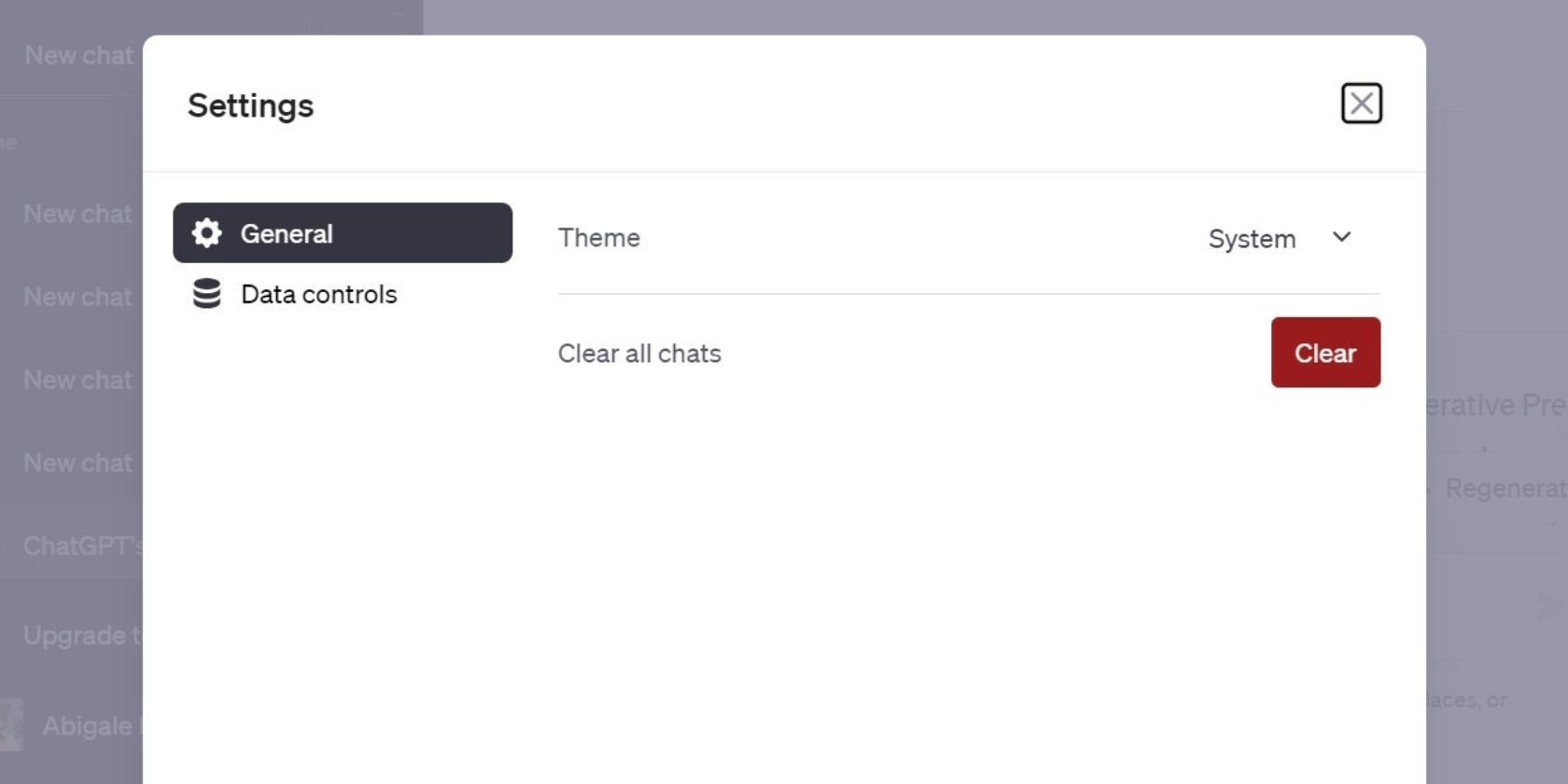

Deleting your conversations helps protect your data from these possible threats. Take the following steps to delete your chats:

- Click the ellipsis or three dots beside your ChatGPT account name.

- Click Settings.

- Under General, click Clear to clear all chats.

Another option is to select each conversation and delete it. This method is helpful if you still want to keep some of your chats. On the list of conversations, click the chat you want to delete. Select the trash icon to remove the data.

3. Don’t Feed ChatGPT Sensitive Work Information

Exercise caution and refrain from providing ChatGPT with sensitive work-related information. One of the most common online privacy myths is that companies will protect your data just because a general statement in their terms of service says so.

Avoid sharing financial records, intellectual property, customer information, and protected health information. Entering this data into ChatGPT increases the risk that it ends up with cybercriminals, and it might even lead to legal problems for you and your company.

The massive leak of ChatGPT account credentials between June 2022 and May 2023 illustrates how important this point is. Search Engine Journal reports that over 100,000 ChatGPT account credentials were compromised and sold on dark web marketplaces.

Limit your interactions with ChatGPT to non-confidential queries and avoid sharing proprietary details. Also practice good password hygiene and enable two-factor authentication to make your account harder to compromise.
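Before pasting text into ChatGPT, a simple automated check can catch some obvious slips. The Python sketch below is a minimal example, assuming you only want to flag email addresses and payment card numbers (validated with the Luhn checksum). The function names and patterns are hypothetical, and pattern matching will miss plenty of confidential material such as trade secrets or health details, so treat it as a safety net rather than a guarantee.

```python
import re

# Hypothetical screening patterns; a real policy would need a broader,
# organization-specific list of sensitive data types.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_CANDIDATE_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if a digit string passes the Luhn checksum used by payment cards."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive(text: str) -> list:
    """Return the kinds of sensitive data detected in text (empty list if none)."""
    findings = []
    if EMAIL_RE.search(text):
        findings.append("email address")
    for match in CARD_CANDIDATE_RE.finditer(text):
        digits = re.sub(r"\D", "", match.group())  # strip spaces and dashes
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            findings.append("payment card number")
            break
    return findings


if __name__ == "__main__":
    prompt = "Summarize this complaint from alice@example.com about card 4111 1111 1111 1111"
    problems = find_sensitive(prompt)
    if problems:
        print("Redact before sending:", ", ".join(problems))
```

The Luhn check cuts down on false alarms from long order or invoice numbers that merely look like card numbers.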

4. Use Data Anonymization Techniques

Data anonymization techniques help protect individual privacy while retaining insights from datasets. When using ChatGPT for work, apply these techniques to prevent any direct or indirect identification of individuals in the data.

Here are a few basic data anonymization techniques from the Personal Data Protection Commission of Singapore:

- Attribute Suppression: Remove an entire part of data that isn’t needed for your query. Let’s say you need to analyze a customer’s spending patterns. You can feed ChatGPT the transaction amounts and purchase dates. But don’t share the customer’s name and credit card information since these aren’t necessary for the analysis.

- Pseudonymization: Replace identifiable information with pseudonyms. For example, you could replace patient names in a health record with pseudonyms like “Patient001,” “Patient002,” and so on.

- Data Perturbation: Slightly modify data values within a certain range. For example, when sharing patient age data, you could add small random values (e.g., ±2 years) to each individual’s actual age.

- Generalization: Deliberately reduce the precision of data. For instance, instead of exposing people's exact ages, you could group them into broader ranges (e.g., 20-30, 31-40, etc.).

- Character masking: Show only a portion of sensitive data. For example, you can type only the first three digits of a phone number and replace the rest with X (e.g., 555-XXX-XXXX).
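The five techniques above can be sketched in a few lines of Python. This is a minimal illustration, not the PDPC's own method: the record, its field names, and the helper functions are hypothetical, and the 10-year age bands here start at 1 (21-30, 31-40) rather than exactly matching the ranges in the example above.

```python
import random
from typing import Optional

# Hypothetical customer record; the field names are illustrative only.
RECORD = {
    "name": "Jane Doe",
    "card_number": "4111111111111111",
    "phone": "555-123-4567",
    "age": 34,
    "purchase_amount": 120.50,
    "purchase_date": "2023-05-14",
}


def suppress(rec: dict, fields: set) -> dict:
    """Attribute suppression: drop fields the query does not need."""
    return {k: v for k, v in rec.items() if k not in fields}


def pseudonymize(rec: dict, field: str, mapping: dict, prefix: str = "Patient") -> dict:
    """Pseudonymization: swap a value for a stable label such as Patient001.

    `mapping` remembers which label each real value received, so the same
    person always gets the same pseudonym.
    """
    out = dict(rec)
    value = out[field]
    if value not in mapping:
        mapping[value] = f"{prefix}{len(mapping) + 1:03d}"
    out[field] = mapping[value]
    return out


def perturb_age(age: int, spread: int = 2, rng: Optional[random.Random] = None) -> int:
    """Data perturbation: add a random offset between -spread and +spread years."""
    rng = rng or random.Random()
    return age + rng.randint(-spread, spread)


def generalize_age(age: int) -> str:
    """Generalization: replace an exact age (1 or more) with a 10-year band such as 31-40."""
    low = (age - 1) // 10 * 10 + 1
    return f"{low}-{low + 9}"


def mask_phone(phone: str, keep: int = 3) -> str:
    """Character masking: keep the first `keep` digits and replace later digits with X."""
    seen = 0
    out = []
    for ch in phone:
        if ch.isdigit():
            seen += 1
            out.append(ch if seen <= keep else "X")
        else:
            out.append(ch)  # keep separators such as '-' so the format stays readable
    return "".join(out)


if __name__ == "__main__":
    pseudonyms = {}  # keep this mapping private: it reverses the pseudonyms
    shared = suppress(RECORD, {"card_number"})
    shared = pseudonymize(shared, "name", pseudonyms, prefix="Customer")
    shared["age"] = generalize_age(shared["age"])
    shared["phone"] = mask_phone(shared["phone"])
    print(shared)
```

Perturbation is shown as a standalone helper; pass a seeded `random.Random` if you need repeatable output. Whoever holds the pseudonym mapping can undo the pseudonyms, so it should never be shared along with the data.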

Anonymization is not foolproof, since anonymized data can sometimes be re-identified. Learn how de-anonymization works and how to prevent it before using any of these techniques, and evaluate the risks before you release anonymized data.
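One common way to gauge re-identification risk, not named in this article, is k-anonymity: every combination of quasi-identifiers (attributes such as age band or partial postcode that could be linked with outside data) should be shared by at least k records. The sketch below computes k for a small dataset; the field names `age_band` and `zip3` and the sample rows are hypothetical.

```python
from collections import Counter

# Hypothetical dataset after generalization; "zip3" is an assumed
# three-digit postcode prefix used only for illustration.
ROWS = [
    {"age_band": "31-40", "zip3": "123", "diagnosis": "flu"},
    {"age_band": "31-40", "zip3": "123", "diagnosis": "asthma"},
    {"age_band": "41-50", "zip3": "123", "diagnosis": "flu"},
]


def k_anonymity(rows: list, quasi_identifiers: list) -> int:
    """Return k, the size of the smallest group of rows sharing identical quasi-identifier values."""
    if not rows:
        raise ValueError("need at least one row")
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())


if __name__ == "__main__":
    print("k =", k_anonymity(ROWS, ["age_band", "zip3"]))
```

Here the third row is the only person in the 41-50 band, so k is 1 and that individual could be singled out. Generalizing further (for example, wider age bands) or suppressing the outlier raises k; what threshold is acceptable is a judgment call for your organization.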

5. Limit Access to Sensitive Data

Limiting access to sensitive work data is crucial when workers are allowed to use ChatGPT. If you’re working in a leadership role, restrict access to sensitive information to authorized personnel who require it for their specific roles.

Additionally, implement access controls to safeguard your company's data. For instance, role-based access control (RBAC) grants employees access only to the data they need to do their jobs. You can also conduct regular access reviews to confirm that these controls remain effective. Don't forget to revoke access for employees who change roles or leave the company.
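The RBAC idea above, along with access reviews and revocation, can be sketched as follows. The roles, data categories, and helper functions are hypothetical; in practice these rules would usually live in your identity provider or access-management system rather than in application code.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from each role to the data categories it may access.
ROLE_PERMISSIONS = {
    "finance_analyst": {"financial_records"},
    "support_agent": {"customer_contacts"},
    "hr_manager": {"employee_records"},
}


@dataclass
class Employee:
    name: str
    roles: set = field(default_factory=set)


def can_access(emp: Employee, category: str) -> bool:
    """Grant access only if one of the employee's roles covers this data category."""
    return any(category in ROLE_PERMISSIONS.get(role, set()) for role in emp.roles)


def change_role(emp: Employee, old_role: str, new_role: str) -> None:
    """Revoke the old role's access when an employee moves to a new role."""
    emp.roles.discard(old_role)
    emp.roles.add(new_role)


def offboard(emp: Employee) -> None:
    """Remove every role, and therefore every grant, when an employee leaves."""
    emp.roles.clear()


def access_review(employees: list) -> list:
    """List (employee, data category) pairs so a reviewer can confirm each grant is still needed."""
    return sorted(
        {(emp.name, category)
         for emp in employees
         for role in emp.roles
         for category in ROLE_PERMISSIONS.get(role, set())}
    )
```

Because access flows only through roles, revoking a role removes every grant attached to it at once, and an access review reduces to listing the current grants.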

6. Be Wary of Third-Party Apps

Whether third-party ChatGPT apps and browser extensions are safe is an important question to ask. Before using any of them for work, vet them carefully. Make sure they aren't collecting and retaining information for questionable purposes.

Don't install shady apps that request permissions unrelated to what they do, whether on your phone or in your browser. Verify their data handling practices to check that they align with your organization's privacy standards.
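For browser extensions, one concrete vetting step is to read the permissions an extension requests in its `manifest.json`. The sketch below flags a hypothetical set of broad permissions. The permission strings are real Chrome extension permission names, but which ones count as too broad is a policy choice for your organization, and a clean result doesn't prove an extension is safe.

```python
import json

# Hypothetical policy: permissions broad enough to warrant a closer look.
BROAD_PERMISSIONS = {
    "tabs", "history", "cookies", "clipboardRead",
    "webRequest", "scripting", "debugger",
}
# Host patterns that let an extension run on (or read) every site.
BROAD_HOSTS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}


def flag_permissions(manifest_json: str) -> list:
    """Return the requested permissions in a manifest that match the policy above."""
    manifest = json.loads(manifest_json)
    requested = (
        set(manifest.get("permissions", []))
        | set(manifest.get("host_permissions", []))
        | set(manifest.get("optional_permissions", []))
    )
    return sorted(p for p in requested if p in BROAD_PERMISSIONS or p in BROAD_HOSTS)


if __name__ == "__main__":
    example = """{
        "manifest_version": 3, "name": "Example helper", "version": "1.0",
        "permissions": ["storage", "tabs"], "host_permissions": ["<all_urls>"]
    }"""
    print("Review these permissions:", flag_permissions(example))
```

A flagged permission isn't proof of bad intent; it's a prompt to ask why an extension that, say, summarizes ChatGPT answers needs to read every site you visit.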

Use ChatGPT Responsibly for Work

Maintaining privacy while using ChatGPT for work is tricky. If you must use ChatGPT to do your job, understand the privacy risks involved. There's no fail-safe way to protect your data once you hand it over to an AI tool, but you can take steps to reduce the chances of a leak.

ChatGPT has many practical uses for work, but you should protect your company data while using it. Understanding ChatGPT’s privacy policy should help you decide if the tool is worth the risk.


- Title: Shielding Against Unauthorized AI Interaction Leaks

- Author: Brian

- Created at : 2024-10-30 18:41:29

- Updated at : 2024-11-01 18:16:20

- Link: https://tech-savvy.techidaily.com/shielding-against-unauthorized-ai-interaction-leaks/

- License: This work is licensed under CC BY-NC-SA 4.0.