Step-by-Step: Integrating Nvidia’s AI Chatbot



Quick Links

- What Is Nvidia Chat with RTX?

- How to Download and Install Chat with RTX

- How to Use Nvidia Chat with RTX

- Is Nvidia’s Chat with RTX Any Good?

- What if I Don’t Have an RTX 30 or 40 Series GPU?

Key Takeaways

- Nvidia Chat with RTX is an AI chatbot that runs locally on your PC, using TensorRT-LLM and RAG for customized responses.

- Chat with RTX has the following minimum requirements: an RTX 30-Series or 40-Series GPU, 16GB RAM, 100GB of free storage, and Windows 11.

- Use Chat with RTX to set up files for RAG, ask questions, analyze YouTube videos, and ensure data security.


Nvidia has launched Chat with RTX, an AI chatbot that runs on your PC and offers features similar to ChatGPT and more! All you need is an Nvidia RTX GPU, and you’re all set to start using Nvidia’s new AI chatbot.

What Is Nvidia Chat with RTX?

Nvidia Chat with RTX is an AI application that lets you run a large language model (LLM) locally on your computer. So, instead of going online to use an AI chatbot like ChatGPT, you can use Chat with RTX offline whenever you want.

Chat with RTX uses TensorRT-LLM, RTX acceleration, and a quantized Mistral 7B LLM to deliver fast performance and response quality comparable to online AI chatbots. It also supports retrieval-augmented generation (RAG), which lets the chatbot read through your files and base its answers on the data you provide, giving you a more personalized experience.
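To make the RAG idea more concrete, here is a minimal Python sketch of the general pattern: find the stored text most relevant to a question, then hand it to the model as context. This is not how Chat with RTX is implemented. The word-overlap scoring, the function names, and the sample documents are all assumptions made for illustration, and real systems typically use embeddings rather than shared words.

```python
import re

def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents):
    """Return the name of the document sharing the most words with the question."""
    question_words = tokenize(question)
    return max(documents, key=lambda name: len(question_words & tokenize(documents[name])))

def build_prompt(question, documents):
    """Prepend the best-matching document to the question as context."""
    name = retrieve(question, documents)
    return f"Context ({name}):\n{documents[name]}\n\nQuestion: {question}"

# Hypothetical in-memory "files"; a real setup would read them from disk.
docs = {
    "schedule.txt": "Monday: dentist appointment at 10am. Friday: team meeting at 3pm.",
    "recipes.txt": "Pancakes need flour, eggs, milk, and a pinch of salt.",
}

# The resulting prompt is what would be passed on to the language model.
print(build_prompt("When is my dentist appointment?", docs))
```

The point of the sketch is the order of operations: retrieval happens first, and the model only sees the question together with whatever text was retrieved.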

If you want to try out Nvidia Chat with RTX, here’s how to download, install, and configure it on your computer.

How to Download and Install Chat with RTX

Nvidia has made running an LLM locally on your computer much easier. To run Chat with RTX, you only need to download and install the app, just as you would with any other software. However, Chat with RTX does have some minimum specification requirements to install and use properly.

- RTX 30-Series or 40-Series GPU

- 16GB RAM

- 100GB of free storage space

- Windows 11
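Some of the requirements listed above can be checked with a short Python script before committing to the large download. The sketch below is only a rough pre-check under a few assumptions: the function names are made up for this example, the RAM figure is typed in by hand because the standard library has no portable way to read it, and the GPU is not checked at all. The Windows release string reported by `platform.release()` can also vary with the Python version, so treat a failed OS check as a prompt to confirm manually.

```python
import platform
import shutil

MIN_FREE_GB = 100  # free storage requirement from the list above
MIN_RAM_GB = 16    # RAM requirement from the list above

def free_space_gb(path="."):
    """Return the free space, in GB, on the drive that holds `path`."""
    return shutil.disk_usage(path).free / 1024**3

def check_requirements(free_gb, ram_gb, os_name, os_release):
    """Return a list of unmet requirements (an empty list means all checks passed)."""
    problems = []
    if free_gb < MIN_FREE_GB:
        problems.append(f"need {MIN_FREE_GB}GB free storage, found {free_gb:.0f}GB")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"need {MIN_RAM_GB}GB RAM, found {ram_gb}GB")
    if (os_name, os_release) != ("Windows", "11"):
        problems.append(f"need Windows 11, found {os_name} {os_release}")
    return problems

# ram_gb is entered manually here (assumption: you know your installed RAM).
problems = check_requirements(
    free_gb=free_space_gb("."),
    ram_gb=16,
    os_name=platform.system(),
    os_release=platform.release(),
)
print(problems or "All checked requirements met")
```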

If your PC meets the minimum system requirements, you can go ahead and install the app.

- Step 1: Download the Chat with RTX ZIP file.

- Download: Chat with RTX (Free—35GB download)

- Step 2: Extract the ZIP file, either by right-clicking it and choosing a file archive tool like 7-Zip, or by double-clicking it and selecting Extract All.

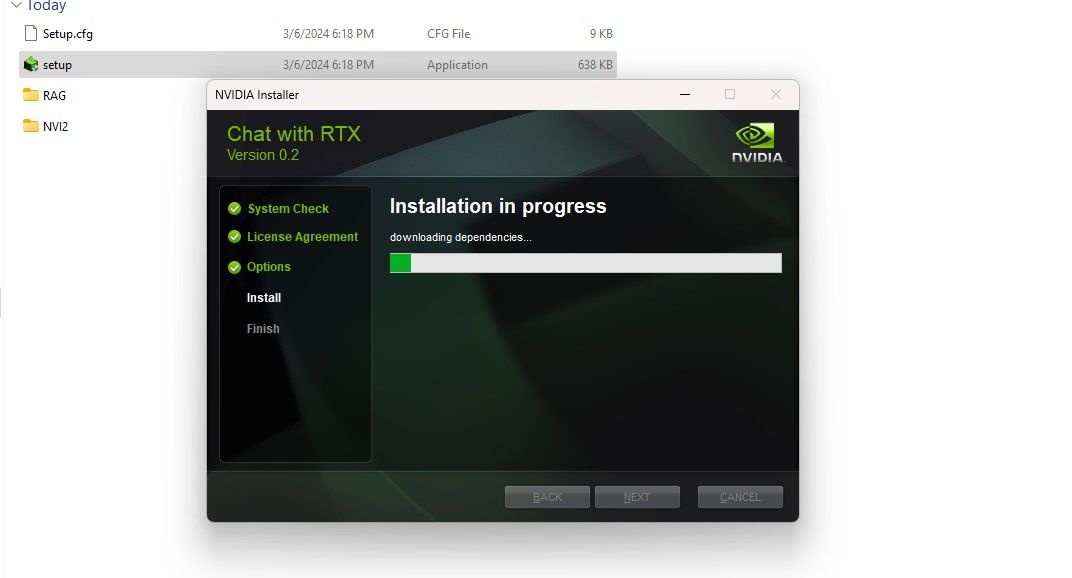

- Step 3: Open the extracted folder and double-click setup.exe. Follow the onscreen instructions and check all the boxes during the custom installation process. After hitting Next, the installer will download and install the LLM and all dependencies.
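If you prefer scripting to clicking, the extraction in Step 2 can also be done with Python's standard `zipfile` module. This is an optional sketch, not part of Nvidia's instructions. The `extract_zip` helper is a name invented here, and the demo runs on a tiny throwaway archive because the real download's file name is not given above.

```python
import tempfile
import zipfile
from pathlib import Path

def extract_zip(zip_path, dest_dir):
    """Extract every entry of `zip_path` into `dest_dir` and return the entry names."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest)
        return archive.namelist()

# Demo on a small placeholder archive so the sketch runs without the real download.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "sample.zip"
    with zipfile.ZipFile(sample, "w") as archive:
        archive.writestr("setup.txt", "placeholder")
    names = extract_zip(sample, Path(tmp) / "extracted")
    extracted_ok = (Path(tmp) / "extracted" / "setup.txt").exists()

print(names, extracted_ok)
```

To use it on the actual download, you would call `extract_zip` with the path to the ZIP file you saved in Step 1 and a destination folder of your choice.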

The Chat with RTX installation will take some time to finish as it downloads and installs a large amount of data. After the installation process, hit Close, and you’re done. Now, it’s time for you to try out the app.

How to Use Nvidia Chat with RTX

Although you can use Chat with RTX like a regular online AI chatbot, I strongly suggest you check its RAG functionality, which enables you to customize its output based on the files you give access to.

Step 1: Create RAG Folder

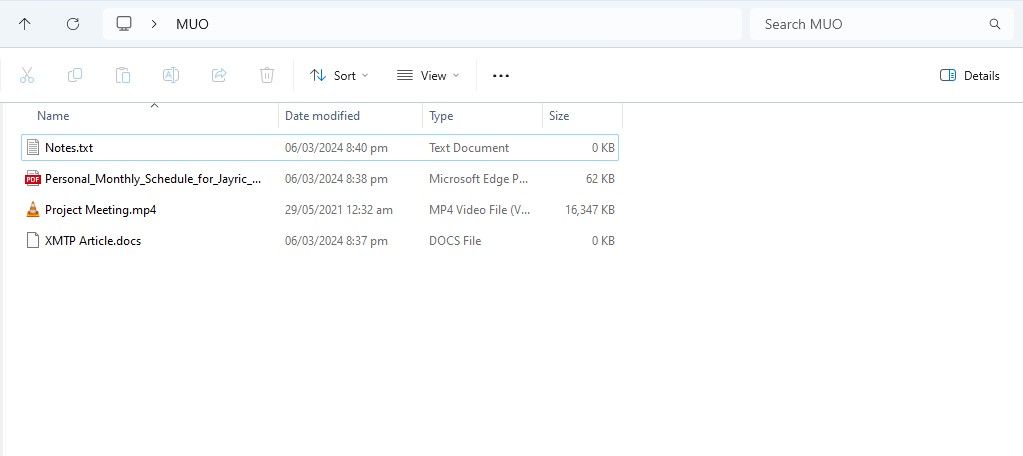

To start using RAG on Chat with RTX, create a new folder to store the files you want the AI to analyze.

After creation, place your data files into the folder. The data you store can cover many topics and file types, such as documents, PDFs, text, and videos. However, you may want to limit the number of files you place in this folder so as not to affect performance. More data to search through means Chat with RTX will take longer to return responses for specific queries (but this is also hardware-dependent).
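Since the amount of data affects response time, it can help to check how much you have put in the folder. The following Python sketch counts files by extension and totals their size. The `summarize_folder` name and the sample files are made up for this example, and whether Chat with RTX reads subfolders is not stated here, so the recursive search is an assumption.

```python
import tempfile
from collections import Counter
from pathlib import Path

def summarize_folder(folder):
    """Count files by extension and add up their total size in bytes."""
    counts = Counter()
    total_bytes = 0
    for path in Path(folder).rglob("*"):  # assumption: include subfolders
        if path.is_file():
            counts[path.suffix.lower() or "(none)"] += 1
            total_bytes += path.stat().st_size
    return counts, total_bytes

# Demo with a temporary folder and two placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    rag_folder = Path(tmp) / "rag_data"  # hypothetical folder name
    rag_folder.mkdir()
    (rag_folder / "notes.txt").write_text("hello")
    (rag_folder / "schedule.pdf").write_bytes(b"%PDF")
    counts, total_bytes = summarize_folder(rag_folder)

print(dict(counts), total_bytes)
```

Point `summarize_folder` at your own data folder to get a quick sense of whether it has grown large enough to be worth trimming.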

Now that your data folder is ready, you can set up Chat with RTX and start using it to answer your questions and queries.

Step 2: Set Up Environment

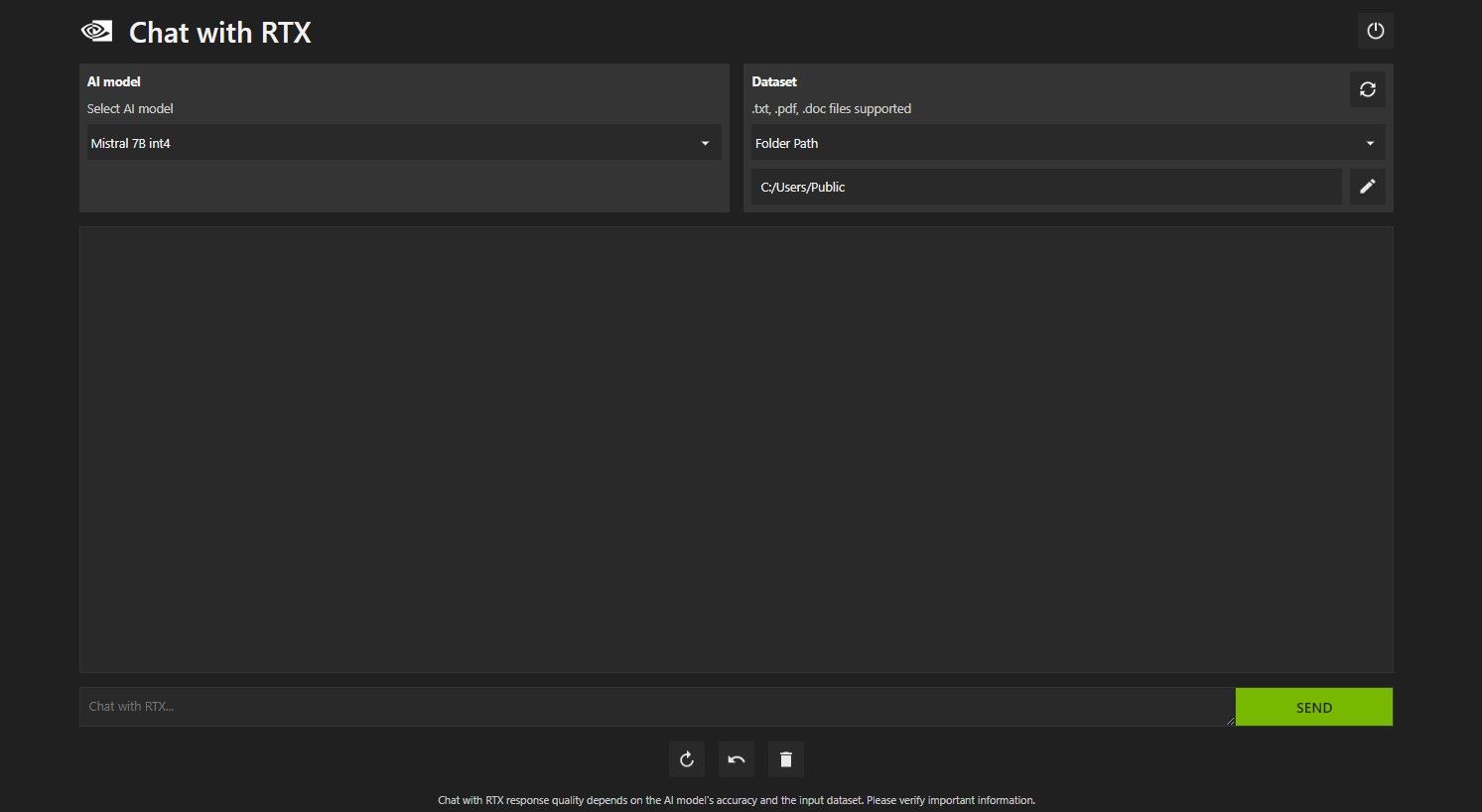

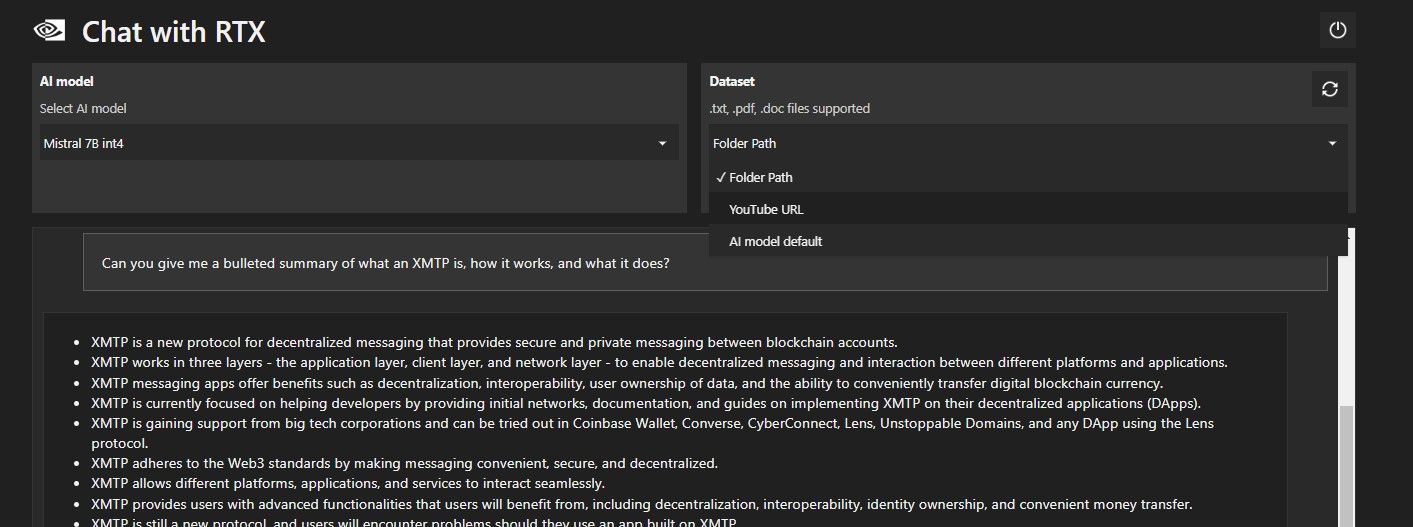

Open Chat with RTX. It should look like the image above.

Under Dataset, make sure that the Folder Path option is selected. Now click on the edit icon below (the pen icon) and select the folder containing all the files you want Chat with RTX to read. You can also change the AI model if other options are available (at the time of writing, only Mistral 7B is available).

You are now ready to use Chat with RTX.

Step 3: Ask Chat with RTX Your Questions

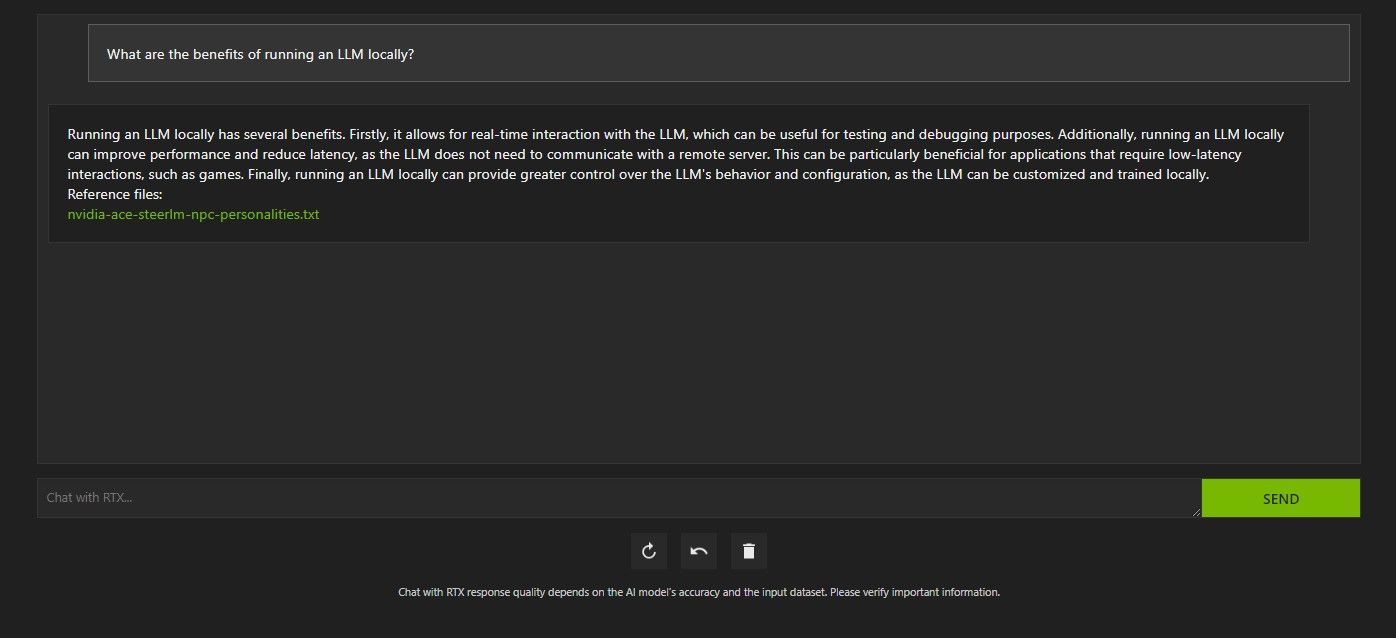

There are several ways to query Chat with RTX. The first one is to use it like a regular AI chatbot. I asked Chat with RTX about the benefits of using a local LLM and was satisfied with its answer. It wasn’t enormously in-depth, but accurate enough.

But since Chat with RTX is capable of RAG, you can also use it as a personal AI assistant.

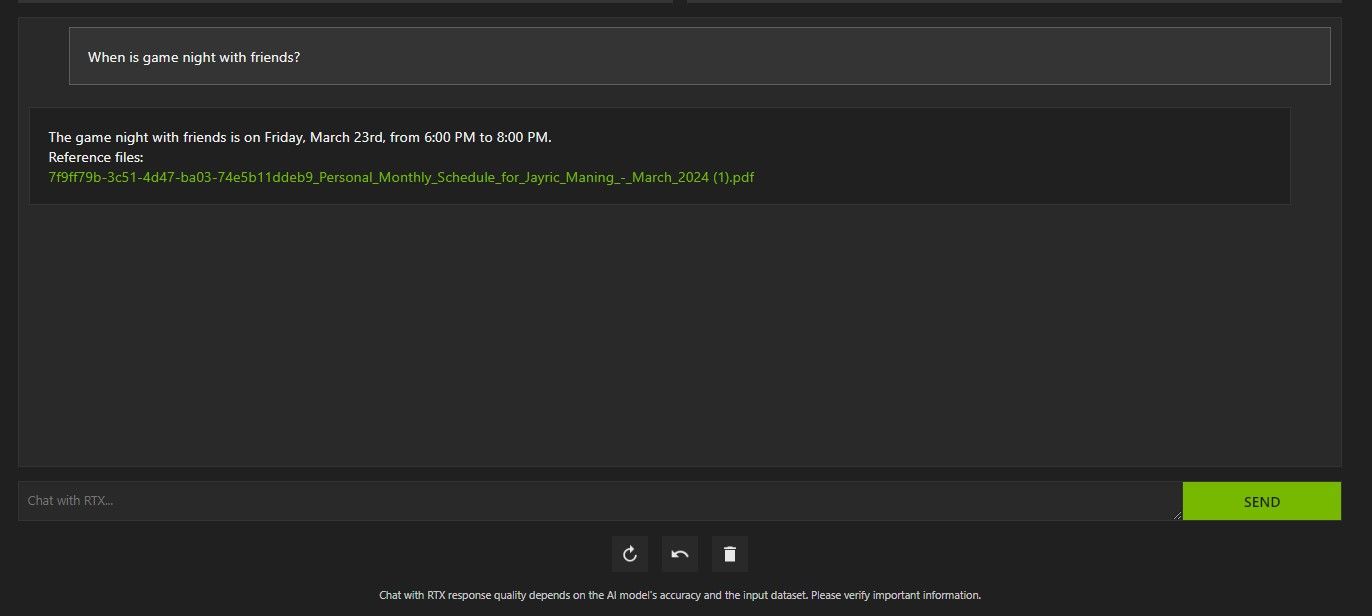

Above, I’ve used Chat with RTX to ask about my schedule. The data came from a PDF file containing my schedule, calendar, events, work, and so on. In this case, Chat with RTX pulled the correct calendar data from the PDF. Until there are integrations with other apps, you’ll have to keep your data files and calendar dates updated for features like this to work properly.

There are many ways you can use Chat with RTX’s RAG to your advantage. For example, you can use it to read through legal papers and give a summary, generate code relevant to the program you’re developing, get bulleted highlights about a video you’re too busy to watch, and so much more!

Step 4: Bonus Feature

In addition to your local data folder, you can use Chat with RTX to analyze YouTube videos. To do so, under Dataset, change the Folder Path to YouTube URL.

Copy the YouTube URL you want to analyze and paste it below the drop-down menu. Then ask away!
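Before pasting a link, you may want to confirm that it is a standard YouTube video URL. The sketch below uses Python's `urllib.parse` to pull the video ID out of the two most common link shapes. It says nothing about how Chat with RTX itself parses URLs. The `youtube_video_id` helper and the `abc123` ID are placeholders for illustration.

```python
from urllib.parse import parse_qs, urlparse

def youtube_video_id(url):
    """Return the video ID from a common YouTube URL, or None if it is not recognized."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    # Long form: https://www.youtube.com/watch?v=<id>
    if host in ("www.youtube.com", "youtube.com", "m.youtube.com") and parsed.path == "/watch":
        ids = parse_qs(parsed.query).get("v")
        return ids[0] if ids else None
    # Short form: https://youtu.be/<id>
    if host == "youtu.be":
        return parsed.path.lstrip("/") or None
    return None

print(youtube_video_id("https://www.youtube.com/watch?v=abc123&t=10s"))
print(youtube_video_id("https://youtu.be/abc123"))
print(youtube_video_id("https://example.com/watch?v=abc123"))
```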

Chat with RTX’s YouTube video analysis was pretty good and delivered accurate information, so it could be handy for research, quick analysis, and more.

Is Nvidia’s Chat with RTX Any Good?

ChatGPT provides RAG functionality. Some local AI chatbots have significantly lower system requirements. So, is Nvidia Chat with RTX even worth using?

The answer is yes! Chat with RTX is worth using despite the competition.

One of the biggest selling points of Nvidia Chat with RTX is its ability to use RAG without sending your files to a third-party server. Customizing GPTs through online services can expose your data. But since Chat with RTX runs locally and without an internet connection, using RAG on Chat with RTX keeps your sensitive data on your PC, where only you can access it.

Compared with other locally running AI chatbots that use Mistral 7B, Chat with RTX performs better and faster. Although a big part of the performance boost comes from using higher-end GPUs, Nvidia TensorRT-LLM and RTX acceleration also make Mistral 7B run faster on Chat with RTX than with other ways of running a chat-optimized LLM.

It is worth noting that the Chat with RTX version we are currently using is a demo. Later releases of Chat with RTX will likely become more optimized and deliver performance boosts.

What if I Don’t Have an RTX 30 or 40 Series GPU?

Chat with RTX is an easy, fast, and secure way of running an LLM locally without the need for an internet connection. If you’re interested in running an LLM locally but don’t have an RTX 30 or 40 Series GPU, you can try other options. Two of the most popular are GPT4All and Text Gen WebUI. Try GPT4All if you want a plug-and-play experience. But if you’re a bit more technically inclined, running LLMs through Text Gen WebUI will give you more fine-tuning options and flexibility.


- Title: Step-by-Step: Integrating Nvidia’s AI Chatbot

- Author: Brian

- Created at : 2024-11-04 07:47:13

- Updated at : 2024-11-06 19:28:35

- Link: https://tech-savvy.techidaily.com/step-by-step-integrating-nvidias-ai-chatbot/

- License: This work is licensed under CC BY-NC-SA 4.0.