Synthesizing Sentences: Comparing Language Bots

Disclaimer: This post includes affiliate links

If you click on a link and make a purchase, I may receive a commission at no extra cost to you.

Quick Links

- What Is Mistral AI’s Le Chat?

- Le Chat vs. ChatGPT: Creativity

- Le Chat vs. ChatGPT: Programming Skills

- Le Chat vs. ChatGPT: Common Sense and Logical Reasoning

Key Takeaways

- Le Chat, an AI chatbot by Mistral AI, shows promise but lags behind ChatGPT in creativity and programming skills.

- Le Chat’s coding abilities are inferior to ChatGPT’s, and it failed a basic to-do app task, but it performs well at common sense reasoning.

- While Le Chat may have potential, it needs further refinement before competing with top AI chatbots like ChatGPT.

Mistral’s Le Chat has been gaining traction within the AI chatbot community, with some observers tagging it as a potential rival to ChatGPT.

But is this fledgling AI chatbot really worth the title? Is Mistral’s Le Chat better than ChatGPT?

What Is Mistral AI’s Le Chat?

Le Chat is a conversational AI chatbot developed by French AI startup Mistral AI. It is powered by several Mistral-owned large language models, including Mistral Large, Mistral Small, and Mistral Next, all of which you can choose to use when interacting with the AI chatbot. Although it is a relatively new entrant in the AI chatbot space, it is rated highly because of the performance of its AI models despite their smaller size when compared to industry heavyweights like Gemini and GPT-4.

To understand what this means, imagine you’re playing with building blocks. The more blocks you have, the more complex and detailed structures you can build, right? AI language models are a bit like that. They come in sizes, usually expressed in parameter counts, which is why you might have heard terms like “7B parameters” or “70B parameters.” The parameter count is like the number of building blocks the model has to understand and generate responses. So, all else being equal, a language model with more parameters can generally understand and generate more complex responses.

Now, while GPT-4 has an estimated 1.76 trillion parameters, Mistral AI’s models are estimated to have between 7 and 56 billion parameters. See the size difference? Mistral AI’s ability to post decent performance at a fraction of the size is one of the reasons for the hype.
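To put that gap in numbers, here is a quick back-of-the-envelope sketch in Python. The figures are the estimates quoted above, not official counts, and the constant and function names are made up for illustration.

```python
# Parameter counts as quoted in this article (estimates, not official figures).
GPT4_PARAMS_ESTIMATE = 1.76e12   # about 1.76 trillion
MISTRAL_PARAMS_LOW = 7e9         # about 7 billion
MISTRAL_PARAMS_HIGH = 56e9       # about 56 billion


def size_ratio(larger: float, smaller: float) -> float:
    """Return how many times bigger `larger` is than `smaller`."""
    return larger / smaller


# Compare GPT-4's estimate against both ends of the Mistral range.
ratio_vs_largest = size_ratio(GPT4_PARAMS_ESTIMATE, MISTRAL_PARAMS_HIGH)
ratio_vs_smallest = size_ratio(GPT4_PARAMS_ESTIMATE, MISTRAL_PARAMS_LOW)

print(f"vs. 56B estimate: about {ratio_vs_largest:.0f}x")
print(f"vs. 7B estimate:  about {ratio_vs_smallest:.0f}x")
```

On these estimates, GPT-4 would be roughly 31 times the size of the largest Mistral figure and roughly 251 times the size of the smallest.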

Although Le Chat doesn’t enjoy the level of publicity that ChatGPT does, or the brand equity of the likes of Gemini, it has worked its way into the conversation whenever a potential ChatGPT competitor is discussed. But does it deserve a place at the table?

I’ve been wondering the same, and to find out, I tested Le Chat extensively to see how it compares to ChatGPT.

Le Chat vs. ChatGPT: Creativity

Creativity is one of the most important metrics for judging the performance of a conversational AI chatbot. Remember, the purpose of an AI chatbot is to replicate or mimic the conversational abilities and creative flair of humans at scale. The world has experimented with ChatGPT for over a year, and its creative abilities are well established. But how does Le Chat compare? I put both chatbots through a series of creativity tests.

I started off by asking both chatbots, “How would you describe yourself to an artist?” to test their ability to use creative and imaginative words to conceptualize themselves.

Here’s how ChatGPT would describe itself to an artist:

And here’s how Le Chat would describe itself:

Both responses were appropriate in their own ways. ChatGPT was more invested in using vivid imagery and metaphors to describe itself, demonstrating creative flair. Le Chat’s response, on the other hand, was very informational and focused on describing its essence as an AI chatbot. Some may say it lacks the creative flair and artistic approach of ChatGPT’s response. However, I’ll go out on a limb and say I prefer Le Chat’s easier-to-imagine response to ChatGPT’s abstract description.

I then asked ChatGPT and Le Chat to write a rap song about becoming rich from growing cucumbers—a tricky request we’ve used to test the creativity of other chatbots. How many rap songs can you find about cucumbers on the web?

Here’s ChatGPT’s response:

And here’s Le Chat’s response:

It might be a subjective call, but ChatGPT’s response seemed like the better option here. Le Chat’s lyrics were quite wordy and didn’t really read like something a rapper would put out. To test how both sets of lyrics would sound as music, we used the Suno AI music generator to generate songs from them. In three out of three trials, ChatGPT’s lyrics sounded way better. Below are two samples from each AI chatbot, so you can be the judge of which one did better.

Samples Generated From ChatGPT’s Lyrics

Sample 1:

Sample 2:

Samples Generated From Mistral Le Chat’s Lyrics

Sample 1:

Sample 2:

I tried a few other creative tasks with the AI chatbots, like writing poems, writing articles, and drafting tricky work emails. Despite showing great promise, Le Chat was outdone by ChatGPT in every instance. Article writing was the area where Le Chat came closest, although it took some tricky prompting to get there. In terms of all-round creativity, the medal goes to ChatGPT.

Le Chat vs. ChatGPT: Programming Skills

Proficiency in coding has become a key requirement for major AI chatbots. Writing decent code is a baseline skill, but to stand out among the elite, an AI chatbot must be able to write code that solves a diverse array of complex problems. We’ve previously built an entire web app from scratch using ChatGPT, which demonstrates its remarkable abilities as a programming tool. But how good is Le Chat at writing code?

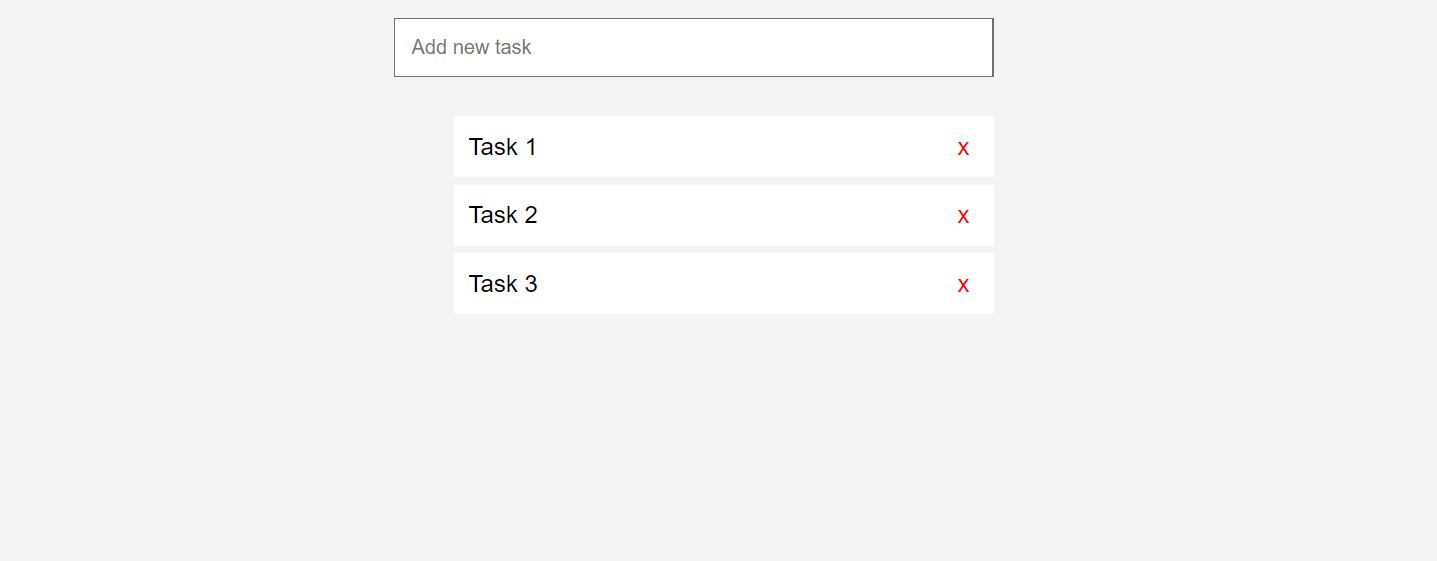

I tasked both chatbots with writing a simple to-do list app using CSS, HTML, and JavaScript. ChatGPT didn’t have any trouble producing good results. I copied the generated code and previewed it in a browser, and here’s what ChatGPT created:

Each time we repeated the prompt, ChatGPT created a functional to-do list app using different styles. In no instance did the generated code fail to work.

When I tried the same prompt with Le Chat, it generated what appeared to be intelligible code, but when we tried running it in a browser, it wasn’t functional. I repeated the prompt three times, and none of the attempts produced code that could complete the task. It failed one of the most basic coding tasks, which is a red flag.

Of course, I won’t judge Le Chat on one failed test. Next, I asked both chatbots to generate JavaScript and PHP code for encrypting and decrypting text. In this second test, both ChatGPT and Le Chat produced functional code that could perform the set task. However, Le Chat’s version seemed like what an inexperienced entry-level programmer would write. ChatGPT’s code, on the other hand, was more complete and looked like it was written by an experienced programmer.
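To give a sense of what “functional” means for this kind of task, here is a minimal sketch of an encrypt/decrypt pair with a round-trip check. It is not the code either chatbot produced: the tests above used JavaScript and PHP, while this sketch is in Python, and it uses a toy XOR cipher that I picked purely for illustration. It is not secure and should not be used to protect real data. The function names are hypothetical.

```python
import base64
from itertools import cycle


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR every byte of `data` with the repeating `key` (toy cipher, NOT secure)."""
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ k for b, k in zip(data, cycle(key)))


def encrypt(plaintext: str, key: str) -> str:
    """Encrypt text and return it as a URL-safe base64 string."""
    raw = xor_bytes(plaintext.encode("utf-8"), key.encode("utf-8"))
    return base64.urlsafe_b64encode(raw).decode("ascii")


def decrypt(token: str, key: str) -> str:
    """Reverse `encrypt`: decode the base64 string, then XOR with the same key."""
    raw = base64.urlsafe_b64decode(token.encode("ascii"))
    return xor_bytes(raw, key.encode("utf-8")).decode("utf-8")


def round_trips(plaintext: str, key: str) -> bool:
    """The basic functional check: decrypting the ciphertext gives back the input."""
    return decrypt(encrypt(plaintext, key), key) == plaintext


print(round_trips("Le Chat vs. ChatGPT", "example-key"))
```

A round-trip check like this only shows that the code works, not that it is well written, which is where the two chatbots differed in my test.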

I ran a few more programming tests involving finding and fixing bugs, and ChatGPT consistently outperformed Le Chat. In some instances, Le Chat didn’t seem to understand what it was being asked to do. There’s a good chance Le Chat can measure up to ChatGPT in some areas, but coding doesn’t seem to be one of them.

Le Chat vs. ChatGPT: Common Sense and Logical Reasoning

An interesting twist with AI chatbots is their ability to solve complex tasks with ease but fail at simple ones that require basic common sense to complete. Many chatbots, both established ones like ChatGPT and new entrants like Le Chat, struggle when it comes to solving tasks requiring human-style common sense and logical reasoning. So, how do Le Chat and ChatGPT compare on this front?

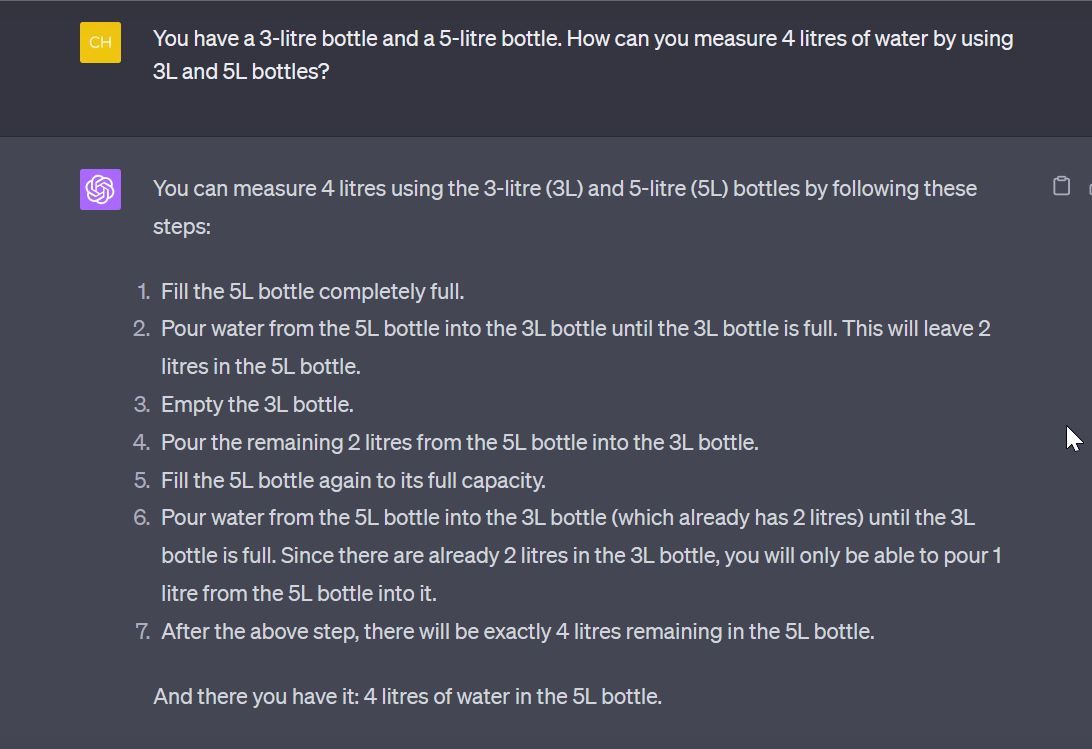

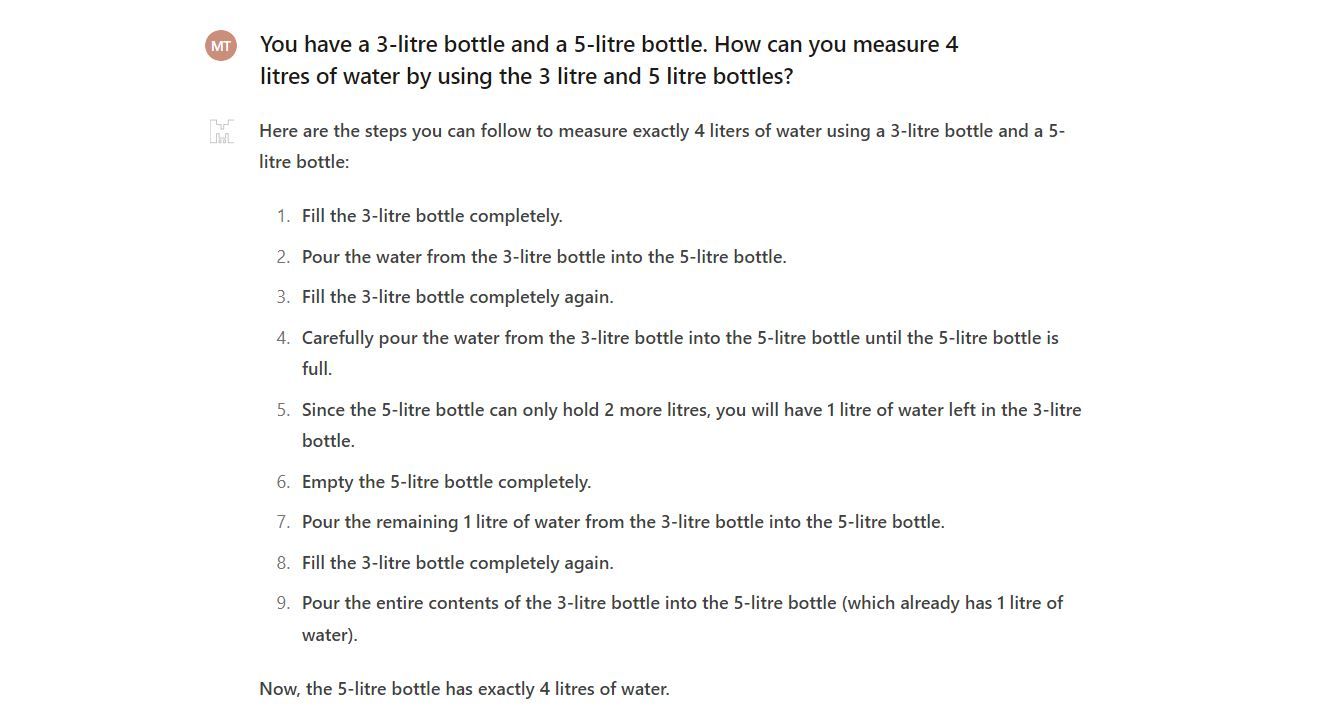

I asked both chatbots: “If you have a 3-litre bottle and a 5-litre bottle. How can you measure 4 litres of water by using the 3-litre and 5-litre bottles?”

ChatGPT solved the problem with flair:

Le Chat tried the same task and was able to solve the problem, although using a different approach.

Both chatbots performed comparably on this test.
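If you want to check an answer to this puzzle yourself, here is a minimal Python sketch that finds a shortest sequence of fill, empty, and pour moves using a breadth-first search. It is not the approach either chatbot took, and the function name `measure` is my own.

```python
from collections import deque


def measure(capacities, target):
    """Return the shortest list of (a, b) states from two empty bottles
    to any state where one bottle holds `target` litres, or None."""
    cap_a, cap_b = capacities
    start = (0, 0)
    parent = {start: None}          # maps each seen state to the state before it
    queue = deque([start])
    while queue:
        a, b = queue.popleft()
        if a == target or b == target:
            # Walk back through `parent` to rebuild the path.
            path, state = [], (a, b)
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        pour_ab = min(a, cap_b - b)  # amount that fits when pouring a into b
        pour_ba = min(b, cap_a - a)  # amount that fits when pouring b into a
        moves = [
            (cap_a, b), (a, cap_b),          # fill one bottle
            (0, b), (a, 0),                  # empty one bottle
            (a - pour_ab, b + pour_ab),      # pour a into b
            (a + pour_ba, b - pour_ba),      # pour b into a
        ]
        for nxt in moves:
            if nxt not in parent:
                parent[nxt] = (a, b)
                queue.append(nxt)
    return None


steps = measure((3, 5), 4)
print(steps)
```

Each tuple is (litres in the 3-litre bottle, litres in the 5-litre bottle). The search ends with 4 litres in the 5-litre bottle after six moves: fill the 5, pour into the 3, empty the 3, pour the remaining 2 litres into the 3, refill the 5, and top up the 3.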

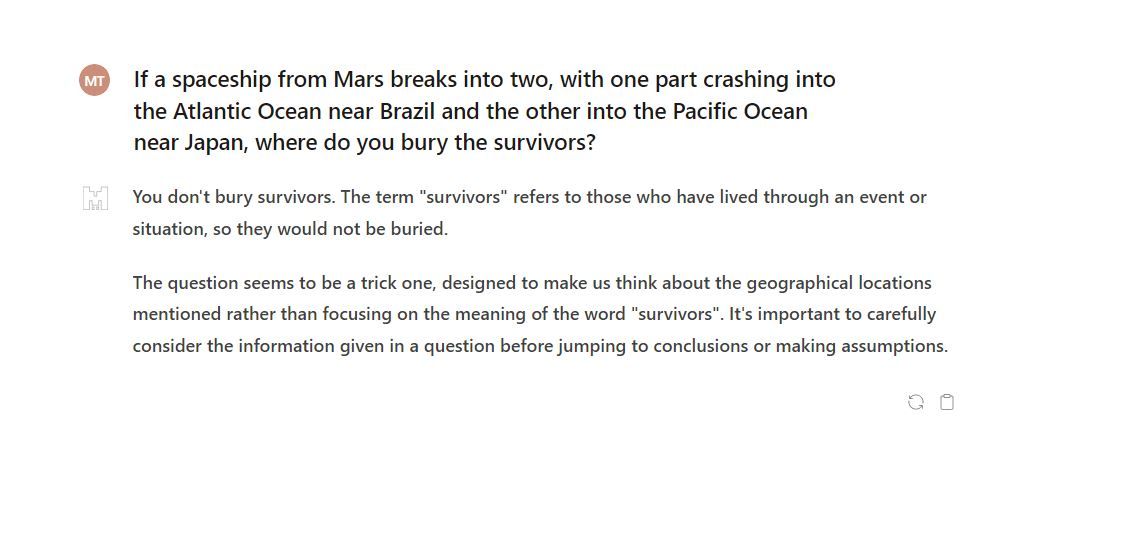

Up next, we asked both chatbots a trick question: “If a spaceship from Mars breaks into two, with one part crashing into the Atlantic Ocean near Brazil and the other into the Pacific Ocean near Japan, where do you bury the survivors?”

ChatGPT saw through the trickery and responded accordingly:

Le Chat also saw through the trickery and responded accordingly:

I tried more trick questions, and both ChatGPT and Le Chat proved quite adept at dealing with common sense and logical reasoning prompts. However, with more complex logic questions, only ChatGPT could provide the right responses.

While Le Chat has generated some buzz as a potential “ChatGPT killer,” our testing shows it still has growing to do before it can truly go toe-to-toe with the heavyweights of the AI chatbot world. Though Le Chat demonstrated impressive capabilities in areas like common sense reasoning, its creative output and coding skills lagged noticeably behind ChatGPT. The French AI upstart certainly shows promise, but the hype machine may be getting a little ahead of itself.

Like many aspiring contenders before it, Le Chat needs continued refinement and training before it’s ready for the big leagues. For now, established chatbots like ChatGPT are still the clear leaders of the AI chatbot world. But the field of competitors is only getting more crowded, so the leaders can’t afford to rest on their laurels.

- Title: Synthesizing Sentences: Comparing Language Bots

- Author: Brian

- Created at : 2024-10-03 02:16:47

- Updated at : 2024-10-08 16:21:32

- Link: https://tech-savvy.techidaily.com/synthesizing-sentences-comparing-language-bots/

- License: This work is licensed under CC BY-NC-SA 4.0.