The Dynamics of Colossal Machine Learning Constructs

Large language models (LLMs) are the underlying technology that has powered the meteoric rise of generative AI chatbots. Tools like ChatGPT, Google Bard, and Bing Chat all rely on LLMs to generate human-like responses to your prompts and questions.

But just what are LLMs, and how do they work? Here we set out to demystify LLMs.

Disclaimer: This post includes affiliate links

If you click on a link and make a purchase, I may receive a commission at no extra cost to you.

What Is a Large Language Model?

In its simplest terms, an LLM is a neural network trained on a massive collection of text. From that text it learns patterns it can use to generate human-like responses to your prompts. The training text comes from a range of sources and can amount to billions of words.

Common sources of training text include:

- Literature: LLM training data often includes enormous amounts of contemporary and classical literature, such as books, poetry, and plays.

- Online content: Training data most often includes a large repository of online content, such as blogs, web pages, forum questions and answers, and other online text.

- News and current affairs: Some, but not all, LLMs can access current news. Others, like GPT-3.5, are limited to information from before their training data cutoff.

- Social Media: Social media represents a huge resource of natural language, and some LLMs may be trained on text from major platforms like Facebook, Twitter, and Instagram.

Of course, having a huge body of text is one thing, but LLMs need to be trained to make sense of it and produce human-like responses. How they do this is what we cover next.

How Do LLMs Work?

How do LLMs use these repositories to create their responses? The first step is to analyze the data using a process called deep learning.

Deep learning is used to identify the patterns and nuances of human language. This includes gaining an understanding of grammar and syntax. Just as importantly, it also includes context, and understanding context is a crucial part of how LLMs work.
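
Real LLMs learn these patterns with deep neural networks (typically transformers) trained on enormous corpora. The Python sketch below uses a far simpler stand-in: a count-based bigram model. This is not deep learning. It only records which word tends to follow which, but it shows the basic idea of learning patterns from text instead of looking answers up. The corpus and the function names `train_bigram_model` and `next_word_probabilities` are hypothetical and exist purely for illustration.

```python
from collections import Counter, defaultdict

# Illustrative only: a count-based bigram model, NOT a deep learning model.

def train_bigram_model(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word in the corpus."""
    model: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that immediately follows it.
        for current, following in zip(words, words[1:]):
            model[current][following] += 1
    return model

def next_word_probabilities(model: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts for `word` into a probability distribution."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

# Hypothetical training text.
corpus = [
    "the bat flew out of the cave",
    "the bat hunted insects at night",
    "he swung the bat at the ball",
]
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "bat"))
```

Even this toy model picks up continuations of “bat” from both the animal sense and the baseball sense. That ambiguity is why context matters.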

Let’s look at an example of how LLMs can use context.

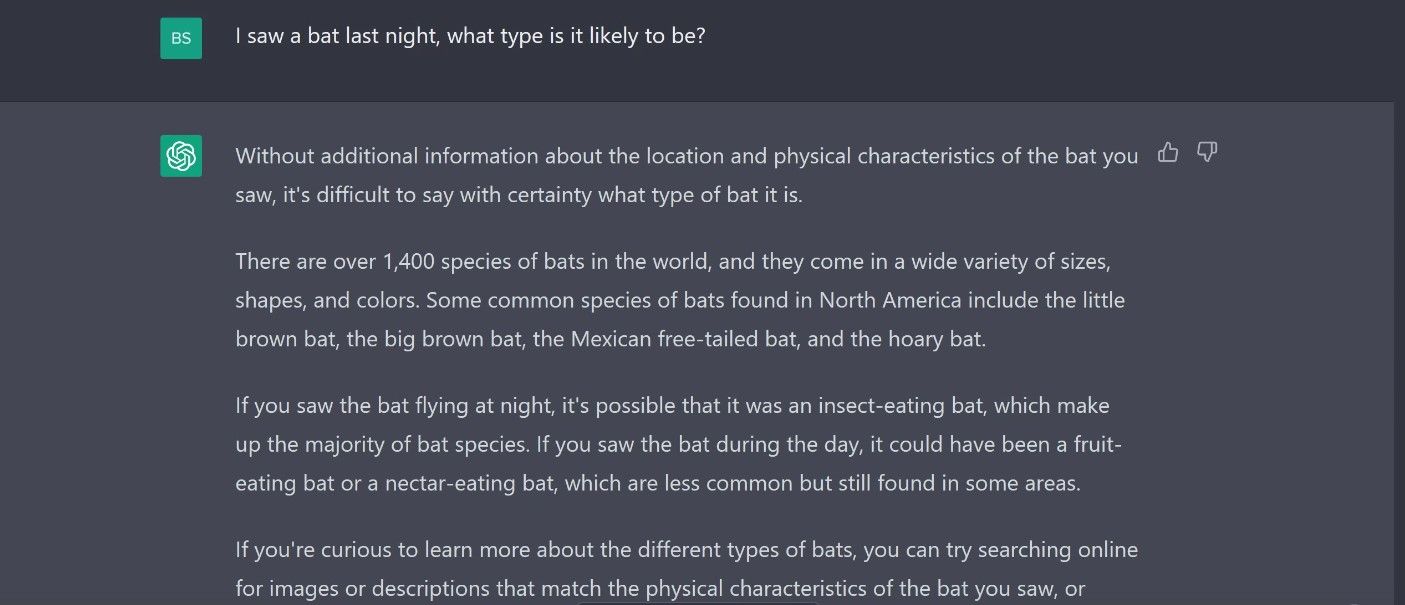

The prompt in the image above mentions seeing a bat at night. From this, ChatGPT understood that we were talking about an animal and not, for instance, a baseball bat. Of course, other chatbots like Bing Chat or Google Bard may answer this completely differently.

However, this contextual understanding isn’t infallible, and as this example shows, sometimes you will need to supply additional information to get the desired response.

In this instance, we deliberately threw a bit of a curveball to demonstrate how easily context is lost. But humans can misunderstand the context of questions too, and it only takes an extra prompt to correct the response.
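
LLMs resolve ambiguity using patterns learned during training, not hand-written rules. The sketch below makes the idea concrete with a much cruder technique: a keyword-overlap heuristic loosely inspired by the classic Lesk algorithm. The `SENSES` word lists and the `guess_sense` function are hypothetical. The sketch also shows why an extra prompt helps: a sentence with no clues stays ambiguous until more context is supplied.

```python
import re
from typing import Optional

# Hypothetical clue words for each meaning of "bat" (illustration only).
SENSES = {
    "animal": {"night", "cave", "wings", "flew", "insects", "fly"},
    "baseball": {"swing", "swung", "ball", "hit", "pitch", "game"},
}

def guess_sense(text: str) -> Optional[str]:
    """Pick the sense whose clue words overlap most with the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    scores = {sense: len(words & clues) for sense, clues in SENSES.items()}
    best = max(scores, key=scores.get)
    # No overlapping clue words means the context is ambiguous.
    # (On a tie, max() keeps the first sense listed in SENSES.)
    return best if scores[best] > 0 else None

print(guess_sense("I saw a bat at night"))              # animal
print(guess_sense("He hit the ball with a bat"))        # baseball
print(guess_sense("I found a bat"))                     # None
print(guess_sense("I found a bat. It was in a cave"))   # animal
```

The last two calls mirror a follow-up prompt. The short sentence gives no usable clue, and adding one more sentence of context is enough to settle the meaning.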

To generate these responses, LLMs use a technique called natural language generation (NLG). This involves examining the input and using the patterns learned from the training data to generate a contextually correct and relevant response.
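
Real LLMs generate text one token at a time. A neural network scores every possible next token, and the model often samples from those scores rather than always taking the top choice. The sketch below keeps only that loop structure and substitutes two simpler parts:

- a hypothetical bigram table (`build_table`) stands in for the network;
- greedy decoding (always picking the most frequent next word, in `generate`) stands in for the sampling strategy.

```python
from collections import Counter, defaultdict

def build_table(corpus: list[str]) -> dict[str, Counter]:
    """Hypothetical stand-in for a trained model: next-word counts."""
    table: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            table[current][following] += 1
    return table

def generate(table: dict[str, Counter], prompt_word: str, max_words: int = 6) -> str:
    """Greedy decoding: repeatedly append the most likely next word."""
    output = [prompt_word]
    for _ in range(max_words):
        candidates = table.get(output[-1])
        if not candidates:
            break  # no learned continuation for this word, so stop
        # most_common breaks ties by first-seen order.
        next_word, _count = candidates.most_common(1)[0]
        output.append(next_word)
    return " ".join(output)

table = build_table([
    "bats hunt insects at night",
    "bats sleep during the day",
    "bats hunt moths at dusk",
])
print(generate(table, "bats"))  # bats hunt insects at night
```

Because greedy decoding always takes the single most likely word, it is deterministic. Swapping in random sampling would make the output vary between runs, which is closer to how chatbots typically behave.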

But LLMs go deeper than this. They can also tailor replies to suit the emotional tone of the input. When combined with contextual understanding, the two facets are the main drivers that allow LLMs to create human-like responses.

To summarize, LLMs combine a massive body of training text with deep learning and NLG techniques to create human-like responses to your prompts. But there are limitations to what this can achieve.

What Are the Limitations of LLMs?

LLMs represent an impressive technological achievement. But the technology is far from perfect, and there are still plenty of limits to what it can achieve. Some of the more notable ones are listed below:

- Contextual understanding: We mentioned this as something LLMs incorporate into their answers. However, they don’t always get it right and are often unable to understand the context, leading to inappropriate or just plain wrong answers.

- Bias: Any biases present in the training data often show up in responses. This includes biases related to gender, race, geography, and culture.

- Common sense: Common sense is difficult to quantify, but humans learn this from an early age simply by watching the world around them. LLMs do not have this inherent experience to fall back on. They only understand what has been supplied to them through their training data, and this does not give them a true comprehension of the world they exist in.

- An LLM is only as good as its training data: Accuracy can never be guaranteed. The old computer adage of “Garbage In, Garbage Out” sums this limitation up perfectly. LLMs are only as good as the quality and quantity of their training data allow them to be.
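
A toy example makes “Garbage In, Garbage Out” concrete. The sketch below uses a made-up, deliberately skewed corpus and a hypothetical `likely_pronoun` helper. The helper simply predicts whichever pronoun most often appears alongside an occupation. Real LLMs are vastly more complex, but the underlying issue is similar: a model that learns from skewed data tends to reproduce the skew.

```python
from collections import Counter
from typing import Optional

def pronoun_counts(corpus: list[str], occupation: str) -> Counter:
    """Count 'he'/'she' in sentences that mention the given occupation."""
    counts: Counter = Counter()
    for sentence in corpus:
        words = set(sentence.lower().split())
        if occupation in words:
            counts.update(words & {"he", "she"})
    return counts

def likely_pronoun(corpus: list[str], occupation: str) -> Optional[str]:
    """Predict the pronoun seen most often alongside the occupation."""
    top = pronoun_counts(corpus, occupation).most_common(1)
    return top[0][0] if top else None

# Made-up, deliberately skewed training data.
corpus = [
    "the engineer said he would fix it",
    "the engineer said he was late",
    "the engineer said she was ready",
    "the nurse said she would help",
    "the nurse said she was tired",
]
print(likely_pronoun(corpus, "engineer"))  # he
print(likely_pronoun(corpus, "nurse"))     # she
```

The predictions say nothing true about engineers or nurses. They only mirror the imbalance in five training sentences.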

There is also an argument that ethical concerns can be considered a limitation of LLMs, but this subject falls outside the scope of this article.

3 Examples of Popular LLMs

The continuing advance of AI is now largely underpinned by LLMs. So while they aren’t exactly a new technology, they have certainly reached a point of critical momentum, and there are now many models.

Here are some of the most widely used LLMs.

1. GPT

Generative Pre-trained Transformer (GPT) is perhaps the most widely known LLM. GPT-3.5 powers the ChatGPT platform used for the examples in this article, while the newest version, GPT-4, is available through a ChatGPT Plus subscription. Microsoft also uses the latest version in its Bing Chat platform.

2. LaMDA

LaMDA (Language Model for Dialogue Applications) is the LLM that Google Bard, Google’s AI chatbot, initially used. Bard first rolled out with what was described as a “lite” version of the model. It was later superseded by Google’s more powerful PaLM 2 model.

3. BERT

BERT stands for Bidirectional Encoder Representations from Transformers. Its bidirectional design lets it take into account the words on both sides of a given word. This differentiates BERT from LLMs like GPT, which process text from left to right.
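
In transformer models, this difference is commonly expressed through an attention mask, which controls which tokens each token can “see”:

- GPT-style models use a causal mask, so a token sees only itself and the tokens before it.
- BERT lets every token see the whole sequence.

The plain-Python sketch below is simplified: real models apply the mask to attention scores inside every layer. The helper names `attention_mask` and `visible_context` are hypothetical.

```python
def attention_mask(num_tokens: int, bidirectional: bool) -> list[list[int]]:
    """mask[i][j] == 1 means token i is allowed to attend to token j."""
    return [
        [1 if bidirectional or j <= i else 0 for j in range(num_tokens)]
        for i in range(num_tokens)
    ]

def visible_context(tokens: list[str], position: int, bidirectional: bool) -> list[str]:
    """Return the tokens that the token at `position` can attend to."""
    row = attention_mask(len(tokens), bidirectional)[position]
    return [token for token, allowed in zip(tokens, row) if allowed]

tokens = ["i", "saw", "a", "bat", "at", "night"]
# GPT-style (causal): "bat" cannot see the later clue word "night".
print(visible_context(tokens, 3, bidirectional=False))  # ['i', 'saw', 'a', 'bat']
# BERT-style (bidirectional): "bat" sees the whole sentence.
print(visible_context(tokens, 3, bidirectional=True))   # ['i', 'saw', 'a', 'bat', 'at', 'night']
```

A causal model can still combine “bat” and “night” when it processes later tokens. The mask only restricts what each position sees as text is processed from left to right.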

Plenty more LLMs have been developed, and offshoots are common from the major LLMs. As they develop, these will continue to grow in complexity, accuracy, and relevance. But what does the future hold for LLMs?

The Future of LLMs

LLMs will undoubtedly shape the way we interact with technology in the future. The rapid uptake of tools like ChatGPT and Bing Chat is a testament to this. In the short term, AI is unlikely to replace you at work. But there is still uncertainty about just how big a part LLMs will play in our lives.

Ethical arguments may yet have a say in how we integrate these tools into society. However, putting this to one side, some of the expected LLM developments include:

- Improved Efficiency: LLMs feature billions of parameters, which makes them incredibly resource hungry. With improvements in hardware and algorithms, they are likely to become more energy-efficient, which should also speed up response times.

- Improved Contextual Awareness: LLMs can get better with use, as developers refine them with usage data and user feedback, for example through reinforcement learning from human feedback (RLHF). As the technology progresses, this should bring improvements in language capabilities and contextual awareness.

- Trained for Specific Tasks: The jack-of-all-trades tools that are the public face of LLMs are prone to errors. But as LLMs develop and are trained for specific needs, they can play a large role in fields like medicine, law, finance, and education.

- Greater Integration: LLMs could become personal digital assistants. Think of Siri on steroids, and you get the idea. LLMs could become virtual assistants that help you with everything from suggesting meals to dealing with your correspondence.

These are just a few of the areas where LLMs are likely to become a larger part of the way we live.
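
A quick back-of-the-envelope calculation shows why parameter counts make LLMs so resource hungry. The sketch below estimates the memory needed just to store a model’s weights, using GPT-3’s published figure of 175 billion parameters. It deliberately ignores everything else a running model needs, such as activations, caching, and serving overhead. `weights_memory_gb` is a hypothetical helper. Storing each parameter in fewer bytes is one way hardware and algorithm improvements can make models more efficient, and it shrinks the total proportionally.

```python
def weights_memory_gb(num_parameters: float, bytes_per_parameter: int) -> float:
    """Memory needed just to hold model weights, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

GPT3_PARAMETERS = 175e9  # GPT-3's published parameter count

# Weights only: activations, caches, and other overhead are ignored here.
for label, nbytes in [("32-bit floats", 4), ("16-bit floats", 2), ("8-bit integers", 1)]:
    print(f"{label}: {weights_memory_gb(GPT3_PARAMETERS, nbytes):,.0f} GB")
# 32-bit floats: 700 GB
# 16-bit floats: 350 GB
# 8-bit integers: 175 GB
```

Even at one byte per parameter, the weights alone exceed the memory of typical consumer hardware. This is why efficiency gains matter so much for wider adoption.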

LLMs Transforming and Educating

LLMs are opening up an exciting world of possibilities. The rapid rise of chatbots such as ChatGPT, Bing Chat, and Google Bard is evidence of the resources being poured into the field.

All this investment is likely to make these tools more powerful, versatile, and accurate. Their potential applications are vast, and at the moment, we are only scratching the surface of an incredible new resource.

- Title: The Dynamics of Colossal Machine Learning Constructs

- Author: Brian

- Created at : 2024-10-23 18:21:46

- Updated at : 2024-10-26 23:02:47

- Link: https://tech-savvy.techidaily.com/the-dynamics-of-colossal-machine-learning-constructs/

- License: This work is licensed under CC BY-NC-SA 4.0.