The Risks of Automated Text Reduction by ChatBots Like GPT


Quick Links

- ChatGPT Can Ignore or Misunderstand Your Prompt

- ChatGPT Can Omit Information You Provide

- ChatGPT Can Use Wrong or False Alternatives

- ChatGPT Can Get Facts Wrong

- ChatGPT Can Get Word or Character Limits Wrong

Key Takeaways

- ChatGPT can misinterpret prompts, deviate from instructions, and fail to recognize specific words. Be precise and monitor its responses.

- ChatGPT may omit details or alter content if not given clear instructions. Plan prompts carefully to ensure accurate summaries.

- ChatGPT can use wrong alternatives, omit or alter elements, and get facts wrong. Edit its output and structure prompts for the best results.


There are limits to what ChatGPT knows, and it is built to deliver what you ask for, even when the result is wrong. This means ChatGPT makes mistakes, and some of them are common, especially when it’s summarizing information and you’re not paying attention.

ChatGPT Can Ignore or Misunderstand Your Prompt

If you give the chatbot lots of data to sort through, or even just a complex prompt, it’s likely to deviate from your instructions and follow its own interpretation of them.

Making too many demands at once is one of several ChatGPT prompt mistakes to avoid. But the problem can also come down to the chatbot not recognizing a particular word or phrase you use.

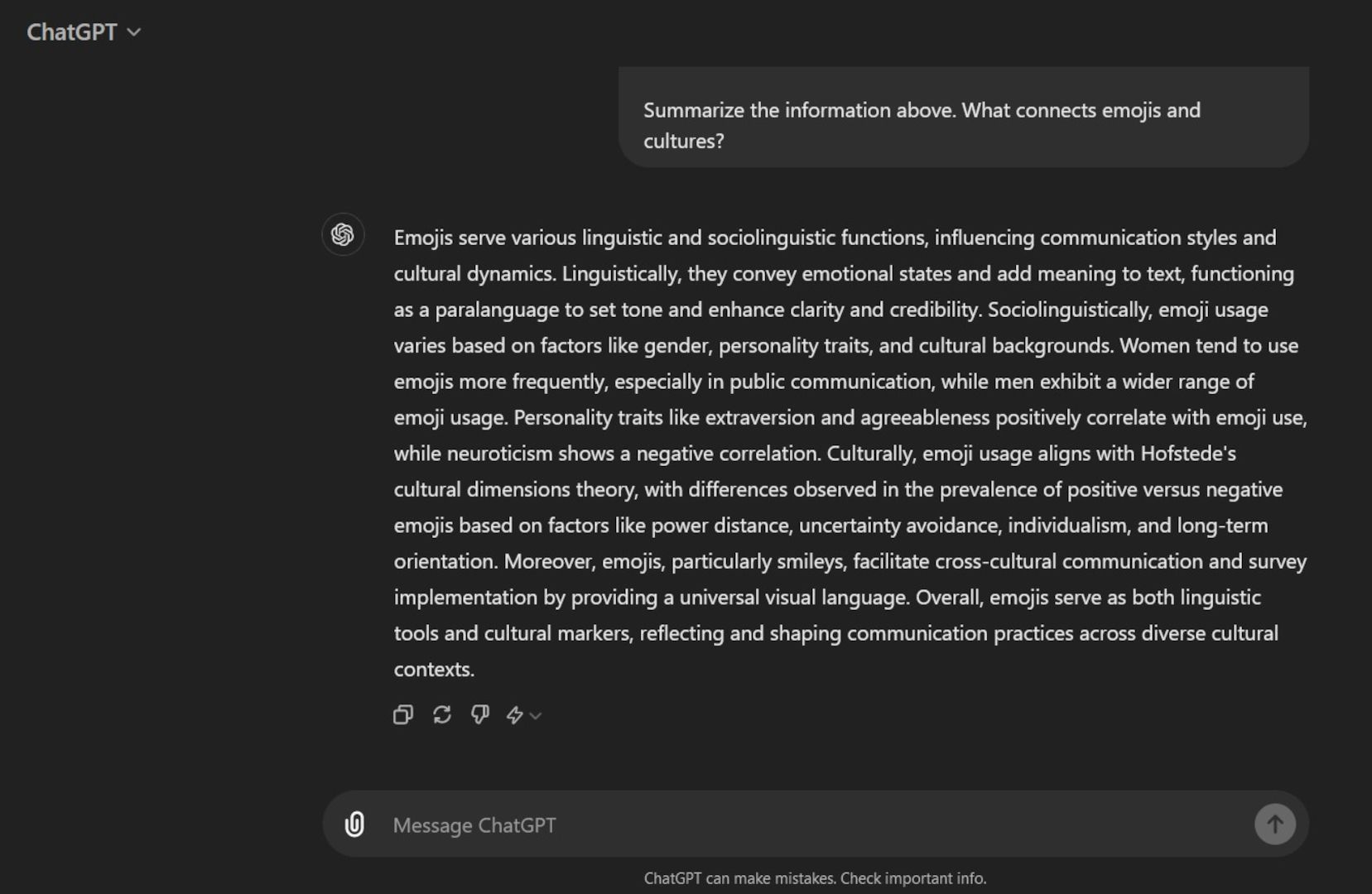

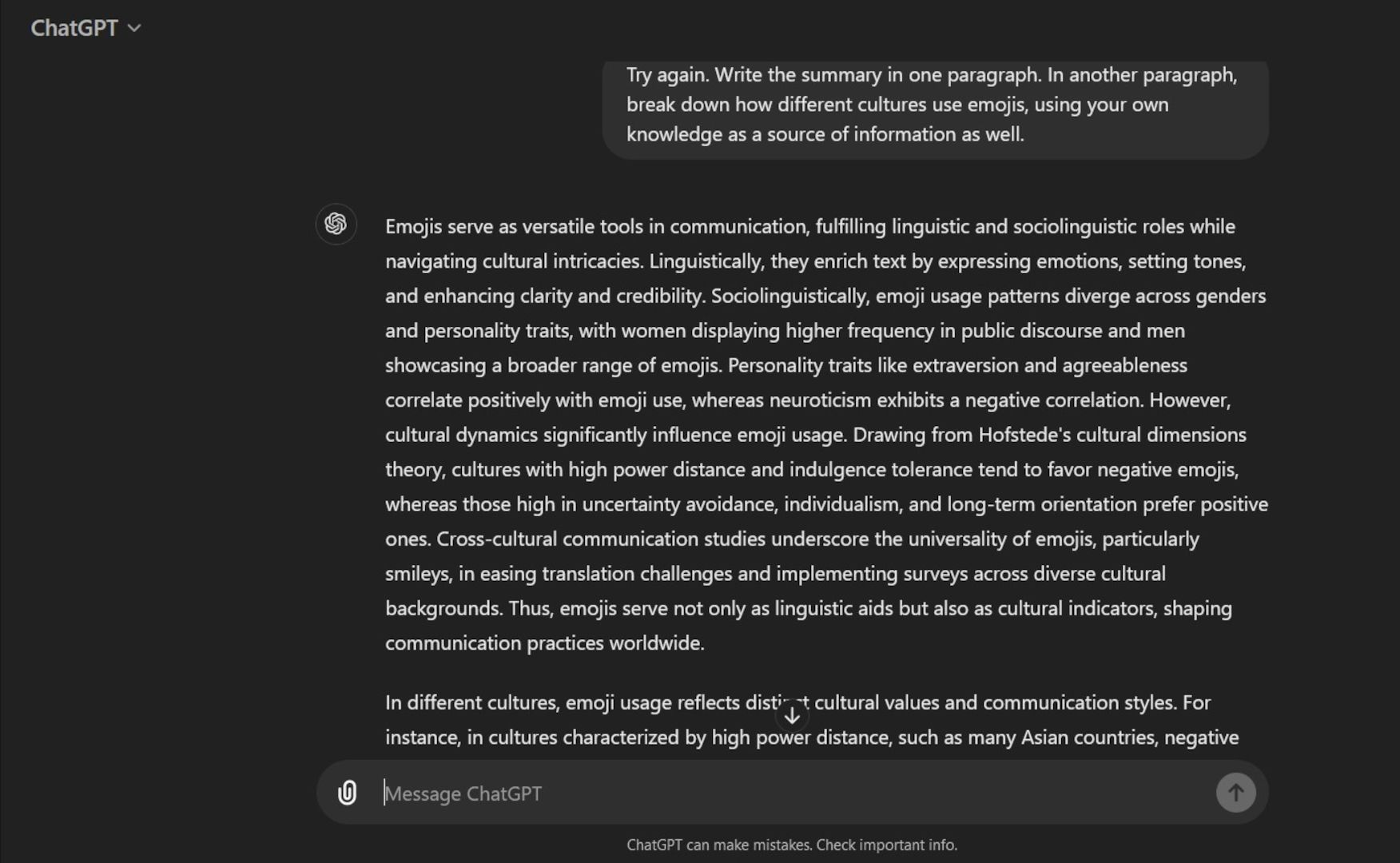

In this example, ChatGPT was given a lot of information about the linguistic function of emojis. The intentionally simple prompt asked the chatbot to summarize everything and explain the links between emojis and cultures.

Instead of handling the two requests separately, the chatbot merged both answers into one paragraph. A follow-up prompt with clearer instructions also asked it to draw on its own knowledge.

This is why you should keep your instructions precise, provide context when necessary, and keep an eye on ChatGPT’s results. If you flag mistakes immediately, the chatbot can produce something more accurate.
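One way to apply this advice is to build prompts from a template that lists each request as its own numbered task, so two instructions are less likely to be merged into one answer. The Python sketch below is only an illustration: `build_prompt` is a hypothetical helper, not part of any OpenAI library, and the exact instruction wording is an assumption you should adapt to your own work.

```python
def build_prompt(source_text, tasks, context=""):
    """Hypothetical helper: assemble a prompt that numbers each task separately."""
    lines = []
    if context:
        # Optional background the model may not infer on its own.
        lines.append(f"Context: {context}")
    lines.append("Answer each numbered task in its own section:")
    for number, task in enumerate(tasks, start=1):
        lines.append(f"{number}. {task}")
    # Ask the model to flag uncertainty instead of quietly filling gaps.
    lines.append("If you are unsure about a name or fact, say so instead of guessing.")
    lines.append("")
    lines.append("Text:")
    lines.append(source_text)
    return "\n".join(lines)


# Example based loosely on the emoji test described above.
prompt = build_prompt(
    source_text="(paste the material about emojis here)",
    tasks=[
        "Summarize the text.",
        "Explain the links between emojis and cultures, using the text and your own knowledge.",
    ],
)
print(prompt)
```

Numbering the tasks also makes it easy to point out which one the chatbot skipped when you send a follow-up prompt.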

ChatGPT Can Omit Information You Provide

ChatGPT is smart, but it’s not a good idea to bombard it with details about a particular topic without specifying what is or isn’t important.

The problem here is that, without proper instructions, the algorithm will pick and choose what information it considers relevant to the report or list you need.

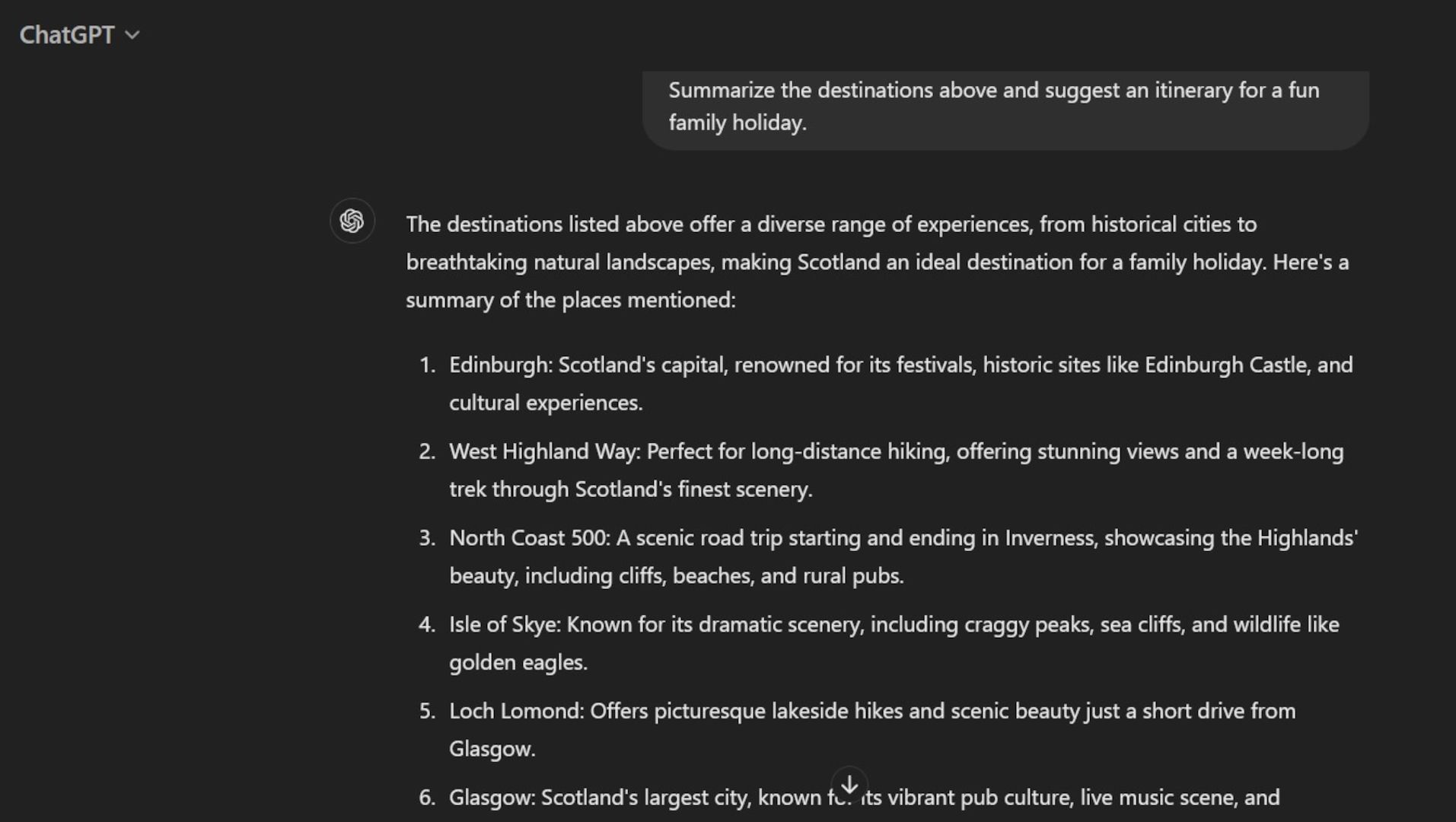

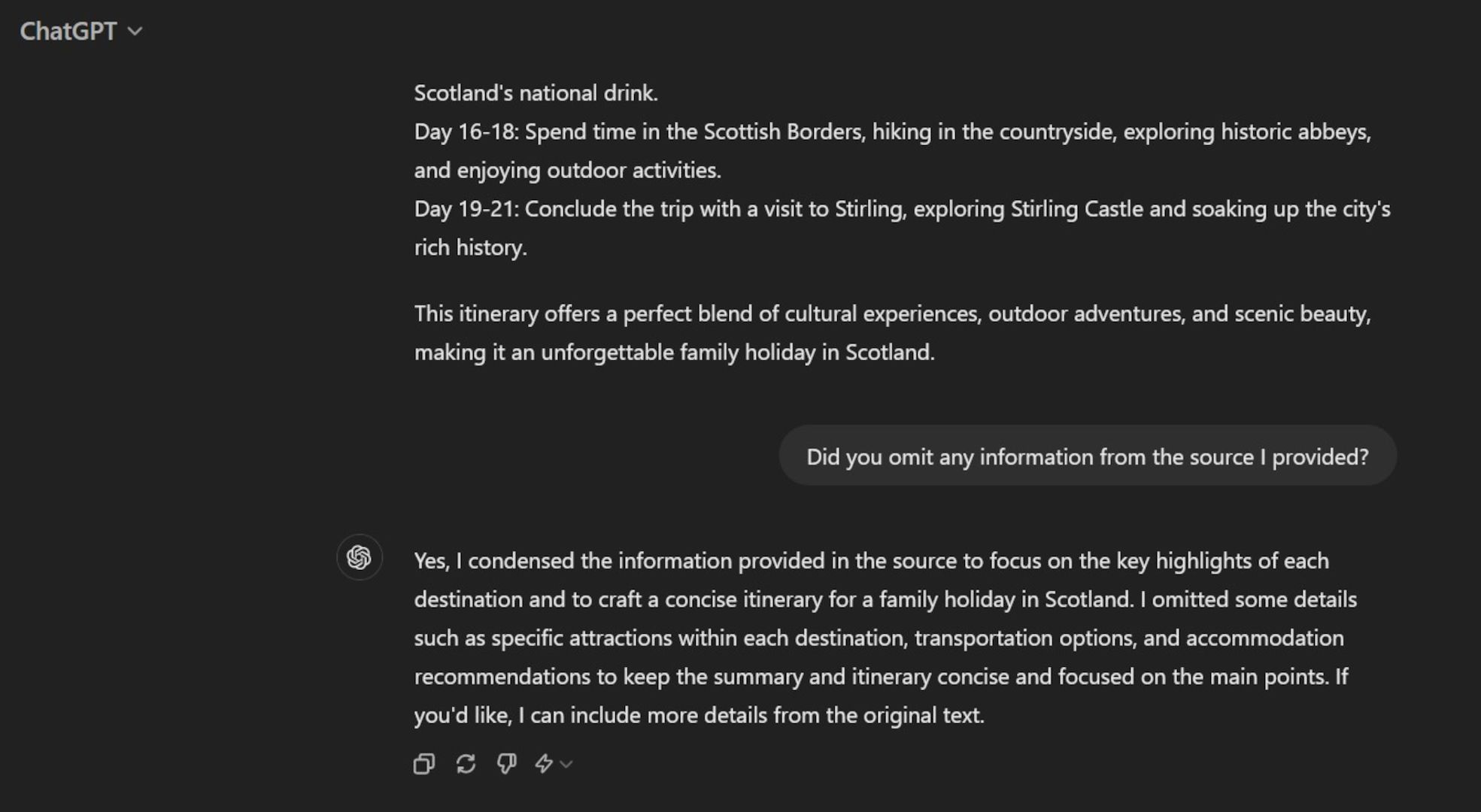

In one test, ChatGPT was asked to summarize a long text on must-see Scottish destinations and create an itinerary for a family vacation.

When asked whether it had omitted any details, it admitted that it had left out certain information, such as specific attractions and transportation options, because conciseness was its goal.

If left to its own devices, there’s no guarantee that ChatGPT will use the details you expect. So, plan and phrase your prompts carefully to ensure the chatbot’s summary is spot on.
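A quick way to catch omissions is to list the details you consider essential and check whether each one appears in the summary. The sketch below assumes you already have the summary as plain text; `missing_details` is a hypothetical helper, and the Scottish place names are illustrative, not the data from the test above. It only detects details that are absent or reworded, so it cannot tell you whether a detail that does appear was described correctly.

```python
import re


def missing_details(summary, required):
    """Return the required details that do not appear in the summary.

    Matching is case-insensitive and requires each detail to stand on its
    own (not inside a longer word), so "train" will not match "restrained".
    """
    missing = []
    for detail in required:
        # (?<!\w) and (?!\w) act like word boundaries but also work for
        # details that start or end with punctuation.
        pattern = r"(?<!\w)" + re.escape(detail) + r"(?!\w)"
        if not re.search(pattern, summary, flags=re.IGNORECASE):
            missing.append(detail)
    return missing


summary = "Day 1: Edinburgh Castle. Day 2: drive to Loch Ness."
must_include = ["Edinburgh Castle", "Loch Ness", "Isle of Skye", "train"]
print(missing_details(summary, must_include))  # ['Isle of Skye', 'train']
```

Anything the check reports is a candidate for a follow-up prompt that names the missing details explicitly.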

ChatGPT Can Use Wrong or False Alternatives

OpenAI has updated GPT-4o with data available up to October 2023, while GPT-4 Turbo’s cut-off is December of the same year. However, the model’s knowledge is neither infinite nor reliable for real-time facts; it doesn’t know everything about the world. Furthermore, it won’t always reveal that it lacks data on a particular subject unless you ask it directly.

When summarizing or enriching text that contains such obscure references, ChatGPT is likely to replace them with alternatives it understands or fabricate their details.

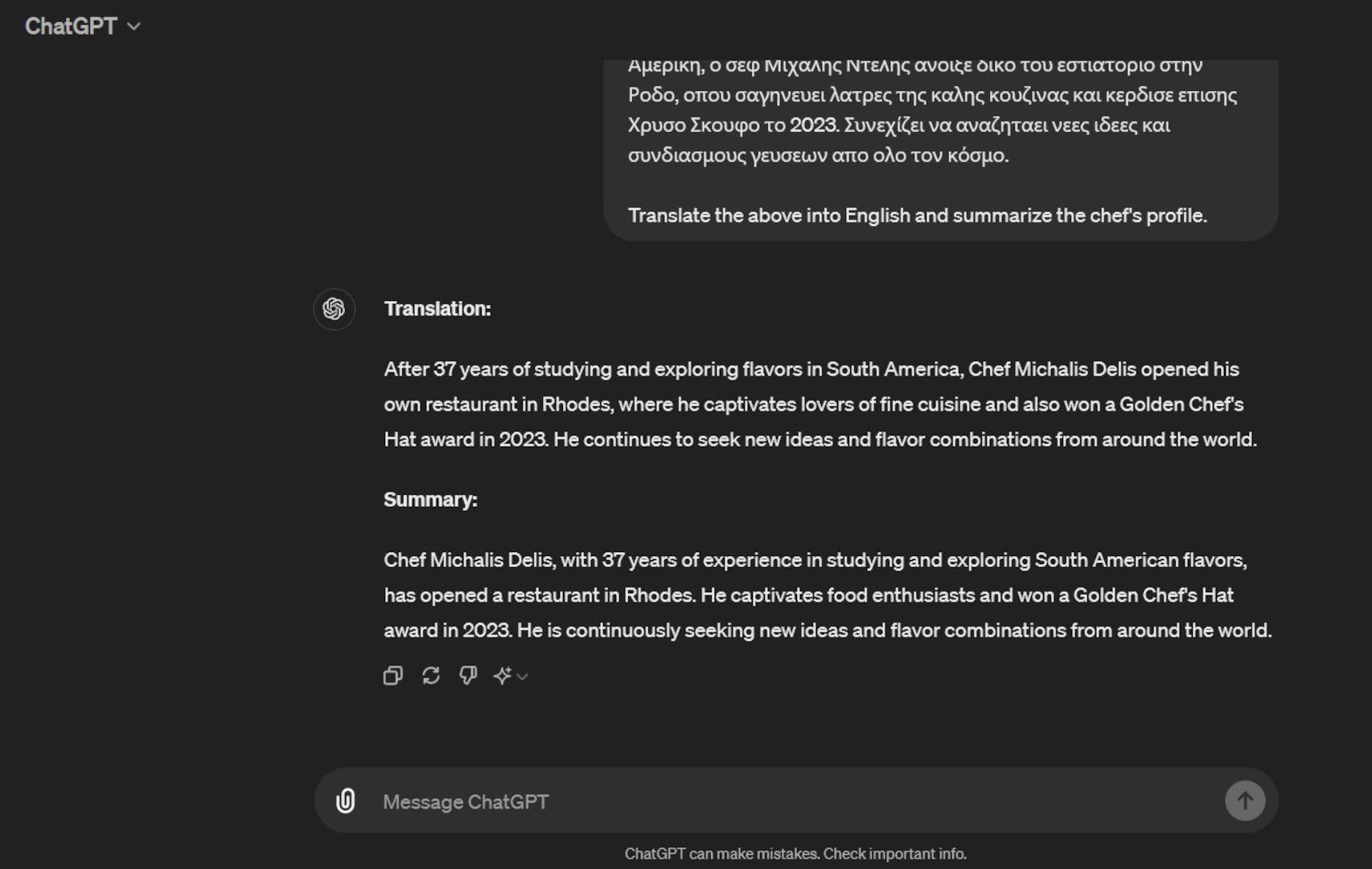

The following example involves a translation into English. ChatGPT didn’t understand the Greek name for the Toque d’Or awards, but instead of highlighting the problem, it just offered a literal and wrong translation.

Company names, books, awards, research links, and other elements can disappear or be altered in the chatbot’s summary. To avoid major mistakes, be aware of ChatGPT’s content creation limits.

ChatGPT Can Get Facts Wrong

It’s important to learn all you can about how to avoid mistakes with generative AI tools. As the example above demonstrates, one of the biggest problems with ChatGPT is that it lacks certain facts or has learned them wrong. This can then affect any text it produces.

If you ask for a summary of various data points that contain facts or concepts unfamiliar to ChatGPT, the algorithm can describe them inaccurately.

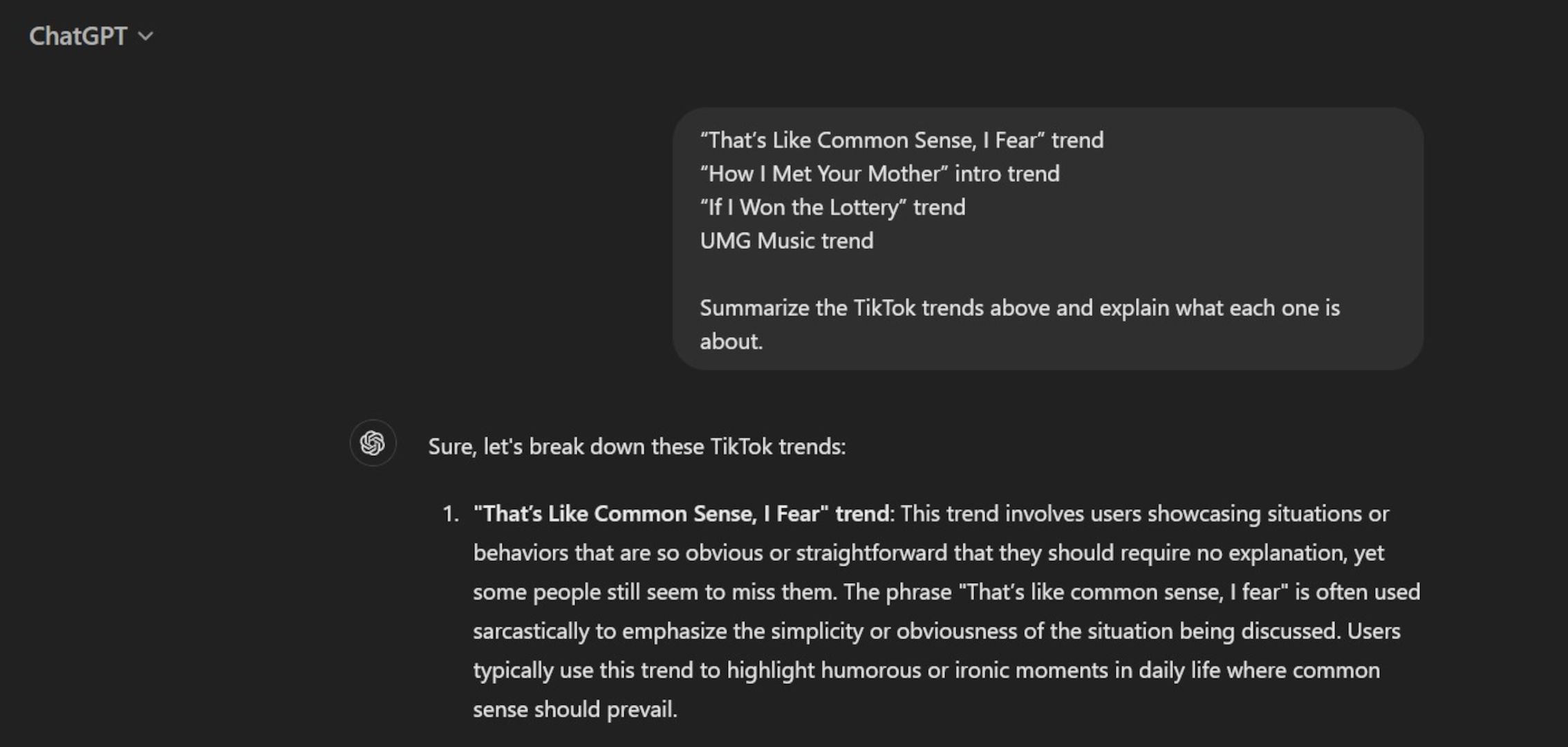

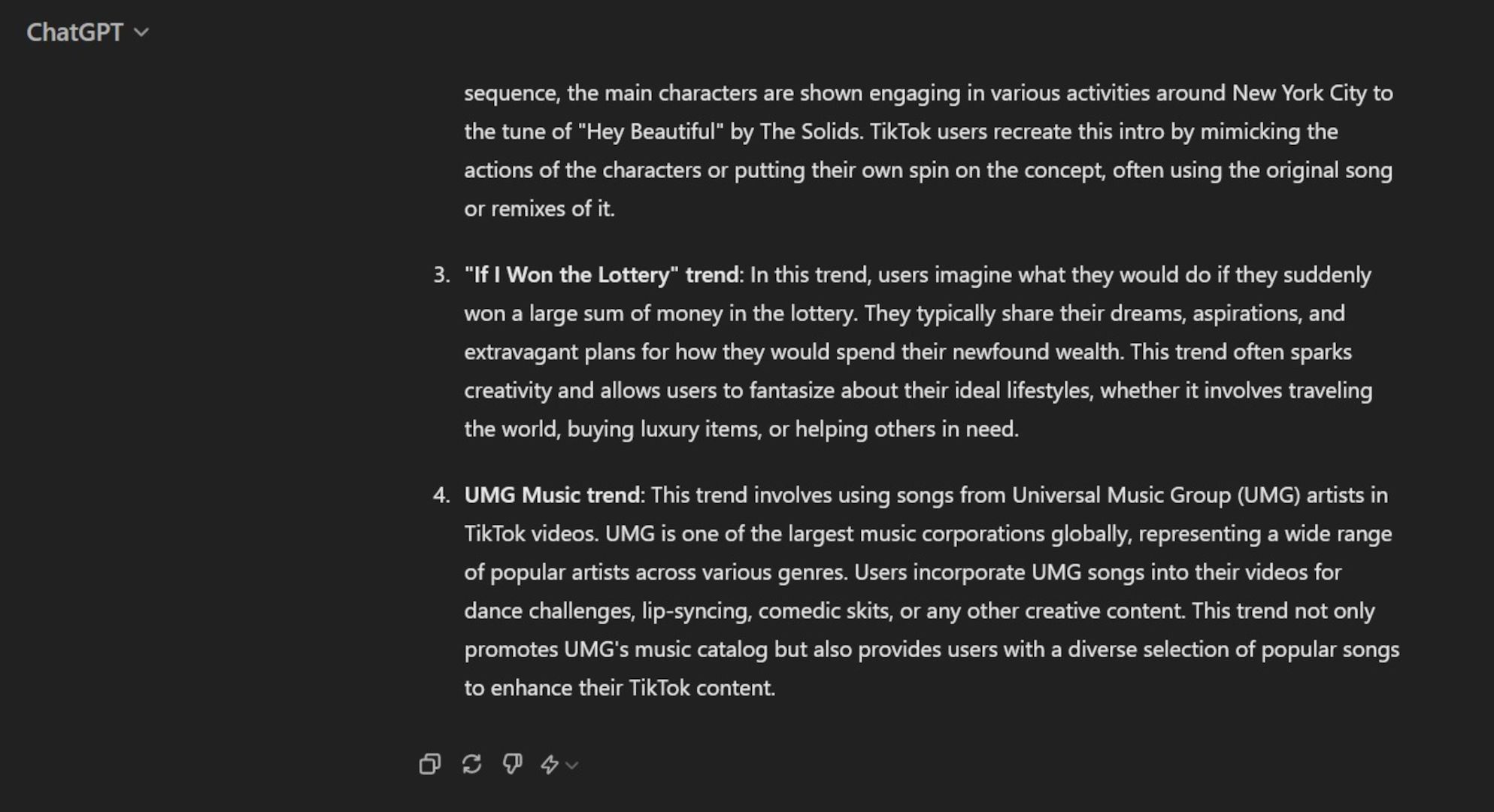

In this example, the prompt asked ChatGPT to summarize four TikTok trends and explain what each one entails.

Most of the explanations were slightly wrong or lacked specifics about what posters must do. The description of the UMG Music trend was especially misleading: the trend changed after UMG’s catalogue was removed from TikTok, and users now post videos to criticize UMG rather than support it, something ChatGPT doesn’t know.

The best solution is to not blindly trust AI chatbots with your text. Even if ChatGPT compiled information you provided yourself, make sure you edit everything it produces, check its descriptions and claims, and make a note of any facts it gets wrong. Then, you’ll know how to structure your prompts for the best results.

ChatGPT Can Get Word or Character Limits Wrong

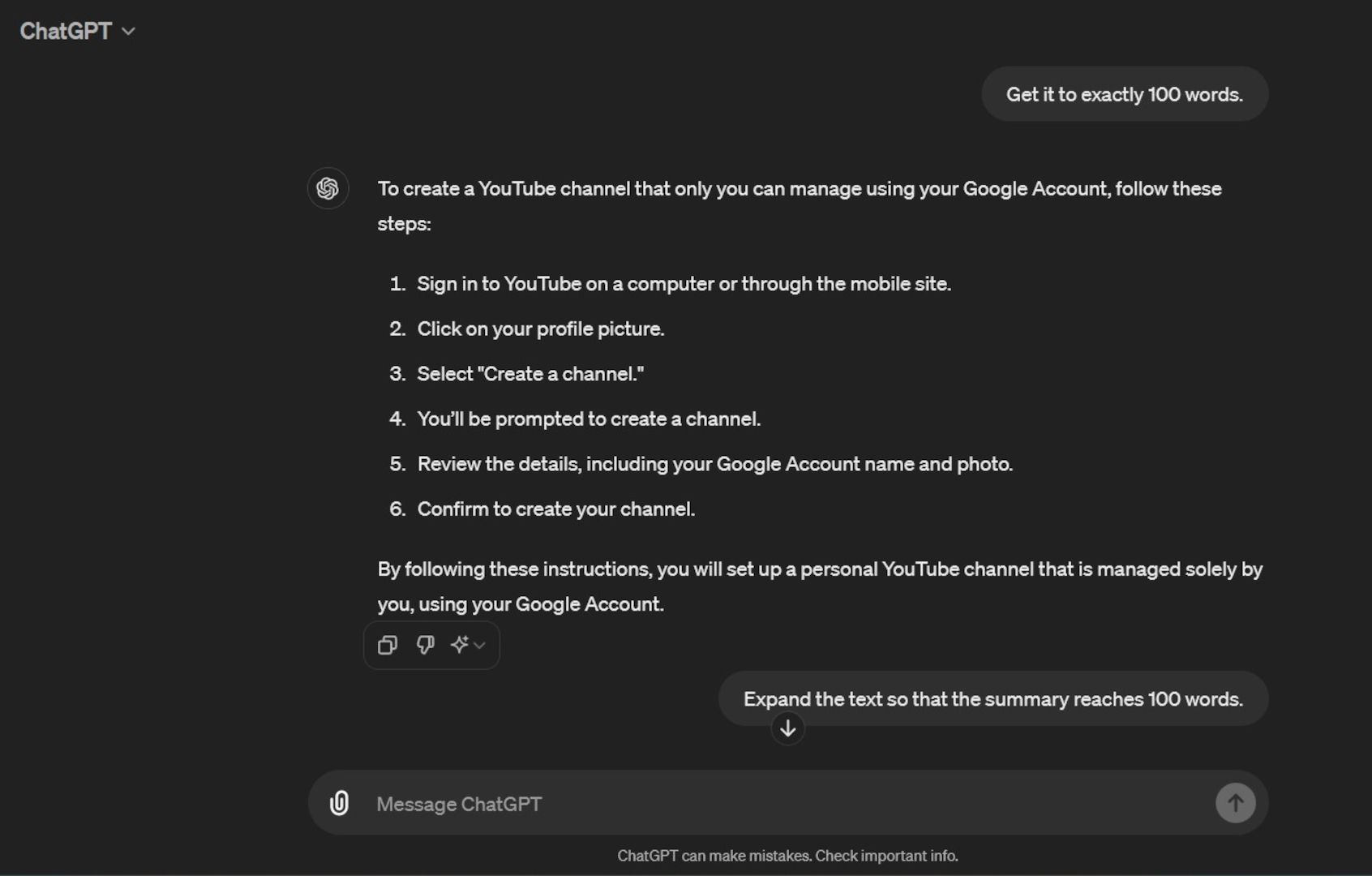

As much as OpenAI enhances ChatGPT with new features, it still seems to struggle with basic instructions, such as sticking to a specific word or character limit.

In the test shown, ChatGPT needed several prompts and still either fell short of or exceeded the required word count.

It’s not the worst mistake to expect from ChatGPT. But it’s one more factor to consider when proofreading the summaries it creates.

Be specific about how long you want the content to be. You may need to add or delete some words here and there. It’s worth the effort if you’re dealing with projects with strict word count rules.
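Rather than trusting ChatGPT’s own claim about length, you can count the words and characters yourself. The Python sketch below splits on whitespace, which is an assumption: word processors and publishing tools may count hyphenated words, numbers, or URLs differently, so treat the result as an approximation of whichever rule your project uses. `length_report` is a hypothetical helper.

```python
from typing import Optional


def length_report(text: str, target_words: Optional[int] = None,
                  max_chars: Optional[int] = None) -> dict:
    """Count words and characters and compare them with optional limits."""
    words = len(text.split())  # whitespace-separated tokens
    chars = len(text)          # includes spaces and punctuation
    report = {"words": words, "characters": chars}
    if target_words is not None:
        # Negative means the text is short of the target; positive means over.
        report["word_difference"] = words - target_words
    if max_chars is not None:
        report["characters_over"] = max(0, chars - max_chars)
    return report


summary = "ChatGPT summaries need a quick length check."
print(length_report(summary, target_words=10, max_chars=40))
# {'words': 7, 'characters': 44, 'word_difference': -3, 'characters_over': 4}
```

The signed word difference tells you at a glance whether to add or trim words before the summary goes out.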

Generally speaking, ChatGPT is fast, intuitive, and constantly improving, but it still makes mistakes. Unless you want strange references or omissions in your content, don’t completely trust ChatGPT to summarize your text.

The cause usually involves missing or distorted facts in its data pool. It is also designed to answer automatically, without always checking for accuracy. If ChatGPT owned up to the problems it encountered, its reliability would increase. For now, the best course of action is to develop your own content with ChatGPT as your handy assistant who needs frequent supervision.


- Title: The Risks of Automated Text Reduction by ChatBots Like GPT

- Author: Brian

- Created at : 2024-11-14 18:01:10

- Updated at : 2024-11-17 18:52:48

- Link: https://tech-savvy.techidaily.com/the-risks-of-automated-text-reduction-by-chatbots-like-gpt/

- License: This work is licensed under CC BY-NC-SA 4.0.