The Role of SHAP Explainer in AI Interpretability

Artificial intelligence (AI) research and deployment companies like OpenAI continually release new tools for the benefit of humanity. Alongside ChatGPT, DALL-E, Point-E, and other successful tools, OpenAI has released Shap-E, an innovative new model.

So what is OpenAI’s Shap-E, and what can it do for you?

What Is OpenAI’s Shap-E?

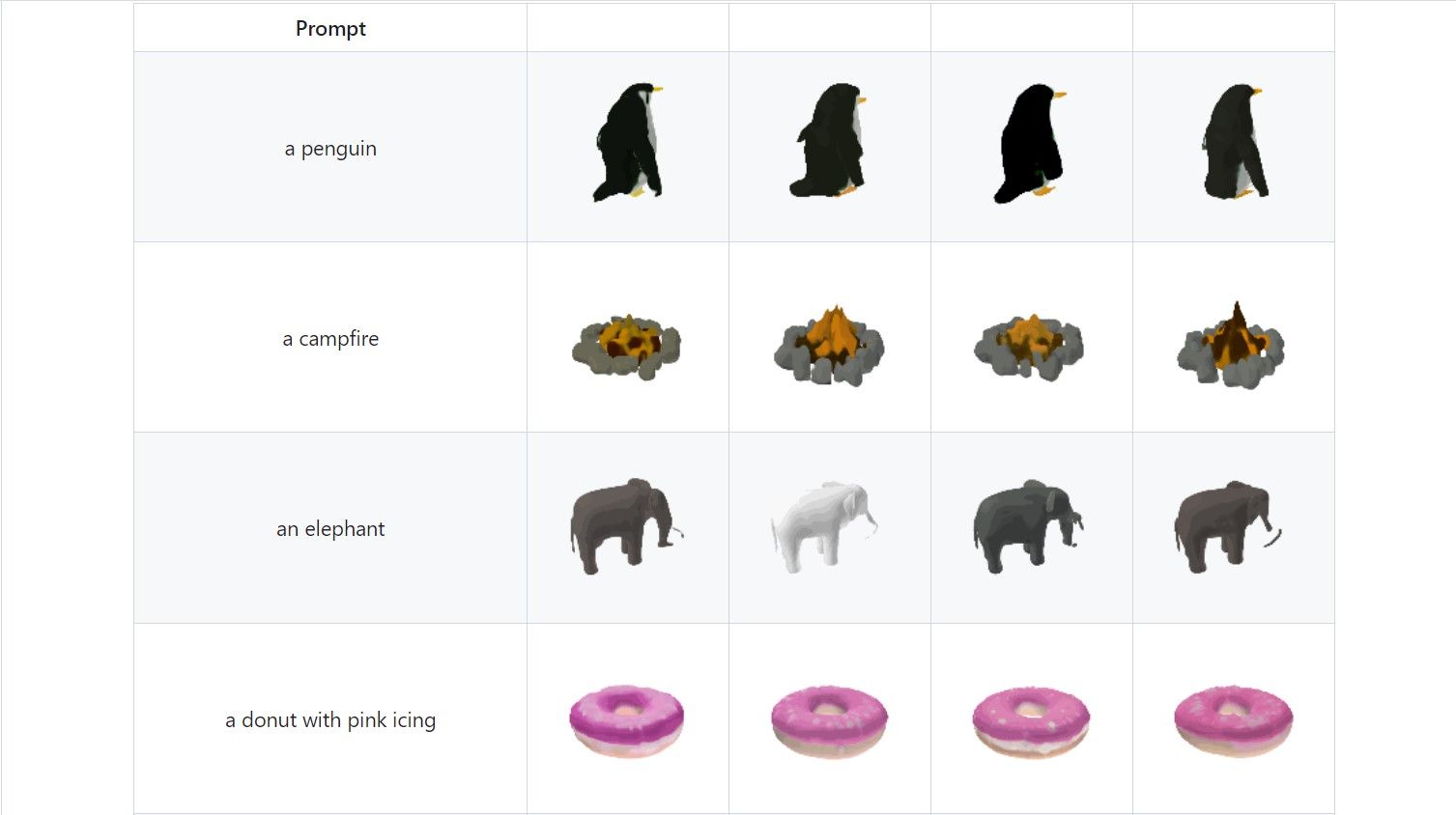

In May 2023, OpenAI researchers Alex Nichol and Heewoo Jun released a paper announcing Shap-E, the company’s latest innovation. Shap-E is trained on a large dataset of paired 3D assets and text, and it can generate 3D models from either text or images. It is similar to DALL-E, which creates 2D images from text, but Shap-E produces 3D assets.

Shap-E is built from two components: an encoder that maps each 3D asset in its training data to the parameters of an implicit function (a function that describes a shape at any point in 3D space), and a conditional diffusion model trained on the encoder’s outputs. A conditional diffusion model is a generative model that starts from random noise and gradually refines it, removing noise and adding detail step by step, guided by a conditioning input such as a text prompt or an image.

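By combining these two components, Shap-E can generate diverse 3D models that match the given text or image input. Because each output can be rendered as either a textured mesh or a neural radiance field (NeRF), the models can be viewed from different angles and under different lighting conditions.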
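To make the “start from noise and gradually refine it” idea concrete, here is a toy sketch of a DDPM-style reverse (denoising) loop on a three-number vector. It is not Shap-E’s code: the `TARGETS` table and the `oracle_noise` function are hypothetical stand-ins for what a trained, prompt-conditioned network would learn, and the linear noise schedule is an assumption chosen only for illustration.

```python
import math
import random

# Hypothetical conditioning table: a real model learns, via a neural network,
# what data matching a prompt looks like; here each prompt maps to a fixed target.
TARGETS = {
    "a chair": [0.2, -0.5, 0.9],
    "a lamp": [-0.7, 0.3, 0.1],
}


def linear_schedule(steps, beta_start=1e-4, beta_end=0.2):
    """Return per-step noise levels (betas) and their cumulative products (alpha_bars)."""
    betas = [beta_start + (beta_end - beta_start) * i / (steps - 1) for i in range(steps)]
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return betas, alpha_bars


def oracle_noise(x_t, x0, alpha_bar):
    """Stand-in for the trained network: recovers the noise in x_t exactly
    because it is handed the clean target x0 (a real model only estimates it)."""
    s, n = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(xi - s * ci) / n for xi, ci in zip(x_t, x0)]


def sample(prompt, steps=50, seed=0):
    """Run the reverse diffusion loop for a prompt, starting from pure noise."""
    rng = random.Random(seed)
    x0 = TARGETS[prompt]
    betas, alpha_bars = linear_schedule(steps)
    x = [rng.gauss(0.0, 1.0) for _ in x0]  # start from pure Gaussian noise
    for t in reversed(range(steps)):
        beta, alpha_bar = betas[t], alpha_bars[t]
        eps = oracle_noise(x, x0, alpha_bar)
        # DDPM mean update: remove this step's share of the predicted noise.
        x = [(xi - beta / math.sqrt(1.0 - alpha_bar) * ei) / math.sqrt(1.0 - beta)
             for xi, ei in zip(x, eps)]
        if t > 0:
            # Re-inject a little fresh noise on every step except the last.
            x = [xi + math.sqrt(beta) * rng.gauss(0.0, 1.0) for xi in x]
    return x


print(sample("a chair"))  # very close to TARGETS["a chair"]
```

Because the oracle knows the clean target exactly, the loop always lands on it. A real network only estimates the noise, so each run produces a new sample consistent with the prompt rather than one fixed answer, and Shap-E’s actual sampler and network are far more elaborate than this.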

How You Can Use OpenAI’s Shap-E

Shap-E isn’t offered as a hosted app like other OpenAI tools, but its model weights, inference code, and samples are available for download on the Shap-E GitHub page.

You can download the Shap-E code for free and install it on your computer with Python’s pip package manager. Because Shap-E is very resource-intensive, an NVIDIA GPU is strongly recommended; generation on a CPU alone is much slower.

After generating a model, you can save it as a mesh file (the sample notebooks write PLY and OBJ files) and open it in a 3D viewer such as Microsoft Paint 3D. You can also convert the mesh into an STL file if you want to print it on a 3D printer.
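STL, the format most 3D-printing slicers accept, stores a mesh as a flat list of triangles, each with a facet normal. If you already have a mesh’s vertex and face lists (for example, after loading an exported PLY or OBJ file with a mesh library), a minimal sketch of writing the ASCII variant of STL could look like this. The function names `facet_normal` and `to_ascii_stl` are hypothetical, and in practice a mesh library or slicer can usually do this conversion for you.

```python
import math


def facet_normal(a, b, c):
    """Unit normal of triangle (a, b, c) from the cross product (b - a) x (c - a);
    counter-clockwise vertex order gives a normal pointing toward the viewer."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = math.sqrt(sum(x * x for x in n))
    if length == 0.0:
        return [0.0, 0.0, 0.0]  # degenerate (zero-area) triangle
    return [x / length for x in n]


def to_ascii_stl(vertices, faces, name="shape"):
    """Serialize a triangle mesh (vertex list + index triples) as ASCII STL text."""
    lines = [f"solid {name}"]
    for i, j, k in faces:
        a, b, c = vertices[i], vertices[j], vertices[k]
        nx, ny, nz = facet_normal(a, b, c)
        lines.append(f"  facet normal {nx:e} {ny:e} {nz:e}")
        lines.append("    outer loop")
        for p in (a, b, c):
            lines.append(f"      vertex {p[0]:e} {p[1]:e} {p[2]:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


# Usage: write a single right triangle to disk.
# with open("triangle.stl", "w") as f:
#     f.write(to_ascii_stl([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [(0, 1, 2)]))
```

ASCII STL is easy to inspect by eye; binary STL is more compact, which matters for the large meshes a 3D printer typically receives.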

You can also report issues and find solutions to issues already raised by others on the Shap-E GitHub page.

What You Can Do With OpenAI’s Shap-E

Shap-E lets you express complex ideas as visual, three-dimensional representations. The potential applications are broad, especially since visuals often have a more far-reaching effect than text.

As an architect, you can use Shap-E to create 3D models of buildings and structures from written descriptions, specifying the structures’ dimensions, materials, colors, and styles in simple sentences. For example, you can prompt it with “Make a skyscraper with 60 floors and glass balustrades” and, if you like the results, export them to other software for further editing.

Game developers and animation artists can enrich virtual environments and visual experiences by creating detailed 3D objects and characters. In engineering, you can describe the components, specifications, and functions of machinery and equipment and get 3D models of them before building physical prototypes.

Moreover, even in fields like education, Shap-E can help educators communicate complex and abstract ideas to their students in subjects like biology, geometry, and physics.

Although it is still a work in progress, Shap-E is a step ahead of OpenAI’s Point-E, which produces 3D point clouds from text prompts. Point clouds are limited in expressiveness and resolution, often producing blurry or incomplete shapes.
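The Shap-E paper describes the model as generating the parameters of implicit functions, which can be evaluated at any point in space, whereas a point cloud is a fixed, finite set of points. The toy sketch below contrasts the two using a sphere. The sphere’s signed distance function and the helper names are hypothetical stand-ins; Shap-E’s own implicit functions are neural networks, not closed-form formulas.

```python
import math
import random


def sphere_sdf(x, y, z, radius=1.0):
    """Implicit function: signed distance to a sphere (negative inside,
    zero on the surface, positive outside). It can be queried anywhere."""
    return math.sqrt(x * x + y * y + z * z) - radius


def sample_point_cloud(n, radius=1.0, seed=0):
    """Point-cloud representation: n surface points, and no detail beyond them."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        # Normalizing a Gaussian vector gives a uniformly random direction.
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        points.append(tuple(radius * c / norm for c in v))
    return points


def estimate_volume(sdf, resolution):
    """Approximate the enclosed volume by evaluating the implicit function on a
    resolution^3 grid over [-1.5, 1.5]^3; the resolution is chosen freely."""
    step = 3.0 / (resolution - 1)
    coords = [-1.5 + i * step for i in range(resolution)]
    inside = sum(1 for x in coords for y in coords for z in coords
                 if sdf(x, y, z) < 0.0)
    return inside * step ** 3  # each grid point stands for one step^3 cell


cloud = sample_point_cloud(500)
print(len(cloud), estimate_volume(sphere_sdf, 61))
```

Raising the grid resolution lets you query the same implicit function in finer detail, while the 500-point cloud never gains detail beyond its 500 points.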

Generate 3D Models Using OpenAI’s Shap-E

Shap-E is an impressive demonstration of AI’s ability to create 3D content from natural language or images. With it, you can create 3D objects for computer games, interactive VR experiences, prototypes, and other purposes. Output quality isn’t guaranteed, but the model gives you a fast and efficient way to create a 3D model of almost anything you can describe.

Beyond that, this AI model is an important contribution to deep learning research and will likely lead to more advanced innovations in the future.

- Title: The Role of SHAP Explainer in AI Interpretability

- Author: Brian

- Created at : 2024-12-02 19:30:04

- Updated at : 2024-12-06 19:55:02

- Link: https://tech-savvy.techidaily.com/the-role-of-shap-explainer-in-ai-interpretability/

- License: This work is licensed under CC BY-NC-SA 4.0.