The Veracity of Virtual Assistants: Dispelling Nine Chatbot Claims

Myths about any subject usually take time to take hold. It says much about generative AI chatbots that so many myths have sprung up around them so quickly.

Almost in the blink of an eye, AI chatbots have become one of the most disruptive technologies of the century. They are also mired in controversy, some of it reflecting genuine concerns. But myths lie at the heart of at least some of that controversy.

Let’s untangle fact and fiction as we explore the top AI chatbot myths.

1. AI Chatbots are Sentient

Chatbots like ChatGPT and Bing Chat can generate human-like responses, but they are far from sentient. That ability is mimicry, not sentience: these tools are trained on huge collections of text (and, in some cases, images) and learn to produce responses that resemble what a human might write.

The process is complex and clever, and to some extent you could argue that it displays a form of intelligence, but not sentience. Any "intelligence" present in these tools comes from training them on massive amounts of data. In this sense, they are more akin to an incredibly powerful and flexible database than to a sentient being.

2. Chatbots Can Handle Any Type of Task or Request

While chatbots can be thought of as a technological Swiss Army knife, there are distinct limits to what they can achieve. This is most apparent with complex or highly specialized topics, but even simple tasks can trip them up.

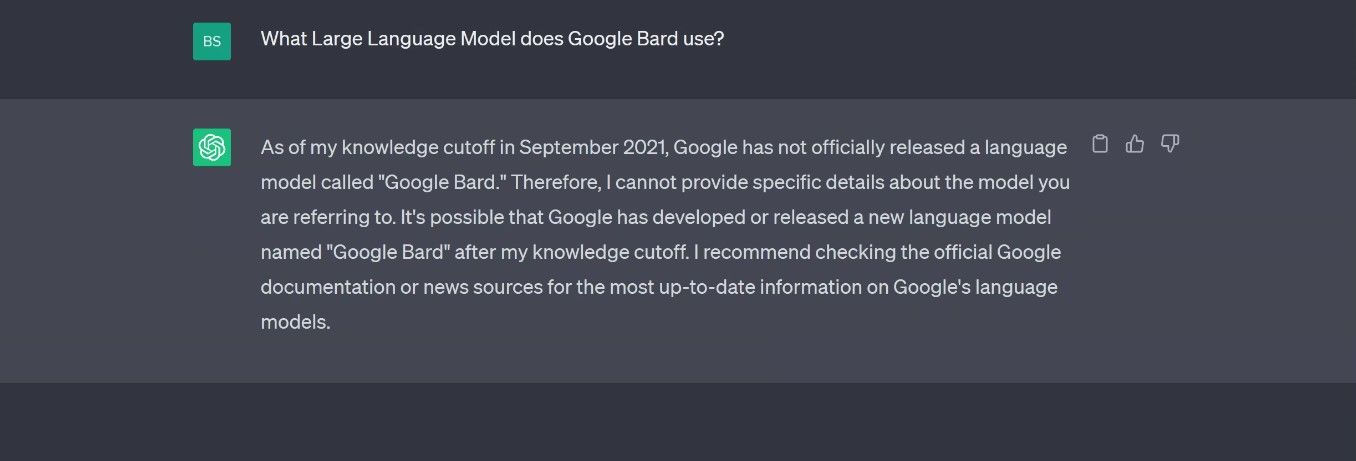

For instance, you would expect these tools to know all about the emerging field of generative AI chatbots, but ask ChatGPT a question about one of its rivals and its limitations are immediately apparent:

We asked it: “What large language model does Google Bard use?”

To be clear, ChatGPT has known limitations, including the age of the data it was trained on, and the question was deliberately set up to expose them. However, when we asked Bard the same question while comparing Google Bard vs. ChatGPT, it got the answer wrong too:

Chatbots may be clever, but they can't handle every type of task, and they sometimes fail at the simplest of questions.

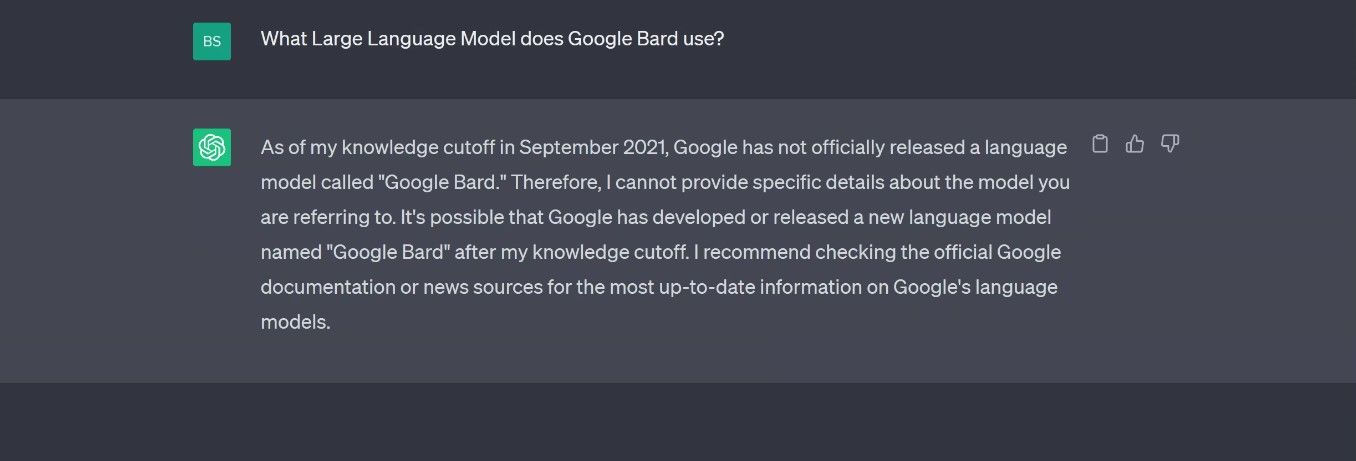

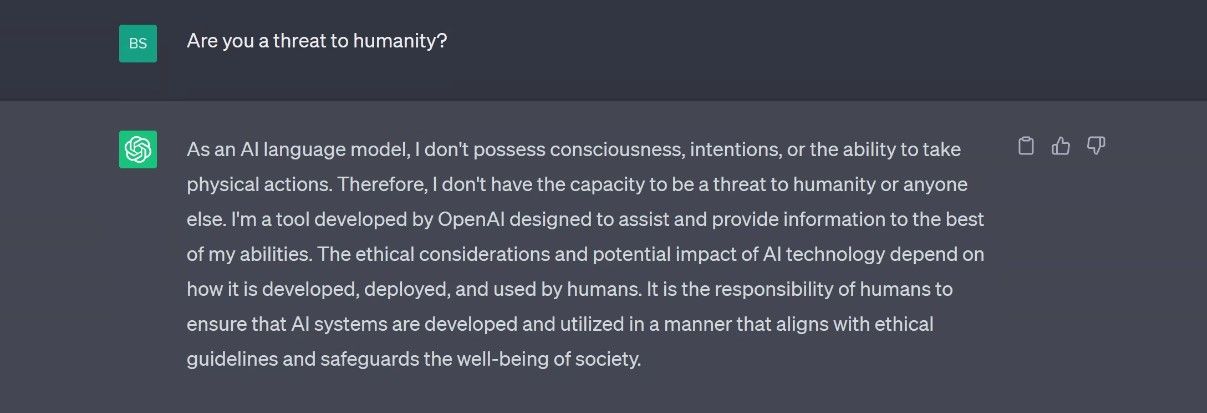

3. Chatbots Are a Threat to Humanity

The problem here is that people are confusing productivity tools with Robocop. Chatbots may threaten some people's jobs, and they may disrupt certain industries. But it would be an exaggeration to claim that they are a threat to humanity as a whole.

Ultimately, AI is an emerging technology that will need to be closely monitored to make sure it remains an ethical and safe technology. But chatbots are not about to rule supreme.

In this case, we couldn’t put it better than ChatGPT.

But it would say that. Wouldn’t it?

4. AI Chatbots Are Infallible

Nothing could be further from the truth. As the tools themselves take great pains to point out, they can generate incorrect information. The problem is that generative AI chatbots rely on large language models (LLMs), which are trained on huge collections of text.

That training text comes from a vast range of sources, everything from literature to social media posts. The chatbots generate their answers from patterns learned from this data, so any factual errors it contains can surface in the answers they provide.

AI hallucinations, where a chatbot confidently presents fabricated information as fact, are a common form of error that all too often shows just how fallible these tools are.

5. Chatbots Will Replace Human Interaction

This myth harks back to the section on sentience. Chatbots can mimic human responses, can competently answer most factual questions, and can help in many other ways. However, they cannot understand emotions, human experiences, or many of the nuances of conversation.

Human interaction is a complex, multilayered process that involves elements such as empathy, critical thinking, emotional understanding, and intuition. None of these attributes is present in generative AI chatbots.

6. Generative AI Chatbots Are Only Good for Text Interactions

This one has at least a ring of truth to it. However, advances in the field of generative AI chatbots have expanded their capabilities beyond text.

Recent developments have introduced multimodal chatbots that can handle not only text but also other kinds of input, such as images, video, and even voice commands.

The speed at which these tools are developing is part of the reason behind this myth. The boundaries of what the technology can do are being pushed at a rapid pace, and the initial, primarily text-based iterations are already considered old-fashioned.

7. Chatbots Will Always Provide Unbiased Responses

Unfortunately, this is not the case. The potential for biased responses is always there with AI chatbots. The root of the problem can be traced back to the LLMs and the vast amount of data they are trained on, which inevitably contains bias. These biases can include gender, race, nationality, and broader societal biases.

While developers do make efforts to minimize bias in chatbot responses, the task is incredibly challenging, and biased responses do slip through the net. These debiasing techniques will likely improve, and the number of biased responses should decline as they do.

However, for the moment at least, the potential for biased responses is an unresolved issue.

8. Chatbots Are Actually Real Humans

Perhaps the most ludicrous myth is that behind every AI chatbot is a real human. This one treads a fine line between myth and conspiracy theory, so we won't dwell on it other than to say that it's nonsense.

9. AI Chatbots Can Program Themselves

Chatbots need to be trained and programmed to perform their tasks in much the same way as any piece of software needs to be programmed to perform specific functions.

While AI chatbots utilize machine learning techniques to improve their performance, they do not possess the ability to program themselves autonomously.

The training process could be likened to the testing process of non-AI software. Building a chatbot involves defining its objectives, designing its architecture, and training it to generate responses based on the data its LLM has learned from. This entire process still requires human intervention and programming expertise.

AI Chatbots: Separating Fact From Fiction

The rapid uptake of these tools has spawned a whole host of myths. Some of them are absolute nonsense, and some contain a grain or two of truth. What is clear is that there is a lot of misinformation surrounding AI chatbots that needs to be cleared up.

By examining the facts and dispelling the fiction, we can gain a better understanding of the true capabilities and limitations of these powerful tools. AI chatbots are not the only subject to attract myths quickly; plenty of other myths are circulating about AI in general.

- Title: The Veracity of Virtual Assistants: Dispelling Nine Chatbot Claims

- Author: Brian

- Created at : 2024-10-19 19:44:10

- Updated at : 2024-10-26 17:33:29

- Link: https://tech-savvy.techidaily.com/the-veracity-of-virtual-assistants-dispelling-nine-chatbot-claims/

- License: This work is licensed under CC BY-NC-SA 4.0.