ZeroGPT Mistrust: Why AI Tools Can Fail Too

AI chatbots are undoubtedly powerful and useful tools. However, the ability to distinguish between human-generated and AI-generated content is becoming a prominent issue.

To address this issue, tools like ZeroGPT have emerged. These are designed to differentiate between AI and human-generated content. But do they work?

Let’s take a closer look at AI detection tools and see whether they can tell the difference between human and AI-generated text.

Testing AI-Detection Tools

They say that the proof of the pudding is in the eating. So, let’s try some tests and see just how effective these tools are. It is impossible to test every tool, so we’re testing one of the most popular tools—ZeroGPT.

For material, we thought it would be fun to give ChatGPT a crack at writing an intro for this article and then compare it against the “human-generated” intro:

Test One: Comparing a Human and AI-Generated Article Intro

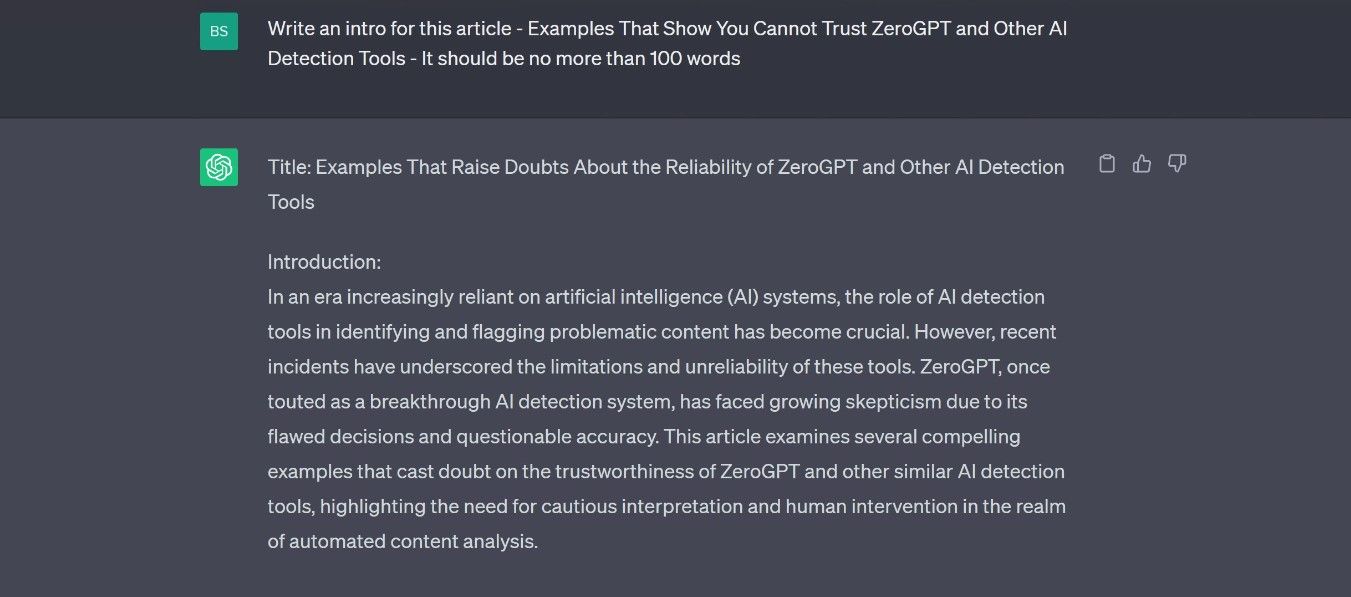

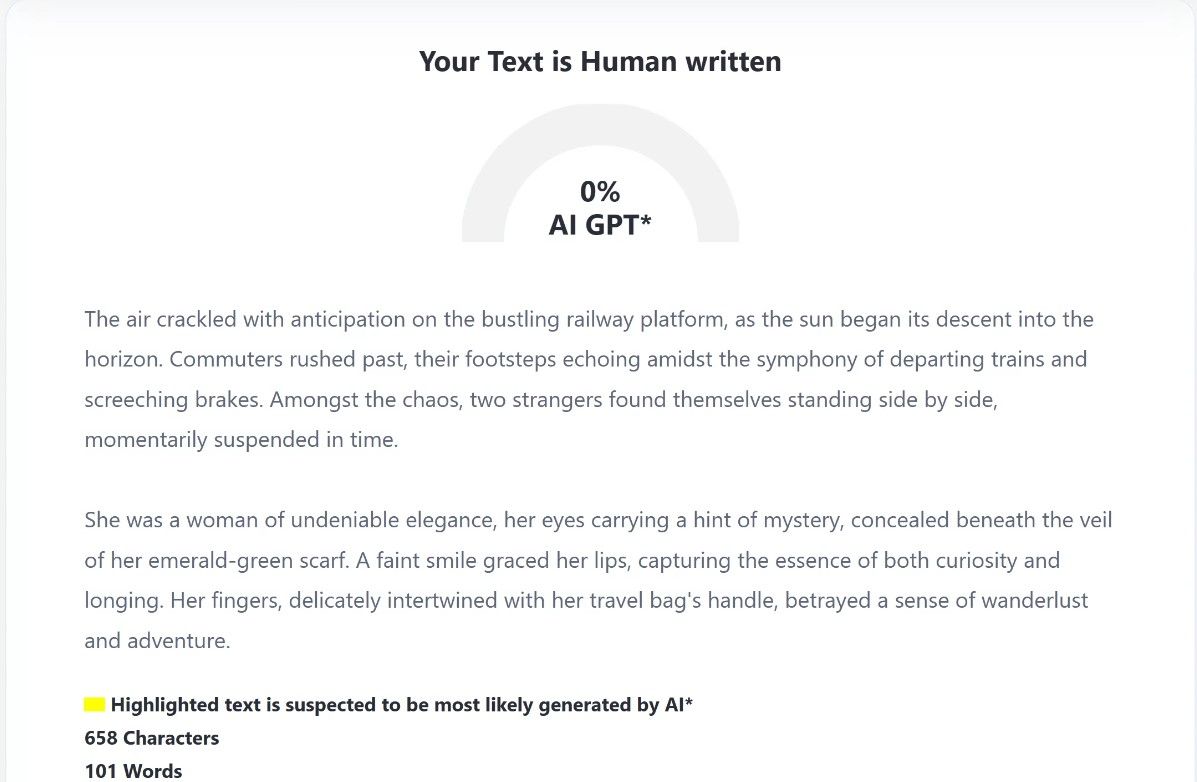

The first thing we did was get ChatGPT to generate an introduction. We entered the title and gave it no further information. For the record, we used GPT-3.5 for the test.

We then copied the text and pasted it into ZeroGPT. As you can see, the results were less than stellar.

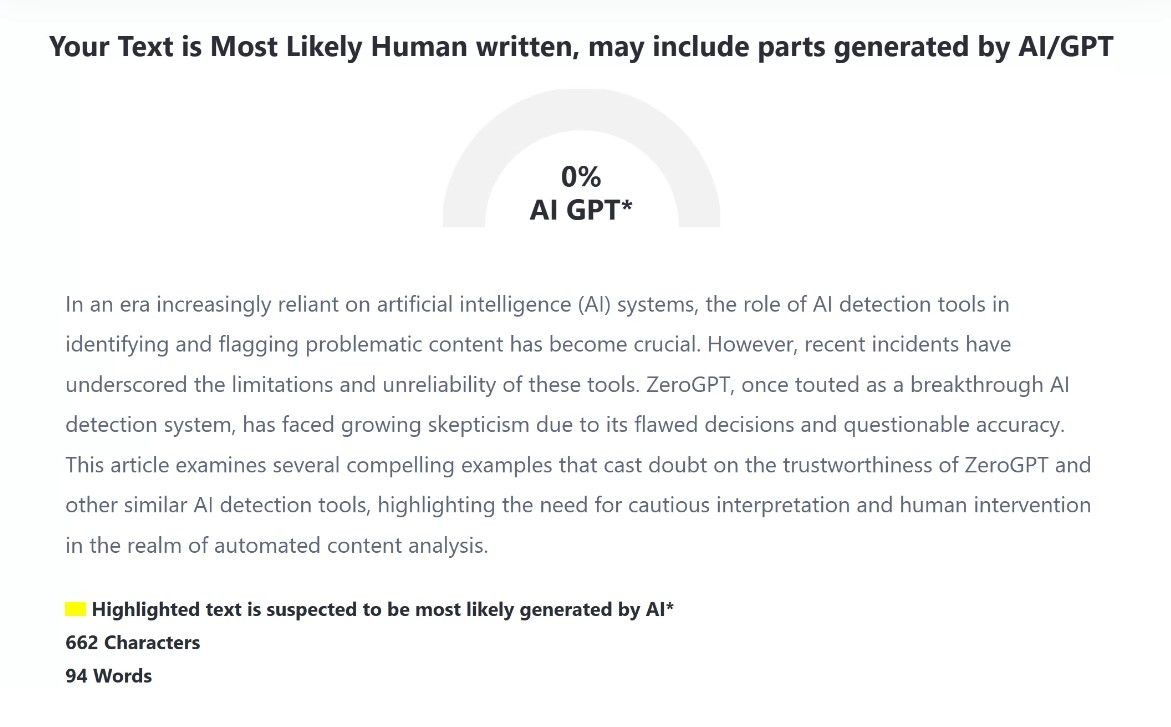

An inauspicious start, but it does illustrate just how effective AI chatbots are. To complete the test, we let ZeroGPT analyze a human-created draft intro.

At least it got this part correct. Overall, ZeroGPT failed in this round. It did determine that at least part of the AI-generated introduction was suspect but failed to highlight specific issues.

Test Two: The False Positive Problem

As the use of ChatGPT and other AI tools grows, the likelihood of knowing or hearing about someone being confronted by claims that their work was AI-generated increases. These accusations are one of the more serious problems with ChatGPT and AI-detection tools like ZeroGPT, as this kind of error can damage reputations and affect livelihoods.

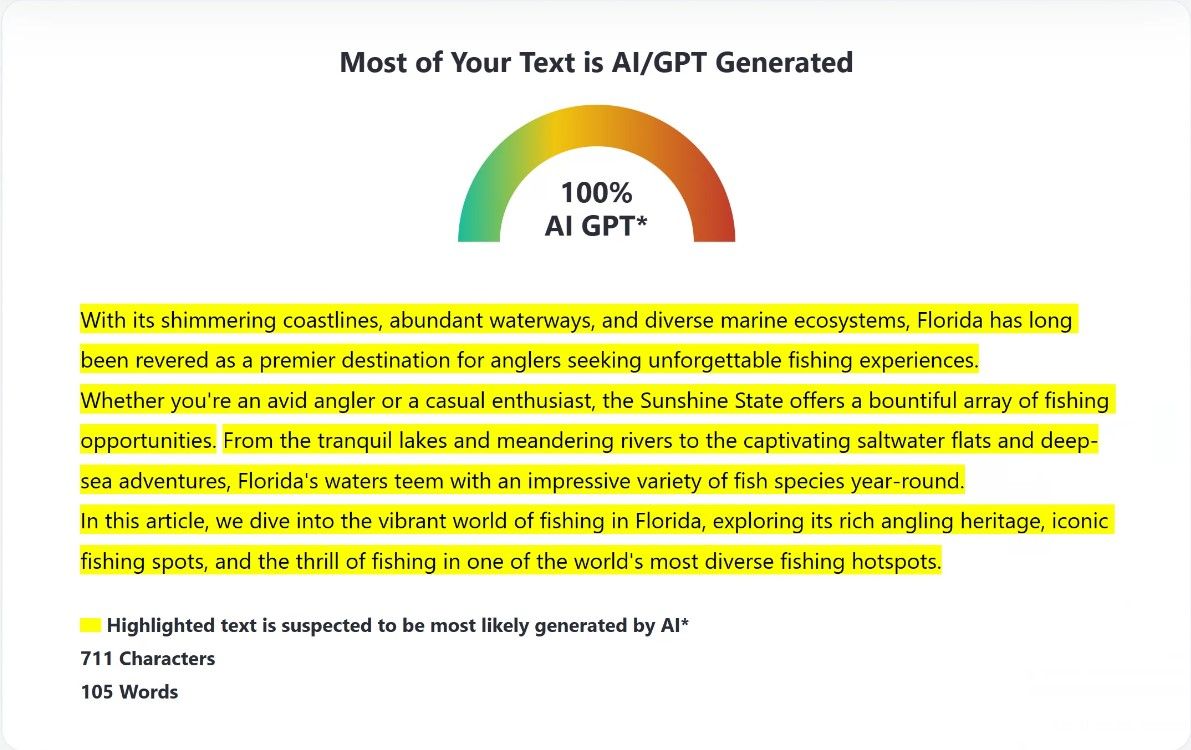

False positives occur when human-generated content is incorrectly flagged as being the work of AI. As the example below shows, the problem is easily replicated. I am a keen angler, so I decided to write an intro to an imaginary article about fishing in Florida. I then let ZeroGPT analyze the text—it flagged that the text was 100% AI-generated.

To be fair, I was aiming for this result. I kept the text generic and used “salesy” language. But the point that a human wrote this remains just as valid. This is not an inconvenience or something that can just be shrugged off. Errors like this can have serious ramifications for writers, students, and other professionals who create written works.

Test Three: Testing ZeroGPT on Fiction

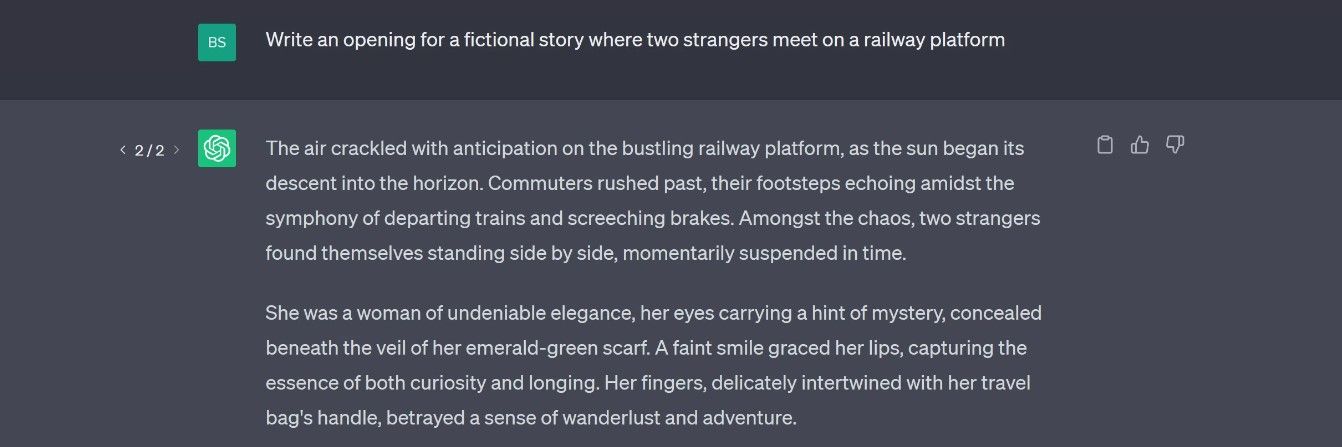

For the third test, we are going to use ChatGPT and ask it to write some fiction. To do this, we created a scenario and prompted ChatGPT to write a brief introduction to a fictional story.

We kept it simple and asked it to write an intro for a story about two strangers who meet on a railway platform:

And here was the response from ZeroGPT:

As is apparent from the result, ZeroGPT is unable to tell fact from fiction when dealing with fiction!

Test Four: News Articles

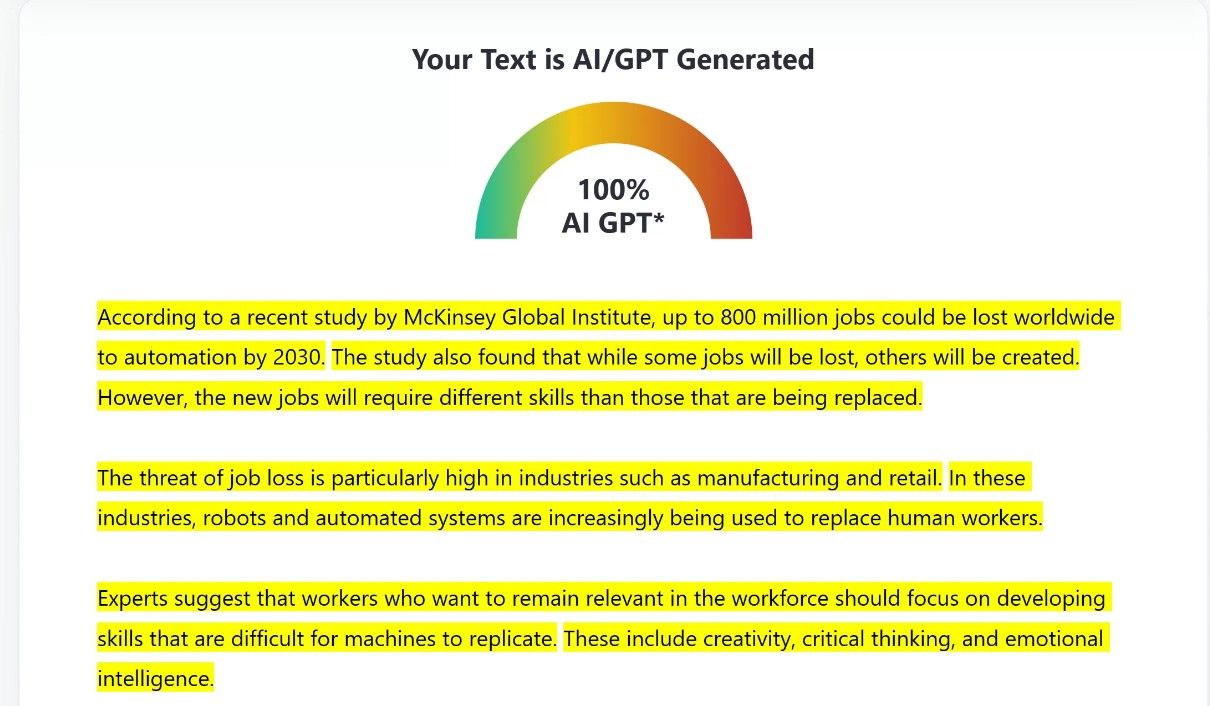

There is something unsettling about AI being able to inform us of what’s happening in the world around us. This is not necessarily “fake news,” as it can be relevant and informative, but there are definite ethical concerns about AI.

To be fair to ZeroGPT, it performed well in this test. We asked ChatGPT and Bing Chat to write news articles about several subjects, and ZeroGPT nailed it every time. The example below shows it correctly declaring a Bing Chat-generated article as being 100% AI-generated.

The tool so consistently flagged each news article as AI-generated that we decided to test it on a dummy news article that we wrote for the purpose.

It did at least identify some of the article as human-generated, but it flagged over 70% as AI-generated. Again, we need to be fair here; this was a fairly basic rehash of the Bing article and was hardly Pulitzer Prize quality.

But the point remains valid. This was written by a human using research found on the internet.

Why Aren’t AI-Detection Tools Very Good?

There is no single reason behind the flaws in tools like ZeroGPT. However, one fundamental problem is the effectiveness of generative AI chatbots. They are an incredibly powerful and rapidly evolving technology, which effectively makes them a moving target for AI-detection tools.

AI chatbots are continually improving the quality and “humanness” of their output, and this makes the task extremely challenging. However, regardless of the difficulties, the AI side of the equation must make a judgment call without human oversight.

Tools like ZeroGPT use AI to make their determinations. But AI doesn’t just wake up in the morning and know what to do. It has to be trained, and this is where the technical side of the equation becomes relevant.

Algorithmic and training-data biases are inevitable, considering the sheer size of the large language models that these tools are trained on. This problem is not restricted to AI-detection tools; the same biases can cause AI chatbots to generate incorrect responses and AI hallucinations.

However, these errors manifest themselves as incorrect “AI flags” in detection tools. This is hardly ideal, but it’s a reflection of the current state of AI technology. The biases inherent in the training data can lead to false positives or false negatives.
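To make the two error types concrete, here is a minimal Python sketch of how false-positive and false-negative rates could be measured for any detector. The `Sample` class, the `error_rates` function, and the four toy samples are hypothetical illustrations, not ZeroGPT's data or method; a real evaluation would need a much larger set of texts whose authorship is known.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    written_by_ai: bool  # ground truth: who actually wrote the text
    flagged_as_ai: bool  # verdict returned by the detector


def error_rates(samples):
    """Return (false_positive_rate, false_negative_rate) for a detector."""
    human = [s for s in samples if not s.written_by_ai]
    machine = [s for s in samples if s.written_by_ai]
    # False positive: human-written text flagged as AI-generated.
    false_positives = sum(1 for s in human if s.flagged_as_ai)
    # False negative: AI-generated text that passes as human-written.
    false_negatives = sum(1 for s in machine if not s.flagged_as_ai)
    fpr = false_positives / len(human) if human else 0.0
    fnr = false_negatives / len(machine) if machine else 0.0
    return fpr, fnr


# Hypothetical toy data, made up purely for illustration.
samples = [
    Sample(written_by_ai=False, flagged_as_ai=True),   # false positive
    Sample(written_by_ai=False, flagged_as_ai=False),  # correct
    Sample(written_by_ai=True, flagged_as_ai=True),    # correct
    Sample(written_by_ai=True, flagged_as_ai=False),   # false negative
]

fpr, fnr = error_rates(samples)
print(f"False-positive rate: {fpr:.0%}, false-negative rate: {fnr:.0%}")
```

Keeping the two rates separate matters because they hurt different people: a false positive harms the human writer who is wrongly accused, while a false negative lets machine-written text pass unnoticed.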

Another factor that must be considered is what constitutes AI-generated content. If AI-generated content is simply reworded, is it human or AI-generated content? This represents another major challenge—the blurring of the lines between the two makes defining machine-created content almost impossible.

Looking to the Future of AI Detection

This may sound like we are knocking tools like ZeroGPT. This isn’t the case; they face massive challenges, and the technology is barely out of diapers. The rapid uptake of tools like ChatGPT has created a demand for AI detection, and the technology should be given a chance to mature and learn.

These tools can’t be expected to face the challenges posed by chatbots on their own. But they can play a valuable part in a concerted and multifaceted effort to address the challenges of AI. They represent one piece of a larger puzzle that includes ethical AI practices, human oversight, and ongoing research and development.

The challenges that these tools face are mirror images of the challenges that society faces as we grapple with the dilemmas associated with a new technological age.

AI or Not AI? That Is the Question

Tools like ZeroGPT are flawed; there is no doubt about that. But they aren’t worthless, and they represent an important step as we try to manage and regulate AI. Their accuracy will improve, but so will the sophistication of the AI they are trained to detect. Somewhere in the middle of this arms race, we need to find a balance that society is comfortable with.

The question “AI or not AI?” is about more than whether a given piece of text is AI-generated. It is indicative of the larger questions that society faces as we adapt to the brave new world of AI.

For the record, and according to ZeroGPT, 27.21% of this conclusion was AI-generated. Hmm.

- Title: ZeroGPT Mistrust: Why AI Tools Can Fail Too

- Author: Brian

- Created at : 2024-12-25 17:16:01

- Updated at : 2024-12-27 21:36:35

- Link: https://tech-savvy.techidaily.com/zerogpt-mistrust-why-ai-tools-can-fail-too/

- License: This work is licensed under CC BY-NC-SA 4.0.